Strong Conscious Cues Suppress Preferential Gaze Allocation to Unconscious Cues

- 1Institute of Neuroscience, Université catholique de Louvain, Brussels, Belgium

- 2UMR5287 Institut de Neurosciences Cognitives et Intégratives d’Aquitaine (INCIA), CNRS, Bordeaux, France

Visual attention allows relevant information to be selected for further processing. Both conscious and unconscious visual stimuli can bias attentional allocation, but how these two types of visual information interact to guide attention remains unclear. In this study, we explored attentional allocation during a motion discrimination task with varied motion strength and unconscious associations between stimuli and cues. Participants were instructed to report the motion direction of two colored patches of dots. Unbeknown to participants, dot colors were sometimes informative of the correct response. We found that subjects learnt the associations between colors and motion direction but failed to report this association using the questionnaire filled at the end of the experiment, confirming that learning remained unconscious. The eye movement analyses revealed that allocation of attention to unconscious sources of information occurred mostly when motion coherence was low, indicating that unconscious cues influence attentional allocation only in the absence of strong conscious cues. All in all, our results reveal that conscious and unconscious sources of information interact with each other to influence attentional allocation and suggest a selection process that weights cues in proportion to their reliability.

Introduction

Attention is a mechanism for allocating cognitive resources to relevant stimuli (Desimone and Duncan, 1995). The highest priority for allocating attention is thought to be associated with the stimulus that maximizes expected information gain (i.e., provides the most information given the stimulus and its context; Summerfield and Egner, 2009; Manohar and Husain, 2013; Vossel et al., 2014). Attentional selection can be driven by stimulus- and context-specific features that make a given visual object stand out from its surroundings (Itti, 2005). This type of attention has been termed stimulus-driven, exogenous or bottom-up attention (Filali-Sadouk et al., 2010). On the other hand, selective attention can be directed to stimuli, features, or spatial locations that are especially relevant to the task being performed, a form of attention called goal-directed or top-down attention (Baluch and Itti, 2011; Henderson and Hayes, 2017).

Most studies of goal-directed attention have focused on attentional allocation to conscious cues (e.g., blue stimuli when looking for a blue flower). However, the influence of unconscious processing on visual attention remains debated. Despite persistent skepticism regarding the existence of unconscious processes (Shanks and St. John, 1994; Vadillo et al., 2016), many studies have reported evidence of unconscious processing in perceptual decision making (Greenwald et al., 1995; Peremen and Lamy, 2014; Alamia et al., 2016), motor learning (Cleeremans, 1993; Destrebecqz et al., 2005; Clerget et al., 2012) and dynamic system control (Berry and Broadbent, 1988). Regarding its interaction with attentional mechanisms, a recent study by Zhao et al. (2013) provided evidence in support of preferential attentional allocation to unconscious cues by showing that reaction times (RTs) were faster when task stimuli were presented within a structured stream of stimuli. In line with these results, a previous study from our group showed that temporal statistical regularities affect overt attention (i.e., eye movements): participants attended the predictable onset of a novel target more frequently. Interestingly, we found this effect exclusively when the predictability was task-relevant (Alamia and Zénon, 2016). Another instance of unconscious influence on attentional allocation can be found in contextual cueing (Chun and Jiang, 1998; Chun, 2000), in which participants perform a visual search between two sets of stimuli having two different colors, only one of which includes the target. Unbeknown to participants, target location is paired with the spatial configurations of either one or the other set of stimuli. Even though the truly unconscious nature of learning in this paradigm has been called into question (Smyth and Shanks, 2008), the results show that participants implicitly learn the rule, and bias their eye movements toward the portion of space where the target is more likely to appear (Jiang and Chun, 2001). In line with these results, studies based on subliminal spatial cues reached similar conclusions (Mulckhuyse et al., 2007; Mulckhuyse and Theeuwes, 2010). Thus, in the visual domain, evidence seems to suggest that attention is affected by predictability, even though previous studies have not tested subjects’ awareness thoroughly (Shanks and St. John, 1994; Newell and Shanks, 2014).

In ecological scenarios, conscious and unconscious sources of information are often mingled together, but how attentional allocation to unconscious cues interacts with the amount of conscious information in the stimulus (i.e., signal strength) has not been investigated thoroughly so far. Here, we address this question with two specific aims: (1) confirming that overt attentional exploration is influenced by unconscious sources of information, using a paradigm that addresses the main criticisms formulated against previous studies of unconscious processing; and (2) testing whether and how conscious and unconscious information interact when influencing attention. We explored these questions by exploiting an experimental design that leads to robust unconscious learning, in which color information biases decisions in a motion discrimination task and in which the awareness of the association has been thoroughly tested (Alamia et al., 2016). We manipulated the strength of the conscious signal (i.e., how easy it is to perceive the dots’ motion) to investigate how the reliability of conscious cues affects the weight of unconscious sources of information on attentional allocation. We hypothesized that: (1) eye movements are influenced by unconscious cues, in agreement with previous findings; and (2) reliable conscious information should decrease the influence of unconscious cues on task performance and attentional allocation.

Materials and Methods

Participants

Twenty-two healthy participants (15 females, mean age = 23.17, SD = 1.68) took part in this experiment and received monetary compensation for their participation. All of them reported normal or corrected-to-normal vision. Two participants were discarded from the analyses because, during the debriefing at the end of the experiment, they could explicitly verbalize the association between color and motion. Therefore, all subsequent analyses were performed on 20 participants (the target sample size was estimated from previous experiments using a similar task; Alamia et al., 2016). All the participants provided written informed consent before the experiment. The experiment was approved by the local Ethics committee of the Université Catholique de Louvain and was carried out in accordance with the Declaration of Helsinki.

Experimental Design

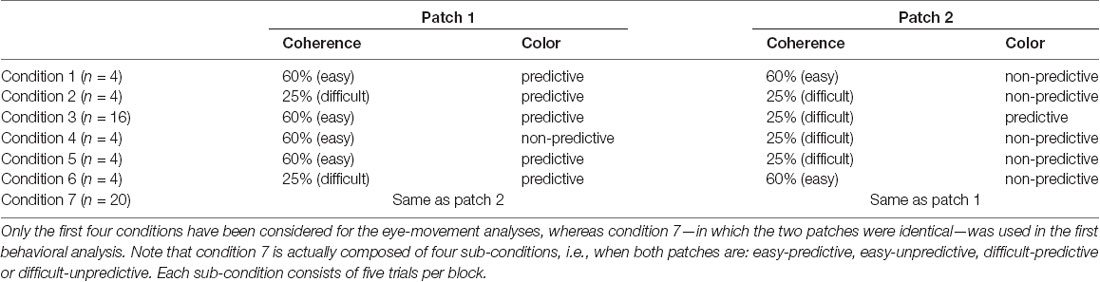

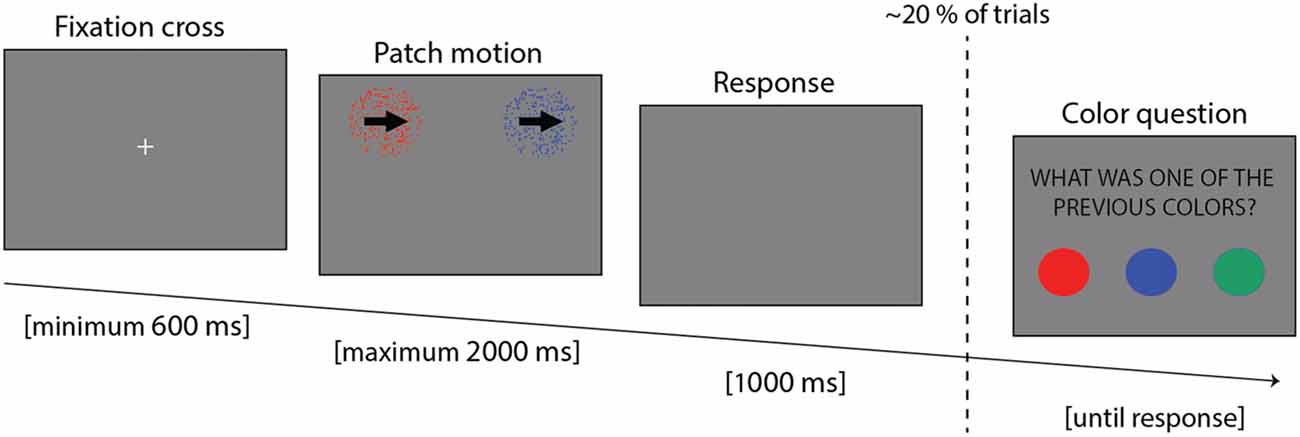

Participants were seated comfortably, with their head placed on a chin rest, at a distance of 58 cm from the screen. Eye movements and blinks were recorded with an Eyelink© 1000 Plus eye tracker (SR Research Ltd., Kanata, ON, Canada; sample rate of 500 Hz). The experiment was implemented in Matlab 7.5 (The MathWorks, Natick, MA, USA), using version 3.0.9 of the Psychtoolbox (Brainard, 1997). The experiment lasted around 45 min and was composed of 14 blocks of 56 trials each. Each trial consisted of three parts (Figure 1): first, a fixation cross was displayed until the participants maintained fixation continuously for 600 ms; then two motion patches (see below) were presented until participants provided an answer, but no longer than 2,000 ms; finally, if the participant failed to provide an answer during the response period, an additional 1,000 ms blank display was presented to allow more time to respond.

Figure 1. Trial example showing the three parts: fixation cross, stimulus presentation and response time.

An auditory feedback was provided to inform the participants of the accuracy of their response. The stimuli consisted of two patches of dots, each having a diameter of 6° and located at two of the four pseudo-randomly selected corners of the screen, 20° from the center (center to center distance). The motion of the two patches of dots was either rightward or leftward, and both patches always had the same motion direction. Participants were instructed to fixate the cross at the beginning, and then visually explore the two patches to report their motion direction. The patches could have two different coherence levels: 25% and 60%. The coherence level of a patch reflects the percentage of dots moving towards the main direction of motion (i.e., left/rightward). The motion direction of the remaining dots was selected at random. The lower the coherence the more difficult it is to discriminate the motion direction (Gold et al., 2008). Thus, there were three types of trials: easy (both patches at 60% coherence), difficult (both patches having 25% coherence) and mixed (one patch 60% and the other 25%). The rationale for having two levels of coherence was two-fold. On the one hand, it was used to investigate the interaction between conscious and unconscious sources of information on attentional allocation. On the other hand, it was also used as a way to favor visual exploration, since in pilot studies that did not include this manipulation, we found that subjects tended to fixate stimuli on the basis of their spatial location. In addition to coherence, the overall difficulty level of the task was tuned subject by subject in the first six blocks by changing the lifetime of the dots of both patches. Dot lifetime corresponds to the number of frames each dot is displayed before disappearing: the longer the lifetime, the easier it is to perceive the motion. 
The possible lifetime values were 15, 6, 4, 3 and 2 frames, chosen on the basis of pilot studies performed on a different set of participants (given a 100 Hz refresh rate, each frame lasts 10 ms and a lifetime of two corresponds to 20 ms). In the first training block, the lifetime of both patches was 15, and it was decreased by one level in subsequent blocks provided the participant’s average performance in the preceding block was above 70%. This approach allowed us to adjust motion discrimination difficulty to each participant, while concomitantly training them on the task. All except one participant reached the shortest lifetime (i.e., lifetime of 2) before the 7th block. Starting from the 7th block, the lifetime remained unchanged throughout the whole experiment. All the dots of each patch were of the same color, with three possible colors (i.e., red, blue and green). Unbeknownst to the participants, starting from the 7th block, one color was always associated with the rightward direction, another color with the leftward direction, and the last color was uninformative of the motion direction of the dots. This association was pseudo-randomized between participants. Briefly, two types of trials were possible: both patches shared the same color, or they had different colors. The color associated with leftward motion and the one associated with rightward motion were never presented together (the color-motion association was 100% valid). The frequency of occurrence of the colors was balanced over the whole experiment. Moreover, in 20% of the trials (11 out of 56 in a block, chosen randomly and independently of the conditions) participants were asked to report one of the patch colors, forcing them to pay attention to the colors and providing us with an additional measure of the attended color. When the patches had different colors, both answers were considered correct. All the possible types of trials are summarized in Table 1 (see the “Data Analysis” section).
At the end of the experiment, participants responded to a debriefing questionnaire composed of four questions: first, whether one motion direction was easier to discriminate than the other; second, whether one of the four positions was attended more than the others; third, whether the motion was easier to perceive with one of the three colors; and fourth, whether they had noticed an association between colors and motion. In case of a positive answer to this last question, they were asked to report the nature of the association.
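The lifetime tuning described above can be sketched in a few lines of Python. This is only an illustrative reconstruction, not the authors' Matlab code: the function names are hypothetical, "above 70%" is read as strictly greater than 0.70, and it is assumed that the last possible adjustment happens after block 5, so that the lifetime used in block 6 is carried through the rest of the experiment.

```python
# Values are taken from the text; the exact update rule is an assumption.
LIFETIME_LEVELS = (15, 6, 4, 3, 2)  # dot lifetime in frames, easiest to hardest
FRAME_MS = 10                       # one frame at a 100 Hz refresh rate
ACCURACY_THRESHOLD = 0.70           # "above 70%" read as strictly greater
N_TUNING_BLOCKS = 6                 # assumed: lifetime frozen from block 6 onward


def lifetime_schedule(block_accuracies):
    """Return the dot lifetime (in frames) used in each block.

    block_accuracies[i] is the mean accuracy of block i + 1.
    """
    level = 0  # index into LIFETIME_LEVELS; block 1 starts at lifetime 15
    schedule = []
    for block_number, accuracy in enumerate(block_accuracies, start=1):
        schedule.append(LIFETIME_LEVELS[level])
        can_tune = block_number < N_TUNING_BLOCKS
        can_get_harder = level < len(LIFETIME_LEVELS) - 1
        # Move one level harder only if the block just finished was good enough.
        if can_tune and can_get_harder and accuracy > ACCURACY_THRESHOLD:
            level += 1
    return schedule


def lifetime_ms(frames):
    """Convert a lifetime in frames to milliseconds."""
    return frames * FRAME_MS
```

Under these assumptions, a participant who stays above threshold in every block reaches the shortest lifetime in block 5, which is consistent with the statement that almost all participants reached a lifetime of 2 before the 7th block.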

Data Analysis

During the whole experiment, we recorded eye position, participants’ responses and RTs. Two participants out of 22 reported the correct color-motion association in the final questionnaire, and thus were excluded from further analyses.

Statistical analyses consisted of Bayesian ANOVAs, performed in JASP (Love et al., 2015): all the Bayes Factors (BFs), unless otherwise specified, quantify the evidence for the alternative hypothesis relative to the null and are reported as BF10. In practice, a BF10 between 0.3 and 3 indicates a lack of sensitivity (i.e., the data do not discriminate between the two hypotheses), whereas a BF10 below 0.3 or above 3 suggests evidence in favor of the null or the alternative hypothesis, respectively. The larger the BF10, the stronger the evidence in favor of the alternative hypothesis (Bernardo and Smith, 2001; Masson, 2011). All other analyses, including eye movement preprocessing and feature extraction, were performed in Matlab 7.5 (The MathWorks, Natick, MA, USA).
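As a reading aid for the Results, the thresholds above can be written as a small helper. This is a hedged sketch rather than part of the JASP analysis: the function names and the returned labels are hypothetical, and values between the two thresholds are labeled as inconclusive, in line with how such values are described as lacking sensitivity in the Results.

```python
def bf01_to_bf10(bf01):
    """Convert a Bayes factor for the null (BF01) into one for the alternative (BF10)."""
    if bf01 <= 0:
        raise ValueError("Bayes factors must be positive")
    return 1.0 / bf01


def classify_bf10(bf10, lower=0.3, upper=3.0):
    """Label a BF10 using the 0.3 and 3 thresholds given in the text."""
    if bf10 <= 0:
        raise ValueError("Bayes factors must be positive")
    if bf10 > upper:
        return "evidence for the alternative"
    if bf10 < lower:
        return "evidence for the null"
    return "inconclusive"
```

For instance, the BF01 of 42.08 reported for the BLOCK factor corresponds to a BF10 of roughly 0.024, which falls below 0.3 and therefore counts as evidence for the null.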

Accuracy and RT Analysis

We performed behavioral and eye-movements analyses starting from the 7th block, i.e., the block in which the color-motion association was introduced in the experiment. Regarding the behavioral part, we tested two Bayesian ANOVAs considering either the accuracy (model I) or RT (model II) as dependent variables: the accuracy was modeled as a binary variable, whereas the RT was modeled as Gaussian. The independent variables considered were: PREDICTABILITY (a categorical variable modeling whether the color was informative or not), BLOCKS (categorical variable from 7 to 14), DIFFICULTY (categorical variable modeling whether the trial was difficult or easy) and all their interactions. Initially, we analyzed only trials in which the patches had the same color and the same coherence level, in order to remove all confounds induced by eye movements during patch selection (condition 7 in Table 1). In a second analysis, to study how selective attention affected accuracy and RT, we performed two additional Bayesian ANOVAs, one on accuracy (model III) and one on RT (model IV), considering the patch on which attention was allocated. We determined attention allocation as the distance between the eye position and the patches at the time of the participant’s response (see “Eye Movement Analysis” section below). Trials in which participants moved back and forth between the patches were excluded from this analysis (~15% of the trials). As in the previous analysis, we considered PREDICTABILITY and DIFFICULTY of the attended patch as factors. In this analysis and in the subsequent analyses regarding eye movements, we focused on two sub-categories of trials in order to simplify the interpretation of the findings (see Table 1): the first category of trials (conditions 1 and 2 in Table 1) included patches with the same coherence but different predictability levels (one predictive and one non-predictive). 
In the second category, patches had the same predictability in the color-motion association but different coherence levels (conditions 3 and 4 in Table 1). This approach allowed us to investigate specifically the effects of the color-motion association (condition 1 and 2), independently from the effect of difficulty (i.e., coherence levels, condition 3 and 4) on attentional allocation. We did not investigate further the remaining conditions 5 and 6 because it would have been challenging to properly disentangle the effects of predictability from the effect of patch coherence.

Eye Movement Analysis

We first removed the blinks (automatically detected by the Eyelink© algorithm) by means of linear interpolation. Afterward, we determined attentional allocation trial by trial, on the basis of which patch was attended by the participants when they provided the answer: first we computed the distance between the eye position and each patch, then we normalized these distances by their sum, and finally we attributed a positive value in the attentional allocation binary variable to the patch with a normalized distance lower than 0.4. We then compared the percentage of trials in which the predictive or non-predictive patches were attended (model Va, conditions 1 and 2) and the easy or the difficult one was attended (model Vb, conditions 3 and 4). In this analysis, we included only trials in which participants attended a single patch (around 85% of all the trials). In a second analysis, we focused on the remaining portion of trials in which participants switched their attention from one patch to the other (around 15% of the trials): we compared the percentage of time in which they switched from predictive to non-predictive color and vice versa (model VIa, conditions 1 and 2) and from easy to difficult patches and vice versa (model VIb, conditions 3 and 4). Both models V and VI were implemented by means of Bayesian ANOVA. Finally, as an indirect measure of attention, we tested whether participants reported more often the predictive or the non-predictive color, when asked, at the end of each trial, which colors had been presented (Bayesian paired t-test).
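The allocation rule described above (distance to each patch, normalization by the sum, threshold at 0.4) can be illustrated with the following Python sketch. It is not the authors' Matlab implementation: the function name, the coordinate convention (degrees of visual angle relative to screen center) and the use of `None` for unclassified samples are assumptions made for illustration.

```python
import math


def attended_patch(eye_xy, patch_a_xy, patch_b_xy, threshold=0.4):
    """Return "a", "b", or None for the gaze sample taken at response time.

    Distances from the eye position to each patch center are normalized by
    their sum, so the two normalized distances add up to 1. A patch counts as
    attended when its normalized distance is below the threshold.
    """
    dist_a = math.dist(eye_xy, patch_a_xy)
    dist_b = math.dist(eye_xy, patch_b_xy)
    total = dist_a + dist_b
    if total == 0:
        raise ValueError("patch centers must not coincide with each other")
    if dist_a / total < threshold:
        return "a"
    if dist_b / total < threshold:
        return "b"
    # Gaze lies roughly midway between the patches: no patch is assigned.
    return None
```

Because the normalized distances sum to 1, at most one patch can fall below a threshold of 0.4, so the rule never assigns both patches to the same sample.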

Results

Behavioral Analysis

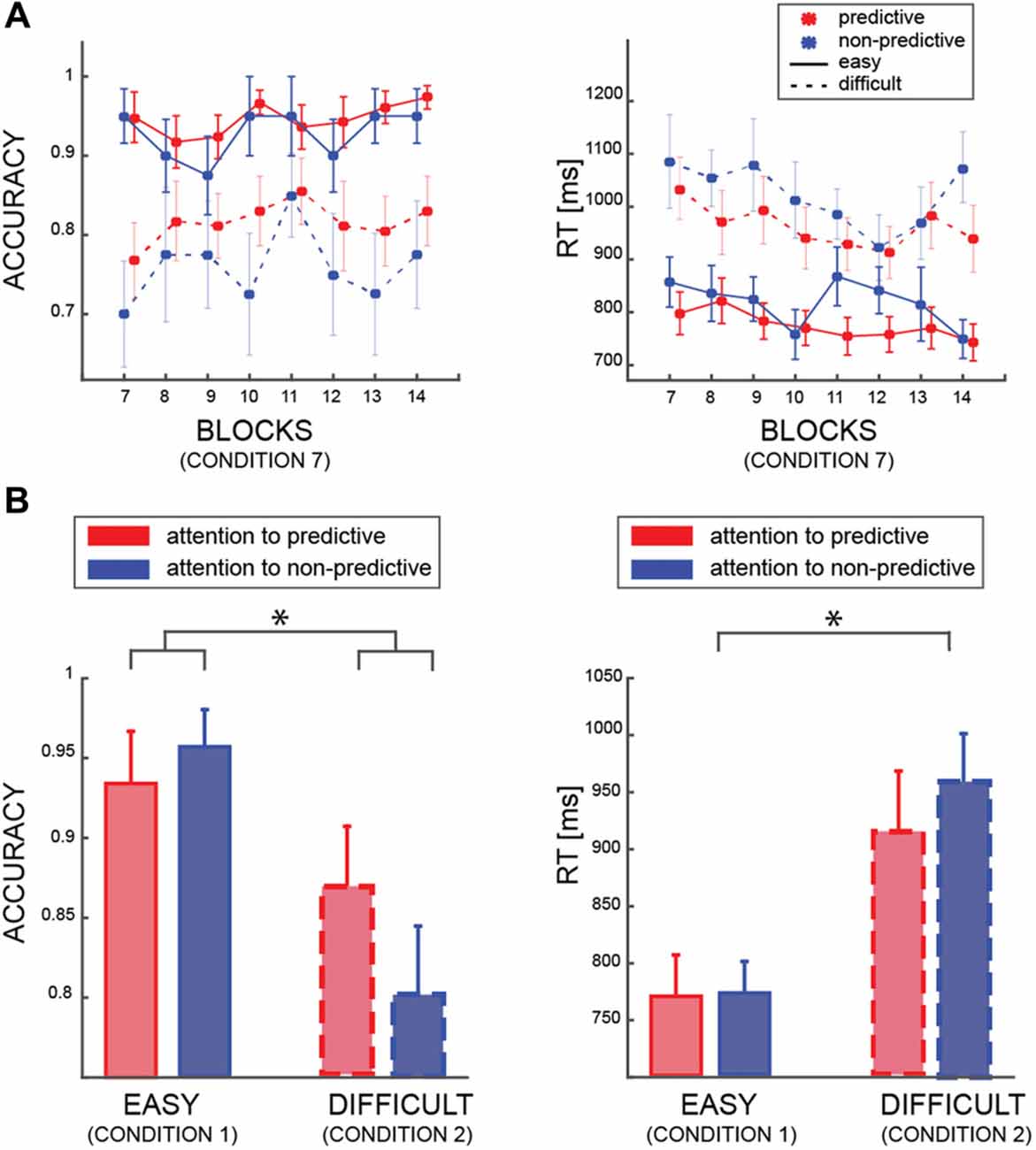

In the first model (model I), we tested whether accuracy was affected by the PREDICTABILITY of the color, the BLOCK and the DIFFICULTY factors. This analysis was restricted to trials in which both patches had the same color and coherence level (i.e., condition 7, see Table 1). As expected, we found a very strong effect of the factor DIFFICULTY (BF10 ≫ 100, very strong evidence), indicating that participants were better at discriminating the motion direction of the patches when both had a coherence of 60% than when both had 25% coherence (Figure 2A). Interestingly, we also found an effect of the factor PREDICTABILITY (BF10 = 17.86, strong evidence), with better performance for predictive than non-predictive colors, confirming that participants learned the implicit association between color and motion. No interaction between PREDICTABILITY and DIFFICULTY was found (BFinclusion < 1). We found strong evidence against an effect of the factor BLOCK (BF01 = 42.08), but all related interactions lacked sensitivity (all 0.3 < BF10 < 3).

Figure 2. (A) Averaged accuracy and reaction time (RT) results for the easy (solid lines) and difficult (dashed lines) trials, and for predictive (red) and non-predictive (blue) trials. All data are from trials in which the patches are identical (condition 7—see Table 1). (B) Averaged accuracy and RT results according to which patch was attended by participants (same color code as in “a”) in trial from condition 1 and condition 2 (see Table 1). In all panels, error bars are standard errors, and asterisks indicate significant difference (p < 0.05).

Regarding RT (model II), we found a similar effect of DIFFICULTY (BF10 ≫ 100, very strong evidence), indicating faster responses for easier patches (i.e., patches with 60% coherence), and an effect of PREDICTABILITY (BF10 ≫ 10, strong evidence). All the other factors and interactions lacked sensitivity (all 0.3 < BF10 < 3).

The second behavioral analysis aimed at investigating how accuracy and RT changed as a function of attentional allocation (Figure 2B). Here, we included only trials in which coherence was identical in both stimuli, such that the patches differed only in color (i.e., conditions 1 and 2, see Table 1). We found that the difficulty level of the attended patch affected performance (model III; BF10 ≫ 100, very strong evidence), but we found no main effect of the ATTENDED_PREDICTABILITY factor (BF10 = 1.2). Nevertheless, we found positive evidence of an interaction between DIFFICULTY and ATTENDED_PREDICTABILITY (BF10 = 3.425), contrary to the results from condition 7 (i.e., both patches identical, no interaction). This analysis reveals that participants were exploiting the predictability when one patch but not the other was predictive, and specifically when both patches were difficult (post hoc comparison between predictive and non-predictive patches in the difficult condition: BF10 = 3.824; in the easy condition: BF10 = 0.410). Regarding the RT (model IV), we also found an effect of the factor DIFFICULTY (BF10 ≫ 100, very strong evidence) but no other effects (all 0.3 < BF10 < 3). All in all, these analyses show that, when the motion of both patches was harder to perceive, participants’ accuracy was higher when they attended the patch whose color conveyed information about motion direction.

Questionnaire Analysis

We provided a questionnaire with four questions at the end of the experiment, with the purpose of assessing the awareness of the associations. The last question asked directly whether participants had noticed a color-motion association, and in case of a positive answer they were invited to specify which one. Only 2 out of 22 participants were able to provide the correct association (the remaining 20 participants responded negatively and did not provide any associations). The preceding three questions about general biases (see “Materials and Methods” section for details) were meant to keep the participants unaware of the true purpose of the questionnaire, thus not implying the existence of the association. The responses to these questions did not reveal any specific bias in the participants (specifically, five and three participants reported rightward and leftward motion direction, respectively, as easier to perceive; and three and two participants reported upper and lower positions, respectively, as easier for discriminating motion direction).

Eye Movement Analysis

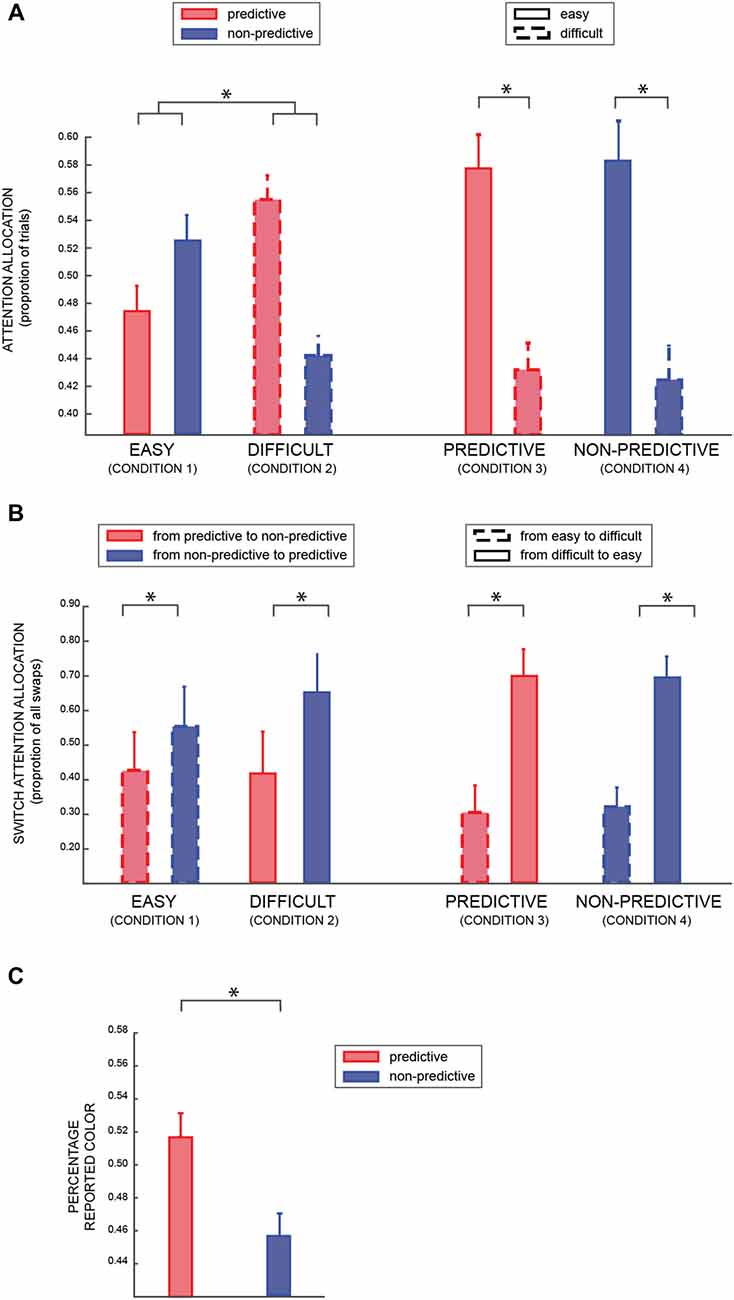

For the eye movement analysis, we considered as binary dependent variable the proportion of trials in which participants attended the predictive or the non-predictive patch (Table 1: conditions 1 and 2; model Va), when both patches were either easy or difficult (factors PREDICTABILITY and DIFFICULTY respectively). As shown in Figure 3A, we found an effect of both factors (DIFFICULTY BF10 = 11.48, PREDICTABILITY BF10 = 18.37, positive evidence), and a strong interaction between the two factors (BF10 = 53.61, strong evidence). A post hoc analysis revealed a difference between predictive and non-predictive colors in the difficult (BF > 100) but not in the easy condition (BF = 1.433), suggesting that participants were looking more at the informative patch when the coherence of both patches was lower (i.e., 25%), in agreement with the results of the previous analysis (model III). Not surprisingly, in the condition in which one of the patches was easy and the other one difficult (Table 1: conditions 3 and 4; model Vb), we found a very strong effect of the factor DIFFICULTY (BF10 ≫ 100).

Figure 3. (A) The average percentage of trials in which participants looked at predictive or non-predictive patches (conditions 1 and 2—see Table 1), respectively red and blue (left part); and high or low coherence level (conditions 3 and 4), respectively solid and dashed lines (right part). (B) Average percentage of trials in which participants switched attention from one patch to the other (left column: from predictive to non-predictive and vice versa –conditions 1 and 2; right column: from low to high coherence level and vice versa—conditions 3 and 4). (C) Average percentage of time participants reported the predictive (red) or non-predictive (blue) color. In all the panels, error bars are standard errors, and asterisks indicate significant difference (p < 0.05).

Figure 3B shows the proportion of trials in which participants made a saccade from one patch to the other (around 15% of trials, with three participants having a percentage closer to 40% and all the other participants <10%). We found a positive effect of PREDICTABILITY (left part of the Figure; BF10 = 5.11, positive evidence), irrespective of the difficulty level of both patches (Table 1: conditions 1 and 2; model VIa). Similarly, in conditions 3 and 4 (model VIb), we found a significant effect of the difficulty level (right part of the Figure; BF10 > 100).

Finally, we investigated which color was reported more frequently when participants were asked to report the patch’s color in 10% of the trials. Importantly, in these trials one patch had a predictive color and the other patch a non-predictive one, so it provided us with an indirect measure of attentional allocation. Considering only trials in which participants reported a color that had actually been displayed (accuracy for this task was >95%), we found that participants reported more frequently the predictive than the non-predictive color (Bayesian paired t-test of choosing the predictive color against chance level: BF > 40, very strong evidence), as shown in Figure 3C, in agreement with the previous analyses that suggested a bias of attention toward the informative colors.

Discussion

In this study, we investigated the effect of unconscious learning on visual attention by means of eye movements, and how unconscious biases are influenced by conscious, task-relevant information (i.e., signal strength). Participants were instructed to report the motion direction of two patches of variable coherence and color: unbeknown to them, two out of three colors were 100% informative of the correct response. Participants failed to notice this association consciously, but nevertheless exploited the color information to perform the task, replicating our previous findings (Alamia et al., 2016). The question we addressed in the current study was whether unconscious knowledge of the color-motion association affects eye movements, and how this influence interacts with the strength of the stimuli (i.e., coherence of the patch). As expected, we found that the color cue affected behavioral measures (RT and accuracy), and that participants attended the patches exhibiting the predictive colors more frequently, despite not being consciously aware of the associations. Importantly, this pattern occurred more frequently during difficult trials, that is, when the motion direction was harder to perceive, thus revealing an interaction between conscious and unconscious features of the stimuli in biasing attentional allocation.

One crucial point of this study is the measure of awareness of the association: we rely on a four-item questionnaire, in which the last question directly probes the knowledge of the color-motion association. In our previous study (Alamia et al., 2016), we investigated awareness of the same association in a simpler design, with only one patch per trial and fixed coherence. We showed that participants who failed the questionnaire also failed more sensitive tests (i.e., generative and familiarity tests; Derosiere et al., 2017). This indicates that, in this task, the questionnaire provides a reliable measure of awareness, and that other tests would have reached similar conclusions. Moreover, it is legitimate to assume that participants who are aware of the color-motion associations would base their choices to a large extent on color, leading to a different pattern of results, with drastic differences between conditions (as reported in Alamia et al., 2016). Yet, we did not observe such effects in our results. Altogether, these considerations provide good evidence in favor of a truly unconscious bias of color on decisions.

Several previous studies have investigated the relationship between attention and unconscious learning. On the one hand, recent studies have investigated how attentional allocation is affected by unconscious sources of information. One study by Zhao et al. (2013) investigated the relationship between statistical learning and visual attention (i.e., spatial and feature-selection). In a series of experiments, one out of four locations displayed a statistically structured sequence of abstract shapes, whereas the order was random in the other locations. They showed that RTs in an orthogonal task were shorter at the location exhibiting statistical regularities, thus suggesting that covert attention was affected by regularities even when these were not relevant for performing the task. Interestingly, another study about visual selection suggested that attention was driven away from the location where distractors were more likely to appear (Wang and Theeuwes, 2018). It is noteworthy, however, that in that study some participants, who were nonetheless included in the analysis, reported having explicitly noticed the regularity.

On the other hand, several studies have also investigated the reciprocal relationship: how attentional allocation affects the implicit learning of statistical regularities. Regarding visual statistical learning, a seminal study by Turk-Browne and colleagues (Turk-Browne et al., 2005) revealed that selective attention is necessary to implicitly learn the regularities of a stream of stimuli: in their study, the unattended stimuli were not learnt by the participants. Conversely, a recent study failed to replicate these findings, showing that the unattended regularities are learnt as well as the attended ones (Musz et al., 2015). Besides visual statistical learning, the impact of attention on unconscious learning has also been investigated in the context of implicit sequence learning. In a serial RT task (SRTT), the most commonly used paradigm to study implicit sequence learning, participants learn a sequence of responses implicitly, and these sequences can be either deterministic, probabilistic or random. In the first two cases (i.e., when the sequence is predictable) participants perform better than in the random case, even when they are not aware of the predictability (Destrebecqz and Cleeremans, 2001; Destrebecqz et al., 2005). In a seminal study, Nissen and Bullemer (1987) showed that the addition of a secondary orthogonal task, which pulls participants’ attentional resources away from the main task, impaired learning, thus indicating the crucial role of attention in implicit learning. Other authors suggested a rather different interpretation, framing the results in terms of interference between the primary and the secondary task (Stadler, 1995), and not in terms of attentional resources. Conversely, Cohen et al. (1990) showed no effect of a secondary task on participants’ performance, even though further studies failed to replicate these results (Frensch et al., 1998). Finally, the results of Cleeremans et al. (1998) fell in between, showing lesser but significant implicit learning in the presence of an orthogonal task. All in all, the role of attention in implicit learning in the context of SRTT remains disputed.

Beyond implicit learning, a few studies have investigated how subliminal stimuli affect attentional allocation, and conversely how subliminal stimuli are affected by visual attention. Specifically, one study by Kanai et al. (2006) investigated how attentional allocation affects subliminal perception. In their task, the perception of the orientation of a grating pattern was altered by the presentation of a previous stimulus, which was allegedly subliminal due to continuous flash suppression. This tilt aftereffect (TAE) was induced by subliminal stimuli at two different locations, one of which was attended by the participants. Their results showed a TAE with subliminal adaptors both when participants were attending the location and when they were not, suggesting that spatial attention does not influence low-level processing of subliminal stimuli (Kanai et al., 2006). Regarding the effect of subliminal stimuli on attentional allocation, other studies have shown that spatial attention can be affected by subliminal stimuli (Mulckhuyse et al., 2010; Chou and Yeh, 2011; Mastropasqua and Turatto, 2015).

All in all, the current literature suggests that unconscious information, both subliminal and supraliminal, can affect visual attention. However, the truly unconscious nature of these processes has been strongly questioned (Shanks, 2003; Smyth and Shanks, 2008; Vadillo et al., 2016). In this regard, the present study makes an original contribution to the literature: we used a paradigm whose rules are simple to learn and in which all relevant information is supraliminal (i.e., color and motion coherence), thus avoiding possible confounds that affect other implicit learning paradigms (Shanks and St. John, 1994; Newell and Shanks, 2014). As discussed at length in our previous study (Alamia et al., 2016), the simplicity of the unconscious association ensures that participants do not exploit alternative strategies to perform the task (as in more complex paradigms, e.g., artificial grammars). Additionally, the use of supraliminal stimuli allows us to avoid the issues related to subliminal perception, since in that domain it is difficult to determine whether stimuli are truly unconsciously perceived or not (Lovibond and Shanks, 2002).

The conclusion that attention is biased toward the most informative patch is in line with our previous findings, which suggested that unconscious information biases attentional allocation only when it is beneficial for task performance (Alamia and Zénon, 2016). Whereas in that earlier study we manipulated the relevance of the unconscious information, here we manipulated the strength of the conscious source of information. These combined findings suggest that attentional allocation to unconscious cues depends on top-down mechanisms, since it is modulated by high-level factors such as relevance and relative reliability.

Furthermore, another novel element of our study lies in the interaction between unconscious and conscious sources of influence on attentional allocation. An intriguing perspective raised by this finding is that unconscious and conscious attentional cues are weighted as a function of their behavioral reliability, as suggested by the fact that attention is deployed to the most informative stimuli primarily when both patches have low coherence (i.e., when the task is more difficult). Importantly, the unconscious color-motion association was as effective in the easy as in the difficult trials, indicating that the failure to allocate attention to predictive stimuli in the high-coherence trials was not caused merely by subjects not using the unconscious information in easy trials. Additionally, this interpretation would fit well within a Bayesian framework, in which cues are weighted based on the inverse variance of their distribution (i.e., precision; Feldman and Friston, 2010), much as sensory cues from different modalities are combined based on their reliability (Battaglia et al., 2003). However, further experiments are required to test this hypothesis.
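To make the reliability-weighting idea concrete, the following minimal sketch shows the standard precision-weighted combination of two independent Gaussian cues. It is an illustration only, not the analysis used in this study: the function name `combine_cues` and the variance values assigned to the motion and color cues are hypothetical and chosen purely for the example.

```python
def combine_cues(estimates, variances):
    """Precision-weighted combination of independent Gaussian cues.

    Each cue is weighted by its precision (1 / variance), normalized so
    that the weights sum to 1. All values used below are hypothetical.
    """
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    weights = [p / total_precision for p in precisions]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_variance = 1.0 / total_precision
    return weights, combined, combined_variance


# Hypothetical example: cue 0 is the conscious motion signal, cue 1 is the
# unconscious color association (its variance is held fixed at 1.0).
# Low motion coherence -> noisy motion cue (variance 4.0).
low_weights, _, low_var = combine_cues([1.0, 1.0], [4.0, 1.0])
# High motion coherence -> reliable motion cue (variance 0.25).
high_weights, _, high_var = combine_cues([1.0, 1.0], [0.25, 1.0])

print("low coherence weights (motion, color):", low_weights)
print("high coherence weights (motion, color):", high_weights)
```

Under these assumed variances, the color cue receives most of the weight when the motion signal is noisy and little weight when the motion signal is reliable, which is the qualitative pattern the reliability-weighting interpretation predicts.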

As suggested in other studies (Beilock et al., 2002; Olivers and Nieuwenhuis, 2005), attention and consciousness can have different and dissociable effects on behavior, hinting that these two processes potentially rely on different neuronal mechanisms. Our results argue in favor of the dissociation between attention and consciousness (Koch and Tsuchiya, 2007, 2012; Tsuchiya and Koch, 2016), showing that it is possible to have an attentional effect (i.e., eye movement) that is driven by unconscious information: conceivably, participants are aware of which patch they are attending on each trial, but their choice is influenced to some extent by unconscious knowledge. In conclusion, our study shows that unconscious processing affects attentional allocation and eye movements in a perceptual decision-making task.

Author Contributions

AA and AZ conceived the study and contributed to the interpretation of data. AA performed the experiments. AA and OS processed the data. All the authors wrote the manuscript.

Funding

This work was performed at the Institute of Neuroscience (IoNS) of the Université catholique de Louvain (Brussels, Belgium); it was supported by grants from the Fondation Médicale Reine Elisabeth (FMRE), from the Fonds de la Recherche Scientifique (FNRS–FDP), from the Fondation Louvain and from IdEx Bordeaux. AA and OS are Research Fellows at the FNRS and AZ is a CNRS researcher.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to thank Camille Bauthier for help with data collection.

References

Alamia, A., Orban de Xivry, J.-J., San Anton, E., Olivier, E., Cleeremans, A., and Zenon, A. (2016). Unconscious associative learning with conscious cues. Neurosci. Conscious. 2016:niw016. doi: 10.1093/nc/niw016

Alamia, A., and Zénon, A. (2016). Statistical regularities attract attention when task-relevant. Front. Hum. Neurosci. 10:42. doi: 10.3389/fnhum.2016.00042

Baluch, F., and Itti, L. (2011). Mechanisms of top-down attention. Trends Neurosci. 34, 210–224. doi: 10.1016/j.tins.2011.02.003

Battaglia, P. W., Jacobs, R. A., and Aslin, R. N. (2003). Bayesian integration of visual and auditory signals for spatial localization. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 20, 1391–1397. doi: 10.1364/josaa.20.001391

Beilock, S. L., Carr, T. H., MacMahon, C., and Starkes, J. L. (2002). When paying attention becomes counterproductive: impact of divided versus skill-focused attention on novice and experienced performance of sensorimotor skills. J. Exp. Psychol. Appl. 8, 6–16. doi: 10.1037/1076-898x.8.1.6

Bernardo, J. M., and Smith, A. F. M. (2001). Bayesian theory. Meas. Sci. Technol. 12, 221–222. doi: 10.1088/0957-0233/12/2/702

Berry, D. C., and Broadbent, D. E. (1988). On the relationship between task performance and verbal knowledge. Q. J. Exp. Psychol. 36, 209–231. doi: 10.1080/14640748408402156

Brainard, D. H. (1997). The psychophysics toolbox. Spat. Vis. 10, 433–436. doi: 10.1163/156856897x00357

Chou, W.-L., and Yeh, S.-L. (2011). Subliminal spatial cues capture attention and strengthen between-object link. Conscious. Cogn. 20, 1265–1271. doi: 10.1016/j.concog.2011.03.007

Chun, M. M. (2000). Contextual cueing of visual attention. Trends Cogn. Sci. 4, 170–178. doi: 10.1016/s1364-6613(00)01476-5

Chun, M. M., and Jiang, Y. (1998). Contextual cueing: implicit learning and memory of visual context guides spatial attention. Cogn. Psychol. 36, 28–71. doi: 10.1006/cogp.1998.0681

Cleeremans, A. (1993). “Attention and awareness in sequence learning,” in Proceedings of the 15th Annual Conference of the Cognitive Science Society (Hillsdale, NJ: Erlbaum).

Cleeremans, A., Destrebecqz, A., and Boyer, M. (1998). Implicit learning: news from the front. Trends Cogn. Sci. 2, 406–416. doi: 10.1016/s1364-6613(98)01232-7

Clerget, E., Poncin, W., Fadiga, L., and Olivier, E. (2012). Role of Broca’s area in implicit motor skill learning: evidence from continuous theta-burst magnetic stimulation. J. Cogn. Neurosci. 24, 80–92. doi: 10.1162/jocn_a_00108

Cohen, A., Ivry, R. I., and Keele, S. W. (1990). Attention and structure in sequence learning. J. Exp. Psychol. Learn. Mem. Cogn. 16, 17–30. doi: 10.1037/0278-7393.16.1.17

Derosiere, G., Zénon, A., Alamia, A., and Duque, J. (2017). Primary motor cortex contributes to the implementation of implicit value-based rules during motor decisions. Neuroimage 146, 1115–1127. doi: 10.1016/j.neuroimage.2016.10.010

Desimone, R., and Duncan, J. (1995). Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 18, 193–222. doi: 10.1146/annurev.ne.18.030195.001205

Destrebecqz, A., and Cleeremans, A. (2001). Can sequence learning be implicit? New evidence with the process dissociation procedure. Psychon. Bull. Rev. 8, 343–350. doi: 10.3758/bf03196171

Destrebecqz, A., Peigneux, P., Laureys, S., Degueldre, C., Del Fiore, G., and Aerts, J. (2005). The neural correlates of implicit and explicit sequence learning: interacting networks revealed by the process dissociation procedure. Learn. Mem. 12, 480–490. doi: 10.1101/lm.95605

Feldman, H., and Friston, K. J. (2010). Attention, uncertainty and free-energy. Front. Hum. Neurosci. 4:215. doi: 10.3389/fnhum.2010.00215

Filali-Sadouk, N., Castet, E., Olivier, E., and Zenon, A. (2010). Similar effect of cueing conditions on attentional and saccadic temporal dynamics. J. Vis. 10, 21.1–21.13. doi: 10.1167/10.4.21

Frensch, P. A., Lin, J., and Buchner, A. (1998). Learning versus behavioral expression of the learned: the effects of a secondary tone-counting task on implicit learning in the serial reaction task. Psychol. Res. 61, 83–98. doi: 10.1007/s004260050015

Gold, J. I., Law, C.-T., Connolly, P., and Bennur, S. (2008). The relative influences of priors and sensory evidence on an oculomotor decision variable during perceptual learning. J. Neurophysiol. 100, 2653–2668. doi: 10.1152/jn.90629.2008

Greenwald, A. G., Klinger, M. R., and Schuh, E. S. (1995). Activation by marginally perceptible (“subliminal”) stimuli: dissociation of unconscious from conscious cognition. J. Exp. Psychol. Gen. 124, 22–42. doi: 10.1037/0096-3445.124.1.22

Henderson, J. M., and Hayes, T. R. (2017). Meaning-based guidance of attention in scenes as revealed by meaning maps. Nat. Hum. Behav. 1, 743–747. doi: 10.1038/s41562-017-0208-0

Itti, L. (2005). “CHAPTER 94 - models of bottom-up attention and saliency,” in Neurobiology of Attention, eds L. Itti, G. Rees and J. K. Tsotsos (Burlington, MA: Academic Press), 576–582. doi: 10.1016/B978-012375731-9/50098-7

Jiang, Y., and Chun, M. M. (2001). Selective attention modulates implicit learning. Q. J. Exp. Psychol. A 54, 1105–1124. doi: 10.1080/713756001

Kanai, R., Tsuchiya, N., and Verstraten, F. A. J. (2006). The scope and limits of top-down attention in unconscious visual processing. Curr. Biol. 16, 2332–2336. doi: 10.1016/j.cub.2006.10.001

Koch, C., and Tsuchiya, N. (2007). Attention and consciousness: two distinct brain processes. Trends Cogn. Sci. 11, 16–22. doi: 10.1016/j.tics.2006.10.012

Koch, C., and Tsuchiya, N. (2012). Attention and consciousness: related yet different. Trends Cogn. Sci. 16, 103–105. doi: 10.1016/j.tics.2011.11.012

Love, J., Selker, R., Verhagen, J., Marsman, M., Gronau, Q. F., Jamil, T., et al. (2015). Software to sharpen your stats. APS Obs. 28, 27–29. Available online at: https://www.narcis.nl/publication/RecordID/oai%3Adare.uva.nl%3Apublications%2Fb544692f-ce8a-41ea-8b8a-c2755aa927b1

Lovibond, P. F., and Shanks, D. R. (2002). The role of awareness in Pavlovian conditioning: empirical evidence and theoretical implications. J. Exp. Psychol. Anim. Behav. Process. 28, 3–26. doi: 10.1037/0097-7403.28.1.3

Manohar, S. G., and Husain, M. (2013). Attention as foraging for information and value. Front. Hum. Neurosci. 7:711. doi: 10.3389/fnhum.2013.00711

Masson, M. E. J. (2011). A tutorial on a practical Bayesian alternative to null-hypothesis significance testing. Behav. Res. Methods 43, 679–690. doi: 10.3758/s13428-010-0049-5

Mastropasqua, T., and Turatto, M. (2015). Attention is necessary for subliminal instrumental conditioning. Sci. Rep. 5:12920. doi: 10.1038/srep12920

Mulckhuyse, M., Talsma, D., and Theeuwes, J. (2007). Grabbing attention without knowing: automatic capture of attention by subliminal spatial cues. Vis. Cogn. 15, 779–788. doi: 10.1080/13506280701307001

Mulckhuyse, M., Talsma, D., and Theeuwes, J. (2010). Grabbing attention without knowing: automatic capture of attention by subliminal spatial cues. J. Vis. 7:1081. doi: 10.1167/7.9.1081

Mulckhuyse, M., and Theeuwes, J. (2010). Unconscious attentional orienting to exogenous cues: a review of the literature. Acta Psychol. 134, 299–309. doi: 10.1016/j.actpsy.2010.03.002

Musz, E., Weber, M. J., and Thompson-Schill, S. L. (2015). Visual statistical learning is not reliably modulated by selective attention to isolated events. Atten. Percept. Psychophys. 77, 78–96. doi: 10.3758/s13414-014-0757-5

Newell, B. R., and Shanks, D. R. (2014). Unconscious influences on decision making: a critical review. Behav. Brain Sci. 37, 1–19. doi: 10.1017/S0140525X12003214

Nissen, M. J., and Bullemer, P. (1987). Attentional requirements of learning: evidence from performance measures. Cogn. Psychol. 19, 1–32. doi: 10.1016/0010-0285(87)90002-8

Olivers, C. N. L., and Nieuwenhuis, S. (2005). The beneficial effect of concurrent task-irrelevant mental activity on temporal attention. Psychol. Sci. 16, 265–269. doi: 10.1111/j.0956-7976.2005.01526.x

Peremen, Z., and Lamy, D. (2014). Do conscious perception and unconscious processing rely on independent mechanisms? A meta-contrast study. Conscious. Cogn. 24, 22–32. doi: 10.1016/j.concog.2013.12.006

Shanks, D. R. (2003). Attention and awareness in “implicit” sequence learning. Adv. Conscious. Res. 48, 11–42. doi: 10.1075/aicr.48.05sha

Shanks, D. R., and St. John, M. F. (1994). Characteristics of dissociable human learning-systems. Behav. Brain Sci. 17, 367–395. doi: 10.1017/s0140525x00035032

Smyth, A. C., and Shanks, D. R. (2008). Awareness in contextual cuing with extended and concurrent explicit tests. Mem. Cognit. 36, 403–415. doi: 10.3758/mc.36.2.403

Stadler, M. A. (1995). Role of attention in implicit learning. J. Exp. Psychol. Learn. Mem. Cogn. 21, 674–685. doi: 10.1037/0278-7393.21.3.674

Summerfield, C., and Egner, T. (2009). Expectation (and attention) in visual cognition. Trends Cogn. Sci. 13, 403–409. doi: 10.1016/j.tics.2009.06.003

Tsuchiya, N., and Koch, C. (2016). “The relationship between consciousness and top-down attention,” in The Neurology of Consciousness: Cognitive Neuroscience and Neuropathology, 2nd Edn. eds S. Laureys, O. Gosseries and G. Tononi (San Diego, CA: Academic Press), 71–91. doi: 10.1016/B978-0-12-800948-2.00005-4

Turk-Browne, N. B., Jungé, J. A., and Scholl, B. J. (2005). The automaticity of visual statistical learning. J. Exp. Psychol. Gen. 134, 552–564. doi: 10.1037/0096-3445.134.4.552

Vadillo, M. A., Konstantinidis, E., and Shanks, D. R. (2016). Underpowered samples, false negatives and unconscious learning. Psychon. Bull. Rev. 23, 87–102. doi: 10.3758/s13423-015-0892-6

Vossel, S., Geng, J. J., and Friston, K. J. (2014). Attention, predictions and expectations and their violation: attentional control in the human brain. Front. Hum. Neurosci. 8:490. doi: 10.3389/fnhum.2014.00490

Wang, B., and Theeuwes, J. (2018). Statistical regularities modulate attentional capture. J. Exp. Psychol. Hum. Percept. Perform. 44, 13–17. doi: 10.1037/xhp0000472

Keywords: unconscious learning, eye movements, visual attention, eye tracking, implicit learning

Citation: Alamia A, Solopchuk O and Zénon A (2018) Strong Conscious Cues Suppress Preferential Gaze Allocation to Unconscious Cues. Front. Hum. Neurosci. 12:427. doi: 10.3389/fnhum.2018.00427

Received: 17 July 2018; Accepted: 01 October 2018;

Published: 16 October 2018.

Edited by:

Xiaolin Zhou, Peking University, China

Reviewed by:

Jing Chen, Shanghai University of Sport, China

Ping Wei, Capital Normal University, China

Copyright © 2018 Alamia, Solopchuk and Zénon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andrea Alamia, artipago@gmail.com
