Abstract

The present paper intends to draw the conception of language implied in the technique of word embeddings that supported the recent development of deep neural network models in computational linguistics. After a preliminary presentation of the basic functioning of elementary artificial neural networks, we introduce the motivations and capabilities of word embeddings through one of its pioneering models, word2vec. To assess the remarkable results of the latter, we inspect the nature of its underlying mechanisms, which have been characterized as the implicit factorization of a word-context matrix. We then discuss the ordinary association of the “distributional hypothesis” with a “use theory of meaning,” often justifying the theoretical basis of word embeddings, and contrast them to the theory of meaning stemming from those mechanisms through the lens of matrix models (such as vector space models and distributional semantic models). Finally, we trace back the principles of their possible consistency through Harris’s original distributionalism up to the structuralist conception of language of Saussure and Hjelmslev. Other than giving access to the technical literature and state of the art in the field of natural language processing to non-specialist readers, the paper seeks to reveal the conceptual and philosophical stakes involved in the recent application of new neural network techniques to the computational treatment of language.

Similar content being viewed by others

Notes

See for instance Hale and Wright (1997).

See for instance Christopher Manning and Richard Socher’s tutorial “Deep Learning for Natural Language Processing (without Magic)” at https://nlp.stanford.edu/courses/NAACL2013/, implicitly denouncing such attitudes in the reception of field.

The following presentation is deliberately concise and intends to give a background idea only. The reader already acquainted with NN architectures may skip this section. A complete presentation of deep NNs can be found in Goodfellow et al. (2016). See Schmidhuber (2015) for a historical overview of deep learning techniques in neural networks.

Each one of those transformations consists typically of n weighted sums \(s_{i}=\sum w_{ij} x_{j}\) of the components x j of the input vector (where 1 ≤ i ≤ n, 1 ≤ j ≤ m, with n the dimension of the output vector and m the dimension of the input vector), plus a bias term b i such that z i = s i + b i. A non-linear transformation a i = f(z i) is computed on top of that, such as a sigmoid function which “squeezes” the result of the linear transformation z between − 1 and 1. If the weights w ij are expressed as a matrix W of dimensions n × m and the biases b i as an n-dimensional vector, the entire transformation can be expressed as a = f(W x + b). Each layer of the network (i.e., each successive transformation) has a comparable form, and the parameters to be adjusted correspond to the weights collected in the matrices W and the biases of the vectors b of each layer.

Implementing (different versions of) stochastic gradient descent.

The whole system can thus be seen as a procedure to approximate any kind of function. For an accessible presentation of universal approximation theorems, see Nielsen (2015, ch. 4).

See for instance Dahl et al. 2012.

See Manning and Schütze 1999 for a detailed presentation.

As it is the case for the vocabulary of pre-trained word vectors available for download at the official word2vec website (Google inc. 2013). This vocabulary contains all the word forms seen in the corpus (for instance, “house” and “houses” are two different words of the vocabulary) as well as proper names and phrases such as “college grads”, “geographically dispersed”, “Volga river”, or “Chief Executive Steve Ballmer”. The vocabulary is ordered by the frequency of words and phrases in the training corpus. The models preceding word2vec were however of a much smaller scale.

The study of the connectionist image of language falls out the scope of the present paper. For an analysis convergent with the one we elaborate here, see Maniglier (2016).

Google had officially adopted deep NN technology for speech recognition the year before (Jaitly et al. 2012).

A window size is defined in advance, determining the number of words to be taken as context words immediately to the left and to the right of the chosen word.

In this sense, the NN implementing the model is “shallow,” i.e., not “deep.”

Typically of dimension d = 300, also defined in advance.

Standard normalization function is softmax. In the case of word2vec, more efficient versions are used, namely hierarchical softmax and negative sampling.

As provided by the word2vec package (Google inc. 2013). It is important to bear in mind that models resulting from different training procedures as well as from different training corpora may differ substantially and that there is no unique linguistic model for a language.

Relying significantly on the exceptional computational and data resources of a company such as Google, which were not available to most researchers at the time.

The cosine distance between the vectors v and u is defined as follows: \(\textrm {cosine distance}(u,v) = 1-\frac {v.u}{\|v\|\|u\|}\), where x.y is the dot product between the vectors x and y and ∥⋅∥ is the norm of the vector.

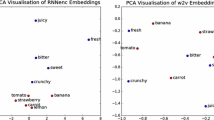

Computed through techniques such as principal component analysis (PCA) or T-distributed stochastic neighbor embedding (t-SNE).

An online tool for visualizing such projections can be found at https://projector.tensorflow.org.

Other than increasing the performance of linguistic tools, such a profusion of models brought about important analytical insights into the mechanisms of NLP and of NN models in particular. Incidentally, they revealed a fundamental fact of linguistic models that should not be overlooked: in spite of a relative equivalence or convergence on elementary results, no two models are identical. In the specific case of NN word embeddings, the non-deterministic nature of the training procedure can yield diverging models even with identical training parameters. Such a disparity is not only related to the irregular behavior of those models, but also to the deep problem of the unity and homogeneity of language itself. For this reason, the facts revealed by word embeddings which will interest us in the following pages should neither be ascribed to a single model, nor to the nature of a unified and homogeneous language, but to a common property of a family of related but differing models with respect to the possibility of general yet partial reconstructions of the underlying mechanisms of specific language practices.

This idea is not entirely new. Accompanied by a significant increase in computational capabilities, the resurgence of empiricist, frequentist, corpus-based and emergentist trends in linguistics in the last decades has sufficiently shown the strengths of all kinds of data-driven approaches to language structure. For an overview of those different trends, see Elman (1996), Bybee and Hopper (2001), McEnery and Wilson (2001), MacWhinney (1999), and Chater et al. (2015). The novel means by which word2vec and alike models connect unsupervised treatment of corpora and derivation of a global implicit structure are however endowed with their own originality.

A similar argument holds for CBOW.

Schnabel et al. (2015) noted that NN word embeddings encode a great amount of information about word frequencies.

Formally, \(\text {PMI}(x,y)=\log \frac {p(x,y)}{p(x)p(y)}\).

Lenci (2018) gives a recent account of existing DSMs with special attention on the kind of matrix they employ.

We follow here the general perspective of Turney and Pantel (2010, p. 148): “In general, we may have a word-context matrix, in which the context is given by words, phrases, sentences, paragraphs, chapters, documents, or more exotic possibilities, such as sequences of characters or patterns.

As we have seen, PMI is one of the usual ways of determining the weights of the frequencies.

The similarity between terms (resp. contexts) can be measured through the already mentioned similarity measures among the corresponding row (resp. column) vectors. In practice, dot product is the common measure in this case.

For instance, when he argues that “Harris talks about meaning differences, but […] the distributional hypothesis professes to uncover meaning similarities. There is no contradiction in this, since differences and similarities are, so to speak, two sides of the same coin” (p. 51, note 3); or when he affirms that “the distributional methodology is only concerned with meaning differences, or, expressed in different terms, with semantic similarity” (p. 37).

Incidentally, notice that discrimination is not necessarily symmetric, which can be easily seen by observing that {x, y} discriminate {a, b} but {a, b} discriminate {x, y, z} (likewise {a, b, c} discriminate {w, x, y, z}, and not just {y, z}).

LSA terms were taken to be words and contexts were documents or paragraphs. The latent space derived through SVD was therefore singularly suited for the task of information retrieval. But the idea of a latent space as we have presented it is not essentially tied to such a task, no more than to word-by-document matrices, as neither is SVD as a general technique for deriving relevant dimensions of that space. The generality of LSA methods is not unknown to Landauer and his team, as reflected in Landauer et al. (2007), where the relevance of LSA for other models and tasks is assessed.

In fact, it can be shown that such k-approximation is the best possible approximation, i.e., minimizes the approximation errors with respect to the Frobenius norm.

The same is true for a possible disjoint distribution of “say” and “make” with respect to that of “says” and “makes” if the similarity of “she” and “we” can be established by their joint distribution outside those contexts.

To get an idea of a rather widespread feeling within the NLP community in this respect, it might be worth reminding here the “snappy” version of the words of Fred Jelinek, of the IBM speech group, as reported in Jurafsky and Martin (2008, p. 189): “Every time I fire a linguist the performance of the recognizer improves”. Let us better not try to know what NLP computer scientists think about philosophers.

See, for instance, Spence and Owens (1990), for a classical study on the correlation between co-occurrence and association strength.

A solid defense of this position can be found in Glenberg and Mehta (2008).

Incidentally, this entails a critique of a conception of language as a “bag of words,” which is common in the field of NLP (including word2vec, the name of one of the models of which—CBOW—makes explicit reference to it): “Some authors have also characterized LSA as a “bag-of-words” technique. This is true in the narrow sense that the data it uses does not include word order within passages. However, what the words are and what the model does with the words is critically different from the keyword or “vector space models” of current search engines with which the sobriquet of “bag-of-words method” is usually associated” (Landauer et al. 2007, p. 21).

The word “my” appears in 8665 (\(\sim 0.027\%\)) of the 316,928 different ± 2-word contexts surrounding the word “house” within the Corpus of Contemporary American English, compared, for instance, to only 3 of those contexts (\(\sim 0.0000095\%\)) in which the word “bungalow” appears.

Some aspects of the phenomenon of linguistic reduplication have found an interesting treatment in “The salad-salad paper” (Ghomeshi et al. 2004).

Understanding by “documents” regions of text of any possible length—from extended regions down to sentences and even below.

Which already raises the issue of the identity of words as fundamental units of language.

One could also add that hybrid models are conceivable, in which some columns represent words while others documents, which would result in an increase of precision in the analysis of similarity, without any loss of information. This would be unlikely if both models were different in kind.

It might be relevant to remind here that both models applying SVD as well as word2vec and other related NN models produce different representations for terms and contexts.

To use a classical Fregean way of referring to functional expressions. Cf. Frege (1984).

Terms do not have any priority over contexts in this back and forth, since, as we have seen, if terms are arguments for functional contexts, the inverse can also be true, by simple transposition of the matrix. Terms and contexts differ only formally, the only important thing is that one is seen as saturated and the other as unsaturated.

This understanding of articulated opposition and structural similarity in terms of duality and bi-duality, as well as its possible connection to the conditions for typing logical terms through bi-orthogonality relations owes everything to a joint work in progress with Luc Pellissier.

If attention is paid to the technical details of word2vec models, it appears that such duality is pervasive: not only the duality between central words and context words motivates the introduction of two different models (CBOW and Skip-gram, the former producing vector representations of context words out of central words, and vice versa), but for each of those models, two different sets of vector representations are produced corresponding to words taken as central or as context words, and the combination of those two representations for the same word has not received a satisfactory conceptual solution (in the original word2vec model, representations of output vectors are simply discarded; in other models, both vectors are added). For a careful assessment of the technical implications attached to the possible ways of associating both sets of vectors, see Levy et al. (2015, §3.3).

Of course, linguistic constraints are not as minimal, rigid and explicit as those of chess or Go, and leave the room for strategies to be at the origin of new rules. This is how a deeper notion of use can find its place in the new landscape. Such creations are, however, rare at a large scale and constantly guided by existing regularities at each state of the language.

This is, for instance, what Levy et al. (2015) carry out in a way, although they only focus on the technical details, without assessing the conceptual aspects concerning language itself.

This circumstance motivates the frequent conflation between MMs and DSMs (distributional semantic models). See, for instance, Baroni and Lenci (2010).

In the particular case of LSA, for instance, although implied in the idea of manipulations of strings of words and abstract mutual constraints between terms and contexts, syntactic properties are entirely disregarded as dimensions of language worth capturing by the model. In fact, syntax is discarded twice in the original formulation of LSA: first, because the model is not supposed to capture syntactic features, but only semantic content; second, because syntactic contribution to semantic content is neglected by design, namely by neglecting word order. Certainly, those two exclusions are not oversights and find valid grounds and explicit explanations: the first one in the fact that LSA was conceived above all as a system of information retrieval (and not as a general language model), the second in the fact that, from the specific perspective of information retrieval, syntactic structure (as given by word order) conveys negligible information. Landauer and his team provide fairly convincing arguments that estimate the contribution of word order to the meaning LSA is supposed to capture at around 10–15% (Landauer et al. 2007, pp. 25–29).

Carnap’s broadening of the notion of syntax was originally intended to provide a syntactic and linguistic conception of logic: “logic will become a part of syntax, provided that the latter is conceived in a sufficiently wide sense and formulated with exactitude” (Carnap 2001, pp. 2). He thus introduced the notion of “logical syntax” of a language to refer to “the formal theory of the linguistic forms of that language—the systematic statement of the formal rules which govern it together with the development of the consequences which follow from these rules.” And he defined “formal” in the following terms: “A theory, a rule, a definition, or the like is to be called formal when no reference is made in it either to the meaning of the symbols (for example, the words) or to the sense of the expressions (e.g. the sentences), but simply and solely to the kinds and order of the symbols from which the expressions are constructed.” (Carnap 2001, pp. 1). Morris, in turn, defined “syntactics” as the “the study of syntactical relations of signs to one another in abstraction from the relations of signs to objects or to interpreters” (Morris 1938, p. 13), while semantics “deals with the designation of signs to their designata” (Morris 1938, p. 21).

Certainly, from the viewpoint of the study of natural languages, multiple relations between both dimensions have been proposed, and what is known as “syntax-semantics interface” remains an active domain in the field (see Rappaport Hovav and Levin 2015 for an overview). Yet rather than eroding the frontier between both dimensions of language, those approaches presuppose it and intend to specify it.

Consider for instance the fact that “er” will probably be an observed right context for “play” and “lov” as well, although for different reasons, involving here also the relations between syntactic and semantic features.

The study of these more recent models, which have redefined the current state of the art, could therefore significantly contribute to the questions raised in these pages. Unfortunately, such an inquiry falls outside the scope of the present paper.

In the first paragraph, Harris affirms: “Here we will discuss how each language can be described in terms of a distributional structure, i.e. in terms of the occurrence of parts (ultimately sounds) relative to other parts, and how this description is complete without intrusion of other features such as history or meaning” (Harris 1970, p. 775).

Harris follows Bloomfield’s program on this point: “As Leonard Bloomfield pointed out, it frequently happens that when we do not rest with the explanation that something is due to meaning, we discover that it has a formal regularity or ‘explanation’. It may still be ‘due to meaning’ in one sense, but it accords with a distributional regularity.” (Harris 1970, 785).

Shortly after, he also characterizes distribution as “the freedom of occurrence of portions of an utterance relatively to each other” (Harris 1960, p. 5).

Harris despises as much as Landauer a conception of language as a “bag of words.” See Harris(1970, p. 785).

The interested reader could simply refer to Harris’s (1960).

Note that Harris’s procedure can be understood as an elementary technique of dimensionality reduction.

This is no less true of written or any other kind of language than of spoken language.

This fact has been verified since by multiple empirical studies. See for instance (Liberman 1957).

It would not be too difficult to interpret NN supervised training along these lines, the training set being typically composed, as we have seen, of pairs of vector representations belonging to heterogeneous domains of content (e.g. the image of an animal and its written name). For an interpretation of NN training in terms of structuralist procedures, see Maniglier (2016).

Incidentally, MMs help to understand that syntagmatic relations are not symmetrical but connect saturated terms with unsaturated ones—an idea that is absent in Saussure’s Course. Hjelmslev’s functional reformulation of structuralism will make this asymmetry explicit.

While in his early work Hjelmslev thought of those conceptual zones in semantic terms, his theory evolved into a purely formal conception based on abstract notions of correlation, participation and exclusion. See Hjelmslev’s (1975, §Gb3.1) and Herreman’s introduction to this difficult text (Herreman 2011).

We presuppose here the existence of adjectives, although such a class should in principle be structurally derived as well, partly based on the fact that the terms that fall under it are subject to a comparative category, following the basic mechanisms of (bi)-duality.

Here again, the class of nouns is presupposed. Its actual inference would in part stand on the possible relation to the class of adjectives. As we already mentioned, this semantic characterization of comparison in terms of intensity will be abandoned in Hjelmslev’s later works, in favor of purely formal definitions.

This is not necessarily the case for other languages, in which the comparative category is otherwise structured. See Hjelmslev (2016, §7).

Due to internal dependencies between the six values (which tend to respect the original pairs), not every combination is actually possible. Hjelmslev identifies a total of seven, defining axes of two up to six values: α, A; β, B, γ; β, B, Γ; β, B, γ, Γ; α, A, β, B, γ; α, A, β, B, Γ and α, A, β, B, γ, Γ.

Although we presented the elementary mechanisms of Hjelmslev’s theory through mostly morphological categories, their formal definition could, in theory, be applied to any linguistic level in which a latent structure is to be drawn as the underlying principle of identification and characterization of significant units.

This does not imply that the complex phenomenon of meaning is reducible to the linguistic mechanisms by which language organizes its semantic effects, since a theory of meaning could hardly avoid the difficult question of the contribution of perception to meaning. The structuralist perspective just exposed suggests already a possible treatment of this question when it appeals to an heterogeneous plane as a condition for establishing discriminating criteria for linguistic units, since that other plane could in principle be related to perceptual properties. But the role played by perception in this case is still far from being clear.

References

Baroni, M., & Lenci, A. (2010). Distributional memory: a general framework for corpus-based semantics. Computational Linguistics, 36(4), 673–721. https://doi.org/10.1162/coli_a_00016.

Baroni, M., Dinu, G., & Kruszewski, G. (2014). Don’t count, predict! A systematic comparison of context-counting vs. context-predicting semantic vectors. In Proceedings of the 52nd annual meeting of the association for computational linguistics (Volume 1: Long Papers) (pp. 238–247): Association for Computational Linguistics, DOI https://doi.org/10.3115/v1/P14-1023.

Bengio, Y. (2008). Neural net language models. Scholarpedia, 3(1), 3881. https://doi.org/10.4249/scholarpedia.3881. Revision #140963.

Bengio, Y., Ducharme, R., Vincent, P., & Janvin, C. (2003). A neural probabilistic language model. Journal of Machine Learning Research, 3, 1137–1155.

Blei, D.M., Ng, A.Y., & Jordan, M.I. (2003). Latent Dirichlet allocation. Journal of Machine Learning Research, 3, 993–1022.

Blitzer, J., Weinberger, K., Saul, L.K., & Pereira, F.C.N. (2005). Hierarchical distributed representations for statistical language modeling. In Advances in neural and information processing systems, Vol. 17. Cambridge: MIT Press.

Bojanowski, P., Grave, E., Joulin, A., & Mikolov, T. (2016). Enriching word vectors with subword information. arXiv:1607.04606.

Bullinaria, J.A., & Levy, J.P. (2012). Extracting semantic representations from word co-occurrence statistics: stop-lists, stemming, and svd. Behavior Research Methods, 44(3), 890–907. https://doi.org/10.3758/s13428-011-0183-8.

Bybee, J.L., & Hopper, P.J. (Eds.). (2001). Frequency and the emergence of linguistic structure. No. 45 in Typological studies in language. Amsterdam: Benjamins. OCLC: 216471429.

Carnap, R. (2001). Logical syntax of language. Routledge. OCLC: 916122384.

Caron, J. (2001). Computational information retrieval. chap. Experiments with LSA scoring: optimal rank and basis, (pp. 157–169). Philadelphia: Society for Industrial and Applied Mathematics.

Chater, N., Clark, A., Goldsmith, J.A., & Perfors, A. (2015). Empiricism and language learnability, 1st edn. Oxford: Oxford University Press. OCLC: ocn907131354.

Chen, J., Tao, Y., & Lin, H. (2018). Visual exploration and comparison of word embeddings. Journal of Visual Languages and Computing, 48, 178–186. https://doi.org/10.1016/j.jvlc.2018.08.008.

Collobert, R., & Weston, J. (2008). A unified architecture for natural language processing: deep neural networks with multitask learning. In Proceedings of the 25th international conference on machine learning, ICML ’08. http://doi.acm.org/10.1145/1390156.1390177 (pp. 160–167). New York: ACM.

Dahl, G.E., Yu, D., Deng, L., & Acero, A. (2012). Context-dependent pre-trained deep neural networks for large-vocabulary speech recognition. IEEE Transactions on Audio, Speech, and Language Processing, 20(1), 30–42. https://doi.org/10.1109/TASL.2011.2134090.

Deerwester, S.C., Dumais, S.T., Landauer, T.K., Furnas, G.W., & Harshman, R.A. (1990). Indexing by latent semantic analysis. JASIS, 41(6), 391–407.

Deleuze, G. (1994). Difference and repetition. New York: Columbia University Press.

Dennis, S., Landauer, T., Kintsch, W., & Quesada, J. (2003). Introduction to latent semantic analysis. Slides from the tutorial given at the 25th anual meeting of the cognitive science society. Boston.

Devlin, J., Chang, M., Lee, K., & Toutanova, K. (2018). BERT: pre-training of deep bidirectional transformers for language understanding. arXiv:1810.04805.

Elman, J.L. (1990). Finding structure in time. Cognitive Science, 14, 213–252.

Elman, J.L. (Ed.). (1996). Rethinking innateness: a connectionist perspective on development. Neural network modeling and connectionism. Cambridge: MIT Press.

Firth, J.R. (1957). A synopsis of linguistic theory 1930–1955. In Studies in linguistic analysis (pp. 1–32). Oxford: Blackwell.

Frege, G. (1984). Function and concept. In McGuinness, B. (Ed.) Collected papers on mathematics, logic, and philosophy, pp. 137–156. Basil Blackwell.

Ghomeshi, J., Jackendoff, R., Rosen, N., & Russell, K. (2004). Contrastive focus reduplication in english (the salad-salad paper). Natural Language & Linguistic Theory, 22(2), 307–357. https://doi.org/10.1023/B:NALA.0000015789.98638.f9.

Girard, J.Y. (2001). Locus solum: from the rules of logic to the logic of rules. Mathematical Structures in Computer Science, 11(3), 301–506.

Gladkova, A., Drozd, A., & Matsuoka, S. (2016). Analogy-based detection of morphological and semantic relations with word embeddings: what works and what doesn’t. In SRW@HLT-NAACL (pp. 8–15): The Association for Computational Linguistics.

Glenberg, A.M., & Mehta, S. (2008). Constraint on covariation: it’s not meaning. Special issue of the Italian Journal of Linguistics, 1(20), 241–264.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. Cambridge: MIT Press.

Hale, B., & Wright, C. (1997). A companion to the philosophy of language. Oxford: Blackwell Publishers.

Hamilton, W.L., Leskovec, J., & Jurafsky, D. (2016). Diachronic word embeddings reveal statistical laws of semantic change. arXiv:1605.09096.

Harris, Z. (1960). Structural linguistics. Chicago: University of Chicago Press.

Harris, Z. (1970). Distributional structure. In Papers in Structural and Transformational Linguistics (pp. 775–794). Dordrecht: Springer.

Herreman, A. (2011). Analyser l’analyse, décrire la description. texto! Textes & Cultures, 16, 2. http://www.revue-texto.net/index.php?id=2875.

Hewitt, J., & Manning, C.D. (2019). A structural probe for finding syntax in word representations. In Proceedings of the 2019 conference of the North American chapter of the association for computational linguistics: human language technologies, volume 1 (long and short papers) (pp. 4129–4138). Minneapolis: Association for Computational Linguistics, DOI https://doi.org/10.18653/v1/N19-1419.

Hinton, G.E. (1986). Learning distributed representations of concepts. In Proceedings of the Eighth annual conference of the cognitive science society (pp. 1–12). Hillsdale: Erlbaum.

Hjelmslev, L. (1935). La catégorie des cas. Munchen: Wilhelm Fink.

Hjelmslev, L. (1953). Prolegomena to a theory of language. Baltimore: Wawerly Press.

Hjelmslev, L. (1975). Résumé of a Theory of Language. No. 16 in Travaux du Cercle linguistique de Copenhague. Copenhagen: Nordisk Sprog-og Kulturforlag.

Hjelmslev, L. (2016). Système linguistique et changement linguistique. Paris: Classiques Garnier.

Hofmann, T. (1999). Probabilistic latent semantic indexing. In Proceedings of the 22Nd annual international ACM SIGIR conference on research and development in information retrieval, SIGIR ’99. http://doi.acm.org/10.1145/312624.312649 (pp. 50–57). New York: ACM.

Hofmann, T. (2001). Unsupervised learning by probabilistic latent semantic analysis. Machine Learning, 42(1–2), 177–196. https://doi.org/10.1023/A:1007617005950.

Howard, J., & Ruder, S. (2018). Fine-tuned language models for text classification. arXiv:1801.06146.

Google inc. (2013). word2vec, https://code.google.com/archive/p/word2vec/.

Jaitly, N., Nguyen, P., Senior, A., & Vanhoucke, V. (2012). Application of pretrained deep neural networks to large vocabulary speech recognition. In Proceedings of Interspeech 2012.

Jansen, S. (2017). Word and phrase translation with word2vec. arXiv:1705.03127.

Jurafsky, D., & Martin, J.H. (2008). Speech and language processing, 2nd edn. Upper Saddle River: Prentice Hall.

Krivine, J.L. (2001). Typed lambda-calculus in classical Zermelo-Fraenkel set theory. Archive for Mathematical Logic, 40(3), 189–205.

Kulkarni, V., Al-Rfou, R., Perozzi, B., & Skiena, S. (2014). Statistically significant detection of linguistic change. arXiv:1411.3315.

Landauer, T.K. (2002). On the computational basis of learning and cognition: arguments from LSA, (pp. 43–84): Academic Press.

Landauer, T.K., Foltz, P.W., & Laham, D. (1998). An introduction to latent semantic analysis. Discourse Processes, 25, 259–284.

Landauer, T.K., McNamara, D.S., Dennis, S., & Kintsch, W. (Eds.). (2007). Handbook of latent semantic analysis. New Jersey: Lawrence Erlbaum Associates.

Lenci, A. (2008). Distributional semantics in linguistic and cognitive research. From context to meaning: distributional models of the lexicon in linguistics and cognitive science. Italian Journal of Linguistics, 1(20), 1–31.

Lenci, A. (2018). Distributional models of word meaning. Annual Review of Linguistics, 4 (1), 151–171. https://doi.org/10.1146/annurev-linguistics-030514-125254.

Levy, O., & Goldberg, Y. (2014a). Linguistic regularities in sparse and explicit word representations. In Proceedings of the eighteenth conference on computational natural language learning, CoNLL 2014, Baltimore, Maryland, USA, June 26-27, 2014 (pp. 171–180).

Levy, O., & Goldberg, Y. (2014b). Neural word embedding as implicit matrix factorization. In Proceedings of the 27th international conference on neural information processing systems - volume 2, NIPS’14 (pp. 2177–2185). Cambridge: MIT Press.

Levy, O., Goldberg, Y., & Dagan, I. (2015). Improving distributional similarity with lessons learned from word embeddings. TACL, 3, 211–225.

Li, J., Chen, X., Hovy, E.H., & Jurafsky, D. (2016). Visualizing and understanding neural models in NLP. In HLT-NAACL (pp. 681–691): The Association for Computational Linguistics.

Liberman, A.M. (1957). Some results of research on speech perception. The Journal of the Acoustical Society of America, 29(1), 117–123. https://doi.org/10.1121/1.1908635.

Linzen, T. (2016). Issues in evaluating semantic spaces using word analogies. arXiv:1606.07736.

Liu, S., Bremer, P., Thiagarajan, J.J., Srikumar, V., Wang, B., Livnat, Y., & Pascucci, V. (2018). Visual exploration of semantic relationships in neural word embeddings. IEEE Transactions on Visualization and Computer Graphics, 24(1), 553–562. doi.ieeecomputersociety.org/10.1109/TVCG.2017.2745141.

Lund, K., & Burgess, C. (1996). Producing high-dimensional semantic spaces from lexical co-occurrence. Behavior Research Methods, Instruments & Computers, 28 (2), 203–208. https://doi.org/10.3758/BF03204766.

Luong, T., Pham, H., & Manning, C.D. (2015). Bilingual word representations with monolingual quality in mind. In Proceedings of the 1st workshop on vector space modeling for natural language processing, VS@NAACL-HLT 2015, June 5, 2015, Denver, Colorado, USA (pp. 151–159).

MacWhinney, B. (Ed.). (1999). The emergence of language. Carnegie Mellon symposia on cognition. Mahwah: Lawrence Erlbaum Associates.

Maniglier, P. (2006). La vie énigmatique des signes. Paris: Léo Scheer.

Maniglier, P. (2016). Milieux de culture. In Beividas, W., Lopes, I. C., & Badir, S. (Eds.) Cem Anos com Saussure, Textos de Congresso Internacional, Vol. 2. Sao Paulo: Annablume editora.

Manning, C.D., & Schütze, H. (1999). Foundations of statistical natural language processing. Cambridge: MIT Press.

McEnery, A.M., & Wilson, A. (2001). Corpus linguistics: an introduction. Edinburgh: Edinburgh University Press.

Mikolov, T., Chen, K., Corrado, G., & Dean, J. (2013a). Efficient estimation of word representations in vector space. arXvi:1301.3781.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G., Dean, J., Le, Q., & Strohmann, T. (2013b). Learning representations of text using neural networks. NIPS Deep learning workshop 2013 slides. NIPS Deep Learning Workshop 2013.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G., & Dean, J. (2013c). Distributed representations of words and phrases and their compositionality. arXiv:1310.4546.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G., Dean, J., Le, Q., & Strohmann, T. (2013d). Learning representations of text using neural networks (nips deep learning workshop 2013 slides).

Mikolov, T., Yih, W.t., & Zweig, G. (2013e). Linguistic regularities in continuous space word representations. In Proceedings of the 2013 conference of the North American chapter of the association for computational linguistics: human language technologies (pp. 746–751): Association for Computational Linguistics.

Miller, G.A., & Charles, W.G. (1991). Contextual correlates of semantic similarity. Language and Cognitive Processes, 6(1), 1–28. https://doi.org/10.1080/01690969108406936.

Mnih, A., & Hinton, G. (2007). Three new graphical models for statistical language modelling. In Proceedings of the 24th international conference on machine learning, ICML ’07. http://doi.acm.org/10.1145/1273496.1273577 (pp. 641–648). New York: ACM.

Morris, C.W. (1938). Foundations of the theory of signs. Chicago: The University of Chicago Press.

Nickel, M., & Kiela, D. (2017). Poincaré embeddings for learning hierarchical representations. arXiv:1705.08039.

Nielsen, M.A. (2015). Neural networks and deep learning. Determination Press. http://neuralnetworksanddeeplearning.com/.

Österlund, A., Ödling, D., & Sahlgren, M. (2015). Factorization of latent variables in distributional semantic models. In Proceedings of the 2015 conference on empirical methods in natural language processing (pp. 227–231). Lisbon: Association for Computational Linguistics, DOI https://doi.org/10.18653/v1/D15-1024.

Park, D., Kim, S., Lee, J., Choo, J., Diakopoulos, N., & Elmqvist, N. (2018). Conceptvector: text visual analytics via interactive lexicon building using word embedding. IEEE Transactions on Visualization and Computer Graphics, 24 (1), 361–370. https://doi.org/10.1109/TVCG.2017.2744478.

Pennington, J., Socher, R., & Manning, C.D. (2014a). Glove: global vectors for word representation. In EMNLP, (Vol. 14 pp. 1532–1543).

Pennington, J., Socher, R., & Manning, C.D. (2014b). Glove: global vectors for word representation. https://nlp.stanford.edu/projects/glove/, https://nlp.stanford.edu/projects/glove/.

Peters, M.E., Neumann, M., Iyyer, M., Gardner, M., Clark, C., Lee, K., & Zettlemoyer, L. (2018). Deep contextualized word representations. arXiv:1802.05365.

Qiu, Y., & Frei, H.P. (1993). Concept based query expansion. In Proceedings of the 16th annual international ACM SIGIR conference on research and development in information retrieval, SIGIR ’93. http://doi.acm.org/10.1145/160688.160713 (pp. 160–169). New York: ACM.

Radford, A. (2018). Improving language understanding by generative pre-training.

Rappaport Hovav, M., & Levin, B. (2015). The syntax-semantics interface. In Lappin, S., & Fox, C. (Eds.) The handbook of contemporary semantic theory, chap. 19. https://onlinelibrary.wiley.com/doi/abs/10.1002/9781118882139.ch19 (pp. 593–624): Wiley-Blackwell.

Rastier, F. (2001). Arts et sciences du texte. Paris: Presses Universitaires de France.

Rastier, F., Cavazza, M., & Abeillé, A. (2001). Semantics for descriptions: from linguistics to computer science. Chicago: The University of Chicago Press.

Rumelhart, D.E., & McClelland, J.L. (1986). Parallel distributed processing. Cambridge: MIT Press.

Sahlgren, M. (2006). The word-space model: using distributional analysis to represent syntagmatic and paradigmatic relations between words in high-dimensional vector spaces. Ph.D. thesis. Stockholm: Stockholm University.

Sahlgren, M. (2008). The distributional hypothesis. Special issue of the Italian Journal of Linguistics, 1(20), 33–53.

Salton, G. (1971). The SMART retrieval system: experiments in automatic document processing. Upper Saddle River: Prentice-Hall.

Salton, G., Wong, A., & Yang, C.S. (1975). A vector space model for automatic indexing. Communications of the ACM, 18(11), 613–620.

Saussure, F. de. (1959). Course in general linguistics. New York: McGraw-Hill. Translated by Wade Baskin.

Saussure, F. de. (1995). Cours de linguistique générale. Grande Bibliothéque Payot. Paris: Editions Payot & Rivages.

Schmidhuber, J. (2015). Deep learning in neural networks: an overview. Neural Networks, 61, 85–117. https://doi.org/10.1016/j.neunet.2014.09.003.

Schnabel, T., Labutov, I., Mimno, D.M., & Joachims, T. (2015). Evaluation methods for unsupervised word embeddings. In Proceedings of the 2015 conference on empirical methods in natural language processing, EMNLP 2015, Lisbon, Portugal, September 17-21, 2015, pp. 298–307.

Schütze, H. (1992). Dimensions of meaning. In Proceedings of the 1992 ACM/IEEE conference on supercomputing, supercomputing ’92 (pp. 787–796). Los Alamitos: IEEE Computer Society Press.

Schütze, H. (1993). Word space. In Advances in neural information processing systems 5, [NIPS Conference] (pp. 895–902). San Francisco: Morgan Kaufmann Publishers Inc.

Schütze, H. (1998). Automatic word sense discrimination. Computational Linguistics, 24(1), 97–123.

Schwenk, H., & luc Gauvain, J. (2002). Connectionist language modeling for large vocabulary continuous speech recognition. In International conference on acoustics, speech and signal processing (pp. 765–768).

Senel, L.K., Utlu, I., Yücesoy, V., Koç, A., & Çukur, T. (2017). Semantic structure and interpretability of word embeddings. arXiv:1711.00331.

Sennrich, R., Haddow, B., & Birch, A. (2016). Neural machine translation of rare words with subword units. In Proceedings of the 54th annual meeting of the association for computational linguistics (volume 1: long papers) (pp. 1715–1725). Berlin: Association for Computational Linguistics, DOI https://doi.org/10.18653/v1/P16-1162.

Smilkov, D., Thorat, N., Nicholson, C., Reif, E., Viégas, F. B., & Wattenberg, M. (2016). Embedding projector: interactive visualization and interpretation of embeddings. arXiv:https://arxiv.org/abs/1611.05469.

Spence, D.P., & Owens, K.C. (1990). Lexical co-occurrence and association strength. Journal of Psycholinguistic Research, 19(5), 317–330. https://doi.org/10.1007/BF01074363.

Turian, J., Ratinov, L.A., & Bengio, Y. (2010). Word representations: a simple and general method for semi-supervised learning. In Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics (pp. 384–394). Uppsala: Association for Computational Linguistics.

Turney, P.D. (2008). A uniform approach to analogies, synonyms, antonyms, and associations. In Proceedings of the 22nd international conference on computational linguistics - volume 1, COLING ’08 (pp. 905–912). Stroudsburg: Association for Computational Linguistics.

Turney, P.D., & Pantel, P. (2010). From frequency to meaning: vector space models of semantics. arXiv:https://arxiv.org/abs/1003.1141.

Yang, Z., Dai, Z., Yang, Y., Carbonell, J., Salakhutdinov, R., & Le, Q.V. (2019). Xlnet: generalized autoregressive pretraining for language understanding.

Acknowledgments

The author wishes to thank the reviewers for their insightful remarks and generous suggestions.

Funding

This work was partly funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 839730.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Gastaldi, J.L. Why Can Computers Understand Natural Language?. Philos. Technol. 34, 149–214 (2021). https://doi.org/10.1007/s13347-020-00393-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13347-020-00393-9