- 1Institute of Management Studies, Goldsmiths, University of London, London, United Kingdom

- 2Holistic AI, London, United Kingdom

- 3HireVue, Inc., London, United Kingdom

Recent years have seen rapid advancements in selection assessments, shifting away from human and toward algorithmic judgments of candidates. Indeed, algorithmic recruitment tools have been created to screen candidates’ resumes, assess psychometric characteristics through game-based assessments, and judge asynchronous video interviews, among other applications. While research into candidate reactions to these technologies is still in its infancy, early research in this regard has explored user experiences and fairness perceptions. In this article, we review applicants’ perceptions of the procedural fairness of algorithmic recruitment tools based on findings from seven key studies, sampling over 1,300 participants between them. We focus on the sub-facets of behavioral control, the extent to which individuals feel their behavior can influence an outcome, and social presence, whether there is a perceived opportunity for social connection and empathy. While perceptions of overall procedural fairness are mixed, we find that fairness perceptions concerning behavioral control and social presence are mostly negative. Participants feel less confident that they are able to influence the outcome of algorithmic assessments compared to human assessments because algorithmic assessments are seen as more objective and less susceptible to manipulation. Participants also feel that the human element is lost when these tools are used since there is a lack of perceived empathy and interpersonal warmth. Since this field of research is relatively under-explored, we end by proposing a research agenda, recommending that future studies could examine the role of individual differences, demographics, and neurodiversity in influencing fairness perceptions of algorithmic recruitment.

Introduction

Recent years have seen rapid advances in the way that pre-employment tests are delivered, with commercial providers offering game- and image-based assessments, on-demand asynchronous video interviews, and tools to screen applicant resumes, all of which use algorithms to make decisions on the suitability of candidates (Albert, 2019; Guenole et al., forthcoming). Recent estimates indicate that over 75% of organizations are currently using or are interested in using algorithms in their talent sourcing, with 7% fully automating their approach (Laurano, 2022). Much of the work on algorithmic recruitment tools thus far has focused on performance metrics and bias (e.g., Köchling and Wehner, 2020; Landers et al., 2021; Hilliard et al., 2022a) rather than fairness, a social construct that is distinct from bias (Society for Industrial and Organizational Psychology, 2018). Perceptions of fairness can concern distributive fairness – fairness in outcomes – or procedural fairness – fairness in the procedure used during an assessment, typically characterized by perceived ability to influence a decision (Gilliland, 1993). Much of the research regarding the perceived fairness of algorithmic recruitment tools investigates procedural fairness since it is a concern shared by both candidates and human resources practitioners (Fritts and Cabrera, 2021; Mirowska and Mesnet, 2021).

In this review, we explore the perceived procedural fairness of algorithmic recruitment tools, where we define an algorithmic recruitment tool as one that combines non-traditional or unstructured data sources with machine learning to predict a job-relevant construct, such as personality (Guenole et al., 2022). Google Scholar was used to source the studies included in this review through searches including key words such as algorithm, algorithmic, hiring, selection, recruitment, fairness perceptions, and perceived fairness. Article abstracts were used to identify the recurring themes of general procedural justice, behavioral control, and social presence. Studies investigating these constructs were therefore included while studies investigating other sub-facets of procedural justice or distributive justice were excluded to limit the scope of the review.

We find that perceptions of general procedural fairness are mixed but when examined at a sub-facet level, perceptions are more negative. Specifically, algorithmic recruitment tools can be perceived as unfair because of their objectivity, which makes it harder for candidates to use impression management and influence decisions, and because there is a lack of human connection. Since research in this field is in a nascent stage, we also suggest a research agenda to better understand perceptions of algorithmic selection assessments, recommending that future research could examine the influence of individual differences and demographics on fairness perceptions, as well as use real-life applicants over hypothetical scenarios.

Perceptions of algorithmic selection assessments

Although a relatively new research area, some studies have investigated reactions to algorithmic selection assessments. Game-based assessments, for example, offer shorter testing times (Atkins et al., 2014; Leutner et al., 2020) and are perceived as more engaging (Lieberoth, 2015), more satisfying (Georgiou and Nikolaou, 2020), and more immersive (Leutner et al., 2020) than their questionnaire-based equivalents. However, much of the research so far has investigated user experience, rather than fairness perceptions.

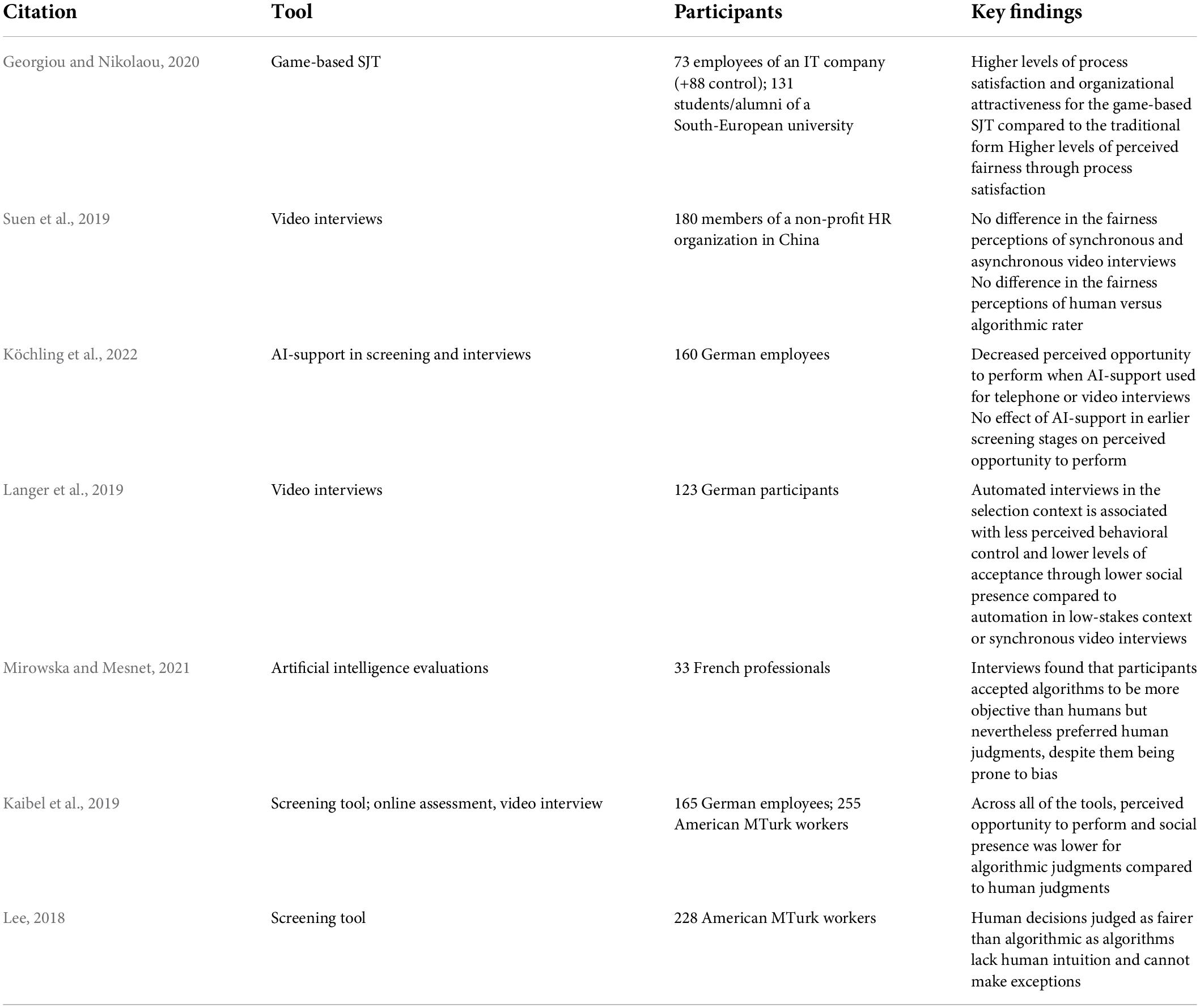

While it is of course important to investigate how candidates feel when they interact with these tools, it is also important to investigate the perceptions of these assessments since negative perceptions might discourage candidates from interacting with these tools in the first place, something that is likely to be a major concern for employers given the current labor shortages (Office for National Statistics, 2021). Indeed, perceptions of organizations can be worsened when they use automatic analysis of interviews, and there is a preference for resumes to be screened by humans rather than algorithms (Wright and Atkinson, 2019). To this end, in the following section, we examine the research into the fairness perceptions of algorithmic recruitment tools. We start by examining perceptions of procedural fairness as a whole before focusing on the sub-facets of behavioral control and social presence. Table 1 provides an overview of the key themes and findings of the seven key studies we discuss.

Algorithmic recruitment and procedural fairness

When examined at an overall level, perceptions of procedural fairness of algorithmic recruitment tools are mixed and can vary by assessment tool. For example, Georgiou and Nikolaou (2020) report that game-based situational judgment tests, which are scored using an algorithm, are viewed as fairer than traditional situational judgment formats. In contrast, Suen et al. (2019) report that although there is a preference for synchronous video interviews compared to asynchronous, fairness perceptions do not vary when asynchronous video interviews are judged by humans compared to an algorithm. More recent research reveals further contrasts, with algorithmic tools being seen as less fair when used in later stages of the recruitment funnel and equally as fair as human ratings when used in earlier stages, such as resume screening (Köchling et al., 2022).

While perceptions of procedural fairness can be examined using a broad definition, as was the approach of Suen et al. (2019) and Georgiou and Nikolaou (2020), they can also be examined at a more granular level. Indeed, procedural fairness can be examined in relation to specific variables including social presence, interpersonal treatment, perceived behavioral control, and consistency (Langer et al., 2019). Examining procedural fairness at this level can provide greater insight into the factors driving fairness perceptions, and how applicants interact with the tools. In the following sub-sections, we examine procedural fairness perceptions in relation to the sub-facets of social presence, referring to the perceived ability to form social connections and experience empathy during the application experience, and perceived behavioral control, referring to the extent to which an individual believes they can control or influence an outcome with their behavior (Langer et al., 2019) to reflect the elements of procedural fairness most frequently investigated in the literature.

Algorithmic recruitment and behavioral control

In contrast to the mixed findings of overall procedural fairness, when procedural fairness is examined at a more granular level, fairness perceptions of algorithmic recruitment tools are more consistent. Several studies report that across different algorithmic recruitment tools, there is less of an opportunity to perform. In other words, participants perceive less behavioral control. Indeed, despite being seen as more objective, algorithmic asynchronous video interviews are seen as offering less opportunity to perform in comparison to human-rated asynchronous video interviews (Kaibel et al., 2019). Likewise, algorithmic resume screening tools are perceived to be less able to judge human character compared to when humans screen resumes (Lee, 2018), despite humans spending less than 10 seconds reading each resume (Ladders, 2018), and being biased against non-white applicants (Bertrand and Mullainathan, 2004). Specifically, applicants perceive it to be unfair that algorithms cannot make exceptions whereas human raters can (Lee, 2018). This indicates that applicants perceive that there is less opportunity for them to perform with algorithmic judgments because of their objectivity, meaning that they are less able to manipulate the algorithm than human raters. Therefore, efforts to make recruitment funnels more objective and standardized, and therefore fairer in terms of a lack of bias, have resulted in them being perceived as more unfair.

A possible explanation for applicants perceiving less opportunity to perform is a lack of knowledge about what the algorithm uses to make judgments, particularly because algorithms are typically considered black-box systems (Cheng and Hackett, 2021), meaning the internals of the model are uninterpretable or unknown (Guidotti et al., 2018). However, providing some explanation of how the algorithms make decisions to candidates does not always improve perceptions (Langer et al., 2018), and can even worsen perceptions of fairness and organizational attractiveness by invoking concerns about privacy due to the data being used to compute judgments (Langer et al., 2021). This suggests that it is not the lack of knowledge about algorithmic decision-making that drives lower perceptions of opportunity to perform with algorithmic tools, but the fact that candidates are more confident in influencing human decision-making since the factors that humans consider when making judgments are more intuitive and superficial compared to datapoints used by algorithms. Therefore, the more subjective nature of human judgments is preferred because participants believe that they are more able to influence the decision of their rater through impression management, which is not uncommon for candidates to use during their application (e.g., Weiss and Feldman, 2006). Since human biases are notoriously difficult to address (Atewologun et al., 2018) and a properly trained algorithm that has been tested for bias is likely to result in fewer sub-group differences (Lepri et al., 2018), impression management is less likely to have an effect on algorithmic decision tools.

Algorithmic recruitment and social presence

Perceptions of social presence are concerned with the extent to which applicants perceive there to be an interpersonal connection facilitated by empathy and warmth during an interaction (Langer et al., 2019). Investigations of this aspect of procedural fairness have found that algorithmically analyzed video interviews and tests of performance are rated as less personable, or lower in social presence, than manual ratings, despite applicants not interacting with a human in either condition (Kaibel et al., 2019). This suggests that algorithmic judgments are perceived as being unfair as they are less able to reflect human values and replicate interpersonal exchanges as an algorithm cannot empathize with candidates like humans can. Indeed, despite acknowledging that algorithmic recruitment tools are more objective than human ratings, and that human ratings can be biased, participants still report perceiving algorithmic recruitment tools as less fair due to the lack of human connection and interaction (Mirowska and Mesnet, 2021). This could explain why algorithmic tools used earlier in the funnel, where there is typically less human interaction, are seen as equally fair to human ratings, while algorithmic tools used later in the funnel, such as during the interview stage, are viewed as less fair than human ratings (Köchling et al., 2022) since there are differences in the level of human connection expected.

Concerns about lack of opportunity to perform are also echoed by human resource (HR) practitioners who believe that algorithms have artificial instead of human values. This is because the relationship between humans is very different to the relationship between humans and computers as the latter can become gamified and lack sincerity (Fritts and Cabrera, 2021). Removal of or limiting the human element in the recruitment process also has implications for the connections that recruiters can form with candidates (Li et al., 2021), particularly if much of the process is automated and recruiters are only involved in making the final decision based on algorithmic recommendations.

A research agenda

While the findings discussed above are an important step in the right direction toward understanding perceptions of algorithmic recruitment tools, this area of research is still in its infancy; the studies have a number of limitations, and additional factors that might influence perceptions remain to be explored. Indeed, a major limitation of these studies is that they use hypothetical scenarios instead of real-life applicants. Although Langer et al. (2019) made some progress toward this by framing the video interviews they used in their study as high-stakes (selection scenario) or low-stakes (training scenario), there is still a lack of research using real-life applicants. We therefore propose that future studies should examine perceptions among real-life applicants, perhaps by partnering with a commercial provider assisting an employer implementing automated approaches for the first time. This would allow between-group comparisons to take place, where perceptions of applicants completing the traditional process and the newly introduced automated process could be compared.

Existing research also does little to investigate whether perceptions of automated tools are influenced by demographic or neurological differences. To this end, we propose that future studies could investigate whether age influences perceptions of fairness since technology self-efficacy decreases with age (Hauk et al., 2018; Ellison et al., 2020), meaning that older applicants may perceive particularly low levels of fairness. Future research could also examine whether fairness perceptions of algorithmic recruitment tools differ by racial group. This is because both human and algorithmic judgments may negatively impact non-white candidates: humans are notorious for being biased when screening resumes (Bertrand and Mullainathan, 2004), while facial recognition (Buolamwini, 2018) and voice recognition technologies (Bajorek, 2019), which are used during analysis of video interviews, are less accurate for darker-skinned individuals. Future studies could, therefore, investigate whether human or algorithmic judgments are more favored among non-white applicants, given that there is the potential for discrimination with both avenues. Additionally, perceptions among neurodivergent populations, such as autistic applicants, could also be examined, particularly since much of the research investigating neurodivergence and employment relates to support and coaching during job search, rather than experiences with pre-employment tests (e.g., Baldwin et al., 2014; Fontechia et al., 2019). Research that has examined autism in relation to pre-employment tests has produced mixed findings, with one battery of game-based cognitive ability assessments resulting in no significant difference in the performance of autistic and neurotypical respondents and the second resulting in neurotypical respondents performing better than autistic respondents (Willis et al., 2021).
To extend these findings, further research could investigate the fairness perceptions of autistic candidates instead of performance, exploring how they may or may not benefit from the social element being removed or limited. Investigation of these suggested factors would provide important insights into whether certain groups might be disproportionately discouraged from applying to positions where candidates will be evaluated by algorithmic tools.

Individual differences in personality are another area that is underexplored in the currently available research and that might help to explain some of the mechanisms underlying algorithmic skepticism in recruitment. Specifically, the influence of emotional intelligence, Machiavellianism, and the Big Five traits on fairness perceptions might provide some particularly useful insights. This is because emotional intelligence is characterized by being able to control the emotions of others as well as one’s own emotions (Salovey and Mayer, 1990) and is related to the selective presentation of information and behavior (Kilduff et al., 2010), meaning that individuals high in emotional intelligence may feel that there is less opportunity to perform with an algorithmic tool since they cannot manipulate these as easily as humans. Likewise, Machiavellianism is associated with selective self-presentation and manipulation of others to achieve goals (Kessler et al., 2010) and algorithmic recruitment tools are perceived as less sensitive to social cues and less likely to make exceptions (Lee, 2018), which may result in them being disfavored by those high in Machiavellianism. Although these factors are yet to be investigated in the literature, there have been some efforts toward investigating the influence of the Big Five, with Georgiou and Nikolaou (2020) finding no influence of openness to experience on fairness perceptions. However, there is a lack of research to corroborate this finding or to investigate the remaining traits. We, therefore, recommend that future research could investigate the influence of the Big Five traits on perceptions, at both an overall and a facet level.

Finally, future research could examine the influence of explanation on perceptions since although explanations have little effect on organizational attractiveness (Langer et al., 2018) or can in fact worsen perceptions of fairness due to privacy concerns, providing a justification for why the tool is being used can mitigate this (Langer et al., 2021). Further, since negative perceptions of algorithmic recruitment tools can occur when candidates are given explanations about algorithmic tools due to concerns about privacy (Langer et al., 2021), further research could investigate how to overcome these concerns, particularly since concerns about privacy can influence whether or not practitioners are willing to adopt these tools (Guenole et al., forthcoming). The introduction of the Artificial Intelligence Video Interview Act (820 ILCS 42, 2020) and the passing of mandatory bias audits in New York City (Int 1894-2020, 2021) – which require that the features being used by algorithms be disclosed to candidates, facilitating greater informed consent for the use of the tool (Hilliard et al., 2022b) – could provide an opportunity to further examine how the framing of explanations influences fairness perceptions, which might inform practical recommendations regarding explaining tools to candidates.

Conclusion

While applicant reactions to algorithmic selection assessments, particularly game-based assessments, are generally positive (Atkins et al., 2014; Lieberoth, 2015; Georgiou and Nikolaou, 2020; Leutner et al., 2020), the same cannot be said for fairness perceptions. At a general level, fairness perceptions are mixed and are not consistent between assessment tools (Suen et al., 2019; Georgiou and Nikolaou, 2020; Köchling et al., 2022) but when looking at specific aspects of procedural justice, algorithmic tools are perceived as less fair than human ratings. Indeed, candidates perceive that there is less opportunity for behavioral control when assessments are automated compared to when they are judged by humans, meaning that they feel they are given less chance to perform and manipulate the raters to influence them toward a positive judgment (Lee, 2018; Kaibel et al., 2019). Candidates also perceive that there is less social presence when recruitment processes are automated (Kaibel et al., 2019; Mirowska and Mesnet, 2021), a view that is also shared by HR professionals (Fritts and Cabrera, 2021; Li et al., 2021). However, research into fairness perceptions of algorithmic recruitment tools is still in its infancy and there is scope for further investigations, which could help to inform how fairness perceptions might be improved, particularly for groups that are already underrepresented in organizations and application processes. As such, we suggest a research agenda for exploring this further, recommending that future studies should use real-life applicants and could investigate the role of individual differences in personality and emotional intelligence as well as age and race in influencing fairness perceptions, and how explanations of the algorithms should be framed. 
Given the current labor market shortages (Office for National Statistics, 2021) and the importance of perceptions of an organization’s recruitment process in influencing whether candidates accept a job offer (Hausknecht et al., 2004), improving fairness perceptions of algorithmic recruitment tools has significant implications for both candidates and organizations and is, therefore, an important area for further investigation.

Author contributions

AH was responsible for writing the first draft of the manuscript. FL and NG were responsible for writing and editing the manuscript. FL supervised the manuscript. All authors were responsible for conceptualization.

Conflict of Interest

AH and FL were employed by Holistic AI and HireVue, respectively.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

820 ILCS 42 (2020). Available online at: https://www.ilga.gov/legislation/ilcs/ilcs3.asp?ActID=4015&ChapterID=68 (accessed May 9, 2022).

Albert, E. T. (2019). AI in talent acquisition: a review of AI-applications used in recruitment and selection. Strateg. HR Rev. 18, 215–221. doi: 10.1108/SHR-04-2019-0024

Atewologun, D., Cornish, T., and Tresh, F. (2018). Unconscious Bias Training: An Assessment of the Evidence for Effectiveness. Equality and Human Rights Commission Research Report Series. Available online at: www.equalityhumanrights.com (accessed May 9, 2022).

Atkins, S. M., Sprenger, A. M., Colflesh, G. J. H., Briner, T. L., Buchanan, J. B., Chavis, S. E., et al. (2014). Measuring working memory is all fun and games. Exp. Psychol. 61, 417–438. doi: 10.1027/1618-3169/a000262

Bajorek, J. P. (2019). Voice Recognition Still Has Significant Race And Gender Biases. Available online at: https://hbr.org/2019/05/voice-recognition-still-has-significant-race-and-gender-biases (accessed May 9, 2022).

Baldwin, S., Costley, D., and Warren, A. (2014). Employment activities and experiences of adults with high-functioning autism and Asperger’s disorder. J. Autism Dev. Disord. 44, 2440–2449. doi: 10.1007/s10803-014-2112-z

Bertrand, M., and Mullainathan, S. (2004). Are Emily and Greg more employable than Lakisha and Jamal? A field experiment on labor market discrimination. Am. Econ. Rev. 94, 991–1013. doi: 10.1257/0002828042002561

Buolamwini, J. (2018). Gender shades: intersectional accuracy disparities in commercial gender classification. Proc. Mach. Learn. Res. 81, 1–15.

Cheng, M. M., and Hackett, R. D. (2021). A critical review of algorithms in HRM: definition, theory, and practice. Hum. Resour. Manag. Rev. 31:100698. doi: 10.1016/j.hrmr.2019.100698

Ellison, L. J., McClure Johnson, T., Tomczak, D., Siemsen, A., and Gonzalez, M. F. (2020). Game on! Exploring reactions to game-based selection assessments. J. Manag. Psychol. 35, 241–254. doi: 10.1108/JMP-09-2018-0414

Fontechia, S. A., Miltenberger, R. G., Smith, T. J., and Berkman, K. (2019). Evaluating video modeling for teaching professional E-mailing skills in transition-age job seekers with autism. J. Appl. Rehabil. Couns. 50, 73–90. doi: 10.1891/0047-2220.50.1.73

Fritts, M., and Cabrera, F. (2021). AI recruitment algorithms and the dehumanization problem. Ethics Inf. Technol. 23, 791–801. doi: 10.1007/s10676-021-09615-w

Georgiou, K., and Nikolaou, I. (2020). Are applicants in favor of traditional or gamified assessment methods? Exploring applicant reactions towards a gamified selection method. Comput. Hum. Behav. 109:106356. doi: 10.1016/j.chb.2020.106356

Gilliland, S. W. (1993). The perceived fairness of selection systems: an organizational justice perspective. Acad. Manag. Rev. 18, 694–734. doi: 10.5465/amr.1993.9402210155

Guenole, N., Svensson, C., Wille, B., Aloyan, K., and Saville, P. (eds). (2022). “A European perspective on Psychometric Measurement Technology,” in Technology and Measurement Around the Globe, (Cambridge: Cambridge University Press).

Guidotti, R., Monreale, A., Ruggieri, S., Turini, F., Giannotti, F., and Pedreschi, D. (2018). A survey of methods for explaining black box models. ACM Comput. Surv. 51:93. doi: 10.1145/3236009

Hauk, N., Hüffmeier, J., and Krumm, S. (2018). Ready to be a silver surfer? A meta-analysis on the relationship between chronological age and technology acceptance. Comput. Hum. Behav. 84, 304–319. doi: 10.1016/j.chb.2018.01.020

Hausknecht, J. P., Day, D. V., and Thomas, S. C. (2004). Applicant reactions to selection procedures: an updated model and meta-analysis. Pers. Psychol. 57, 639–683. doi: 10.1111/j.1744-6570.2004.00003.x

Hilliard, A., Kazim, E., Bitsakis, T., and Leutner, F. (2022a). Measuring personality through images: validating a forced-choice image-based assessment of the big five personality traits. J. Intell. 10:12. doi: 10.3390/jintelligence10010012

Hilliard, A., Kazim, E., Koshiyama, A., Zannone, S., Trengove, M., Kingsman, N., et al. (2022b). Regulating the robots: NYC mandates bias audits for AI-driven employment decisions. SSRN Electronic J. 14, 1–14. doi: 10.2139/SSRN.4083189

Int 1894-2020 (2021). Available online at: https://legistar.council.nyc.gov/LegislationDetail.aspx?ID=4344524&GUID=B051915D-A9AC-451E-81F8-6596032FA3F9&Options=Advanced&Search (accessed May 9, 2022).

Kaibel, C., Koch-Bayram, I., Biemann, T., and Mühlenbock, M. (2019). “Applicant perceptions of hiring algorithms - Uniqueness and discrimination experiences as moderators,” in Proceedings of the 79th Annual Meeting of the Academy of Management 2019: Understanding the Inclusive Organization (Briarcliff Manor, NY: AoM). doi: 10.5465/AMBPP.2019.210

Kessler, S. R., Bandelli, A. C., Spector, P. E., Borman, W. C., Nelson, C. E., and Penney, L. M. (2010). Re-examining Machiavelli: a three-dimensional model of Machiavellianism in the workplace. J. Appl. Soc. Psychol. 40, 1868–1896. doi: 10.1111/J.1559-1816.2010.00643.X

Kilduff, M., Chiaburu, D. S., and Menges, J. I. (2010). Strategic use of emotional intelligence in organizational settings: exploring the dark side. Res. Organ. Behav. 30, 129–152. doi: 10.1016/j.riob.2010.10.002

Köchling, A., and Wehner, M. C. (2020). Discriminated by an algorithm: a systematic review of discrimination and fairness by algorithmic decision-making in the context of HR recruitment and HR development. Bus. Res. 13, 795–848. doi: 10.1007/s40685-020-00134-w

Köchling, A., Wehner, M. C., and Warkocz, J. (2022). Can I show my skills? Affective responses to artificial intelligence in the recruitment process. Rev. Manag. Sci. 2022, 1–30. doi: 10.1007/s11846-021-00514-4

Ladders (2018). Eye-Tracking Study 2018. Available online at: https://www.theladders.com/static/images/basicSite/pdfs/TheLadders-EyeTracking-StudyC2.pdf (accessed May 9, 2022).

Landers, R. N., Armstrong, M. B., Collmus, A. B., Mujcic, S., and Blaik, J. (2021). Theory-driven game-based assessment of general cognitive ability: design theory, measurement, prediction of performance, and test fairness. J. Appl. Psychol. doi: 10.1037/apl0000954 [Epub ahead of print].

Langer, M., Baum, K., König, C. J., Hähne, V., Oster, D., and Speith, T. (2021). Spare me the details: how the type of information about automated interviews influences applicant reactions. Int. J. Sel. Assess. 29, 154–169. doi: 10.1111/ijsa.12325

Langer, M., König, C. J., and Fitili, A. (2018). Information as a double-edged sword: the role of computer experience and information on applicant reactions towards novel technologies for personnel selection. Comput. Hum. Behav. 81, 19–30. doi: 10.1016/j.chb.2017.11.036

Langer, M., König, C. J., and Papathanasiou, M. (2019). Highly automated job interviews: acceptance under the influence of stakes. Int. J. Sel. Assess. 27, 217–234. doi: 10.1111/ijsa.12246

Laurano, M. (2022). The Power of AI in Talent Acquisition. Available online at: https://www.aptituderesearch.com/wp-content/uploads/2022/03/Apt_PowerofAI_Report-0322_Rev4.pdf (accessed May 9, 2022).

Lee, M. K. (2018). Understanding perception of algorithmic decisions: fairness, trust, and emotion in response to algorithmic management. Big Data Soc. 5:205395171875668. doi: 10.1177/2053951718756684

Lepri, B., Oliver, N., Letouzé, E., Pentland, A., and Vinck, P. (2018). Fair, transparent, and accountable algorithmic decision-making processes: the premise, the proposed solutions, and the open challenges. Philos. Technol. 31, 611–627. doi: 10.1007/s13347-017-0279-x

Leutner, F., Codreanu, S.-C., Liff, J., and Mondragon, N. (2020). The potential of game- and video-based assessments for social attributes: examples from practice. J. Manag. Psychol. 36, 533–547. doi: 10.1108/JMP-01-2020-0023

Li, L., Lassiter, T., Lee, M. K., and Oh, J. (2021). “Algorithmic hiring in practice: recruiter and HR professional’s perspectives on AI use in hiring,” in Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society (New York, NY: Association for Computing Machinery). doi: 10.1145/3461702

Lieberoth, A. (2015). Shallow gamification: testing psychological effects of framing an activity as a game. Games Cult. 10, 229–248. doi: 10.1177/1555412014559978

Mirowska, A., and Mesnet, L. (2021). Preferring the devil you know: potential applicant reactions to artificial intelligence evaluation of interviews. Hum. Resour. Manag. J. 32:12393. doi: 10.1111/1748-8583.12393

Office for National Statistics (2021). Changing Trends and Recent Shortages in the Labour Market, UK - Office for National Statistics. Available online at: https://www.ons.gov.uk/employmentandlabourmarket/peopleinwork/employmentandemployeetypes/articles/changingtrendsandrecentshortagesinthelabourmarketuk/2016to2021 (accessed May 9, 2022).

Salovey, P., and Mayer, J. D. (1990). Emotional Intelligence. Imag. Cogn. Pers. 9, 185–211. doi: 10.2190/DUGG-P24E-52WK-6CDG

Society for Industrial and Organizational Psychology (2018). Principles for the Validation and Use of Personnel Selection Procedures, 5th Edn. Bowling Green, OH: Society for Industrial and Organizational Psychology. doi: 10.1017/iop.2018.195

Suen, H. Y., Chen, M. Y. C., and Lu, S. H. (2019). Does the use of synchrony and artificial intelligence in video interviews affect interview ratings and applicant attitudes? Comput. Hum. Behav. 98, 93–101. doi: 10.1016/j.chb.2019.04.012

Weiss, B., and Feldman, R. S. (2006). Looking good and lying to do it: deception as an impression management strategy in job interviews. J. Appl. Soc. Psychol. 36, 1070–1086. doi: 10.1111/J.0021-9029.2006.00055.X

Willis, C., Powell-Rudy, T., Colley, K., and Prasad, J. (2021). Examining the use of game-based assessments for hiring autistic job seekers. J. Intell. 9:53. doi: 10.3390/JINTELLIGENCE9040053

Wright, J., and Atkinson, D. (2019). The Impact of Artificial Intelligence Within the Recruitment Industry: Defining a New Way of Recruiting. Available online at: https://www.cfsearch.com/wp-content/uploads/2019/10/James-Wright-The-impact-of-artificial-intelligence-within-the-recruitment-industry-Defining-a-new-way-of-recruiting.pdf (accessed May 9, 2022).

Keywords: selection, recruitment, fairness, perceptions, psychometrics, algorithm, machine learning

Citation: Hilliard A, Guenole N and Leutner F (2022) Robots are judging me: Perceived fairness of algorithmic recruitment tools. Front. Psychol. 13:940456. doi: 10.3389/fpsyg.2022.940456

Received: 10 May 2022; Accepted: 05 July 2022;

Published: 25 July 2022.

Edited by:

Luke Treglown, University College London, United Kingdom

Reviewed by:

Vincent F. Mancuso, Massachusetts Institute of Technology, United States

Copyright © 2022 Hilliard, Guenole and Leutner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Airlie Hilliard, ahill015@gold.ac.uk

Airlie Hilliard, Nigel Guenole and Franziska Leutner