Abstract

Much of the debate on the ethics of self-driving cars has revolved around trolley scenarios. This paper instead takes up the political or institutional question of who should decide how a self-driving car drives. Specifically, it asks whether and why passengers should be able to control how their car drives. The paper reviews existing arguments, namely those for passenger ethics settings and those for mandatory ethics settings, and argues that they fail. Although the arguments are not successful, they serve as the basis for formulating desiderata that any approach to regulating the driving behavior of self-driving cars ought to fulfill. The paper then proposes one way of designing passenger ethics settings that meets these desiderata.

Notes

By “self-driving cars,” “autonomous vehicles,” or “automated vehicles” (AV), I understand individually owned passenger vehicles with automation level 4 or higher according to the SAE definition. I concentrate on cars owned by individuals, in contrast to corporate-owned cars.

The nomenclature is from Gogoll and Müller (2017). The distinction between PES and MES depends on whether a passenger can meaningfully control a vehicle’s driving style and macro path planning. The expression “meaningful control” is central to the ethics of robotics.

My discussion here is prompted by comments by a peer reviewer for a different journal.

Tesla’s cost function for path planning minimizes traversal time, collision risk, lateral acceleration, and lateral jerk, the last two serving as measures of comfort (Tesla, 2021). The driving behavior of Teslas is hence governed by deliberately designed properties of this cost function.
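A weighted-sum cost function of this kind can be sketched as follows. This is a hypothetical illustration, not Tesla’s code: the `Trajectory` fields, the weights, and the two candidate paths are assumptions. What the sketch shows is that changing the weights changes which trajectory the planner picks, so the weights themselves encode a driving style.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trajectory:
    """A candidate path summarized by the four cost terms named in the note.

    All numeric values used below are hypothetical.
    """
    name: str
    traversal_time_s: float   # seconds to reach the goal
    collision_risk: float     # estimated probability of a collision
    lateral_accel: float      # peak lateral acceleration, m/s^2 (comfort)
    lateral_jerk: float       # peak lateral jerk, m/s^3 (comfort)


def path_cost(t: Trajectory, w: dict[str, float]) -> float:
    """Weighted sum of the cost terms; lower is better."""
    return (w["time"] * t.traversal_time_s
            + w["risk"] * t.collision_risk
            + w["accel"] * t.lateral_accel
            + w["jerk"] * t.lateral_jerk)


def choose_trajectory(candidates: list[Trajectory], w: dict[str, float]) -> Trajectory:
    """Pick the candidate with the lowest cost under weights w."""
    return min(candidates, key=lambda t: path_cost(t, w))


CANDIDATES = [
    Trajectory("assertive", traversal_time_s=10.0, collision_risk=0.002,
               lateral_accel=3.0, lateral_jerk=2.0),
    Trajectory("cautious", traversal_time_s=14.0, collision_risk=0.0005,
               lateral_accel=1.0, lateral_jerk=0.5),
]

# Two hypothetical weightings: one favoring time, one favoring risk and comfort.
TIME_FIRST = {"time": 1.0, "risk": 1000.0, "accel": 0.5, "jerk": 0.5}
SAFETY_FIRST = {"time": 1.0, "risk": 5000.0, "accel": 2.0, "jerk": 2.0}

if __name__ == "__main__":
    print(choose_trajectory(CANDIDATES, TIME_FIRST).name)    # assertive
    print(choose_trajectory(CANDIDATES, SAFETY_FIRST).name)  # cautious
```

Under the time-first weights the assertive path costs 14.5 against 15.25; under the safety-first weights it costs 30.0 against 19.5, so the same planner drives differently.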

Technical and normative issues are not independent: Technological choices constrain the ethics of a system. This is an important insight in the value-alignment literature (cf. Gabriel, 2020), of which the debate on the ethics of self-driving cars can be seen as a part.

Things are actually more complicated because it is not clear whose proxy the cars ought to be—there is thus a “moral proxy problem” (Thoma, 2022). Depending on whether cars are proxies for individuals or aggregates (such as developers or regulators), they should make risky decisions very differently (ibid.).

Of course, there could be a collective decision in favor of PES; but this is not how PES are usually defended.

I take the name for this argument from the title of a paper by Bonnefon et al. (2016), who present the empirical finding that motivates the argument I present here. (The main idea of the argument is also called the “ethical opt-out problem” (Bonnefon et al., 2020).) To avoid misattribution, however, I should note that the argument I present here is not theirs. The argument is hinted at by Contissa et al. (2017, p. 367), who write that “[i]f an impartial (utilitarian) ethical setting is made compulsory for, and rigidly implemented into, all AVs, many people may refuse to use AVs, even though AVs may have significant advantages, in particular with regard to safety, over human-driven vehicles.” Bonnefon et al. (2020, p. 110) do, however, advance a similar argument. They write: “[I]f people are not satisfied with the ethical principles that guide moral algorithms, they will simply opt out of using these algorithms, thus nullifying all their expected benefits.”

Similarly, Ryan (2020) writes: “Very few people would buy [a self-driving car] if they prioritised the lives of others over the vehicle’s driver and passengers.”

The social dilemma argument is motivated by an empirical finding: Although a majority of people agree that a driving style that maximizes overall welfare or health in a population is the preferable driving style from a moral point of view, many people would not actually want to use or buy a vehicle that drives in this way (Bonnefon et al., 2016; Gill, 2021). This is the social dilemma.

What I describe is only an extreme version of an egoistic car. In fact, as has been argued, there could be a continuum of settings ranging from egoistic to altruistic cars (Contissa et al., 2017).
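One way to picture such a continuum, loosely in the spirit of the “ethical knob” of Contissa et al. (2017) but not their formalism, is a single weight k between 0 and 1 on expected harm to occupants, with 1 − k on expected harm to others: k = 1 is fully egoistic, k = 0 fully altruistic, and k = 0.5 impartial. The `Maneuver` type, the two options, and the harm numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    name: str
    harm_to_occupants: float  # expected harm, hypothetical units
    harm_to_others: float     # expected harm, hypothetical units


def weighted_harm(m: Maneuver, k: float) -> float:
    """k = 1 counts only occupants (egoistic); k = 0 only others (altruistic)."""
    if not 0.0 <= k <= 1.0:
        raise ValueError("k must lie in [0, 1]")
    return k * m.harm_to_occupants + (1.0 - k) * m.harm_to_others


def choose_maneuver(options: list[Maneuver], k: float) -> Maneuver:
    """Select the maneuver with the lowest weighted expected harm."""
    return min(options, key=lambda m: weighted_harm(m, k))


# Hypothetical options: protect others at the occupants' expense, or vice versa.
OPTIONS = [
    Maneuver("swerve", harm_to_occupants=0.6, harm_to_others=0.0),
    Maneuver("stay", harm_to_occupants=0.0, harm_to_others=0.9),
]

if __name__ == "__main__":
    for k in (0.0, 0.5, 0.7, 1.0):
        print(k, choose_maneuver(OPTIONS, k).name)
```

With these numbers the choice flips at k = 0.6, where 0.6k = 0.9(1 − k): below it the car swerves, above it the car stays on course.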

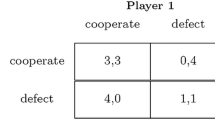

A prisoner’s dilemma (PD) is a two-person symmetric game with two pure strategies, “cooperate” and “defect”, in which the payoffs of the four outcomes satisfy the condition T > R > P > S; that is, the temptation payoff for defecting against a cooperator (T) exceeds the reward for mutual cooperation (R), which exceeds the punishment for mutual defection (P), which in turn exceeds the so-called sucker payoff for cooperating with a defector (S).
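The defining condition can be checked mechanically. The sketch below uses the conventional illustrative payoffs T = 5, R = 3, P = 1, S = 0 (an assumption; any values satisfying T > R > P > S would do) and confirms that defecting is the better reply to either move, even though mutual defection leaves both players worse off than mutual cooperation.

```python
def payoff(me: str, other: str, T: float, R: float, P: float, S: float) -> float:
    """Row player's payoff; moves are 'C' (cooperate) or 'D' (defect)."""
    table = {("C", "C"): R, ("C", "D"): S, ("D", "C"): T, ("D", "D"): P}
    return table[(me, other)]


def is_prisoners_dilemma(T: float, R: float, P: float, S: float) -> bool:
    """The ordering T > R > P > S from the definition."""
    return T > R > P > S


def defection_dominates(T: float, R: float, P: float, S: float) -> bool:
    """True if 'D' pays strictly more than 'C' whatever the other player does."""
    return all(payoff("D", o, T, R, P, S) > payoff("C", o, T, R, P, S)
               for o in ("C", "D"))


if __name__ == "__main__":
    T, R, P, S = 5, 3, 1, 0  # illustrative payoffs (assumed)
    print(is_prisoners_dilemma(T, R, P, S))   # True
    print(defection_dominates(T, R, P, S))    # True
    # Mutual defection is worse for both than mutual cooperation:
    print(payoff("D", "D", T, R, P, S) < payoff("C", "C", T, R, P, S))  # True
```

Dominance alone only needs T > R and P > S; the full ordering additionally guarantees R > P, which is what makes mutual defection collectively worse.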

This is acknowledged by some (Bonnefon et al., 2016).

Respondents in China would find it “tolerable” if self-driving cars were four to five times as safe as human drivers and “acceptable” if they were safer by one to two orders of magnitude (Liu et al., 2019).

For context: These are data from US participants, who can be expected to have relatively unfavorable attitudes towards AVs compared to participants in India or China. A 2014 study found that only 14% and 22% of respondents in the UK and the US, respectively, held very positive attitudes towards automated vehicles, compared to 46% and 50% in India and China (Schoettle & Sivak, 2014).

The Kelley Blue Book calls these “value shoppers” (KBB Editors, 2022).

This is not a crucial assumption: Even if nominal insurance costs were higher, especially in the short term, policy could lower them to make self-driving cars attractive (Ravid, 2014).

Moreover, it would likely take decades to accumulate enough driving exposure to measure (as opposed to simulate or estimate) the safety of self-driving cars (Kalra & Paddock, 2016).
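The scale of the problem can be illustrated with a zero-failure demonstration calculation along the lines of Kalra and Paddock (2016): if a fleet drives n miles without a fatality, one can claim with confidence C that the fatality rate is below r once (1 − r)^n ≤ 1 − C, that is, n ≥ ln(1 − C) / ln(1 − r). The benchmark rate, fleet size, and average speed below are assumptions chosen for illustration, not figures taken from that report.

```python
import math


def miles_needed(rate_per_mile: float, confidence: float) -> float:
    """Fatality-free miles needed to claim, at the given confidence, that the
    true fatality rate is below rate_per_mile (binomial model, zero failures)."""
    return math.log(1.0 - confidence) / math.log1p(-rate_per_mile)


def years_needed(miles: float, fleet_size: int, avg_mph: float,
                 hours_per_day: float = 24.0) -> float:
    """Years for a fleet driving around the clock to accumulate `miles`."""
    miles_per_year = fleet_size * avg_mph * hours_per_day * 365
    return miles / miles_per_year


if __name__ == "__main__":
    benchmark = 1e-8  # assumed: about 1 fatality per 100 million miles
    for label, rate in (("match benchmark", benchmark),
                        ("halve benchmark", benchmark / 2)):
        n = miles_needed(rate, confidence=0.95)
        yrs = years_needed(n, fleet_size=100, avg_mph=25.0)  # assumed fleet
        print(f"{label}: {n:.2e} miles, {yrs:.1f} years")
```

Under these assumptions, demonstrating parity with the benchmark takes roughly 14 years and demonstrating half the benchmark rate roughly 27 years; the required miles scale inversely with the target rate, and a larger fleet shortens the time only proportionally.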

I concentrate on this argument because it is recent and the best developed.

By “best interest of society” the authors mean that traffic injuries and fatalities are minimized in a given population.

This differs from the social dilemma argument, which assumes that purchasing decisions, rather than traffic itself, are a PD.

I write “emerge” and “stable” to indicate that the game is played repeatedly. Even if players do not cooperate in one-shot games, the prospects for achieving widespread cooperation look much better when the PD is played repeatedly.
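Why repetition helps can be seen in a small simulation of the iterated PD, using the well-known tit-for-tat strategy discussed by Axelrod (2009) and the illustrative payoffs T = 5, R = 3, P = 1, S = 0 (an assumption). The strategy functions and the `play` helper are hypothetical names for this sketch.

```python
from typing import Callable

Move = str  # "C" or "D"
Strategy = Callable[[list[Move], list[Move]], Move]

# (row payoff, column payoff) with illustrative T=5, R=3, P=1, S=0 (assumed)
PAYOFFS = {("C", "C"): (3, 3), ("C", "D"): (0, 5),
           ("D", "C"): (5, 0), ("D", "D"): (1, 1)}


def tit_for_tat(mine: list[Move], theirs: list[Move]) -> Move:
    """Cooperate first, then copy the partner's previous move."""
    return theirs[-1] if theirs else "C"


def always_defect(mine: list[Move], theirs: list[Move]) -> Move:
    return "D"


def play(a: Strategy, b: Strategy, rounds: int) -> tuple[int, int]:
    """Total scores of a and b over the given number of rounds."""
    hist_a: list[Move] = []
    hist_b: list[Move] = []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        pay_a, pay_b = PAYOFFS[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    for rounds in (1, 10):
        print(rounds,
              play(tit_for_tat, tit_for_tat, rounds),    # cooperate with TFT
              play(always_defect, tit_for_tat, rounds))  # defect against TFT
```

Against a tit-for-tat partner, defecting pays in a single round (5 versus 3), but over ten rounds it earns 14 against 30 for sustained cooperation, because the partner retaliates from the second round on.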

It could be said that the traffic game is embedded in other games within the political structure.

Of course, MES could also incorporate a concern for pluralism. But, arguably, PES are more responsive to occupants’ preferences. Under PES, the average distance between a car’s behavior and its occupants’ preferences will likely be smaller than under MES.
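The claim about average distance can be illustrated with hypothetical one-dimensional preferences on a dial from 0 to 1. MES is modeled as one setting for everyone (here the median, which minimizes the mean absolute distance for any single setting); PES as each occupant choosing their own setting, optionally within a permitted range. The preference values, the range, and the function names are assumptions.

```python
from statistics import median


def mean_distance(settings: list[float], preferences: list[float]) -> float:
    """Average absolute gap between each car's setting and its occupant's preference."""
    return sum(abs(s - p) for s, p in zip(settings, preferences)) / len(preferences)


def mes_settings(preferences: list[float]) -> list[float]:
    """Mandatory setting: one value (here the median) imposed on every car."""
    m = median(preferences)
    return [m] * len(preferences)


def pes_settings(preferences: list[float], lo: float = 0.0, hi: float = 1.0) -> list[float]:
    """Passenger settings: each occupant picks their preference, clamped to [lo, hi]."""
    return [min(max(p, lo), hi) for p in preferences]


if __name__ == "__main__":
    prefs = [0.1, 0.2, 0.4, 0.5, 0.9]  # hypothetical occupants
    print(round(mean_distance(mes_settings(prefs), prefs), 3))             # 0.22
    print(round(mean_distance(pes_settings(prefs), prefs), 3))             # 0.0
    print(round(mean_distance(pes_settings(prefs, 0.2, 0.8), prefs), 3))   # 0.04
```

Even when PES are restricted to a permitted range, the gap stays small because only occupants with preferences outside the range are moved, whereas a single mandatory setting moves nearly everyone.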

Another illustration of this conflict between others’ interests and your own is, of course, found in trolley cases and collision scenarios such as the Tunnel Problem, in which a car must choose between running over a pedestrian and running into the wall of a tunnel (Millar, 2014a).

By “mobility” I understand the time required to get to a destination. By “safety” I understand the absence of risk, where risk is defined as a function of the probability of a hazardous event and the harm it causes to the occupants and to others. It should be noted that I understand both “mobility” and “safety” impartially, as everyone’s mobility and safety and not just those of vehicle occupants.
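The note leaves open which function combines probability and harm. A common choice, assumed here, is expected harm: for each hazardous event, its probability times the harm it would cause, summed over events. Counting harm to occupants and to others alike reflects the impartial reading in the note. The `Hazard` type and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hazard:
    """A possible hazardous event with its probability and harms (hypothetical units)."""
    probability: float
    harm_to_occupants: float
    harm_to_others: float


def risk(hazards: list[Hazard]) -> float:
    """Expected harm, counting occupants and others impartially."""
    return sum(h.probability * (h.harm_to_occupants + h.harm_to_others)
               for h in hazards)


if __name__ == "__main__":
    # Hypothetical: passing a cyclist closely now vs. waiting for more room.
    pass_now = [Hazard(probability=0.001, harm_to_occupants=0.0, harm_to_others=10.0)]
    wait = [Hazard(probability=0.0001, harm_to_occupants=0.0, harm_to_others=10.0)]
    print(risk(pass_now), risk(wait))
```

In this example waiting carries a tenth of the risk of passing now but costs time, which is the kind of mobility–safety conflict at issue.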

Assume also that this situation occurs in a location that does not prescribe a minimum lateral distance for safe passing.

Of course, the details of this would have to be worked out by operationalizing these value conflicts and by studying the user interaction design (cf. Thornton et al., 2019).

This is a matter of how the single dial trades off the parameter for the mobility–safety conflict against the parameter for the self-interest–other-interest conflict. How the dial makes this tradeoff (the path of the indifference curve through the space of parameter combinations) is an important question for ethics and design.
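As a hypothetical illustration of what is at stake, suppose the mobility–safety conflict is governed by a parameter alpha in [0, 1] (weight on mobility) and the self-interest–other-interest conflict by a parameter beta in [0, 1] (weight on the occupants’ own interest). A single dial position d must then trace a path through the (alpha, beta) square. Both parameterizations and both paths below are assumptions; they share endpoints but recommend different settings in between.

```python
# Hypothetical parameterization: a dial position d in [0, 1] maps to
# (alpha, beta), where alpha = weight on mobility and
# beta = weight on the occupants' own interest.


def _check(d: float) -> None:
    if not 0.0 <= d <= 1.0:
        raise ValueError("dial position must lie in [0, 1]")


def diagonal_path(d: float) -> tuple[float, float]:
    """Move both parameters together as the dial turns."""
    _check(d)
    return (d, d)


def mobility_first_path(d: float) -> tuple[float, float]:
    """Spend the first half of the dial on mobility, the second on self-interest."""
    _check(d)
    if d <= 0.5:
        return (2 * d, 0.0)
    return (1.0, 2 * d - 1.0)


if __name__ == "__main__":
    for d in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(d, diagonal_path(d), mobility_first_path(d))
```

At the midpoint, the diagonal path sets both parameters to 0.5, while the other path already puts full weight on mobility yet remains fully other-regarding. Which path the dial follows is a design choice, not a technical given.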

Another problem with this objection is that it considers frequency but not stakes. It might be true that there are many opportunities to gain mobility and few to gain safety. But the stakes for safety might be much higher than those for mobility: Safety is about avoiding injuries and physical harm, whereas mobility is only about getting to a destination faster.
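The point can be put as simple arithmetic: what matters is frequency times stakes, not frequency alone. Safety outweighs mobility in total whenever a single safety stake exceeds a single mobility stake by more than the ratio of their frequencies. The frequencies and stakes below are hypothetical and are expressed in a common unit, whose choice is itself a normative assumption.

```python
def total_weight(opportunities: float, stake_each: float) -> float:
    """Aggregate weight of a value: how often it is at stake times how much."""
    return opportunities * stake_each


def break_even_ratio(n_mobility: float, n_safety: float) -> float:
    """How many times larger one safety stake must be than one mobility stake
    for safety to carry equal total weight."""
    return n_mobility / n_safety


def safety_outweighs(n_mobility: float, stake_mobility: float,
                     n_safety: float, stake_safety: float) -> bool:
    return total_weight(n_safety, stake_safety) > total_weight(n_mobility, stake_mobility)


if __name__ == "__main__":
    # Hypothetical: 1000 mobility opportunities vs. 2 safety opportunities per year.
    print(break_even_ratio(1000, 2))               # 500.0
    print(safety_outweighs(1000, 1.0, 2, 5000.0))  # True: 10000 > 1000
    print(safety_outweighs(1000, 1.0, 2, 400.0))   # False: 800 < 1000
```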

Shariff et al. (2017) discuss the importance of “virtue signalling”, though not in the context of PES; they treat it instead as a psychological mechanism to be exploited (in advertising and communication) to increase AV adoption.

References

Alexander, J. M. (2007). The structural evolution of morality. Cambridge University Press.

Arpaly, N. (2004). Unprincipled virtue: An inquiry into moral agency. Oxford University Press.

AutoPacific. (2022). FADS—AutoPacific Insights. AutoPacific. Retrieved August 18, 2022, from https://www.autopacific.com/autopacific-insights/tag/FADS.

Awad, E., Dsouza, S., Bonnefon, J.-F., Shariff, A., & Rahwan, I. (2020). Crowdsourcing moral machines. Communications of the ACM, 63(3), 48–55. https://doi.org/10.1145/3339904

Axelrod, R. (2009). The evolution of cooperation (Revised). Basic Books.

Basl, J., & Behrends, J. (2020). Why everyone has it wrong about the ethics of autonomous vehicles. In Frontiers of engineering: Reports on leading-edge engineering from the 2019 symposium. National Academies Press. https://doi.org/10.17226/25620

Bonnefon, J.-F., Shariff, A., & Rahwan, I. (2016). The social dilemma of autonomous vehicles. Science, 352(6293), 1573–1576. https://doi.org/10.1126/science.aaf2654

Bonnefon, J.-F., Shariff, A., & Rahwan, I. (2020). The moral psychology of AI and the ethical opt-out problem. In S. M. Liao (Ed.), Ethics of artificial intelligence (pp. 109–126). Oxford University Press.

Borenstein, J., Herkert, J. R., & Miller, K. W. (2017). Self-driving cars and engineering ethics: the need for a system level analysis. Science and Engineering Ethics. https://doi.org/10.1007/s11948-017-0006-0

Bouton, M., Nakhaei, A., Fujimura, K., & Kochenderfer, M. J. (2018). Scalable decision making with sensor occlusions for autonomous driving. In 2018 IEEE international conference on robotics and automation (ICRA) (pp. 2076–2081). https://doi.org/10.1109/ICRA.2018.8460914

Brändle, C., & Schmidt, M. W. (2021). Autonomous driving and public reason: A Rawlsian approach. Philosophy & Technology, 34(4), 1475–1499. https://doi.org/10.1007/s13347-021-00468-1

Choi, J. K., & Ji, Y. G. (2015). Investigating the importance of trust on adopting an autonomous vehicle. International Journal of Human-Computer Interaction, 31(10), 692–702. https://doi.org/10.1080/10447318.2015.1070549

Cohen, T., & Cavoli, C. (2019). Automated vehicles: Exploring possible consequences of government (non)intervention for congestion and accessibility. Transport Reviews, 39(1), 129–151. https://doi.org/10.1080/01441647.2018.1524401

Contissa, G., Lagioia, F., & Sartor, G. (2017). The ethical knob: Ethically-customisable automated vehicles and the law. Artificial Intelligence and Law, 25(3), 365–378. https://doi.org/10.1007/s10506-017-9211-z

Crawford, K., & Calo, R. (2016). There is a blind spot in AI research. Nature News, 538(7625), 311. https://doi.org/10.1038/538311a

Cunneen, M., Mullins, M., Murphy, F., Shannon, D., Furxhi, I., & Ryan, C. (2020). Autonomous vehicles and avoiding the trolley (dilemma): Vehicle perception, classification, and the challenges of framing decision ethics. Cybernetics and Systems, 51(1), 59–80. https://doi.org/10.1080/01969722.2019.1660541

Dietrich, M., & Weisswange, T. H. (2019). Distributive justice as an ethical principle for autonomous vehicle behavior beyond hazard scenarios. Ethics and Information Technology, 21(3), 227–239. https://doi.org/10.1007/s10676-019-09504-3

Epting, S. (2019). Automated vehicles and transportation justice. Philosophy & Technology, 32(3), 389–403. https://doi.org/10.1007/s13347-018-0307-5

Etzioni, A., & Etzioni, O. (2017). Incorporating ethics into artificial intelligence. The Journal of Ethics, 21(4), 403–418. https://doi.org/10.1007/s10892-017-9252-2

Fraade-Blanar, L., Blumenthal, M. S., Anderson, J. M., & Kalra, N. (2018). Measuring automated vehicle safety. Research reports. Santa Monica, CA: RAND Corporation. Retrieved from https://www.rand.org/pubs/research_reports/RR2662.html.

Gabriel, I. (2020). Artificial intelligence, values, and alignment. Minds and Machines, 30(3), 411–437. https://doi.org/10.1007/s11023-020-09539-2

Gabriel, I. (2022). Towards a theory of justice for artificial intelligence. Dædalus, 151(2), 218–231.

Gerdes, J. C., Thornton, S. M., & Millar, J. (2019). Designing automated vehicles around human values. In G. Meyer & S. Beiker (Eds.), Road vehicle automation 6 (pp. 39–48). Lecture Notes in Mobility. Springer.

Gill, T. (2021). Ethical dilemmas are really important to potential adopters of autonomous vehicles. Ethics and Information Technology, 23(4), 657–673. https://doi.org/10.1007/s10676-021-09605-y

Gogoll, J., & Müller, J. F. (2017). Autonomous cars. In favor of a mandatory ethics setting. Science and Engineering Ethics, 23(3), 681–700. https://doi.org/10.1007/s11948-016-9806-x

Goodall, N. J. (2014). Machine ethics and automated vehicles. In G. Meyer & S. Beiker (Eds.), Road vehicle automation (pp. 93–102). Lecture Notes in Mobility. Springer. https://doi.org/10.1007/978-3-319-05990-7_9

Goodall, N. J. (2016). Away from Trolley Problems and Toward Risk Management. Applied Artificial Intelligence, 30(8), 810–821. https://doi.org/10.1080/08839514.2016.1229922

Goodall, N. J. (2017). From trolleys to risk: Models for ethical autonomous driving. American Journal of Public Health, 107(4), 496–496. https://doi.org/10.2105/AJPH.2017.303672

Goodall, N. J. (2019). More than trolleys. Transfers, 9(2), 45–58. https://doi.org/10.3167/TRANS.2019.090204

Harper, C. D., Hendrickson, C. T., Mangones, S., & Samaras, C. (2016). Estimating potential increases in travel with autonomous vehicles for the non-driving, elderly and people with travel-restrictive medical conditions. Transportation Research Part C: Emerging Technologies, 72(November), 1–9. https://doi.org/10.1016/j.trc.2016.09.003

Himmelreich, J. (2018). Never mind the trolley: The ethics of autonomous vehicles in mundane situations. Ethical Theory and Moral Practice, 21(3), 669–684. https://doi.org/10.1007/s10677-018-9896-4

Himmelreich, J. (2020). Ethics of technology needs more political philosophy. Communications of the ACM, 63(1), 33–35. https://doi.org/10.1145/3339905

JafariNaimi, N. (2017). Our bodies in the trolley’s path, or why self-driving cars must not be programmed to kill. Science, Technology, & Human Values. https://doi.org/10.1177/0162243917718942

Kalra, N., & Paddock, S. M. (2016). Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Research reports. Santa Monica, CA: RAND Corporation. https://doi.org/10.7249/RR1478

KBB Editors. (2022). How to buy a new car in 10 steps. Kelley Blue Book (blog). Retrieved March 23, 2022, from https://www.kbb.com/car-advice/10-steps-to-buying-a-new-car/.

Keeling, G. (2020). Why trolley problems matter for the ethics of automated vehicles. Science and Engineering Ethics, 26(February), 293–307. https://doi.org/10.1007/s11948-019-00096-1

Keeling, G., Evans, K., Thornton, S. M., Mecacci, G., & de Sio, F. S. (2019). Four perspectives on what matters for the ethics of automated vehicles. In G. Meyer & S. Beiker (Eds.), Road vehicle automation 6 (pp. 49–60). Lecture Notes in Mobility. Springer.

Liljamo, T., Liimatainen, H., & Pöllänen, M. (2018). Attitudes and concerns on automated vehicles. Transportation Research Part F: Traffic Psychology and Behaviour, 59(November), 24–44. https://doi.org/10.1016/j.trf.2018.08.010

Lin, P. (2014). Here’s a terrible idea: Robot cars with adjustable ethics settings. Wired. Retrieved August 18, 2014, from https://www.wired.com/2014/08/heres-a-terrible-idea-robot-cars-with-adjustable-ethics-settings/.

Lin, P. (2017). Robot cars and fake ethical dilemmas. Forbes. Retrieved April 3, 2017, from https://www.forbes.com/sites/patricklin/2017/04/03/robot-cars-and-fake-ethical-dilemmas/.

Liu, P., Yang, R., & Xu, Z. (2019). How safe is safe enough for self-driving vehicles? Risk Analysis, 39(2), 315–325. https://doi.org/10.1111/risa.13116

Milakis, D., van Arem, B., & van Wee, B. (2017). Policy and society related implications of automated driving: A review of literature and directions for future research. Journal of Intelligent Transportation Systems, 21(4), 324–348. https://doi.org/10.1080/15472450.2017.1291351

Millar, J. (2014a). Proxy prudence: Rethinking models of responsibility for semi‐autonomous robots. SSRN Scholarly Paper ID 2442273. Rochester, NY: Social Science Research Network. Retrieved from https://papers.ssrn.com/abstract=2442273.

Millar, J. (2014b). You should have a say in your robot car’s code of ethics. Wired. Retrieved September 2, 2014, from https://www.wired.com/2014/09/set-the-ethics-robot-car/.

Millar, J. (2015). Technology as moral proxy: Autonomy and paternalism by design. IEEE Technology and Society Magazine, 34(2), 47–55. https://doi.org/10.1109/MTS.2015.2425612

Millar, J. (2017). Ethics settings for autonomous vehicles. In P. Lin, R. Jenkins, & K. Abney (Eds.), Robot ethics 2.0: From autonomous cars to artificial intelligence (pp. 20–34). Oxford University Press.

Mladenovic, M. N., & McPherson, T. (2016). Engineering social justice into traffic control for self-driving vehicles? Science and Engineering Ethics, 22(4), 1131–1149. https://doi.org/10.1007/s11948-015-9690-9

Moridpour, S., Sarvi, M., & Rose, G. (2010). Lane changing models: A critical review. Transportation Letters, 2(3), 157–173. https://doi.org/10.3328/TL.2010.02.03.157-173

NHTSA. (1995). Synthesis report: Examination of target vehicular crashes and potential ITS countermeasures. DOT HS 808 263. United States Department of Transportation.

NHTSA. (2017). Automated driving systems 2.0: A vision for safety. United States Department of Transportation.

Nunes, A. (2019). Driverless cars: Researchers have made a wrong turn. Nature. https://doi.org/10.1038/d41586-019-01473-3

Nyholm, S. (2018). The ethics of crashes with self-driving cars: A roadmap, I. Philosophy Compass, 13(7), e12507. https://doi.org/10.1111/phc3.12507

Nyholm, S., & Smids, J. (2016). The ethics of accident-algorithms for self-driving cars: An applied trolley problem? Ethical Theory and Moral Practice, 19(5), 1275–1289. https://doi.org/10.1007/s10677-016-9745-2

Nyholm, S., & Smids, J. (2020). Automated cars meet human drivers: Responsible human-robot coordination and the ethics of mixed traffic. Ethics and Information Technology, 22, 335–344. https://doi.org/10.1007/s10676-018-9445-9

O’Connor, M. R. (2019). The fight for the right to drive. The New Yorker. Retrieved April 30, 2019, from https://www.newyorker.com/culture/annals-of-inquiry/the-fight-for-the-right-to-drive.

Rahwan, I. (2018). Society-in-the-loop: Programming the algorithmic social contract. Ethics and Information Technology, 20(1), 5–14. https://doi.org/10.1007/s10676-017-9430-8

Ravid, O. (2014). Don’t sue me, I was just lawfully texting & drunk when my autonomous car crashed into you. Southwestern Law Review, 44(1), 175–208.

Rodríguez-Alcázar, J., Bermejo-Luque, L., & Molina-Pérez, A. (2021). Do automated vehicles face moral dilemmas? A plea for a political approach. Philosophy & Technology, 34(4), 811–832. https://doi.org/10.1007/s13347-020-00432-5

Roy, A. (2018). This is the human driving manifesto. The Drive. Retrieved March 5, 2018, from https://www.thedrive.com/opinion/18952/this-is-the-human-driving-manifesto.

Ryan, M. (2020). The future of transportation: Ethical, legal, social and economic impacts of self-driving vehicles in the year 2025. Science and Engineering Ethics, 26(June), 1185–1208. https://doi.org/10.1007/s11948-019-00130-2

Schoettle, B., & Sivak, M. (2014). Public opinion about self-driving vehicles in China, India, Japan, the U.S., the U.K., and Australia. Technical Report. University of Michigan, Ann Arbor, Transportation Research Institute. Retrieved from http://deepblue.lib.umich.edu/handle/2027.42/109433.

Sezer, V. (2018). Intelligent decision making for overtaking maneuver using mixed observable Markov decision process. Journal of Intelligent Transportation Systems, 22(3), 201–217. https://doi.org/10.1080/15472450.2017.1334558

Shariff, A., Bonnefon, J.-F., & Rahwan, I. (2017). Psychological roadblocks to the adoption of self-driving vehicles. Nature Human Behaviour, 1(10), 694. https://doi.org/10.1038/s41562-017-0202-6

Shariff, A., Bonnefon, J.-F., & Rahwan, I. (2021). How safe is safe enough? Psychological mechanisms underlying extreme safety demands for self-driving cars. Transportation Research Part C: Emerging Technologies, 126(May), 103069. https://doi.org/10.1016/j.trc.2021.103069

Soltanzadeh, S., Galliott, J., & Jevglevskaja, N. (2020). Customizable ethics settings for building resilience and narrowing the responsibility gap: Case studies in the socio-ethical engineering of autonomous systems. Science and Engineering Ethics, 26, 2693–2708. https://doi.org/10.1007/s11948-020-00221-5

Susskind, J. (2018). Future politics: Living together in a world transformed by tech. Oxford University Press.

Tesla. (2021). Tesla AI Day [Video]. YouTube. Retrieved from https://www.youtube.com/watch?v=j0z4FweCy4M.

Thoma, J. (2022). Risk imposition by artificial agents: The moral proxy problem. In S. Vöneky, P. Kellmeyer, O. Müller, & W. Burgard (Eds.), The Cambridge handbook of responsible artificial intelligence: Interdisciplinary perspectives. Cambridge University Press.

Thornton, S. M., Limonchik, B., Lewis, F. E., Kochenderfer, M. J., & Gerdes, J. C. (2019). Towards closing the loop on human values. IEEE Transactions on Intelligent Vehicles. https://doi.org/10.1109/TIV.2019.2919471

Xu, Z., Zhang, K., Min, H., Wang, Z., Zhao, X., & Liu, P. (2018). What drives people to accept automated vehicles? Findings from a field experiment. Transportation Research Part C: Emerging Technologies, 95(October), 320–334. https://doi.org/10.1016/j.trc.2018.07.024

Zimmermann, A., Di Rosa, E., & Kim, H. (2020). Technology can’t fix algorithmic injustice. Boston Review. Retrieved January 9, 2020, from http://bostonreview.net/science-nature-politics/annette-zimmermann-elena-di-rosa-hochan-kim-technology-cant-fix-algorithmic.

Acknowledgements

I am grateful for thoughts and comments I received from Johanna Thoma and Sebastian Köhler, from students at Sonoma State University, from participants and the audience at the Automated Vehicles Symposium 2019 in Orlando, as well as from an anonymous reviewer for this journal.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Himmelreich, J. No wheel but a dial: why and how passengers in self-driving cars should decide how their car drives. Ethics Inf Technol 24, 45 (2022). https://doi.org/10.1007/s10676-022-09668-5

DOI: https://doi.org/10.1007/s10676-022-09668-5