- ¹Faculty of Psychology, Southwest University, Chongqing, China

- ²Institute of Affective Computing and Information Processing, Southwest University, Chongqing, China

- ³Chongqing Brain Science Collaborative Innovation Center, Southwest University, Chongqing, China

- ⁴School of Electronic and Information Engineering of Southwest University, Chongqing, China

- ⁵Chongqing Key Laboratory of Non-linear Circuit and Intelligent Information Processing, Southwest University, Chongqing, China

- ⁶School of Music, Southwest University, Chongqing, China

Tempo is an important musical element that affects humans’ emotional processes when listening to music. However, it remains unclear how tempo and musical training affect individuals’ emotional experience of music. To explore the neural underpinnings of the effects of tempo on music-evoked emotion, music with fast, medium, and slow tempi was collected, and emotional responses and neural activity, measured with functional magnetic resonance imaging (fMRI), were compared between musicians and non-musicians. Behaviorally, musicians perceived higher valence in fast music than did non-musicians. The main effects of group (musicians vs. non-musicians) and tempo were significant, and a near-significant interaction between group and tempo was found. In the arousal dimension, medium-tempo music received the highest mean score of the three tempi; in the valence dimension, the mean scores decreased in order from fast music to slow music to medium music. Functional analyses revealed that musicians showed stronger neural activation than non-musicians in the left inferior parietal lobe (IPL). A comparison of tempi showed stronger activation for fast than slow music in the bilateral superior temporal gyrus (STG), which provides neural evidence corresponding to the highest valence that participants reported for fast music. Medium music showed stronger activation than slow music in the right Heschl’s gyrus (HG), right middle temporal gyrus (MTG), right posterior cingulate cortex (PCC), right precuneus, right IPL, and left STG. Importantly, this study clarifies the connection between music tempo and emotional experience, and its interaction with individuals’ musical training.

Highlights

- Fast music evoked positive emotional valence with activation in the bilateral STG.

- Medium music evoked the strongest emotional arousal and lowest emotional valence.

- Medium music activated right HG, MTG, cingulate gyrus, precuneus, IPL, and left STG.

- Musical training led to differentiated neural activation in the left IPL.

Introduction

As a basic temporal concept in beat perception, tempo is of primary importance in determining listeners’ music-evoked emotion (Hevner, 1937; Melvin, 1964; Gabrielsson and Juslin, 2003; Gagnon and Peretz, 2003) and is also an important feature for recognizing different emotional states using long-term modulation spectrum analysis (Shi et al., 2006).

Music with different tempi can evoke different emotions. Music with a fast tempo has been found to evoke positive emotions, such as happiness, excitement, delight, and liveliness, whereas music with a slow tempo evokes negative emotions, such as sadness, depression, and gravity (Peretz et al., 1998; Balkwill and Thompson, 1999; Juslin and Sloboda, 2001). In comparison, speech, another type of acoustic stimulus, produces almost the opposite emotional effect: fast speech has been judged as less pleasant than slow speech (Ilie and Thompson, 2006). It is thus worth investigating the neural links between musical tempo and its emotional function during music listening; such work can provide a valuable account of music-evoked emotion and promote the use of tempo in regulating music-evoked emotion.

By detecting individuals’ electroencephalogram (EEG) frequencies that correspond to the tempo of music, researchers have found that musical stimuli with different tempi entrain neural changes in the motor and auditory cortices, which are most prominent in the alpha (8–12 Hz) and beta (12.5–18 Hz) ranges (Yuan et al., 2009; Nicolaou et al., 2017). When listeners hear highly arousing, usually fast, music, alpha activity has been found to decrease in the frontal and temporal areas (Basar et al., 1999; Ting et al., 2007), and beta activity has been found to increase in the left temporal lobe and motor area (Höller et al., 2012; Gentry et al., 2013). In functional magnetic resonance imaging (fMRI) studies, music expressing positive emotions has been associated with large activation in the auditory cortices, motor area, and limbic system (Brattico et al., 2011; Koelsch et al., 2013; Park et al., 2014; Bogert et al., 2016). However, no studies have directly compared the neural activity underlying the emotions evoked by music of different tempi, even though tempo is a basic and important acoustic feature in music listening. It can be hypothesized that the positive emotional experience of listening to fast music would be accompanied by strong neural activity in the auditory and motor cortices.

Music with a slow tempo often evokes negative emotions (Balkwill and Thompson, 1999; Cai and Pan, 2007; Hunter et al., 2010); however, the neural mechanism behind this is still unclear. Humans do not always prefer slow to fast tempi, whereas nonhuman primates have been found to prefer slow tempi, possibly because fast tempi resemble their alarm calls, which consist of short broadband bursts repeated at very high rates (McDermott and Hauser, 2007). This implies that humans’ emotional experience of music may depend on the degree of match between musical tempo and physiological rhythms. During sports training, slow music was found to decrease players’ feelings of revitalization and positive engagement (Szabo and Hoban, 2004). Emotional and psychophysiological research has shown that a decrease in tempo leads to decreases in reported arousal and tension and in heart rate variability (Van der Zwaag et al., 2011; Karageorghis and Jones, 2014). Therefore, the emotional effect of slow music may result from its lower acoustic arousal relative to humans’ physiological rhythms, such as heart rate or walking pace, which may produce negative emotional effects with weak activation in the auditory cortex.

Few studies have examined medium-tempo music. Medium-tempo music reduced the rating of perceived exertion (Silva et al., 2016) and was preferred at low and moderate exercise intensities (Karageorghis et al., 2006), which reflects its advantage in optimizing listeners’ physiological load and may be connected with autonomic responses. Given the similarity between medium tempo and humans’ physiological rhythms (∼75 bpm), it may be assumed that medium-tempo music engages the autonomic emotional network more readily than music of other tempi.

Additionally, tempo processing has been linked to individuals’ musical training through enhanced activation in the dorsal premotor cortex, prefrontal cortex (Kim et al., 2004; Chen et al., 2008), and subcortical systems (Strait et al., 2009). Compared with non-musicians, musicians recognize musical emotion more precisely, with stronger frontal theta and alpha activity (Nolden et al., 2017) as well as stronger activation of the auditory system through a complex network covering cortical and subcortical areas (Peretz and Zatorre, 2005; Levitin and Tirovolas, 2009; Levitin, 2012; Zatorre, 2015). Conversely, studies examining musicians’ and non-musicians’ responses to phase and tempo perturbations found that the ability to perceive the beat and rhythm of a musical piece was independent of prior musical training (Large et al., 2002; Geiser et al., 2009). Whether the interaction between tempo and individuals’ emotional experience depends on musical training thus remains controversial and is worth studying.

To investigate how tempo affects humans’ emotional experience and how this effect interacts with musical training, this study explored differences between musicians and non-musicians in the behavioral and neural emotional responses induced by music of different tempi. Tempo is typically measured in beats per minute (bpm) and divided into prestissimo (>200 bpm), presto (168–200 bpm), allegro (120–168 bpm), moderato (108–120 bpm), andante (76–108 bpm), adagio (66–76 bpm), larghetto (60–66 bpm), and largo (40–60 bpm) (Fernández-Sotos et al., 2016). Valence and arousal were chosen as the basic dimensions of listeners’ emotional experience, as they are the most widely used dimensions for representing emotional experience during music listening (Bradley and Lang, 1994). First, music with a fast tempo (>120 bpm; presto and allegro), medium tempo (76–120 bpm; moderato and andante), and slow tempo (60–76 bpm; adagio and larghetto) was chosen to evoke emotion. Second, to obtain neural activation as close as possible to the natural experience of music listening and to avoid activity related to a motor task, we recorded brain activation during passive music listening without any additional body movement or cognitive process. Third, to collect individuals’ evaluations of music-evoked emotion, participants rated valence and arousal soon after the fMRI scanning.
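The tempo bands listed above can be expressed as a small lookup. The sketch below is illustrative only and the function names are hypothetical. Because adjacent bands in the cited scheme share endpoints (for example, 120 bpm belongs to both allegro and moderato), it assumes that a shared endpoint belongs to the slower marking, which keeps 120 bpm in the medium group and 76 bpm in the slow group. Markings outside the six used in the study (largo, prestissimo) map to no group.

```python
from typing import Optional

# Inclusive upper bounds (bpm) of each marking, after Fernández-Sotos et al. (2016).
# Assumption: a shared endpoint (e.g., 120 bpm) is assigned to the slower marking.
_MARKINGS = [
    (60, "largo"),
    (66, "larghetto"),
    (76, "adagio"),
    (108, "andante"),
    (120, "moderato"),
    (168, "allegro"),
    (200, "presto"),
]

# The study's three tempo groups, defined by the markings they contain.
_GROUPS = {
    "presto": "fast", "allegro": "fast",
    "moderato": "medium", "andante": "medium",
    "adagio": "slow", "larghetto": "slow",
}


def tempo_marking(bpm: float) -> Optional[str]:
    """Return the Italian tempo marking for a tempo in beats per minute."""
    if bpm < 40:
        return None  # slower than largo: not covered by the scheme
    for upper, name in _MARKINGS:
        if bpm <= upper:
            return name
    return "prestissimo"  # anything above 200 bpm


def tempo_group(bpm: float) -> Optional[str]:
    """Map a tempo onto the fast/medium/slow groups (None if the marking was not used)."""
    marking = tempo_marking(bpm)
    return _GROUPS.get(marking) if marking is not None else None
```

For example, `tempo_group(132)` returns `"fast"` (allegro), while `tempo_group(50)` returns `None` because largo excerpts were not part of the stimulus set.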

We hypothesized that musicians would show stronger emotional experiences and neural activity than non-musicians. Music with a fast tempo would evoke the most positive emotional reactions, with activation in temporal and motor processing areas. Music with a medium tempo, which is close to humans’ physiological rhythms, would evoke a strong emotional response by entraining autonomic neural activity involved in emotion processing. Music with a slow tempo would receive the lowest valence and arousal ratings from participants.

Materials and Methods

Participants

Forty-eight healthy adult volunteers (mean age 20.77 ± 1.87 years, 25 women, all right-handed) took part in the study after providing written informed consent. All volunteers were free of contraindications for MRI scanning. Twenty-one were musicians with at least 7 years of vocal or instrumental musical experience. Twenty-seven were non-musicians with no more than 3 years of musical training, apart from general education classes before high school. No participant in either group had prior experience with a task similar to that used in the present study. None had a history of hearing loss or neurological or psychiatric disorders, and none were taking prescription drugs or consuming alcohol at the time of the experiment. Each participant received 50 RMB after the experiment. The study was approved by the Ethics Committee of Southwest University in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki). The privacy rights of all participants were observed and protected.

Stimuli

Instrumental music in three tempo categories (fast: >120 bpm, presto and allegro; medium: 76–120 bpm, moderato and andante; slow: 60–76 bpm, adagio and larghetto) was selected by three music professors. Each tempo category contained 10 pieces. All excerpts were serious non-vocal music, chosen to express salient emotions and to represent the most important instrumental formats (e.g., solo, chamber, and orchestral music). One month before the fMRI experiment, the 30 pieces were rated for valence, arousal, and familiarity by a separate group of 15 musicians and 20 non-musicians, classified with the same criteria as the main sample (musicians: at least 7 years of vocal or instrumental musical experience; non-musicians: no more than 3 years of musical training, apart from general education classes before high school). During the assessment, each rater sat in a separate quiet room with a Dell computer and listened to the music through headphones. After listening to the whole series of music at about 60 dB, raters scored valence, arousal, and familiarity separately on three 7-point scales (1 = very negative, low arousal, unfamiliar; 7 = very positive, high arousal, familiar). Twelve pieces matched for familiarity, with higher arousal within the same tempo category or with more clearly positive/negative valence, were then selected for the formal experiment (Supplementary Table S1). Each tempo category contained four non-vocal compositions, including both Chinese and non-Chinese works. The first 60–90 s of each of the 12 selected pieces was excerpted to fit the system requirements of E-prime 2.0, and 1.5-s fade-in and fade-out ramps were applied.
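Excerpting a piece and adding fade ramps can be sketched as follows. This is a hypothetical helper, not the authors’ preparation pipeline: it assumes mono audio already decoded into a list of float samples, and it assumes linear gain ramps, since the text specifies only the 1.5-s ramp duration and not its shape. E-prime 2.0 format requirements are not modeled.

```python
from typing import List, Sequence


def excerpt_with_fades(samples: Sequence[float], sample_rate: int,
                       duration_s: float, ramp_s: float = 1.5) -> List[float]:
    """Keep the first `duration_s` seconds and apply linear fade-in/fade-out ramps.

    Assumptions (not from the source): mono float samples, linear ramps.
    """
    n = min(len(samples), int(round(duration_s * sample_rate)))
    out = list(samples[:n])
    # Cap the ramp length so the fade-in and fade-out never overlap.
    ramp = min(int(round(ramp_s * sample_rate)), n // 2)
    for i in range(ramp):
        gain = i / ramp            # rises linearly from 0 toward 1
        out[i] *= gain             # fade-in at the start
        out[n - 1 - i] *= gain     # mirrored fade-out at the end
    return out
```

For a 90-s excerpt at 44.1 kHz one would call `excerpt_with_fades(samples, 44100, 90.0)`; the ramp then spans 66,150 samples at each end.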

Experiment

Before the fMRI experiment, participants were asked to remain calm and to complete a practice task outside the scanner room, which lasted about 1 min. In the practice task, participants listened to three 20-s music clips and had to detect, without missing any, a specific click-click sound lasting 2 s (with 0.25-s fade-in and fade-out ramps) embedded among them. In the formal experiment, this detection task was designed to prevent participants from becoming inattentive or falling asleep in the scanner.

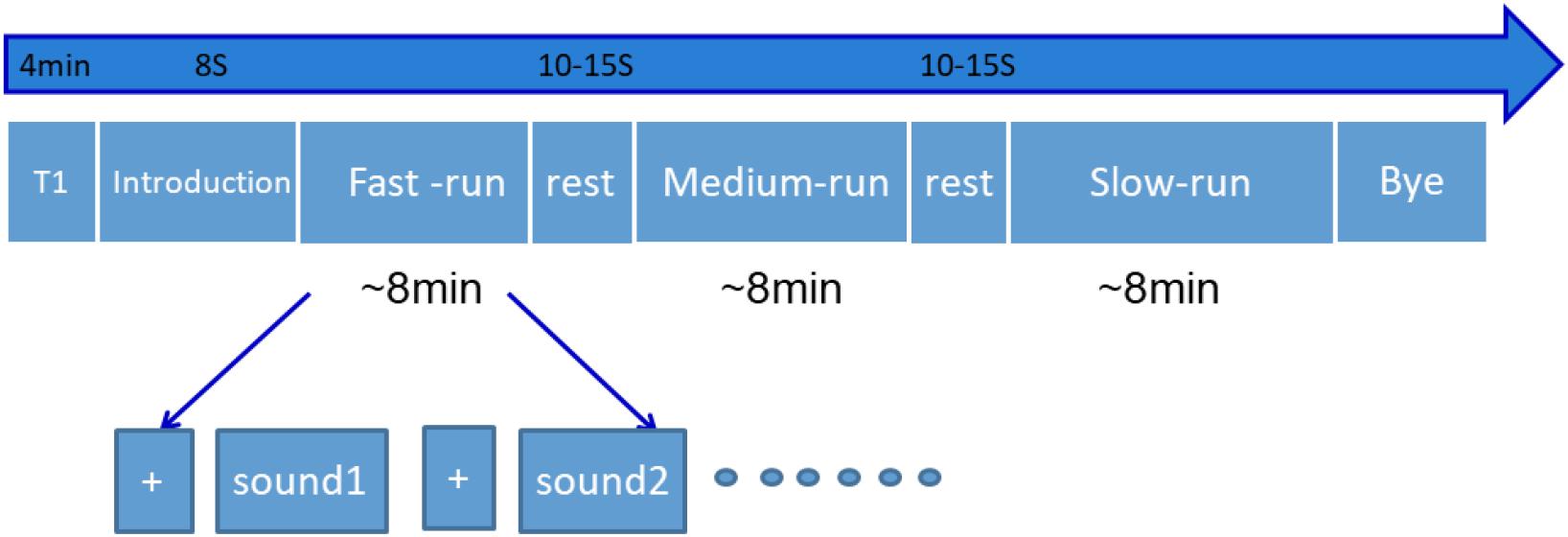

All participants underwent an fMRI scan. The procedure is shown in Figure 1. The order of the three runs (fast-, medium-, and slow-tempo music) was pseudo-randomized across participants. Each run contained four musical excerpts of the same tempo category and two click-click detection sounds, presented in random order and separated by five 4-s intervals; a detection sound was presented at the beginning of each run. Participants could rest for 10–15 s between runs. During scanning, all participants were asked to relax, to remain still, and to listen to the music attentively with their eyes closed. Whenever the detection sound appeared, participants responded by pressing a button on a response box, and their responses were recorded by the experimenter outside the scanner room. If a participant missed a detection sound during a run, they were reminded to concentrate during the rest period. After the scan, participants were interviewed, and all but two reported the experiment as relaxing and stress-free. The scanning lasted approximately 28 min.

Post-ratings of valence and arousal for the same 12 pieces were collected soon after scanning. Participants began the post-rating only after they understood the two dimensions: valence, the amount of pleasure experienced, ranging from negative to positive; and arousal, the autonomic reaction associated with an experience, ranging from weak to strong (Liu et al., 2016). The two participants who reported experiencing stress after the fMRI scan completed their behavioral ratings on the day after their scans. After each piece was played, participants rated it within 4 s on both the arousal and valence dimensions, using two 7-point scales (1 = very negative or low arousal; 7 = very positive or high arousal), based on their subjective music-evoked emotion. The behavioral ratings were completed in a dedicated room, where each participant sat in front of a computer and judged each piece, played at a volume of 60 decibels (dB). Two rest intervals of 20–30 s were inserted pseudo-randomly. The post-rating lasted about 30 min. Statistical analyses were conducted using SPSS 20.0. Before and after the neural and behavioral experiments, state anxiety was assessed with the State-Trait Anxiety Inventory (Spielberger et al., 1970).

fMRI Acquisition

Images were acquired with a Siemens 3T scanner (Siemens Magnetom Trio TIM, Erlangen, Germany). Functional images were collected with an echo-planar imaging sequence, and T2*-weighted images were recorded in each run [TE = 30 ms; TR = 2000 ms; flip angle = 90°; field of view (FOV) = 220 mm × 220 mm; matrix size = 64 × 64; 32 interleaved 3-mm-thick slices; in-plane resolution = 3.4 mm × 3.4 mm; interslice skip = 0.99 mm]. T1-weighted images were collected with 176 slices of 1-mm thickness and an in-plane resolution of 0.98 mm × 0.98 mm (TR = 1900 ms; TE = 2.52 ms; flip angle = 90°; FOV = 250 mm × 250 mm). The functional images were preprocessed with SPM8 (Wellcome Department of Cognitive Neurology, London, United Kingdom). Slice timing correction was applied, and the data were realigned to estimate and correct for the six head-movement parameters. To allow the magnetization to reach a steady state, the first 10 images were discarded. The images were normalized to Montreal Neurological Institute (MNI) space with 3 mm × 3 mm × 3 mm voxels and then spatially smoothed with a Gaussian kernel of 6 mm × 6 mm × 6 mm full width at half maximum (FWHM).
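As a worked check on the acquisition parameters (our own arithmetic from the values listed above), the in-plane voxel size follows from the field of view and matrix size, the slab coverage from the slice thickness and interslice skip (assuming a gap only between adjacent slices), and the discarded steady-state period from the TR:

$$
\begin{aligned}
\Delta_{xy} &= \frac{\mathrm{FOV}}{N} = \frac{220\ \mathrm{mm}}{64} \approx 3.44\ \mathrm{mm},\\
z_{\mathrm{coverage}} &= 32 \times 3\ \mathrm{mm} + 31 \times 0.99\ \mathrm{mm} \approx 126.7\ \mathrm{mm},\\
t_{\mathrm{discarded}} &= 10 \times \mathrm{TR} = 10 \times 2\ \mathrm{s} = 20\ \mathrm{s}.
\end{aligned}
$$

The first value agrees with the reported in-plane resolution of 3.4 mm after rounding.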

The six head-movement parameters obtained from realignment were then inspected, and participants whose head movement exceeded 2.5 mm were excluded. After preprocessing, data from 7 participants were excluded and data from 41 participants were retained; the final sample thus consisted of 16 musicians and 25 non-musicians. The fMRI data were analyzed at two levels. At the first (subject) level, four event types were defined: fast-, medium-, and slow-tempo trials, and detection responses, with onset times set to the presentation of the target sounds. At the second (group) level, a full factorial analysis of variance was used to compare the three tempo conditions between the two groups. To determine significant activation for each tempo contrast, a false discovery rate (FDR)-corrected height (intensity) threshold of p = 0.05 and an extent threshold of 20 voxels were used.
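The head-motion exclusion rule can be sketched as a simple filter over each participant’s realignment parameters. This is an illustrative sketch, not the authors’ script. It assumes SPM’s convention that each row of the realignment output (the `rp_*.txt` file) holds three translations in mm followed by three rotations in radians, and, because the text states the threshold only in millimetres, it assumes the 2.5 mm limit was applied to the translations alone. The function names and participant IDs are hypothetical.

```python
from typing import Dict, List, Sequence

MAX_TRANSLATION_MM = 2.5  # exclusion threshold stated in the text


def exceeds_motion(rp_rows: Sequence[Sequence[float]],
                   limit_mm: float = MAX_TRANSLATION_MM) -> bool:
    """True if any volume's x/y/z translation is more than `limit_mm`.

    Each row holds one volume's six realignment parameters; the first three
    (translations, mm) are checked and the last three (rotations, radians)
    are ignored under the assumption described above.
    """
    return any(abs(value) > limit_mm for row in rp_rows for value in row[:3])


def retained_participants(motion: Dict[str, List[List[float]]]) -> List[str]:
    """IDs of participants whose head motion stays within the limit."""
    return [pid for pid, rows in motion.items() if not exceeds_motion(rows)]
```

Because the text says "more than 2.5 mm", a participant whose largest translation is exactly 2.5 mm is retained by this sketch.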

Stimuli Presentation During the Scanning

The task was programmed using E-prime 2.0 on a Dell computer. Binaural auditory stimuli were presented using a custom-built magnet-compatible system that provided approximately 28 dB of attenuation. During scanning, the sound level at the participant’s head was approximately 98 dB; after attenuation by the listening device, the auditory stimuli were approximately 70 dB at the participant’s ears. The optimal listening level was determined individually for each participant during a pretest scan.
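If the device’s roughly 28 dB attenuation is read as applying to the approximately 98 dB level at the participant’s head (our interpretation; the text does not state the reference signal explicitly), the reported levels are consistent with simple subtraction in decibels:

$$
L_{\mathrm{ear}} \approx L_{\mathrm{head}} - A = 98\ \mathrm{dB} - 28\ \mathrm{dB} = 70\ \mathrm{dB}, \qquad
\frac{p_{\mathrm{head}}}{p_{\mathrm{ear}}} = 10^{28/20} \approx 25.
$$

Attenuations subtract directly because the decibel scale is logarithmic; the second expression shows that a 28 dB reduction corresponds to a sound-pressure ratio of about 25.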

Results

Behavioral Analysis

On the State-Trait Anxiety Inventory, the mean trait anxiety score was 52.15 ± 3.08 (range 45–60), and the scores were normally distributed. The mean state anxiety score was 47.74 ± 6.94 before the experiment and 49.37 ± 6.36 after it, consistent with normative values for Chinese college students (45.31 ± 11.99), which indicates that participants were in an emotionally stable state (Li and Qian, 1995). The difference between pre- and post-test scores was not significant (p = 0.65). The internal consistency coefficient (Cronbach’s α) was 0.93.
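For reference, the internal consistency coefficient is conventionally computed as Cronbach’s α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The sketch below computes it from a respondents × items matrix. It is the generic textbook formula with a hypothetical function name and toy data, not a reconstruction of the authors’ SPSS analysis.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least two items")
    # Variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

With two perfectly correlated items, such as `[[1, 1], [2, 2], [3, 3]]`, the function returns 1.0; values near 0.93, as reported above, indicate high internal consistency.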

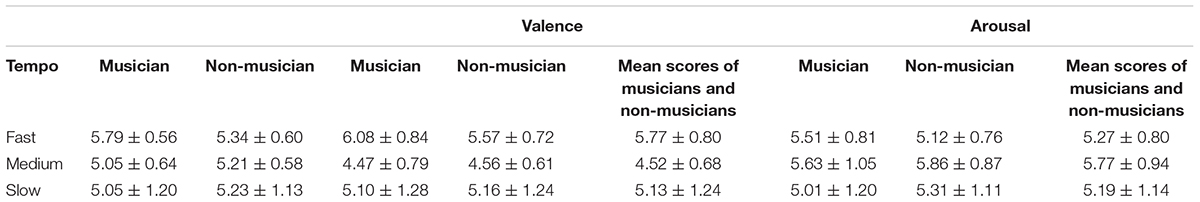

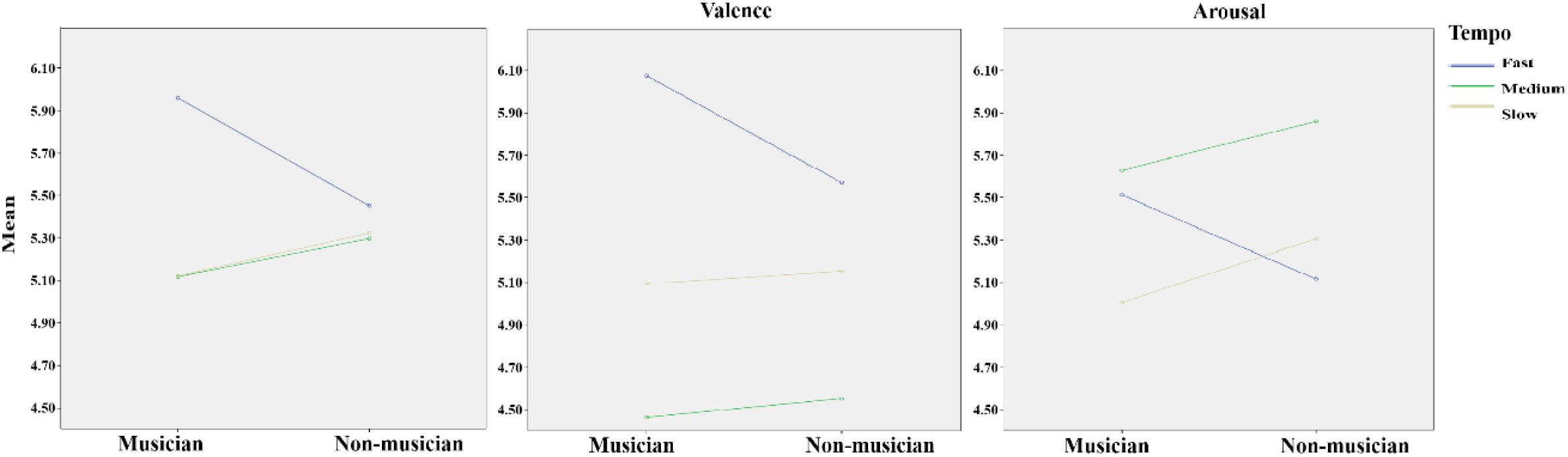

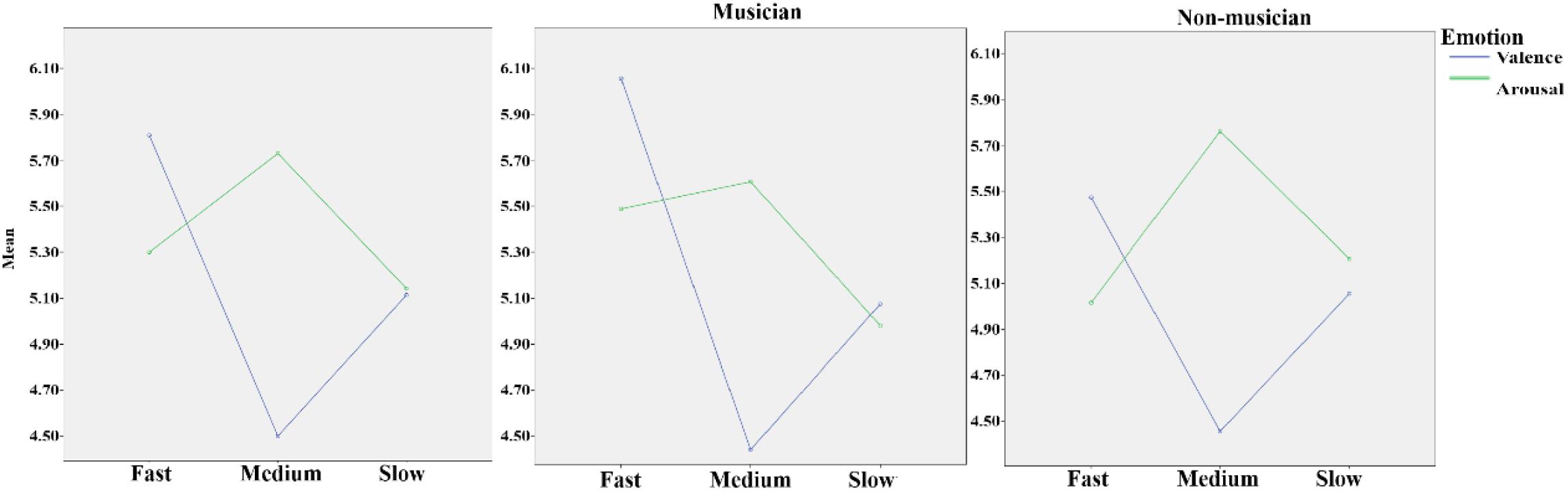

In the 2 (group: musician, non-musician) × 3 (tempo: fast, medium, slow) × 2 (emotion dimension: valence, arousal) repeated-measures ANOVA, the main effect of tempo was significant, F(2,37) = 5.79, p = 0.005, η2 = 0.13, as was the main effect of emotion dimension, F(1,37) = 13.57, p = 0.001, η2 = 0.26. The interaction between group and tempo approached significance, F(2,37) = 2.94, p = 0.059, η2 = 0.07, and the interaction between tempo and emotion dimension was significant, F(2,37) = 29.38, p < 0.001, η2 = 0.43. Follow-up one-way ANOVAs on the valence ratings revealed a significant group difference for fast music, with higher valence in musicians (6.08 ± 0.84) than in non-musicians (5.57 ± 0.72), F(1,39) = 4.16, p = 0.048, η2 = 0.03. Simple-effect tests of tempo showed that the valence score of medium-tempo music was significantly lower than those of fast (p < 0.001) and slow music (p = 0.001), and the valence of fast music was significantly higher than that of slow music. The arousal score of medium music was significantly higher than those of fast (p = 0.006) and slow music (p = 0.003) (Table 1). Overall, musicians tended to report more positive and stronger emotions than non-musicians (Figure 2), particularly higher valence in response to fast music. Fast-tempo music received the highest valence, and medium-tempo music received the lowest valence and the highest arousal (Figure 3).

TABLE 1. Average scores of three tempi in two emotional dimensions between musicians and non-musicians.

FIGURE 2. The panel (Left) shows the mean scores of musicians’ and non-musicians’ emotional responses to fast, medium, and slow music. The panel (Middle) shows the valence scores of musicians’ and non-musicians’ emotional responses to fast, medium, and slow music. The panel (Right) shows the arousal scores of musicians’ and non-musicians’ emotional responses to fast, medium, and slow music.

FIGURE 3. The panel (Left) shows the mean scores of all participants’ valence and arousal to fast, medium, and slow music. The panel (Middle) shows the mean scores of musicians’ valence and arousal to fast, medium, and slow music. The panel (Right) shows the mean scores of non-musicians’ valence and arousal to fast, medium, and slow music.
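The group comparison of fast-music valence reported above is a one-way ANOVA with two independent groups. A minimal sketch of the computation follows; it uses generic formulas and a hypothetical function name and is not the authors’ SPSS procedure. With 16 musicians and 25 non-musicians it gives df_between = 1 and df_within = 41 − 2 = 39, matching the reported F(1,39). The effect size returned is classical η² (SS_between / SS_total); the text does not state which η² variant SPSS reported, so this sketch is not expected to reproduce the published values.

```python
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int, float]:
    """Return (F, df_between, df_within, eta_squared) for independent groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = sum(all_values) / len(all_values)
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: group-size-weighted deviations of group means.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: deviations of each score from its group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared
```

For the toy groups `[1, 2, 3]` and `[4, 5, 6]`, the function returns F = 13.5 with 1 and 4 degrees of freedom.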

fMRI Analyses

Group Analysis

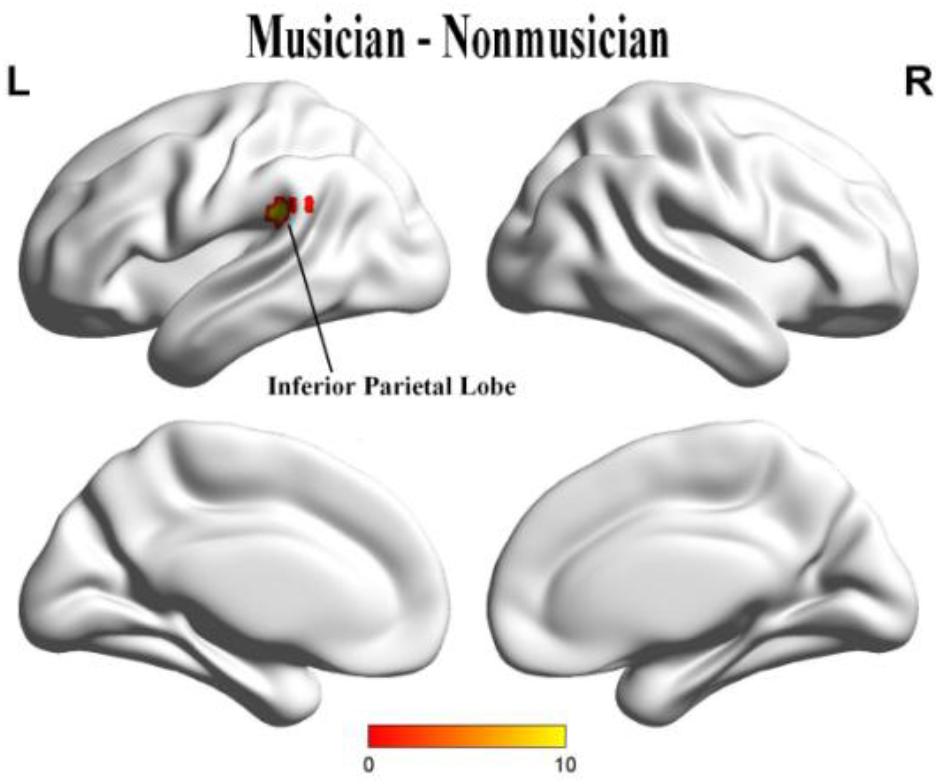

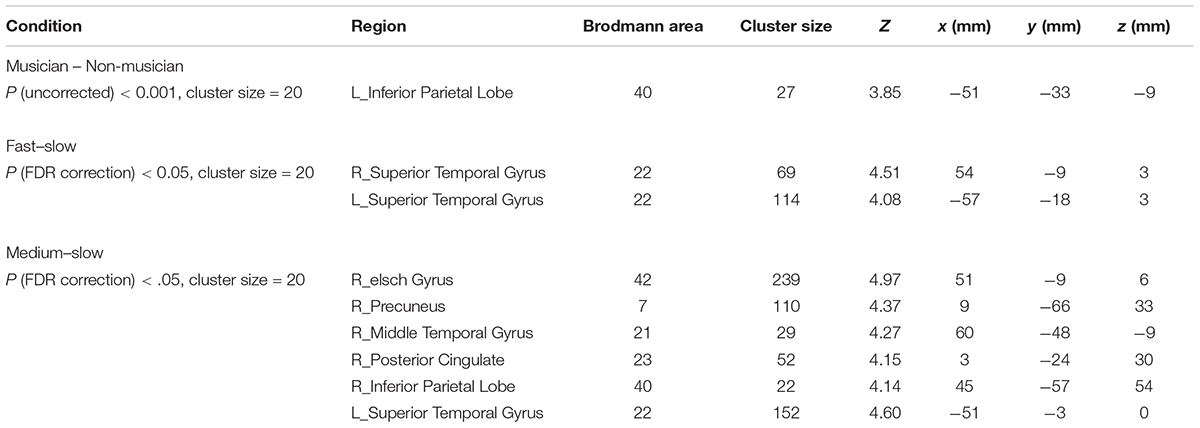

When group effects were compared, musicians showed significantly stronger activation in the left inferior parietal lobe (IPL) than non-musicians (uncorrected threshold p = 0.001, cluster size = 20 voxels; Figure 4 and Table 2).

TABLE 2. fMRI analysis of difference between musicians and non-musicians and difference among fast-, medium-, and slow-tempo music.

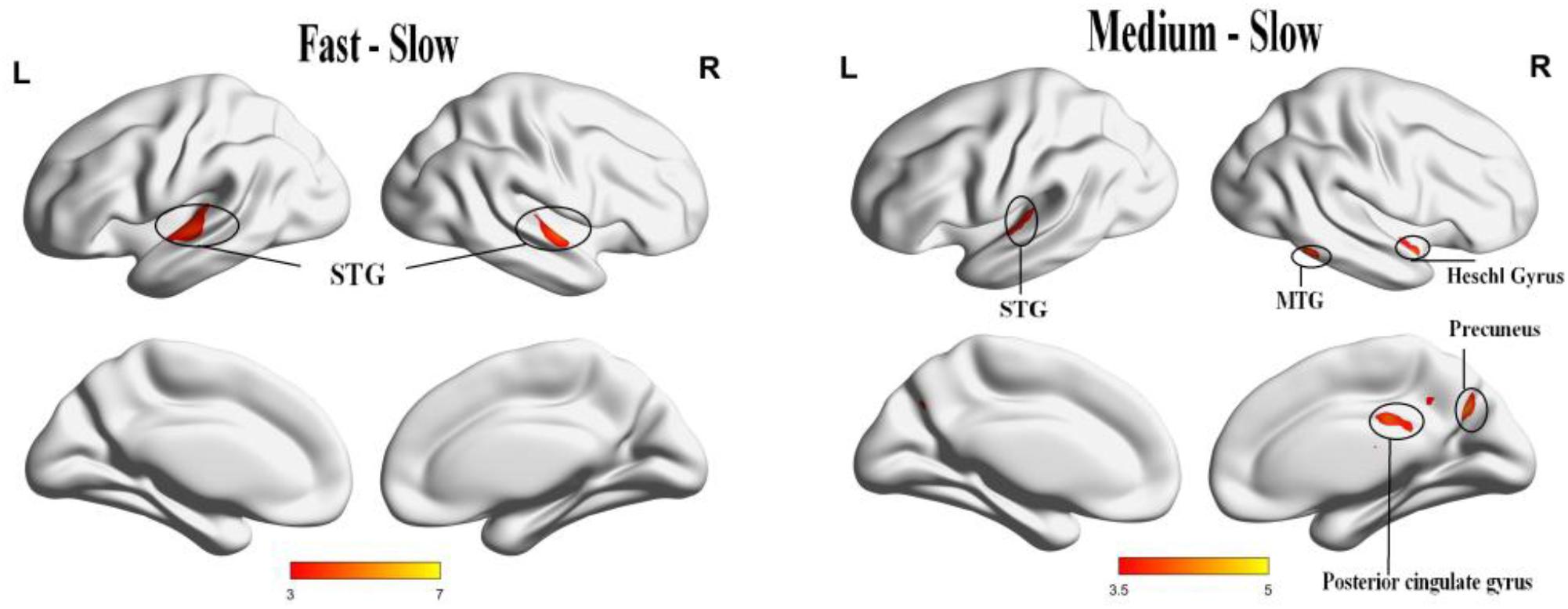

Tempo Analysis

Significant activation was found when the tempo effects were compared using cluster-extent FDR correction (p < 0.05, cluster size = 20 voxels). Activation for fast music was stronger than for slow music in the bilateral anterior superior temporal gyrus (STG). Activation for medium music was stronger than for slow music in the right Heschl’s gyrus (HG), right precuneus, right middle temporal gyrus (MTG), right posterior cingulate cortex (PCC), right IPL, and left STG (Table 2 and Figure 5).

FIGURE 5. (Left) Fast > slow music: stronger activation in the bilateral STG. (Right) Medium > slow music: stronger activation in the right HG, right precuneus, right MTG, right PCC, right IPL, left STG, and left culmen.
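The tempo contrasts above were thresholded with FDR correction, which controls the expected proportion of false positives among the tests declared significant. The textbook Benjamini–Hochberg step-up procedure is sketched below on a plain list of p-values. It is illustrative only: SPM8 applies FDR to whole statistical maps (at the voxel, peak, or cluster level, depending on the option used) and its implementation may differ in detail, and the function name is hypothetical.

```python
from typing import List, Sequence


def bh_fdr_reject(p_values: Sequence[float], q: float = 0.05) -> List[bool]:
    """Benjamini-Hochberg step-up procedure: True where H0 is rejected at FDR level q."""
    m = len(p_values)
    # Indices of the tests, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject every hypothesis whose rank is at most k_max.
    reject = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            reject[idx] = True
    return reject
```

For example, `bh_fdr_reject([0.03, 0.04])` rejects both tests: 0.03 exceeds its own rank threshold (0.025), but it is still rejected because the larger p-value 0.04 passes at rank 2. This step-up behavior is what distinguishes BH from applying a fixed per-test cutoff.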

Discussion

To our knowledge, this is the first study to examine the brain activation underlying music-evoked emotion under natural listening conditions with respect to both musical tempo and musical training. In the current study, valence and arousal ratings of music excerpts with fast, medium, and slow tempi were compared between musicians and non-musicians. Musicians and non-musicians differed significantly in emotional valence when listening to fast music, and the interaction between musical training and tempo approached significance. In the functional analysis, musicians showed stronger activation in the left IPL than non-musicians. Both behavioral and neural differences were found among the three tempi, with different activations in the auditory cortex (STG, MTG, and HG), limbic system (cingulate gyrus), and parietal cortex (IPL and precuneus). In particular, fast-tempo music, which participants rated as most pleasant, elicited stronger activation of the bilateral STG than slow music did. Medium-tempo music, which participants rated as most arousing, elicited stronger activation in the auditory cortex, cingulate gyrus, and precuneus. Overall, the current results largely supported our hypotheses and provide neural evidence for understanding the effects of musical tempo on musicians’ and non-musicians’ music experience.

The Effects of Musical Training

Behaviorally, musicians and non-musicians showed no differences in their overall emotional ratings of music; however, when valence and arousal were analyzed separately, musicians reported higher valence than non-musicians for fast music. These findings support our hypothesis that musicians’ emotional experience would be stronger than non-musicians’, and imply that fast-tempo music may be effective in differentiating the emotional experience of musicians and non-musicians. The near-significant interaction between group and tempo is also in line with our hypothesis regarding musical training. Participants’ ratings showed a decreasing tendency from musicians to non-musicians in the valence dimension, whereas in the arousal dimension there was an increasing tendency from musicians to non-musicians, suggesting that musical training plays different roles in affecting emotional intensity and valence. Further, a significant group difference in IPL activation was found in the fMRI analysis. A previous study found that only musicians recruited the left IPL for melodic and harmonic processing in a passive fMRI listening paradigm (Schmithorst and Holland, 2003). In their investigation of the musical mismatch negativity elicited by rhythmic deviations within a musical sequence, Lappe et al. (2013) also found bilateral IPL activation only in musically trained individuals.

The IPL contributes to cognitive control, including the regulation of working memory, and to the observation of others’ actions, supporting a top-down understanding of their intentions before subsequent acts (Fogassi et al., 2005). The IPL has also been found to respond to emotional information: when participants view emotional images, IPL activation has been associated with emotional arousal (Ochsner et al., 2012; Ferri et al., 2016). In an emotion-detection experiment, Engelen et al. (2015) presented neutral and emotional body postures matched for motion and found stronger IPL activation for emotional than for neutral body gestures. The left IPL plays a pivotal role in many cognitive functions (Bzdok et al., 2016), such as second language learning (Sliwinska, 2015; Barbeau et al., 2017), semantic processing (Patel, 2003; Donnay et al., 2014), auditory working memory (Alain et al., 2008), and implicit processing of brief musical emotions (Bogert et al., 2016). In magnetoencephalography (MEG) recordings of cortical entrainment to music and its modulation by expertise, a topographical analysis of the difference between musicians and non-musicians in the beta range confirmed left lateralization (Doelling and Poeppel, 2015). Together with musicians’ higher valence ratings, the stronger IPL activation in musicians may reflect enhanced processing of emotional experience.

Some studies have reported that the emotional response to music is stable across musicians and non-musicians and only weakly influenced by musical expertise (Bigand et al., 2005; Bramley et al., 2016). However, in those experiments, participants were asked to perform additional tasks, such as identifying and grouping excerpts that induced similar emotional experiences, or taking part in a virtual roulette paradigm. These scenarios differ from the current study’s task-free design, in which participants listened to music for its emotional experience, much as in daily life. As a study of music-evoked emotion under natural listening conditions, the present study highlights the differences between musicians and non-musicians and provides a further understanding of humans’ music experience.

The Effect of Rhythm Tempo

Fast Music vs. Slow Music

Consistent with our hypothesis, fast-tempo music evoked the most pleasant emotion, and slow-tempo music received the lowest arousal score among the three kinds of music. These findings are consistent with existing research on music tempo and its influence on subjective emotional valence and arousal (Droit-Volet et al., 2013; Trochidis and Bigand, 2013). Happy music has been shown to produce significant and large activation in the auditory cortices (Brattico et al., 2011; Koelsch et al., 2013; Park et al., 2014; Bogert et al., 2016). The STG is the core region of the auditory cortex that responds to auditory stimuli (Humphries et al., 2010; Angulo-Perkins et al., 2014; Thomas et al., 2015) and has also been implicated in processing happy compared with sad music (Pehrs et al., 2013; Bogert et al., 2016). The current finding that bilateral STG activation was stronger for fast- than for slow-tempo music is consistent with these previous findings and implies that a faster tempo may evoke more positive emotion, accompanied by stronger activation in the temporal cortex.

However, this finding differs from a previous study in which no tempo-related changes in the autonomic nervous system (ANS) were found when participants listened to fast and slow music (Krabs et al., 2015). Music-evoked emotion has been reported to contribute to music-evoked ANS effects (Juslin, 2013) and is closely connected with musical tempo. In the study of Krabs et al. (2015), however, tempo was extracted after the musical emotion had been identified as pleasant or unpleasant, and ANS effects did not differ between fast and slow music, which implies that the neural effects of musical tempo cannot be equated with those of musical emotion. In our study, tempo was the core variable whose emotional effects were compared, and emotional valence was the secondary variable, which depended on the chosen tempo. Compared with that study, the tempo-related STG activation in our research may more directly indicate that tempo is an acoustic feature that affects listeners’ emotional experience and blood oxygenation level dependent (BOLD) response, rather than a transitional emotion label in music; alternatively, fMRI activity may be more sensitive to tempo than ANS responses are. In sum, the convergent findings of higher emotional valence and stronger bilateral STG activation for fast- than slow-tempo music in the current study provide a coherent explanation of the effects of musical tempo on humans’ emotional experience.

Medium Music vs. Slow Music

Although we did not expect participants to rate medium-tempo music as having the strongest arousal and the lowest valence, the widespread neural activation in the auditory cortex, PCC, precuneus, and IPL may help explain this finding. The cingulate gyrus is an important structure in the core music-evoked emotion network (Koelsch, 2014) and has also been found to be positively connected with humans’ ability to regulate emotions after long-term music exposure (Bringas et al., 2015). The PCC is believed to link emotion and memory processes (Maratos et al., 2001; Maddock et al., 2003) and has been implicated in autobiographical emotional recall (Fink et al., 1996). The precuneus is another important area for feeling the emotional content of music (Blood and Zatorre, 2001; Tabei, 2015) and is associated with assessing emotional responses evoked in the listener (Cavanna and Trimble, 2006). A broad functional analysis of postmortem assessments of cingulotomy lesions, vegetative state cases, and metabolic studies showed that the PCC and precuneus are pivotal for conscious information processing and are connected with arousal state (Vogt and Laureys, 2005). Given that medium-tempo music elicited the strongest arousal in this study, these high levels of emotional arousal may be closely linked to activity in listeners’ PCC and precuneus. Participants rated medium music as having the lowest emotional valence, meaning that they felt the least pleasure, or the weakest hedonic component (Feldman, 1995). The right hemisphere has been shown to be more strongly connected with negative emotion (Sato and Aoki, 2006). When medium music was contrasted with slow music, the temporal cortex, cingulate gyrus, and precuneus were all activated in the right hemisphere, which may be related to the weak pleasure reported for medium music.

In addition to the limbic system (cingulate gyrus) and parietal cortex (IPL and precuneus), significant differences were also found in the auditory cortex and adjacent temporal regions (HG, MTG, and STG) when medium-tempo music was contrasted with slow music. HG is an area of the primary auditory cortex buried within the lateral sulcus of the human brain (Dierks et al., 1999). It plays an important role in pitch perception and in the initial detection of acoustic changes (Krumbholz et al., 2003; Schneider et al., 2005). Activation of the right MTG has been associated with the processing of semantic aspects of language (Hickok and Poeppel, 2007) and with music-evoked emotion conveyed by expressed affect (Steinbeis and Koelsch, 2008). Even in a distorted tune test, right MTG activation was found to be stronger in musicians with 19 years of training than in individuals without musical training (Seung et al., 2005). Medium-tempo music elicited an MTG BOLD response similar to that reported for listeners with musical training, which suggests that medium-tempo music may be more readily accessible to listeners’ acoustic perception. We also found that medium-tempo music elicited stronger STG activation only in the left hemisphere. The auditory cortices of the two hemispheres are relatively specialized: temporal resolution is greater in the left auditory cortical areas, and spectral resolution is greater in the right (Zatorre et al., 2002; Liu et al., 2017). Studies have found that musically trained listeners show stronger activation in the left STG during passive music listening (Ohnishi et al., 2001; Bangert et al., 2006). This left-lateralized STG activity leads us to speculate that medium-tempo music may promote music processing that draws on the left hemisphere’s advantage in temporal resolution. The current results suggest that medium-tempo music may enhance listeners’ emotional arousal through widespread neural activity. Whether this effect depends on the similarity of medium-tempo music to human physiological rhythms (e.g., heart rate) or on other internal neural mechanisms deserves future investigation using additional physiological measures and techniques.

Limitations and Future Studies

Our study addressed the effects of musical tempo on music-evoked emotion and how these effects are influenced by musical training. Although no activation of motor areas was found, the experiment revealed new neural characteristics of how tempo shapes music-evoked emotion. The current study has two limitations. First, no significant relationships were found between the behavioral ratings and the BOLD signals. The emotional ratings were collected after scanning to keep the music listening situation undisturbed and natural; future studies could use methods that integrate the collection of emotional ratings and BOLD signals more effectively. Second, only three tempo categories were presented to compare their effects on music-evoked emotion. If tempo were varied continuously, further relationships between these variables might be found.

Summary

Tempo is closely connected with music-evoked emotion, and in the behavioral ratings its effect showed a near-significant interaction with musical training. This study examined emotional experience through valence and arousal ratings and interpreted the neural findings for fast-, medium-, and slow-tempo music. The stronger left IPL activation in musicians than in non-musicians provides additional evidence on the debated question of whether musical training enhances listeners’ neural responses to emotional music. We synthesized key findings about the auditory areas, parietal cortex, and cingulate gyrus to explain the highest valence for fast music and the strongest arousal for medium-tempo music. Although no significant interaction between musical training and tempo was found in the fMRI analysis, the near-significant behavioral interaction merits future investigation. Investigating the effects of tempo on music-evoked emotion may therefore be valuable for revealing the emotional mechanisms of music listening.

Author Contributions

GL led this study and defined the study theme. YL was responsible for the experimental design and manuscript writing. DW participated in the experimental design. QL, GY, and SW were responsible for data collection. GW and XZ were responsible for recording and selecting the music stimuli.

Funding

This work was supported by National Natural Science Foundation of China (Grant Nos. 61472330 and 61872301), and the Fundamental Research Funds for the Central Universities (SWU1709562).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer NG and handling Editor declared their shared affiliation at time of review.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2018.02118/full#supplementary-material

References

Alain, C., He, Y., and Grady, C. (2008). The contribution of the inferior parietal lobe to auditory spatial working memory. J. Cogn. Neurosci. 20, 285–295. doi: 10.1162/jocn.2008.20014

Angulo-Perkins, A., Aubé, W., Peretz, I., Barrios, F. A., Armony, J. L., and Concha, L. (2014). Music listening engages specific cortical regions within the temporal lobes: differences between musicians and non-musicians. Cortex 59, 126–137. doi: 10.1016/j.cortex.2014.07.013

Balkwill, L. L., and Thompson, W. F. (1999). A cross-cultural investigation of the perception of emotion in music: psychophysical and cultural cues. Music Percept. 17, 43–64. doi: 10.2307/40285811

Bangert, M., Peschel, T., Schlaug, G., Rotte, M., Drescher, D., Hinrichs, H., et al. (2006). Shared networks for auditory and motor processing in professional pianists: evidence from fMRI conjunction. Neuroimage 30, 917–926. doi: 10.1016/j.neuroimage.2005.10.044

Barbeau, E. B., Chai, X. J., Chen, J. K., Soles, J., Berken, J., Baum, S., et al. (2017). The role of the left inferior parietal lobule in second language learning: an intensive language training fMRI study. Neuropsychologia 98, 169–176. doi: 10.1016/j.neuropsychologia.2016.10.003

Basar, E., Basar-Eroglu, C., Karakas, S., and Schurmann, M. (1999). Oscillatory brain theory: a new trend in neuroscience. IEEE Eng. Med. Biol. Mag. 18, 56–66. doi: 10.1109/51.765190

Bigand, E., Vieillard, S., Madurell, F., Marozeau, J., and Dacquet, A. (2005). Multidimensional scaling of emotional responses to music: the effect of musical expertise and of the duration of the excerpts. Cogn. Emot. 19, 1113–1139. doi: 10.1080/02699930500204250

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Bogert, B., Numminen-Kontti, T., Gold, B., Sams, M., Numminen, J., Burunat, I., et al. (2016). Hidden sources of joy, fear, and sadness: explicit versus implicit neural processing of musical emotions. Neuropsychologia 89, 393–402. doi: 10.1016/j.neuropsychologia.2016.07.005

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25, 49–59. doi: 10.1016/0005-7916(94)90063-9

Bramley, S., Dibben, N., and Rowe, R. (2016). Investigating the influence of music tempo on arousal and behaviour in laboratory virtual roulette. Psychol. Music 44, 1389–1403. doi: 10.1177/0305735616632897

Brattico, E., Alluri, V., Bogert, B., Jacobsen, T., Vartiainen, N., Nieminen, S. K., et al. (2011). A functional MRI study of happy and sad emotions in music with and without lyrics. Front. Psychol. 2:308. doi: 10.3389/fpsyg.2011.00308

Bringas, M. L., Zaldivar, M., Rojas, P. A., Martinezmontes, K., Chongo, D. M., Ortega, M. A., et al. (2015). Effectiveness of music therapy as an aid to neurorestoration of children with severe neurological disorders. Front. Neurosci. 9:427. doi: 10.3389/fnins.2015.00427

Bzdok, D., Hartwigsen, G., Reid, A., Laird, A. R., Fox, P. T., and Eickhoff, S. B. (2016). Left inferior parietal lobe engagement in social cognition and language. Neurosci. Biobehav. Rev. 68, 319–334. doi: 10.1016/j.neubiorev.2016.02.024

Cai, Y., and Pan, X. (2007). An experimental research on how 8 music excerpts’ tempo and melody influenced undergraduates’ emotion. Psychol. Sci. 30, 196–198.

Cavanna, A. E., and Trimble, M. R. (2006). The precuneus: a review of its functional anatomy and behavioural correlates. Brain 129, 564–583. doi: 10.1093/brain/awl004

Chen, J. L., Penhune, V. B., and Zatorre, R. J. (2008). Listening to musical rhythms recruits motor regions of the brain. Cereb. Cortex 18, 2844–2854. doi: 10.1093/cercor/bhn042

Dierks, T., Linden, D. E., Jandl, M., Formisano, E., Goebel, R., Lanfermann, H., et al. (1999). Activation of Heschl’s gyrus during auditory hallucinations. Neuron 22, 615–621. doi: 10.1016/S0896-6273(00)80715-1

Doelling, K. B., and Poeppel, D. (2015). Cortical entrainment to music and its modulation by expertise. Proc. Natl. Acad. Sci. U.S.A. 112, 6233–6242. doi: 10.1073/pnas.1508431112

Donnay, G. F., Rankin, S. K., Lopez-Gonzalez, M., Jiradejvong, P., and Limb, C. J. (2014). Neural substrates of interactive musical improvisation: an fMRI study of ‘trading fours’ in jazz. PLoS One 9:e88665. doi: 10.1371/journal.pone.0088665

Droit-Volet, S., Ramos, D., Bueno, J. L. O., and Bigand, E. (2013). Music, emotion, and time perception: the influence of subjective emotional valence and arousal? Front. Psychol. 4:417. doi: 10.3389/fpsyg.2013.00417

Engelen, T., de Graaf, T. A., Sack, A. T., and de Gelder, B. (2015). A causal role for inferior parietal lobule in emotion body perception. Cortex 73, 195–202. doi: 10.1016/j.cortex.2015.08.013

Feldman, L. A. (1995). Valence focus and arousal focus: individual differences in the structure of affective experience. J. Pers. Soc. Psychol. 69, 153–166. doi: 10.1037/0022-3514.69.1.153

Fernández-Sotos, A., Fernández-Caballero, A., and Latorre, J. M. (2016). Influence of tempo and rhythmic unit in musical emotion regulation. Front. Comput. Neurosci. 10:80. doi: 10.3389/fncom.2016.00080

Ferri, J., Schmidt, J., Hajcak, G., and Canli, T. (2016). Emotion regulation and amygdala-precuneus connectivity: focusing on attentional deployment. Cogn. Affect. Behav. Neurosci. 16, 991–1002. doi: 10.3758/s13415-016-0447-y

Fink, G. R., Markowitsch, H. J., Reinkemeier, M., Bruckbauer, T., Kessler, J., and Heiss, W. D. (1996). Cerebral representation of one’s own past: neural networks involved in autobiographical memory. J. Neurosci. 16, 4275–4282. doi: 10.1523/JNEUROSCI.16-13-04275.1996

Fogassi, L., Ferrari, P. F., Gesierich, B., Rozzi, S., Chersi, F., and Rizzolatti, G. (2005). Parietal lobe: from action organization to intention understanding. Science 308, 662–667. doi: 10.1126/science.1106138

Gabrielsson, A., and Juslin, P. N. (2003). Emotional Expression in Music. Oxford: Oxford University Press.

Gagnon, L., and Peretz, I. (2003). Mode and tempo relative contributions to “happy-sad” judgements in equitone melodies. Cogn. Emot. 17, 25–40. doi: 10.1080/02699930302279

Geiser, E., Ziegler, E., Jancke, L., and Meyer, M. (2009). Early electrophysiological correlates of meter and rhythm processing in music perception. Cortex 45, 93–102. doi: 10.1016/j.cortex.2007.09.010

Gentry, H., Humphries, E., Pena, S., Mekic, A., Hurless, N., and Nichols, D. F. (2013). Music genre preference and tempo alter alpha and beta waves in human non-musicians. Impulse 1–11.

Hevner, K. (1937). The affective value of pitch and tempo in music. Am. J. Psychol. 49, 621–630. doi: 10.2307/1416385

Hickok, G., and Poeppel, D. (2007). The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402. doi: 10.1038/nrn2113

Höller, Y., Thomschewski, A., Schmid, E. V., Höller, P., Crone, J. S., and Trinka, E. (2012). Individual brain-frequency responses to self-selected music. Int. J. Psychophysiol. 86, 206–213. doi: 10.1016/j.ijpsycho.2012.09.005

Humphries, C., Liebenthal, E., and Binder, J. R. (2010). Tonotopic organization of human auditory cortex. Neuroimage 50, 1202–1211. doi: 10.1126/science.216.4552.1339

Hunter, P. G., Schellenberg, E. G., and Schimmack, U. (2010). Feelings and perceptions of happiness and sadness induced by music: similarities, differences, and mixed emotions. Psychol. Aesthet. Creat. Arts 4, 47–56. doi: 10.1037/a0016873

Ilie, G., and Thompson, W. F. (2006). A comparison of acoustic cues in music and speech for three dimensions of affect. Music Percept. 23, 319–330. doi: 10.1525/mp.2006.23.4.319

Juslin, P. N. (2013). From everyday emotions to aesthetic emotions: towards a unified theory of musical emotions. Phys. Life Rev. 10, 235–266. doi: 10.1016/j.plrev.2013.05.008

Juslin, P. N., and Sloboda, J. A. (2001). Music and Emotion: Theory and Research. Oxford: Oxford University Press, doi: 10.1037/1528-3542.1.4.381

Karageorghis, C. I., and Jones, L. (2014). On the stability and relevance of the exercise heart rate–music-tempo preference relationship. Psychol. Sport Exerc. 15, 299–310. doi: 10.1016/j.psychsport.2013.08.004

Karageorghis, C. I., Jones, L., and Low, D. C. (2006). Relationship between exercise heart rate and music tempo preference. Res. Q. Exerc. Sport 77, 240–250. doi: 10.1080/02701367.2006.10599357

Kim, D. E., Shin, M. J., Lee, K. M., Chu, K., Woo, S. H., Kim, Y. R., et al. (2004). Musical training-induced functional reorganization of the adult brain: functional magnetic resonance imaging and transcranial magnetic stimulation study on amateur string players. Hum. Brain Mapp. 23, 188–199. doi: 10.1002/hbm.20058

Koelsch, S. (2014). Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 15, 170–180. doi: 10.1038/nrn3666

Koelsch, S., Skouras, S., Fritz, T., Herrera, P., Bonhage, C., Kussner, M. B., et al. (2013). The roles of superficial amygdala and auditory cortex in music-evoked fear and joy. Neuroimage 81, 49–60. doi: 10.1016/j.neuroimage.2013.05.008

Krabs, R. U., Enk, R., Teich, N., and Koelsch, S. (2015). Autonomic effects of music in health and Crohn’s disease: the impact of isochronicity, emotional valence, and tempo. PLoS One 10:e0126224. doi: 10.1371/journal.pone.0126224

Krumbholz, K., Patterson, R. D., Seither-Preisler, A., Lammertmann, C., and Lütkenhöner, B. (2003). Neuromagnetic evidence for a pitch processing center in Heschl’s gyrus. Cereb. Cortex 13, 765–772. doi: 10.1093/cercor/13.7.765

Lappe, C., Steinsträter, O., and Pantev, C. (2013). A rhythmic deviation within a musical sequence induces neural activation in inferior parietal regions after short-term multisensory training. Multisens. Res. 26, 164–164. doi: 10.1163/22134808-000S0121

Large, E. W., Fink, P., and Kelso, J. A. (2002). Tracking simple and complex sequences. Psychol. Res. 66, 3–17. doi: 10.1007/s004260100069

Levitin, D. J. (2012). What does it mean to be musical? Neuron 73, 633–637. doi: 10.1016/j.neuron.2012.01.017

Levitin, D. J., and Tirovolas, A. K. (2009). Current advances in the cognitive neuroscience of music. Ann. N. Y. Acad. Sci. 1156, 211–231. doi: 10.1111/j.1749-6632.2009.04417.x

Li, W. L., and Qian, M. Y. (1995). Revision of the state-trait anxiety inventory with sample of Chinese college students (in Chinese). Acta Centia 31, 108–114.

Liu, Y., Chen, X., Zhai, J., Tang, Q., and Hu, J. (2016). Development of the attachment affective picture system. Soc. Behav. Pers. 44, 1565–1574. doi: 10.2224/sbp.2016.44.9.1565

Liu, Y., Ding, Y., Lu, L., and Chen, X. (2017). Attention bias of avoidant individuals to attachment emotion pictures. Sci. Rep. 7:41631. doi: 10.1038/srep41631

Maddock, R. J., Garrett, A. S., and Buonocore, M. H. (2003). Posterior cingulate cortex activation by emotional words: fMRI evidence from a valence decision task. Hum. Brain Mapp. 18, 30–41. doi: 10.1002/hbm.10075

Maratos, E. J., Dolan, R. J., Morris, J. S., Henson, R. N. A., and Rugg, M. D. (2001). Neural activity associated with episodic memory for emotional context. Neuropsychologia 39, 910–920. doi: 10.1016/S0028-3932(01)00025-2

McDermott, J., and Hauser, M. D. (2007). Nonhuman primates prefer slow tempos but dislike music overall. Cognition 104, 654–668. doi: 10.1016/j.cognition.2006.07.011

Melvin, G. R. (1964). The mood effects of music: a comparison of data from four investigators. J. Psychol. 58, 427–438. doi: 10.1080/00223980.1964.9916765

Nicolaou, N., Malik, A., Daly, I., Weaver, J., Hwang, F., Kirke, A., et al. (2017). Directed motor-auditory EEG connectivity is modulated by music tempo. Front. Hum. Neurosci. 11:502. doi: 10.3389/fnhum.2017.00502

Nolden, S., Rigoulot, S., Jolicoeur, P., and Armony, J. L. (2017). Oscillatory brain activity in response to emotional sounds in musicians and non-musicians. J. Acoust. Soc. Am. 141:3617. doi: 10.1121/1.4987756

Ochsner, K. N., Silvers, J. A., and Buhle, J. T. (2012). Functional imaging studies of emotion regulation: a synthetic review and evolving model of the cognitive control of emotion. Ann. N. Y. Acad. Sci. 1251, E1–E24. doi: 10.1111/j.1749-6632.2012.06751.x

Ohnishi, T., Matsuda, H., Asada, T., Aruga, M., Hirakata, M., Nishikawa, M., et al. (2001). Functional anatomy of musical perception in musicians. Cereb. Cortex 11, 754–760. doi: 10.1093/cercor/11.8.754

Park, M., Gutyrchik, E., Bao, Y., Zaytseva, Y., Carl, P., Welker, L., et al. (2014). Differences between musicians and non-musicians in neuro-affective processing of sadness and fear expressed in music. Neurosci. Lett. 566C, 120–124. doi: 10.1016/j.neulet.2014.02.041

Patel, A. D. (2003). Language, music, syntax and the brain. Nat. Neurosci. 6, 674–681. doi: 10.1038/nn1082

Pehrs, C., Deserno, L., Bakels, J. H., Schlochtermeier, L. H., Kappelhoff, H., Jacobs, A. M., et al. (2013). How music alters a kiss: superior temporal gyrus controls fusiform-amygdalar effective connectivity. Soc. Cogn. Affect. Neurosci. 9, 1770–1778. doi: 10.1093/scan/nst169

Peretz, I., Gagnon, L., and Bouchard, B. (1998). Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition 68, 111–141. doi: 10.1016/S0010-0277(98)00043-2

Peretz, I., and Zatorre, R. J. (2005). Brain organization for music processing. Annu. Rev. Psychol. 56, 89–114. doi: 10.1146/annurev.psych.56.091103.070225

Sato, W., and Aoki, S. (2006). Right hemispheric dominance in processing of unconscious negative emotion. Brain Cogn. 62, 261–266. doi: 10.1016/j.bandc.2006.06.006

Schmithorst, V. J., and Holland, S. K. (2003). The effect of musical training on music processing: a functional magnetic resonance imaging study in humans. Neurosci. Lett. 348, 65–68. doi: 10.1016/S0304-3940(03)00714-6

Schneider, P., Sluming, V., Roberts, N., Scherg, M., and Goebel, R. (2005). Structural and functional asymmetry of lateral Heschl’s gyrus reflects pitch perception preference. Nat. Neurosci. 8, 1241–1247. doi: 10.1038/nn1530

Seung, Y., Kyong, J. S., Woo, S. H., Lee, B. T., and Lee, K. M. (2005). Brain activation during music listening in individuals with or without prior music training. Neurosci. Res. 52, 323–329. doi: 10.1016/j.neures.2005.04.011

Shi, Y., Zhu, X., Kim, H., and Eom, K. (2006). “A tempo feature via modulation spectrum analysis and its application to music emotion classification,” in Proceedings of the IEEE International Conference on Multimedia and Expo, Toronto, 1085–1088. doi: 10.1109/ICME.2006.262723

Silva, A. C., Ferreira, S. D. S., Alves, R. C., Follador, L., and Silva, S. G. D. (2016). Effect of music tempo on attentional focus and perceived exertion during self-selected paced walking. Int. J. Exerc. Sci. 9, 536–544.

Sliwinska, M. W. (2015). The Role of the Left Inferior Parietal Lobule in Reading. Doctoral dissertation, London, University College London.

Spielberger, C. D., Gorsuch, R. L., and Lushene, R. E. (1970). STAI Manual for the State-Trait Anxiety Inventory. Palo Alto, CA: Consulting Psychologists Press.

Steinbeis, N., and Koelsch, S. (2008). Comparing the processing of music and language meaning using EEG and fMRI provides evidence for similar and distinct neural representations. PLoS One 3:e2226. doi: 10.1371/journal.pone.0002226

Strait, D. L., Kraus, N., Skoe, E., and Ashley, R. (2009). Musical experience and neural efficiency–effects of training on subcortical processing of vocal expressions of emotion. Eur. J. Neurosci. 29, 661–668. doi: 10.1111/j.1460-9568.2009.06617.x

Szabo, A., and Hoban, L. (2004). Psychological effects of fast- and slow-tempo music played during volleyball training in a national league team. Int. J. Appl. Sports Sci. 16, 39–48.

Tabei, K. I. (2015). Inferior frontal gyrus activation underlies the perception of emotions, while precuneus activation underlies the feeling of emotions during music listening. Behav. Neurol. 2015:529043. doi: 10.1155/2015/529043

Thomas, J. M., Huber, E., Stecker, G. C., Boynton, G. M., Saenz, M., and Fine, I. (2015). Population receptive field estimates of human auditory cortex. Neuroimage 105, 428–439. doi: 10.1016/j.neuroimage.2014.10.060

Ting, T. G., Ying, L., Huang, Y., and De-Zhong, Y. (2007). Detrended fluctuation analysis of the human EEG during listening to emotional music. J. Electron. Sci. Technol. 5, 272–277.

Trochidis, K., and Bigand, E. (2013). Investigation of the effect of mode and tempo on emotional responses to music using EEG power asymmetry. J. Psychophysiol. 27, 142–147. doi: 10.1027/0269-8803/a000099

Van der Zwaag, M. D., Westerink, J. H., and van den Broek, E. L. (2011). Emotional and psychophysiological responses to tempo, mode, and percussiveness. Music. Sci. 15, 250–269. doi: 10.1177/1029864911403364

Vogt, B. A., and Laureys, S. (2005). Posterior cingulate, precuneal and retrosplenial cortices: cytology and components of the neural network correlates of consciousness. Prog. Brain Res. 150, 205–217. doi: 10.1016/S0079-6123(05)50015-3

Yuan, Y., Lai, Y. X., Wu, D., and Yao, D. Z. (2009). A study on melody tempo with EEG. J. Electron. Sci. Technol. 7, 88–91.

Zatorre, R. J. (2015). Musical pleasure and reward: mechanisms and dysfunction. Ann. N. Y. Acad. Sci. 1337, 202–211. doi: 10.1111/nyas.12677

Keywords: music tempo, musician, non-musician, emotion, fMRI

Citation: Liu Y, Liu G, Wei D, Li Q, Yuan G, Wu S, Wang G and Zhao X (2018) Effects of Musical Tempo on Musicians’ and Non-musicians’ Emotional Experience When Listening to Music. Front. Psychol. 9:2118. doi: 10.3389/fpsyg.2018.02118

Received: 25 May 2018; Accepted: 15 October 2018;

Published: 13 November 2018.

Edited by:

Simone Dalla Bella, Université de Montréal, Canada

Reviewed by:

Laura Verga, Maastricht University, Netherlands

Robert J. Ellis, Independent Researcher, San Francisco, CA, United States

Copyright © 2018 Liu, Liu, Wei, Li, Yuan, Wu, Wang and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guangyuan Liu, liugy@swu.edu.cn