Abstract

Research on interdisciplinary science has for the most part concentrated on the institutional obstacles that discourage or hamper interdisciplinary work, with the expectation that interdisciplinary interaction can be improved through institutional reform strategies such as the reform of peer review systems. However, institutional obstacles are not the only ones that confront interdisciplinary work. The design of policy strategies would benefit from more detailed investigation into the particular cognitive constraints, including the methodological and conceptual barriers, which also confront attempts to work across disciplinary boundaries. Lessons from cognitive science and from anthropological studies of labs in the sociology of science suggest that scientific practices may be very domain specific, where domain specificity is an essential aspect of science that enables researchers to solve complex problems in a cognitively manageable way. The limit or extent of domain specificity in scientific practice, and how it constrains interdisciplinary research, is not yet fully understood, which attests to an important role for philosophers of science in the study of interdisciplinary science. This paper draws upon two cases of interdisciplinary collaboration, those between ecologists and economists and those between molecular biologists and systems biologists, to illustrate some of the cognitive barriers which have contributed to failures and difficulties of interactions between these fields. Each exemplifies some aspect of domain specificity in scientific practice and shows how such specificity may constrain interdisciplinary work.

1 Introduction

The movement for interdisciplinary research in both natural and social science has been one of the most prominent in recent science and academic policy. To smooth the progression towards interdisciplinary research, researchers working in the domains of Science Policy and some Science and Technology Studies have focused on institutional obstacles to interdisciplinary research, proposing ways to circumvent these obstacles by creating favorable institutional and administrative settings that promote cross-disciplinary coordination and communication (Sá 2008; Jacobs and Frickel 2009). These include, for instance, targeted research funding, departmental reorganization, new interdisciplinary centers, and even architectural redesign (Sá 2008; Lepori et al. 2007; Bruce et al. 2004; Van Heur 2010; Crow and Dabars 2015). These strategies do not always work, however, and getting disciplines to work together substantively on problems has proven difficult (Roy et al. 2013; Yegros-Yegros et al. 2015). Part of the reason may be that institutional obstacles are only one possible dimension of what makes interdisciplinarity difficult, or of what explains why it fails when it does. Less attention has been given to the likely cognitive difficulties that pervade, constrain and even block collaborative interdisciplinary work. Such obstacles are strongly connected to the nature of scientific practice and the limits of human cognition. The need for more detailed investigation of these difficulties suggests an important role for philosophers of science in the study of interdisciplinarity.

In this paper the aim is to draw together insights from studies of scientific practice and cognitive science, which shed light on some of the cognitive aspects of what makes interdisciplinarity difficult when it is. “Cognitive obstacles” to interdisciplinarity are taken to refer to the more intellectual and technical challenges (cognitive, conceptual and methodological) that researchers face in coordinating and integrating the background concepts, methods, epistemic standards, and technologies of their respective scientific domains, particularly in the context of collaboration, in order to achieve some benefit for solving specific problems or sets of problems. Research on the domain specificity of expertise and scientific practice suggests that the domain specific (or “disciplinary”) structure of science may play an important role in explaining why interdisciplinarity is often so difficult. Using cases of collaborative interactions in two fields, (i) interactions between molecular biologists and systems biologists and (ii) interactions between ecologists and economists, we will survey some of the specific cognitive challenges such interactions face. These challenges include the opacity of domain specific practices to outsiders, conflicting epistemic values, large conceptual and methodological divides and unstructured task environments. Not all of these are novel, nor are they challenges unique to interdisciplinarity. But in the interdisciplinary cases we look at they all get their bite or intransigence insofar as they are elements and consequences of the domain specific structure of scientific practice, particularly the complex interdependencies between methods, technologies, epistemic values, stable lab environments, and cognitive structures on which functional scientific practice often depends.
Our goal is thus to illustrate the potential importance of this concept, and of philosophical and cognitive approaches in general, for understanding interdisciplinary failures and difficulties.

2 Background

At the level of scientific administration, interdisciplinarity is currently one of the most promoted policy goals, both in the natural and social sciences. This is attested to by high-level policy reports from the National Science Foundation, the National Academy of Sciences, and the European Union Research Advisory Board. Each report places a strong imperative on interdisciplinary research and on promoting the kinds of institutional regimes needed to support it.Footnote 1 The European Research Council, among others, explicitly targets its funding at interdisciplinary projects.Footnote 2 In addition, countless papers have been written advocating the importance of interdisciplinarity. Such papers often perceive interdisciplinarity as a way of democratizing science and breaking down disciplinary authority to allow outside participation (see particularly Mode 2 Science; Nowotny et al. 2001). They also share the belief that the disciplinary system is too rigid to adequately address many of the current real world problems we face, including economic development, climate change and systemic disease. Few scientists, particularly in fields like environmental science where interdisciplinarity is considered especially desirable, have not at times felt nudged or even compelled towards some sort of interdisciplinary engagement in order to obtain research funding.

Much of the relevant scholarship has focused on the institutionally-derived obstacles confronting both individuals and teams attempting to collaborate, and finding effective ways of evaluating and measuring the interdisciplinarity of current research and specific research proposals, both for allocating funds and assessing outcomes (Huutoniemi et al. 2010; Klein 2008). Such obstacles include for instance current funding allocation systems; existing peer review systems, which reportedly devalue interdisciplinary contributions; academic promotional systems, which devalue work published in interdisciplinary journals; the physical obstacles of having disciplines usually located in distinct buildings on campuses; and institutionally rigid educational systems which entrench disciplinary perspectives and values. Many of the proposed or implemented strategies for improving interdisciplinarity have thus been institutional in nature. Most important in this regard has been the redirection of funding towards collaborative interdisciplinary projects. In fact the entire research funding of universities has been reorganized around interdisciplinary projects alone.Footnote 3 Research centers designed to create space and positions for interdisciplinary work in general or amongst specific fields are increasingly common, particularly in fields related to environmental issues and sustainability. In certain cases the classical institutional structure of universities has been completely replaced with cross-disciplinary “problem-centered” institutes and schools (see for instance Arizona State University; Crow and Dabars 2015).

These policy-driven efforts, however, have not always succeeded (Klein 2010; Roy et al. 2013). According to Yegros-Yegros et al. (2015, p. 2), “Evidence on whether IDR is more or less “successful” is scarce, messy and inconclusive.” Indeed, the degree of interdisciplinarity of a project and its correlation with success or failure is hard to measure (Huutoniemi et al. 2010). Still, there is empirical evidence that interdisciplinarity, despite institutional support, is difficult and often fails even if a project is structured with interdisciplinarity or transdisciplinarity as a central part of its problem-solving strategy (Zierhofer and Burger 2007; Rhoten 2005; Pohl 2005; Metzger and Zare 1999; Evans and Marvin 2006). The quality of research done in new interdisciplinary fields like ecological economics or sustainability science, while well promoted, is not well established. Even their practitioners admit the scientific production does not necessarily have the rigor or conceptual materials, like general formal models, which disciplinary fields have (Walker and Holling 2013).

The focus on the institutional constraints of interdisciplinarity is probably not a surprise since the researchers doing this work come from sociological or policy backgrounds for which institutions are principal units of analysis and control, and for which the institutional structure of science is a clear variable of control. Some sociological theories about interdisciplinarity (such as those of Turner 2000) claim that it is institutional perpetuation and propagation rather than intellectual or cognitive factors that drive disciplinary isolation. For instance Lowe and Phillipson (2009) take the strong position that any paradigm can always be overcome by the right institutional framework by pointing to the fluid nature of scientific activity uncovered by sociologists like Turner.Footnote 4

Yet despite the preference towards addressing interdisciplinarity in terms of institutional factors, most interdisciplinarity researchers treat cognitive obstacles as on par with the institutional obstacles which confront researchers trying to collaborate (Porter et al. 2006; Gray 2008). Various papers have explored the linguistic and epistemic tensions between fields that challenge interdisciplinary research in general and in specific cases (Bracken and Oughton 2006; Turner et al. 2015; Calvert and Fujimura 2011). It is recognized that the success of institutional strategies may well depend on cognitive factors such as the conceptual or methodological distance between fields (Huutoniemi et al. 2010).

However, attempts to explore the roles epistemic divides or tensions play as obstacles to interdisciplinary work are usually pitched at a rather generic level, singling out, say, the tensions between the epistemic unity of disciplinary work and the epistemological pluralism (or pragmatism; Boix Mansilla 2010; Miller et al. 2008) required for interdisciplinary work. For the most part such analyses only touch the surface of deeper cognitive or conceptual challenges that confront collaborators, and do not draw on cognitive science theory or concepts, or deeper philosophical analysis. Boix Mansilla (2010), one of the most prominent interdisciplinarity scholars, accuses interdisciplinarity studies in general of relying too much on metaphors like “crossroads” or “trading zones” to describe interdisciplinary cognition. Such concepts provide no solid means for structuring “strong research agendas” or designing “empirically grounded programs on interdisciplinary learning and its assessment” (p. 289). As such, interdisciplinarity scholarship in Science Policy has rarely studied the more technical means through which the methodologies and conceptual frameworks of particular distinct fields can be coordinated to produce scientifically credible results, or the feasibility of such approaches given the resources researchers in these fields have at hand.

In these respects cognitive science and philosophy of science have the expertise and knowledge of scientific practices to go beyond metaphors and discover how the conceptual and cognitive structure of scientific problem solving may constrain interdisciplinary research. Some cognitive and management scientists already address the cognitive problems of managing and coordinating large multidisciplinary teams. These researchers are interested in team-based collaboration within or across disciplinary boundaries, particularly the conditions for composing and organizing teams, and the flow of tasks and activities within those teams, in order to maximize task performance (e.g Fiore 2008; see Cooke and Hilton 2015, for an up-to-date summary of current team-science research). Philosophers have not yet specifically tried to broadly address the question “Why is interdisciplinarity difficult?”, although much of the work which now exists is relevant to answering it. Philosophical discussion of interdisciplinary interaction extends back to Darden and Maull (1977) although philosophers have only relatively recently begun to investigate the subject more closely, by exploring the meaning of conceptual and methodological “integration” (O’Rourke et al. 2016), often used as the standard for identifying genuine interdisciplinary interactions, and the possibility of conceptual and methodological integration amongst specific fields, such as between evolutionary and developmental biology or cancer research (Plutynski 2013; Brigandt 2010; Brigandt and Love 2012; Love and Lugar 2013). The forms of integration that can be studied on this basis are broad and include forms of integration that take place through model-exchange rather than collaboration (e.g. Ross 2005; Grüne-Yanoff 2011; the special issue in Perspectives on Science 21(2) 2013 edited by Grüne-Yanoff and Mäki).

This latter work helps identify important methodological and conceptual gaps, and links between fields or disciplines, which helps define the nature of the problems that researchers must overcome in order to integrate concepts and methods from other fields in their practice. Such work is relevant for understanding why interdisciplinarity is difficult and what it might need to succeed. However, the question of why interdisciplinarity is difficult is one that also pertains to the broader structure or system of problem-solving practices within a field, and to the role that particular methods, conceptual frameworks and other scientific resources (like models, epistemic values, experimental practices, and the cognitive practices of handling them) play within these. This paper draws together the work of several philosophers of science who are trying to understand these elements in order to understand the practical social epistemic and cognitive conditions through which interdisciplinarity succeeds or fails (see Andersen 2010; Andersen and Wagenknecht 2013; O’Malley 2013; Nersessian and Patton 2009; Nersessian and Newstetter 2014). Certain types of conceptual integration might seem perfectly desirable on, say, explanatory grounds, but are less feasible when weighed against other constraints that affect a practice. Further, since many of the practical, epistemic and cognitive constraints researchers work under may only be visible in vivo, this paper helps advocate the importance of the ethnographic and qualitative approaches it relies on, in part at least, for the study of scientific practice in interdisciplinary or other contexts (MacLeod and Nersessian 2014). Such methods, even amongst the philosophers studying scientific practice in interdisciplinary contexts we have mentioned here, are still relatively rare.

3 The domain specificity of scientific practice

The main hypothesis of this paper is that the essentially domain specific structure of much scientific practice helps account for the difficulties of interdisciplinary work. In this section we try to develop an idea of what it means for science to be domain specific and what the broader theoretical motivation might be for treating scientific practices as domain specific. The notion that scientific activity is structured around conceptual and methodological frameworks embodying the principles and practices required for doing scientific work within a discipline is of course not a new one. Kuhn built his theory of paradigms around disciplines understood in this sense. Kuhn’s (1974, 1977) notion of a disciplinary-matrix captures the idea that certain conceptual and methodological features serve to define normal science and structure problem solving within a given discipline. His basic ideas about the role of metaphysical presumptions, symbolic generalizations, values and exemplars, and the puzzle solving nature of disciplinary work have informed modern ideas about the “intellectual” features and practices of disciplines. Institutional and “Kuhnian” conceptions of disciplines have existed side by side in the literature on disciplinarity. D’Agostino (2012) for example lists both institutional and intellectual (or conceptual and methodological) features that have commonly been used to characterize disciplines since Kuhn.

There has been something of an underlying presumption in the interdisciplinarity literature that both cognitive and institutional conceptions of discipline coincide in actual cases (see Turner et al. 2015 for instance). However the diversity of fields and practices within disciplines makes it highly doubtful that the academic institutions called “disciplines” are really coextensive with anything like Kuhn’s problem-solving units. Disciplinary matrices, where they exist, are more likely to do so at the level of fields within academic disciplines,Footnote 5 and even then fields themselves may exhibit substantial diversity in this respect (think of the heterogeneity within environmental science or nanoscience).Footnote 6 Further most philosophers of science would agree that we are unlikely to find anything as fully linguistically closed and isolated as Kuhn implies. Still some philosophers do agree that Kuhn’s ideas of disciplinarity and disciplinary practices have central relevance for understanding interdisciplinarity. Andersen (2013) for instance has argued that the tension between tradition and innovation that underlies disciplinary work on Kuhn’s view is similarly expressed in the demand for interdisciplinarity, which requires fundamental and deep levels of innovation. The role disciplinarity plays in interdisciplinary contexts has also been explored with respect to both social epistemological relations (Andersen and Wagenknecht 2013; Andersen 2010) and incommensurability in interdisciplinary communication (Holbrook 2013).

Kuhn aside, the principal proponents of domain specificity in human skills and practices can be found in cognitive science, from which the concept of domain specificity originally derives. In this field researchers use the term “domain specificity” to capture the degree to which a cognitive system or cognitive domain is specialized for just specific tasks. According to Robbins (2015), “A system is domain specific to the extent that it has a restricted subject matter, that is, the class of objects and properties that it processes information about is circumscribed in a relatively narrow way.” Domain specificity has been argued for in numerous cognitive systems at various levels of organization, such as basic color perception and visual shape analysis systems, but also in higher level expert or knowledge-based cognitive systems (like fire-fighting expertise, and also varieties of scientific expertise) which involve learned patterns of reasoning that are adapted for analyzing a particular domain and events within it. The concept of domain specificity has two basic features: (i) the narrow subject matter or classes of problems that domain-specific cognitive systems address, and (ii) their inflexibility, given the fine-tuned dedication and specialization of these systems to handling that subject matter alone well. Whether and how these features appear in scientific contexts is still an open question, but one that has been addressed from multiple directions and perspectives.

Sociology of science, for instance, supports the idea that scientific practice functions through practices specialized for limited domains of investigation or problem-solving, concentrating not so much on individual cognition as on the material and social dimensions of scientific work. For example, through intensive anthropological studies of laboratory scientific practice, it has unearthed just how situated scientific problem-solving can be within specialized material environments. Knorr-Cetina (1999) describes scientific practice as embedded within epistemic cultures, “those arrangements and mechanisms—bounded through affinity, necessity and historical coincidence—which in a given field or subfield, make up how we know what we know...that create and warrant knowledge” (1999, p. 1). Laboratory cultures composed of sets of material and communicative practices represent in this respect “bounded habitats of knowledge practice” (2007, p. 1). The importance of material environments (like laboratory environments, their organization and equipment) to scientific practice has been explored by a number of sociologists and historians such as Latour, Galison and Rheinberger. The tenor of these discussions is that scientists (particularly experimental scientists) all operate within socio-technical systems or cultures which sustain scientific activity.

This work is paralleled in cognitive science itself by work on the distributed and situated nature of scientific cognition which offers the opportunity to draw cognitive and sociological research together, by providing a framework for understanding how individual cognitive agents draw on their social and technical environments, and their own cognitive capacities and those of others, to solve specific problems. Cognitive anthropologists argue for instance that any day-to-day cognitive activity requires an external material environment to take place. Cognition cannot be reduced to pure abstract symbolic processing occurring only “in the head”. Cognition even in the form of regular arithmetic is context-dependent and distributed throughout environments through artifacts and technologies (see Lave 1988). In complex scientific contexts lab scientists learn to “think” in terms of the affordances of the technologies and equipment around them, and their own mental models of how they operate. Reasoning is substantially distributed to, and afforded by, these technical and experimental devices, as well as by specific computational and mathematical models that researchers know how to debug, correct and draw inferences from, without necessarily being able to conceptualize all parts of their operation (Nersessian 2002, 2010).

In general cognitive psychologists have found evidence of inflexibility and specialization in cognitive domains. People in general do not perform well on abstract problem-solving tasks unless those tasks are presented in contexts that embody or situate them in familiar and meaningful environments. Experts demonstrate more coherent knowledge-based schemas for their domains and are very good at effectively categorizing problems according to the principles or problem-solving strategies or heuristics that should be applied to them (Ericsson et al. 1993; Gobet and Simon 1996). The transfer of skills particularly in scientific contexts is often very difficult (e.g., Clancey 1993; Greeno 1988; Lave 1988; Suchman 1987), and task-experts have trouble transferring what may be relevant skills because current tasks are not situated in their familiar domains.Footnote 7 On the controversial dual processing view of cognition expert reasoning is system 1 rather than system 2 processing, where the latter represents a more conscious symbolic form of processing that applies general or abstract rules, and the former relies on more “intuitive” or tacit internalized responses (Kahneman and Klein 2009). This would explain why experts are often quite incapable of explaining the detailed rationales of their behavior. Shifting the burdens onto system 1 processing reduces cognitive load and makes performance cognitively manageable, whether in high pressure or high complexity contexts. Stability of environments is especially important in this regard.

This older work on expert reasoning has yet to be fully integrated with newer work in situated and distributed cognition in science. One thing approaches in cognitive psychology lack, through their tendency to break down scientific practice into basic sets of skills, is a detailed picture of how specialized the conceptual and methodological elements of scientific practice are for solving particular sets of problems. Here philosophy of science can contribute and, where possible, combine its more detailed understanding of these elements of scientific practice with insights from cognitive science and sociology. Much of philosophy of science, however, still takes Kuhn as a reference point for these discussions. Results of investigations and studies like those above would suggest a way of unfolding what might make scientific practice both specialized and inflexible when it is. To varying degrees problem-solving practices within domains are functionally dependent on, and distributed amongst, epistemic principles, conceptual tools, material, social and technological environments and practices, as well as tacit and intuitive knowledge, forming what Chang calls “systems of practice” (Chang 2012). These systems are coherent with respect to their aims, but they are also adapted to deal with specific phenomena within the constraints of what can be cognitively processed and learnt. They enable researchers to think, act and validate results with respect to those domains. Importantly, the inflexibility and specialization which cognitive scientists find in the expert competencies of scientists and others may well be a function of the technical interdependence between these principles, tools and so on, which is required for building systems capable of handling complex domain specific tasks in a scientific domain.
It may not on this basis be clear or easy for scientists within or outside the domain to see how to modify these elements in the novel directions interdisciplinarity often demands without compromising the functionality of the domain and its ability to do problem-solving work. On this basis, finding viable ways to coordinate their practices is undoubtedly a challenge for collaborators. As we will see in the next section, this last potential feature of domain specificity is common to the problems raised below, which helps implicate domain specificity in cases of interdisciplinary difficulty and failure.

4 Consequences of domain specificity for interdisciplinarity

In this section we look at some specific problems that have arisen in the course of interdisciplinary collaboration, and have been responsible for collaborative failure at least in some cases. Some of these problems are certainly familiar to interdisciplinarity scholars, but their deeper ties to the structure of scientific practice and domain specificity have not been generally investigated. None of these problems is necessarily unique to interdisciplinary interactions; they could well arise within the institutional structures we call “disciplines”, insofar as disciplines themselves are composed of different cognitive domains, even though the same subject matter may be under investigation (see for instance mathematical and field ecology). Each can be analyzed as a problem that gets its bite or intransigence from the complex interdependencies on which specialized practice often depends. Nor are they necessarily independent problems, but they do nonetheless highlight different salient features of how domain specificity might manifest itself in interdisciplinary contexts, helping to motivate the relevance and importance of the concept for interdisciplinary scholarship.

This set of problems is drawn from two cases of interdisciplinary interactions. The first are interactions between molecular biologists and systems biologists. Part of this research is based on a 5-year ethnographic study of two systems biology labs led by Professor Nancy Nersessian, as well as literature reviews of publications in the field.Footnote 8 One lab was a computational lab (lab G). Its researchers never performed any experimentation themselves and had little knowledge of experimentation. Computational labs are predominant in the field. The other was a fully functioning wet lab, in which researchers both modeled and performed their own experiments. Systems biology is a relatively new field, about 15 years old, that seeks to mathematically model large-scale biochemical systems with the aid of modern high-powered computation. These models should in theory come closer to providing the ability to predict and control, and even re-engineer, complex biological systems, including systemic disease phenomena like cancer. Systems biologists come for the most part from quantitative backgrounds such as engineering, and have no biological training. Building these models, however, requires biological expertise, experimental data and experimental validation. The expertise for these resides with molecular biologists. Most modelers need to collaborate with experimenters from molecular biology, particularly if they operate in a computational lab alone. Practitioners in molecular biology have almost no mathematical training and do very little modeling. Their skills lie in experimental manipulation. Initial studies of interactions between modelers and experimenters have found divergences in epistemic values and practices between the two groups (Calvert and Fujimura 2011; Rowbottom 2011; Fagan 2016; Green et al. 2015). These observations support the findings from this project on the difficulties facing systems biologists and molecular biologists who engage in collaborative work.

The second set of interactions explored here are between economists and ecologists. This work draws on issues that figures in these disciplines themselves identify as general problems, as well as on problems that have arisen in the course of an ongoing study of collaborations in the area of resource and environmental management (see MacLeod and Nagatsu 2016), and in a participative sociological study of a long-term collaborative project for replenishing salmon stocks in the Baltic Sea (Haapasaari et al. 2012). As yet there is not as much research on collaborative interactions between ecologists and economists, particularly in philosophical or sociological circles, which makes it less certain how deep or common the problems mentioned below actually are, although they certainly have arisen at times. Model exchange between economists and ecologists has a long history, but collaborations have been sporadic, despite the strong demand for them (Polasky and Segerson 2009, p. 410; Millennium Ecosystem Assessment 2005). In terms of background, economists and ecologists share many potentially domain general skills that should predispose them to good interactions. Mathematical modeling is common to both (though not to all of their subfields; see field ecology), and indeed the mathematical models they rely on sometimes have deep similarities, some of which are the result of this historical exchange. However, despite these facts, and despite the seemingly high possibility of communication, interactions between ecologists and economists have often proved difficult.

4.1 The opacity of domain specific practices to outsiders

One rather obvious consequence of domain specificity, particularly the complex specialization it manifests, is that the technical skills, understanding and experience required to operate within a domain can be opaque or intractable to non-specialists without adequate training. Problems at this level fuel communication problems or “language barriers” (Norton and Toman 1997), which are often identified as critical to interdisciplinary failure. If domain specificity is strong then a field’s complex set of interdependencies of methods, epistemic principles, technological practices and of course tacit knowledge can make it very hard for outsiders to understand how the system operates, or the rationale behind decisions its practitioners make. Developing the insight required to see how to modify or coordinate one’s own practices to connect with another’s in a productive and scientifically rigorous way can be very difficult, especially over a short time frame. Such complexity makes it hard for researchers to interpret or translate the actions of a collaborator in terms that make sense to them, to structure their own actions to meet the requirements of a collaborator, to anticipate each other’s actions, or to understand why an action or request of a collaborator might be on balance warranted if the time and effort costs seem large. Domain specificity thus inhibits the development of what is often called interactive expertise (Collins and Evans 2002).

The raw difficulty of sufficiently understanding a well-established and complex set of technical practices can be identified as responsible for some of the reasons why collaboration has often proved difficult in both systems biology and ecology/economics collaborations. This was a substantial problem for members of the computational lab from systems biology. It was very hard for experimenters (molecular biologists) to understand the mathematical methods systems biologists were using and why particular mathematical steps required certain data, just as it was hard for the systems biologists to have any real sense of how mathematical requirements could be translated into experimental procedures. This led to collaborative failures (MacLeod and Nersessian 2014). One problem is that mathematical model-building uses techniques that abstract and simplify biological network information, in order to generate computationally tractable parameter fitting problems. Justifications for these are based on mathematical and statistical principles. Molecular biologists have generally no expertise at all in assessing whether these really can produce adequate representations and are worth investing their time in. Lack of understanding of each other’s methods leads to fragile trust relationships that can break down when requests cannot be understood or interpreted as productive or warranted, given the resources and time that invariably have to be invested in them. In the words of one experimenter we interviewed,

the data that they [modelers] want from us is something that is not simple to generate. So if they want a Km for an enzyme we have to purify the enzyme. Then we have to create all the conditions to measure it in vitro. That’s not a simple undertaking. That’s probably six months of work. And none of us have a person sitting around who can do that for six months.... If we are going to spend six months generating what they want then we would like we need to have something that is going to come out of it. (molecular biologist)

At the same time modelers in the labs we studied underestimated at the start of their collaborations the amount of tacit, technical and biological knowledge that goes into experimentation, and the extent to which such knowledge goes beyond just “recipe-following” in experimental procedures. In particular they lacked understanding of the dependence of those procedures on sound skills and control, of their limitations, and of the technological and material constraints of the biological substances that are used. Modelers often represented biological knowledge as simply horizontal: any part could be learned when needed since biology, unlike mathematics, lacks any essential core of knowledge which needs to be mastered first. Whether this is true of biological knowledge or not, experimental practice itself, if not vertical in the way mathematics is, cannot be learned easily, since it is based on the integration and coordination of multiple kinds of skill-based, biological and technological knowledge which takes experience to acquire. As a result modelers’ practices are rarely well coordinated with constraints on experimentation. Modelers may build their models in anticipation of experimental results that are impossible to obtain for technical reasons they do not understand and cannot anticipate. As one experimenter put it,

Sometimes from the mathematical point of view, it would be nice to have some strain that doesn’t have a given enzyme. But I know as a biochemist that I cannot grow that strain, for example. There are things that are not possible and if you are just from the modeler side, sometimes you ask things that are not biologically possible. (molecular biologist)

One case of collaboration we observed in the study failed because the experimenter could never form a clear idea of what the modeler was asking for, despite much attempted communication. Models require specific information that can seem non-standard from an experimenter’s point of view and that may not fit easily with their established practices in a way they can translate or recognize. Such barriers inhibit coordination, such that modelers in many cases are left without the data they need to optimally improve their models.

In the case of ecology and economics, researchers often share mathematical education and modeling skills, as well as basic similarities in the structure of their population-based models. The barriers to understanding each other’s practices sufficiently are in turn lower, but not absent. The neo-classical economic framework can seem very unfamiliar and counter-intuitive to those not trained in it, and indeed ecologists do report struggling to understand why economists optimize the way they do, using for instance interest rate discounting factors, or why they place emphasis on specific concepts like marginal rates of return or infinite substitutability. Coordinating practices of model analysis and model construction within the expertise of both groups that fit the conceptual and mathematical constraints each field imposes can thus be difficult for both ecologists and economists who cannot fathom the reasons underlying those constraints. Haapasaari et al. (2012) report the experiences of their own collaborative project involving fisheries scientists, economists and social scientists. Many difficulties pervaded the integration of economists into the project, who were perceived by the fisheries scientists (and the social scientists) as inflexible. For instance, as one fisheries scientist put it,

Right at the beginning, it became clear that the economists did not have any motivation to play the common game, and I realized that it was no use to even try, so we had to knuckle under the fact that they have another perception of good science. (p. 6)

The economists stuck to their tried and true bioeconomic models, using optimization to determine a management strategy, despite the reservations of the fisheries scientists that typical bioeconomic models are too simplistic and optimization too blunt a resource. As one economist Haapasaari et al. cite put it, in specific appeal to opacity problems,

It is easy to underestimate another discipline, to wonder what is the difficulty there if you see only some really reduced and simplified part of it, and you don’t understand the methods, you cannot know what amount of work there is behind that work, and if you don’t know the literature of that discipline, you cannot understand what is the contribution of that study compared to previous results. (p. 6)

As such ecologists can find the theoretical framework and normative principles underlying these economic models difficult to rationalize. The resistance of economists towards modifying this framework may be interpreted as stubbornness by potential collaborators, as it was in this case, reinforcing their reputation as difficult to work with. Of course some ecologists may, with good argument, fundamentally reject the substitutability and trade-off framework, but this tends not to promote collaborative interaction. For those trying to collaborate the conceptual and normative framework of economics can be a substantial obstacle. There is no reason not to expect that opacity problems work both ways.

4.2 Large conceptual and methodological divides

Opacity problems generate mismatched communication and uncertainty in interactions between collaborators, which make it very difficult to coordinate practices in productive ways. In other cases the problems might be less directly due to a lack of technical insight into one another’s practices and more due to the fact that the conceptual and methodological distance between the cognitive domains is very large. As mentioned, philosophers often identify such divides, and sometimes propose ways, in theory at least, to bridge them. However sometimes there might be no straightforward way to translate or link models or concepts from the different domains without solving very complex problems that neither domain is well adapted to solve with its current sets of practices. Importantly, it may require significantly restructuring practices in those domains in ways which conflict with the way practices in those domains have been designed and optimized. In such cases domain specificity becomes a particularly intransigent obstacle. One particular such problem in economics/ecology collaborations is the problem of scale. Most experimental work and models in ecology are of relatively small scale compared to those in economics (working over limited spatial regions, with limited numbers of variables). This fits the range at which experimentation can be manageably carried out, and thus the range over which reliable models can be produced. Such models can guide individual land-use decisions, such as harvesting strategies for resource management. On the other hand economics usually works with larger-scale models relevant for regional, national or international policy formation built using observational data (Vermaat et al. 2005). It is at these levels that there is a demand for policy-relevant contributions from ecology to economic models. Unfortunately there are no simple reliable ways of scaling up models produced in one limited context to another larger context. As Stevens et al. (2007) put it, scaling up requires the addition of assumptions that introduce further error, while requiring complex arguments that reduce “the transparency of results and makes them difficult to explain to non-specialists (Carpenter 1998)”. On the other hand scaling up experiments to a large scale is not only an enormously expensive option, but such experiments also become difficult to control and replicate. Arguably ecology has settled on its scales of experimentation and model-building because they fit these practical and epistemic constraints well. There is no easy pathway to transforming the field. The result however is that ecological models and economic models are for the most part not in scale alignment, and thus lack conceptual compatibility. There is likely no easy way to resolve this incompatibility (see footnote 9).

At certain scales of economic analysis economics and ecology can be in temporal and spatial scale alignment. For example in the field of resource management and harvesting for individual land or resource users, such problems connecting model variables can be much more straightforward. However even here conceptual problems of these kinds may arise (MacLeod and Nagatsu 2016). For instance economic optimization readily requires growth models that are valid outside the physical situations used to build the model. Optimization algorithms survey factual as well as counterfactual possibilities. However this usually requires a larger range of validity than most models in the domain of resource management are constructed to achieve. Most are built using statistical regression techniques, and are valid only for the domain encompassed by the data used in their construction. In ecology the models that can provide better reliability are called process-based models. Process-based models attempt to model the causal processes underlying ecological growth. This enables reliable model predictions outside the range of data used to build the models and over longer time-scales, both essential factors in economic optimization. However such models require considerably more work and expertise (particularly computational and biological expertise) to produce, and thus longer time frames. Knowledge in particular of the relevant processes which determine a system’s response to future climate conditions is “extremely limited” and difficult to produce (Cuddington et al. 2013, p. 9).
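The contrast between regression-based and process-based models can be made concrete with a small numerical sketch. The example below is purely illustrative and is not drawn from any of the studies cited: it assumes a hypothetical resource whose true growth follows a logistic curve (with invented parameter values), fits a straight line by least squares to observations from a limited time window, and then compares the fitted line with the underlying process inside and outside that window. Because the logistic function here both generates the data and stands in for the process-based model, it extrapolates perfectly by construction; real process-based models would of course carry their own errors.

```python
import math


def logistic_growth(t, capacity=100.0, rate=0.5, initial=5.0):
    """Hypothetical process-based model: closed-form logistic growth.

    All parameter values are invented for illustration.
    """
    ratio = (capacity - initial) / initial
    return capacity / (1.0 + ratio * math.exp(-rate * t))


def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Observations are available only for the early growth phase, t in [0, 5].
observed_t = [0.5 * i for i in range(11)]
observed_y = [logistic_growth(t) for t in observed_t]
intercept, slope = fit_line(observed_t, observed_y)


def regression_prediction(t):
    """Statistical model: valid, at best, within the observed range."""
    return intercept + slope * t


def abs_error(t):
    """Absolute gap between the regression and the underlying process."""
    return abs(regression_prediction(t) - logistic_growth(t))


# Worst-case error inside the data range versus error far outside it.
in_range_error = max(abs_error(t) for t in observed_t)
out_of_range_error = abs_error(20.0)

print(f"max error within data range: {in_range_error:.2f}")
print(f"error at t = 20 (extrapolated): {out_of_range_error:.2f}")
```

Within the observed window the straight line tracks the growth curve reasonably closely, but well beyond it the line keeps rising past the carrying capacity that the process-based description respects. An optimization routine that surveys counterfactual harvesting schedules over long horizons would be querying the regression in exactly this unreliable region.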

There are no easy conceptual solutions which will facilitate the construction of models relevant for regional or larger policy levels that are consistent with current model-building practices. Economic and ecological processes work on different time-scales. Economic processes are fast, and recover from shocks quickly. Economic systems have high redundancy. As Norton and Toman (1997) note, these properties of economic models suit well the substitutability paradigm in economics, which treats ecological systems mostly on a small scale as sources of services that can be swapped in and out or intervened on so as to maintain a desired flow of services. Resources like a forest stand do approximately recover at growth rates mostly governed over shorter time scales by factors determined by properties of the stand rather than external factors. However larger-scale systems are not as resilient to economic activities, and respond only much more slowly to them. The tenor of calls for integration of economics and ecology is thus more or less a demand that economic models factor in ecological effects that occur over large scales or over longer time scales. Achieving this would not only require a substantial modification of established practices and methods, it would also require overcoming conceptual disagreements over the meanings of concepts like “stability” which are embedded in the kinds of models both economists and ecologists produce and the analysis they use. Most problematic of all, however, it would often require building complex nonlinear multiscale systems models. While calls for these, and in general for a “systems-level perspective” in environmental science, are persistent (see for instance Costanza et al. 2014; Norton and Toman 1997 and in general Farrell et al. 2013), such models are difficult to produce and prone to failure or superficiality. They are outside the experience of many practitioners for the most part, and frequently outside experimental capacities and available data. In all the cases above, then, the conceptual and methodological incompatibilities between the two fields are non-trivial, and not overcome easily.

4.3 Conflicts over epistemic values

Collaborating fields may harbor deep disagreements over the standards for assessing the reliability of certain scientific claims. These standards are governed by what are often called “epistemic values”. When fields collaborate, conflicts over epistemic values may arise both as problems of opacity and as problems due to the entrenched role these values play in systems of practice. Disputes over them however certainly have consequences for collaboration insofar as these values are essential to the practices of one or more collaborating fields. In the case of systems biology conflicts over epistemic values are well-known. Fagan (2016) and Green et al. (2015) for instance note that different explanatory preferences can block the acceptance of the results of mathematical modelers by molecular biologists. Modelers modeling stem-cell pluripotency aim to show how general characteristics of stem cells and cell development follow from general mathematical descriptions of cell state spaces. Fagan and her collaborators argue that the character of these explanations is deductive-nomological. Experimenters studying the same phenomenon on the other hand have a preference for detailed mechanistic explanations, which illustrate how sets of causal interactions give rise to different developmental trajectories amongst stem cells. Different views about what a valuable and reliable explanation should look like have contributed to the efforts of modelers being largely ignored by experimenters.

Other disputes and lack of collaboration between experimenters and modelers can be traced to disagreements over the ways models can be used reliably and the ways in which models can be validated for such uses (MacLeod and Nersessian 2014; Rowbottom 2011). Modelers believe models can be validated through predictive testing as reliable representations accurate enough for predicting system behavior in response to perturbations. Experimenters however are skeptical that models can obtain this kind of fidelity. Their primary concern is often with the quality and sufficiency of the underlying data used to construct a model, and whether that is representative enough of system behavior, notwithstanding good predictive testing results. Implicitly these views disagree over the power of mathematical methods of abstraction and idealization to compensate for data inaccuracies, errors and variability. To some extent these conflicts can be understood as opacity problems, since researchers might not easily see how closely tied the methodologies of a collaborating field are to a given set of values. Tacit knowledge also plays a role. Experimenters have a first-hand knowledge and understanding of biological variability and the weaknesses of data sets which are not easily communicable without benchtop experience.

Practices in both fields are built around these epistemic values and preferences in terms of how experimenters and mathematicians derive in their eyes legitimate results. In the case of assumptions about the legitimacy of idealizations and abstraction it is hard to see how mathematics could operate effectively without them. O’Rourke and Crowley (2013) treat conflicts over epistemic values as opacity problems. In this way such conflicts can be potentially addressed through managed collective workshops, which help bring forth underlying epistemic values for open discussion. But divides like these are not just opacity problems, but reflect more basic hard-to-resolve disagreements, particularly when systems of practice flow from them. Further in the case of systems biology there is not necessarily enough information to determine one way or the other whether mathematical methods can handle biological variability. The extent of biological variability is itself uncertain and many models built so far have been too simplified to really decide what modeling might be capable of. Unfortunately such conflicts reduce incentives to collaborate, which reduces the ability of the field to adjudicate these issues. In systems biology for instance initial enthusiasm for a modeling project can turn negative once experimenters begin to suspect that the models are not as reliable as modelers represent them. This lack of trust or faith in modelers in turn reduces the possibility of modelers getting the experimental information they need to increase the accuracy of their models. Overall this is another hard domain specificity problem for systems biology to resolve.

In relations between economics and ecology, such incompatibilities over epistemic values of these fields are the subject of much contention (Beder 2011), and reflect a deeper entanglement of values with domain specific systems of practice of both fields. Armsworth et al. (2009) drawing on their experience in such collaborations, but also what they have “witnessed.... when serving as authors, reviewers, editors and grant panelists where a referee from one discipline criticized a researcher from the other for their poor use of statistics,” (p. 265) claim that ecologists and economists have different approaches to statistical regression which reflect deeper background assumptions about the aims of model-building or their basic “philosophy of science.” Economists are according to them more theory-driven, ecologists more data-driven. Economists use statistical regression to test theoretical models, whereas ecologists are much more interested in using the data to derive parsimonious causal relationships between variables. They are less interested in building and testing theories. They worry much more about the validity of their models, and testing for “off-model” relationships, rather than just picking out the most substantive relationships in the data. Hence economics and ecology can fall on either side of another difficult epistemic divide; scientists who are suspicious of theory-laden approaches as distortive and biased, and those that think of data-mining or pattern recognition methods as unsubstantive, unprogressive and uninformed. These views can be tied to the general suspicions ecologists have about the justifiability of current economic theory. However, as a result, practices surrounding the use of statistical regression analysis can be very distinct.

Armsworth et al. think these are in essence just cultural differences which can be overcome with communication and education. However when such differences are in fact the result of embedded domain specific practices they may not be easy to overcome, as we have seen. In the Haapasaari case for instance the economists involved could not conceive of any scientific value to them of pursuing the data-driven integration strategy proposed by the fisheries scientists. The latter wanted to use Bayesian Belief Network analysis to integrate knowledge from all participants in the project including sociologists and assess potential fishing management strategies. Bayesian Belief Networks are graphical models that represent a set of variables connected by directed, acyclic graphs. Connections represent their probabilistic dependencies. Expert knowledge is required from each group to contribute a background set of nodes (or events) and of conditional probability distributions between connected nodes. These reflect a degree of belief and uncertainty regarding the effects to which a causal event would give rise.
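To give a sense of what such a network involves, the following is a minimal sketch of a three-node Bayesian Belief Network evaluated by direct enumeration. It is an assumption-laden illustration only: the node names (a quota decision, environmental conditions, stock recovery) and every probability are hypothetical stand-ins for expert-elicited values, and bear no relation to the actual network built in the Haapasaari et al. project.

```python
# Hypothetical network structure (a directed acyclic graph):
#   quota -> stock_recovers <- environment

# Hypothetical expert-elicited prior over environmental conditions.
P_ENVIRONMENT = {"favourable": 0.6, "unfavourable": 0.4}

# Hypothetical conditional probability table:
# P(stock recovers | quota, environment).
P_RECOVERY = {
    ("low_quota", "favourable"): 0.9,
    ("low_quota", "unfavourable"): 0.6,
    ("high_quota", "favourable"): 0.5,
    ("high_quota", "unfavourable"): 0.2,
}


def probability_of_recovery(quota):
    """Marginalize over the environment: P(recovers | quota)."""
    return sum(
        P_ENVIRONMENT[env] * P_RECOVERY[(quota, env)]
        for env in P_ENVIRONMENT
    )


def posterior_environment(quota, env):
    """Bayes' rule: P(environment | quota, stock observed to recover)."""
    joint = P_ENVIRONMENT[env] * P_RECOVERY[(quota, env)]
    return joint / probability_of_recovery(quota)


# Compare two candidate management strategies under uncertainty.
for quota in ("low_quota", "high_quota"):
    print(quota, round(probability_of_recovery(quota), 2))

# Update beliefs about the environment after observing a recovery.
print(round(posterior_environment("low_quota", "favourable"), 3))
```

Each group's expertise enters only as entries in tables like these, and the output is a set of degrees of belief about outcomes under each strategy. Nothing in the procedure tests, refines or optimizes a disciplinary model in its own terms.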

While Bayesian methods are often used in economics, the use of a BBN method in this case conflicted with the economists’ own preferences for theory-driven research and the associated standards by which research in economics is evaluated. The economists were expected to provide a model which would be used to generate a set of background distributions across different potential parameter and structural options for certain relations in the network. The performance of different simulations would be used to update conditional probabilities amongst variables in the network and form representations of uncertainty in their relations, against which different management strategies could be evaluated. No economic model would be refined, tested or optimized in any way the economists recognized as reliable through this procedure. Further the economic model would need to be integrated with unfamiliar and unrecognizable concepts from other fields with no strong economic interpretation or legitimacy. Indeed they only began to contribute to the BBN strategy once they had first published using their more traditional approach. The strong preference in economics for work adjudged theoretically relevant and valid within the field clashed with the more pragmatic willingness of the other groups to apply, integrate and collectively modify background models and concepts through a novel statistical method designed to estimate degrees of beliefs and uncertainties. This preference amongst economists for theoretical development is closely tied of course to the established practices of optimization and model-testing which operate in the field. None of these were required here. Economists were thus in a poor place to value the BBN method and recognized they would have a hard time convincing their disciplinary colleagues of its value and legitimacy. 
Hence, while BBN modeling is a very promising interdisciplinary strategy, a fairly substantial restructuring of economists’ preferences, practices and values in scientific work would seem to be required before modeling of this kind could be established within economics as both a sound use of economics and something which contributes to the field. Differences like these are unlikely to be easily overcome.

4.4 Unstructured problem-solving environments

When conceptual structures and schemas do not accurately or precisely predict events in a particular domain it has been shown, for a few professions at least, that experts are very poor at handling domain specific problems (Shanteau 1992). Expertise in such contexts is difficult to acquire insofar as processes of acquiring expertise are often processes of learning correlations between conceptual frameworks and real world events by exploring their relationships through manipulations of both. Limiting the complexity of the domain being studied, and keeping tasks relatively manageable while using conceptual frameworks which have high validity within these refined domains, increases expert performance, and the ability of experts to acquire that performance. The implication of this research for scientific practice is that high-validity environments are related to the ability of scientists to learn how to solve problems in a domain. Domain practices need to be structured in such a way as to meet these cognitive constraints.

As such, a different consequence of domain specificity for interdisciplinary work derives from a problem converse to those above: while domain specificity and the boundaries it creates might inhibit collaboration, operating without established domain-relevant problem-solving practices can be just as difficult and frustrating. Interdisciplinarity sometimes seems synonymous with the idea that researchers will somehow learn to work in more fluid open-ended problem-solving environments without adhering to disciplinary problem-solving recipes and norms. But this has to be weighed against the importance of the role that such recipes and norms play in enabling efficient and effective problem-solving. As we saw in Sect. 3, the domain specificity concept helps explain how complex cognitive problem-solving processes can take place through adaptation and specialization of practices for a relatively narrow set of phenomena that make use of our limited cognitive abilities. However in interdisciplinary contexts the domain specific task routines fields rely on to make their research cognitively tractable may no longer be operable or applicable. Task routines prescribe the sequence of steps a researcher should take in order to resolve a specific type of problem (or perform a specific type of experiment). Without these routines researchers can find themselves in the difficult position of having to invent methodologies and formulate strategies for handling problems on the spot, without tried and tested methods for unpacking and simplifying problems, and validating the outcomes. Indeed the more integrative the conceptual and methodological approach required or demanded for a problem, the more substantial an issue this may be.

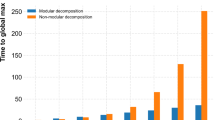

Systems biology is a particular case of this. Collaborators, particularly modelers, in systems biology have relatively unstructured problem-solving environments. Modelers, as mentioned, are trained engineers who have moved into biology in anticipation that mathematical methods and computation combined with experimental work can improve understanding of biological systems. However while certain methods and general concepts from control engineering have found life in systems biology, for the most part little from these domains transfers easily or cleanly to the biological domain. This leaves modelers often grappling with extremely challenging problems, on which neither experimenters nor their own backgrounds can give them much guidance.

Further, there is as yet no good domain specific theory which can prescribe sets of modeling routines for how to model biological systems generally that will get a modeler from start to finish. Approaches do exist, such as Biochemical Systems Theory (BST), which apply canonical sets of equations. These have been designed mathematically to capture the range of nonlinear behaviors that biological networks usually exhibit, within the degrees of freedom provided by their parameters (Voit 2000, 2013). However such approaches do not cover the variety of data situations in which modelers find themselves. Modelers may have only steady state/equilibrium data; they may have incomplete time series data; they may only have in vitro data. As G16, a graduate researcher who came to lab G from telecommunications engineering, told us, contrary to her expectation, working on one project does not necessarily prepare you for the next:

... when I talk to G10, his project, like his kind of data are different. Like he has it for... different gene knockouts and then, more of steady state data. And then like G5’s data are different. His are [not time series]...and then you could [get] creative with it, like you could say, um I’ve tried different things and then it took me a while to realize that’s not the way...that’s not what I can use. Like G10 could use it for his project because of this and that. For me, it’s not going to work because I have this dynamic data, which is different.

These differences require, for instance, choosing a modeling framework that best seems to fit what is possible with the kind of data available, finding ways to extrapolate the available data, making simplifying assumptions about network structure or parameter values to fit what is possible with the data, and modifying the modeling framework mathematically. Many of these decisions are made on a trial and error basis, experimenting with different possibilities. The result is a cognitively intensive problem solving process that is frustrating for its practitioners. Ultimately over the course of a graduate degree initial problems are simplified from ones that cannot be solved given the available data to ones that can be. At the same time model behaviors are learnt and internalized. But it is a slow process. In the words of G16,

When you were an engineer and you used to work with exact stuff and formulas, especially like in my area, it’s like a very neat little problem... So when you get here, you’re like very frustrated. Like, nothing is known to any extent [with emphasis]....after a while, you know what to expect and you know that kind of thing is not gonna... you can reason that....that kind of thing and that error in there is not gonna effect the whole system like that.

This research does not occur within well-established domain specific practices, and is characterized by its participants as very difficult and not necessarily effective as a result. Finding reliable methods for problem-solving in systems biology is high on the agenda, but hampered by the complexity of biological systems and the difficulties of collaboration mentioned in Sect. 4.1. In principle any interdisciplinary project that tries to transplant domain specific practices into a new domain these practices were not designed specifically for may put its researchers in the position of having to solve problems by inventing substantial new practices and methods on the spot for each individual problem. Given the extra cognitive demands this might not be possible with any efficiency or certainty, particularly where the phenomena are complex and researchers also need to coordinate their practices with researchers from other fields. Of course putting graduate researchers in these positions might in the end produce creative results, but it seems like a high risk strategy. In light of this the demands and expectations we might have for creative interdisciplinary work need to be cultivated with a good understanding of what the conditions for effective problem solving in practice might be.

5 Conclusion

Interdisciplinarity scholarship is in general aware of the importance of cognitive constraints, but has struggled to articulate them in any precise or detailed way. This paper has attempted to illustrate how this can be done by drawing on philosophical and cognitive accounts of scientific practice. In each of the cases above, part of what generates the problems for researchers attempting to work across disciplinary boundaries can be understood as an element or consequence of the domain specific structure of scientific practice. To some degree the intransigence or difficulty of these problems for interdisciplinarity stems from the complex interdependencies between methods, technologies, epistemic values, stable lab environments, and cognitive structures which undergird many domain specific practices, and which are an essential feature of the specialization of such practices for solving specific sets of problems in specific ways. These dependencies make it difficult to see how another cognitive domain operates effectively and efficiently in order to coordinate practices across domains, just as they make it difficult to vary practices along the dimensions interdisciplinary work might require. Such variations may disrupt, in deep and difficult-to-resolve ways, the methodological and material elements around which practices within a domain are constructed and upon which their ability to solve problems depends. Yet the example of unstructured problem-solving illustrates how essential specialized problem-solving structures can be to efficient and effective science.

Admittedly this paper provides only a cursory account of the cognitive obstacles it discusses, all of which warrant more in-depth investigation in order to unpack on what basis, and to what extent, each acts as a constraint, on philosophical and, if possible, deeper cognitive scientific grounds. Further, these obstacles are likely not unique to interdisciplinary contexts, but might arise in any context where there is an attempt to integrate or coordinate distinct domain specific activities and practices. Regardless, they stand to provide some insight, from a principally cognitive and philosophical rather than institutional point of view, into the interdisciplinary difficulties and failures that occur when such boundaries are crossed. Collectively they are evidence of the domain specificity of scientific practice, and of the need to further investigate just how domain specific scientific practice is if we are to get a good handle on the challenges to interdisciplinary work.

Having said that, while the purpose of this paper has been to illustrate specifically cognitive and philosophical problems, it has not been to exclude the importance of institutional, educational, social or other factors that play a role in both affording and inhibiting interdisciplinary work. Interdisciplinary work often crosses institutional boundaries and cognitive ones at the same time. If anything, understanding the challenges to interdisciplinary work, and how to structure policies in the most effective ways to encourage it, requires the combined work of many fields. Together these fields help us understand how, for instance, domain specific practices are further embedded by institutional and educational systems, and by personal emotional reactions and identity issues that affect attempts to implement interdisciplinary policies and work across interdisciplinary boundaries (see Boix Mansilla et al. 2012). Sociology, philosophy, psychology, education science and so on need to team up and integrate their own particular perspectives and insights into interdisciplinary interactions. Interdisciplinarity itself seems as pressing a portal as any for bringing the fields studying science in one way or another closer together.

Notes

National Science Foundation (2002), National Academy of Sciences (2002), European Union Research Advisory Board (2002). See also match-making events such as the National Academies Keck Futures Initiative (NAKFI, http://www.keckfutures.org).

ERC Frontier Research Grants Information for Applicants to the Starting and Consolidator Grant 2016 Calls. Available: http://ec.europa.eu/research/participants/data/ref/h2020/other/guides_for_applicants/h2020-guide16-erc-stg-cog_en.pdf.

For instance the Lappeenranta University of Technology, Finland has reorganized its entire internal research funding allocations such that researchers can only obtain these funds by forming interdisciplinary projects or “platforms” that engage all of its three different schools.

Indeed explicit pronouncements of such beliefs are not hard to find more widely in sociology of science. A strong sociological view such as Latour and Woolgar (1986) paints cognitive and conceptual structures or features of science as simply manifestations of cultural institutionalization, serving for instance power and authority functions, but not acting as independent constraints on practice. Anything can be wiped away if it serves the institution. Institutional or other sociological forces are always dominant and determinative.

Darden and Maull’s notion of field is widely cited in this context. It also shares some of the qualities of Kuhn’s disciplines (Darden and Maull 1977).

Tracking the links and overlaps between organizations called “disciplines”, “fields” and the actual cognitive structure of science is a difficult task that requires a lot more investigation. There are different levels of cognitive organization and institutional organization. Any discipline, field or something smaller might capture important cognitive organization, or indeed something that crosses these boundaries. Fields within disciplines may share basic cognitive organization or they may not to any substantial extent. I will use the expression “cognitive domain” to refer generally to units of cognitive organization.

This study consisted of over 100 interviews with lab participants and their collaborators, including longitudinal studies of particular laboratory projects. Many hours of lab observation were performed. Lab group meetings were recorded. Results and generalizations drawn from the analysis of this data were checked with lab participants to estimate their applicability to other labs across the field, as well as against publications and other presentations within the field.

See Benda et al. (2002) however for an attempt to create problem solving strategies that can help resolve scale mismatch problems in these areas.

References

Andersen, H. (2010). Joint acceptance and scientific change: A case study. Episteme, 7, 248–265.

Andersen, H. (2013). The second essential tension: On tradition and innovation in interdisciplinary research. Topoi, 32(1), 3–8.

Andersen, H., & Wagenknecht, S. (2013). Epistemic dependence in interdisciplinary groups. Synthese, 190(11), 1881–1898.

Armsworth, P. R., Gaston, K. J., Hanley, N. D., & Ruffell, R. J. (2009). Contrasting approaches to statistical regression in ecology and economics. Journal of Applied Ecology, 46(2), 265–268.

Beder, S. (2011). Environmental economics and ecological economics: The contribution of interdisciplinarity to understanding, influence and effectiveness. Environmental Conservation, 38(2), 140–150.

Benda, L. E., Poff, L. N., Tague, C., Palmer, M. A., Pizzuto, J., Cooper, S., Stanley, E., & Moglen, G. (2002). How to avoid train wrecks when using science in environmental problem solving. BioScience, 52(12), 1127–1136.

Boix Mansilla, V. (2010). Learning to synthesize: The development of interdisciplinary understanding. In R. Frodeman, J. T. Klein, & C. Mitcham (Eds.), The Oxford handbook of interdisciplinarity (pp. 288–306). Oxford: Oxford University Press.

Boix Mansilla, V., Lamont, M., & Sato, K. (2012). Successful interdisciplinary collaborations: The contributions of shared socio-emotional-cognitive platforms to interdisciplinary synthesis. 4S Annual Meeting. Vancouver, Canada. Retrieved from http://nrs.harvard.edu/urn-3:HUL.InstRepos:10496300.

Bracken, L. J., & Oughton, E. A. (2006). ‘What do you mean?’ The importance of language in developing interdisciplinary research. Transactions of the Institute of British Geographers, 31(3), 371–382.

Brigandt, I. (2010). Beyond reduction and pluralism: Toward an epistemology of explanatory integration in biology. Erkenntnis, 73(3), 295–311.

Brigandt, I., & Love, A. C. (2012). Conceptualizing evolutionary novelty: Moving beyond definitional debates. Journal of Experimental Zoology (Molecular and Developmental Evolution), 318B, 417–427.

Bruce, A., Lyall, C., Tait, J., & Williams, R. (2004). Interdisciplinary integration in Europe: The case of the fifth framework programme. Futures, 36(4), 457–470.

Calvert, J., & Fujimura, J. H. (2011). Calculating life? Duelling discourses in interdisciplinary systems biology. Studies in History and Philosophy of Science Part C: Studies in History and Philosophy of Biological and Biomedical Sciences.

Carpenter, S. R. (1998). The need for large-scale experiments to assess and predict the response of ecosystems to perturbation. In M. L. Pace & P. M. Groffman (Eds.), Successes, limitations, and frontiers in ecosystem science (pp. 287–312). New York: Springer.

Chang, H. (2012). Is water H2O? Evidence, realism and pluralism. Dordrecht: Springer.

Clancey, W. J. (1993). Situated action: A neuropsychological interpretation (response to Vera and Simon). Cognitive Science, 17, 87–116.

Collins, H. M., & Evans, R. (2002). The third wave of science studies: Studies of expertise and experience. Social Studies of Science, 32(2), 235–296.

Cooke, N. J., & Hilton, M. L. (Eds.). (2015). Enhancing the effectiveness of team science. Washington, DC: National Academies Press.

Costanza, R., Cumberland, J. H., Daly, H., Goodland, R., Norgaard, R. B., Kubiszewski, I., et al. (2014). An introduction to ecological economics. London: CRC Press.

Crow, M. M., & Dabars, W. B. (2015). Designing the new American university. Baltimore: Johns Hopkins University Press.

Cuddington, K., Fortin, M. J., Gerber, L. R., Hastings, A., Liebhold, A., O’Connor, M., et al. (2013). Process-based models are required to manage ecological systems in a changing world. Ecosphere, 4(2), 1–12.

D’Agostino, F. (2012). Disciplinarity and the growth of knowledge. Social Epistemology, 26(3–4), 331–350.

Darden, L., & Maull, N. (1977). Interfield theories. Philosophy of Science, 44, 43–64.

Ericsson, K. A., Krampe, R. T., & Tesch-Römer, C. (1993). The role of deliberate practice in the acquisition of expert performance. Psychological Review, 100(3), 363.

European Union Research Advisory Board. (2004). Interdisciplinarity in research. Report.

Evans, R., & Marvin, S. (2006). Researching the sustainable city: Three modes of interdisciplinarity. Environment and Planning A, 38(6), 1009–1028.

Fagan, M. B. (2016). Stem cells and systems models: Clashing views of explanation. Synthese, 193(3), 873–907.

Farrell, K., Luzzati, T., & Van den Hove, S. (Eds.). (2013). Beyond reductionism: A passion for interdisciplinarity. Oxon, UK: Routledge.

Fiore, S. M. (2008). Interdisciplinarity as teamwork: How the science of teams can inform team science. Small Group Research, 39(3), 251–277.

Gobet, F., & Simon, H. A. (1996). Recall of random and distorted chess positions: Implications for the theory of expertise. Memory & Cognition, 24(4), 493–503.

Gray, B. (2008). Enhancing transdisciplinary research through collaborative leadership. American Journal of Preventive Medicine, 35(2), S124–S132.

Green, S., Fagan, M., & Jaeger, J. (2015). Explanatory integration challenges in evolutionary systems biology. Biological Theory, 10(1), 18–35.

Greeno, J. G. (1988). Situations, mental models, and generative knowledge. In D. Klahr & K. Kotovsky (Eds.), Complex information processing: The impact of Herbert A. Simon. Hillsdale, NJ: Erlbaum.

Grüne-Yanoff, T. (2011). Models as products of interdisciplinary exchange: Evidence from evolutionary game theory. Studies in History and Philosophy of Science, 42, 386–397.

Haapasaari, P., Kulmala, S., & Kuikka, S. (2012). Growing into interdisciplinarity: How to converge biology, economics, and social science in fisheries research? Ecology and Society, 17(1), 6.

Holbrook, J. B. (2013). What is interdisciplinary communication? Reflections on the very idea of disciplinary integration. Synthese, 190(11), 1865–1879.

Huutoniemi, K., Klein, J. T., Bruun, H., & Hukkinen, J. (2010). Analyzing interdisciplinarity: Typology and indicators. Research Policy, 39(1), 79–88.

Jacobs, J. A., & Frickel, S. (2009). Interdisciplinarity: A critical assessment. Annual Review of Sociology, 35, 43–65.

Kahneman, D., & Klein, G. (2009). Conditions for intuitive expertise: A failure to disagree. American Psychologist, 64(6), 515.

Klein, J. T. (2008). Evaluation of interdisciplinary and transdisciplinary research: A literature review. American Journal of Preventive Medicine, 35(2), S116–S123.

Klein, J. T. (2010). A taxonomy of interdisciplinarity. In R. Frodeman, J. T. Klein, & C. Mitcham (Eds.), The Oxford handbook of interdisciplinarity (pp. 15–30). Oxford: Oxford University Press.

Knorr-Cetina, K. (1999). Epistemic cultures: The cultures of knowledge societies. Cambridge, MA: Harvard University Press.

Knorr-Cetina, K. (2007). Culture in global knowledge societies: Knowledge cultures and epistemic cultures. Interdisciplinary Science Reviews, 32(4), 361–375.

Kuhn, T. S. (1974). Second thoughts on paradigms. In F. Suppe (Ed.), The structure of scientific theories (pp. 459–482). Urbana, IL: University of Illinois Press.

Kuhn, T. S. (1977). The essential tension: Tradition and innovation in scientific research (1959). In T. S. Kuhn, The essential tension (pp. 225–239). Chicago: University of Chicago Press.

Latour, B., & Woolgar, S. (1986). Laboratory life: The construction of scientific facts. Princeton, NJ: Princeton University Press.

Lave, J. (1988). Cognition in practice: Mind, mathematics and culture in everyday life. Cambridge, UK: Cambridge University Press.

Lepori, B., Van den Besselaar, P., Dinges, M., Potì, B., Reale, E., Slipersæter, S., et al. (2007). Comparing the evolution of national research policies: What patterns of change? Science and Public Policy, 34(6), 372–388.

Love, A. C., & Lugar, G. L. (2013). Dimensions of integration in interdisciplinary explanations of the origin of evolutionary novelty. Studies in History and Philosophy of Science Part C: Studies in History and Philosophy of Biological and Biomedical Sciences, 44(4, Part A), 537–550.

Lowe, P., & Phillipson, J. (2009). Barriers to research collaboration across disciplines: Scientific paradigms and institutional practices. Environment and Planning A, 41(5), 1171–1184.