- Department of Educational Psychology, Competence Centre School Psychology Hesse, Institute for Psychology, Goethe University Frankfurt, Frankfurt am Main, Germany

School psychologists are asked to systematically evaluate the effects of their work to ensure quality standards. Given the different types of methods applied to different users of school psychology, measuring the effects of school psychological services is a complex task. Thus, the focus of our scoping review was to systematically investigate the state of past research on the measurement of the effects of school psychological services published between 1998 and 2018 in eight major school psychological journals. Of the 5,048 peer-reviewed articles published within this period, 623 were coded by two independent raters as explicitly referring to school psychology or counseling in the school context in their titles or abstracts. However, only 22 included definitions of effects of school psychological services or described outcomes used to evaluate school psychological services based on full text screening. These findings revealed that measurement of the effects of school psychological services has not been a focus of research despite its relevance in guidelines of school psychological practice.

Introduction

School psychology is an independent and applied field of psychology concerned with providing mental health services to students, their families, teachers, school principals and other school staff (Jimerson et al., 2007; Bundesverband Deutscher Psychologinnen und Psychologen, 2015; American Psychological Association, 2020). According to the APA “school psychologists are prepared to intervene at the individual and system level, and develop, implement and evaluate programs to promote positive learning environments for children and youth from diverse backgrounds, and to ensure equal access to effective educational and psychological services that promote health development” (American Psychological Association, 2020). They promote “healthy growth and development of children and youth in a range of contexts” that impact instruction, learning or school behavior by providing services such as “assessment and evaluation of individuals and instructional and organizational environments,” “prevention and intervention programs,” “crisis intervention,” “consultations with teachers, parents, administrators, and other health service providers,” “supervision of psychological services” and “professional development programs” (American Psychological Association, 2020).

While a large part of the available scientific literature on the scope of school psychology is consistent with the above-mentioned definition of the APA and reflects common practice in the U.S., there are considerable differences among countries (see Jimerson et al., 2007 for an extensive overview of school psychology across 43 countries). Accordingly, the International School Psychology Association (ISPA) states that “the term school psychology is used in a general form to refer to professionals prepared in psychology and education and who are recognized as specialists in the provision of psychological services to children and youth within the context of schools, families, and other settings that impact their growth and development. As such, the term also refers to and is meant to include educational psychologists (…)” (International School Psychology Association, 2021). For example, in England, Wales, and Hong Kong the term “educational psychologists” is used as an equivalent to the term school psychologists (Lam, 2007; Squires and Farrell, 2007). In this review we use the term “school psychology” in line with the above-mentioned definition provided by the International School Psychology Association (2021).

A particular characteristic of school psychology is its multifaceted nature. Practitioners in this discipline cater for the needs of different types of users (e.g., students, teachers, parents) by relying on diverse methods (e.g., counseling of individuals or groups of students or teachers, screening and diagnostics, training, consultation). Moreover, school psychologists address a broad range of psychological needs (e.g., academic achievement, mental health, and behavior) and support conducive learning environments and practices (Bundesverband Deutscher Psychologinnen und Psychologen, 2015; American Psychological Association, 2020). They provide student-level services (i.e., educational and mental health interventions and services offered for small groups or individuals) as well as system-level services (e.g., implementation and evaluation of programs for teachers). Student-level services are, however, not limited to direct interventions with students. In order to support students effectively, it is often necessary to work with adults that play a significant role in students' lives. School psychologists, therefore, often rely on indirect services with parents and teachers to promote well-being in students. These mediated actions that underlie indirect service delivery models of school psychology are often referred to as the “paradox of school psychology” following Gutkin and Conoley (1990). Again, there are considerable differences among and within countries with respect to the extent that school psychologists engage in these direct and indirect services. For instance, in some regions of the U.S. school psychologists are mostly responsible for providing direct services like psychoeducational assessments, while a related professional group of so-called “school counselors” engage in indirect services such as consultation. In contrast, in a study by Bahr et al. (2017) school psychologists from three Midwestern U.S. states reported that “problem-solving consultation was the activity on which they spent the greatest amount of their time” (p. 581). Recent developments have extended the role of school psychologists to also provide system-level services that aim to support the organizational development of schools. In this context, a lot of emphasis is being placed on preventive work and interventions with parents, educators, and other professionals that intend to create supportive learning and social environments for students (Burns, 2011; Skalski et al., 2015).

Professional associations in different countries have attempted to summarize the above-mentioned multifaceted nature of school psychology in practical frameworks and/or models. For instance, the Model for Services by School Psychologists by the National Association of School Psychologists in the U.S. (Skalski et al., 2015) distinguishes between student-level services (i.e., interventions and instructional support to develop academic skills and interventions and mental health services to develop social and life skills) and systems-level services (i.e., school-wide practices to promote learning, prevention, responsive services and family-school collaboration services). Similarly, the Department of School Psychology of the Professional Association of German Psychologists (Bundesverband Deutscher Psychologinnen und Psychologen, 2015) states that school psychologists provide support for students through individual counseling in cases of learning, developmental, and behavioral problems of students (e.g., fostering gifted students, identifying special needs in inclusive schools, etc.), as well as for schools through system-level consultation (e.g., development toward inclusive schools, violence prevention, etc.).

There have also been several theoretical proposals to conceptualize the manifold field of school psychology. For example, Nastasi (2000) suggested that this subdiscipline should be understood as a comprehensive health care service that ranges from prevention to treatment. Also, Sheridan and Gutkin (2000) advocated for an ecological framework of service delivery that takes into account multiple eco-systemic levels. Moreover, Strein et al. (2003) acknowledged that thinking of school psychology in terms of direct and indirect services has advanced the field notably. They suggest broadening the framework even further to adopt a public health perspective that evaluates both individual- and system-level outcomes. Although there are, undoubtedly, many differences between the way school psychology is understood and practiced in different countries, there seems to be consensus that the broad distinctions between individual vs. system-level and direct vs. indirect services represent an essential part of the scope of school psychological services (cf. Jimerson et al., 2007).

Just like other health professionals, school psychologists are expected to rely on evidence-based practices and evaluate the effects of their interventions to ensure quality standards (Hoagwood and Johnson, 2003; Kratochwill and Shernoff, 2004; White and Kratochwill, 2005; Forman et al., 2013; Morrison, 2013). For instance, some of the examples of professional practice included in the domain of Research and Program Evaluation of the Model for Services by School Psychologists by the NASP (Skalski et al., 2015) in the U.S. are “using research findings as the foundation for effective service delivery” and “using techniques of data collection to evaluate services at the individual, group, and systems levels” (Skalski et al., 2015, p. I-5). Similarly, the professional profile of the Department of School Psychology of the Professional Association of German Psychologists (Bundesverband Deutscher Psychologinnen und Psychologen, 2015) mentions that school psychologists engage in regular measures of documentation, evaluation and quality assurance of their work in collaboration with their superiors (Bundesverband Deutscher Psychologinnen und Psychologen, 2015, p. 6).

Given the multifaceted nature of school psychology described above, measuring the effects of service delivery is a very complex task that needs to encompass the multiple dimensions involved in school psychological practice. This makes it difficult to define the effects of school psychological services and to derive recommendations on how to operationalize and measure them in practice. Practical guidelines and/or models, such as the ones mentioned above, rarely provide any specifications about the designs, instruments, or outcome variables that should be used to implement service evaluations. Results of a survey on contemporary practices in U.S. school psychological services showed that 40% of respondents reported using teacher or student reports (verbal and written), observational data, and single-subject design procedures to evaluate their services (Bramlett et al., 2002). In contrast, the results of the International School Psychology Survey indicate that this is not the international standard. School psychologists in many countries complain about the lack of research and evaluation of school psychological services in their country (Jimerson et al., 2004) or even express the need for more studies on service evaluation (Jimerson et al., 2006). Although the survey did not collect information about (self-)evaluation practices, results suggest huge variability in evaluation and thereby in the understanding of effects of school psychological services.

Furthermore, attempts to define the term “effects of school psychological services” vary considerably and imply different ways of operationalizing measurement of effects. Some approaches define effects as significant changes in outcome variables that are thought to be a consequence of service delivery. Other approaches define effects as the impact of interventions as perceived by school psychologists themselves and/or clients (i.e., consumer satisfaction) or follow an economic point of view describing effects in relation to costs. These diverse perspectives seem to be used in parallel or even as synonyms, although they refer to different aspects of evaluation of school psychological services. For instance, Phillips (1998) suggested that the methods used by practicing school psychologists need to be investigated through controlled experimental evaluation studies conducted by researchers to determine changes in students, teachers, and/or other users of services. In this perspective an effect refers to the extent to which services can achieve the expected outcome. According to Andrews (1999) this should be labeled as efficacy and measured in experimental settings, ideally randomized controlled trials. In contrast to standardized designs commonly used in empirical research, school psychological practices are often characterized by the simultaneous application of heterogeneous methods to a broad range of psychological needs with varying frequencies. Thus, as a next step, methods that showed significant results in experimental designs need to be applied by school psychologists in practice to confirm their effectiveness in real-world settings where ideal conditions cannot be assured (Andrews, 1999; White and Kratochwill, 2005; Forman et al., 2013).

From a different perspective, some authors define the effects of school psychological services as the perceived impact of services on students, teachers, and/or other users from the perspective of school psychologists (e.g., Manz et al., 2009) or from the users' perspectives (e.g., Sandoval and Lambert, 1977; Anthun, 2000; Farrell et al., 2005). Yet another group of studies argues that evaluation results are necessary to justify the cost-effectiveness of school psychological services. For example, Phillips (1998) stated that the “value of psychological service is measured by its efficiency, i.e., its desirable outcomes in relation to its costs” (p. 269; cf. Andrews, 1999). For this purpose, effects are often operationalized via frequency counts, like the number of tests used or number of children screened for special education per month (Sandoval and Lambert, 1977), the number of students participating in services offered by school psychologists (Braden et al., 2001), or the time dedicated to certain activities.

Taken together, what exactly is meant when school psychologists are asked to systematically evaluate the effects of their work, and in this way ensure quality standards, seems to depend on the (often implicit) definitions and operationalizations of the measurement of effects each guideline or model adheres to. A possible reason for this variability might be related to the broad scope of the field of school psychology. As described in the paradox of school psychology (Gutkin and Conoley, 1990), it is often necessary to work with adults playing significant roles in students' lives to support students effectively. Thus, the impact of school psychological services on students is often indirect, mediated by actions of adults like teachers or parents who interact directly with school psychologists (Gutkin and Curtis, 2008). This poses methodological challenges for the measurement of effects of school psychological services, since both the development of the students as well as the changes in adults can be used as outcomes to define and operationalize effects in the three aforementioned perspectives.

One way of shedding light on this matter is to rely on evidence synthesis methodologies to reach a better understanding of how effects of school psychological services have been conceptualized to date, as Burns et al. (2013) propose. These authors conducted a mega-analysis summarizing past research from 47 meta-analyses to inform practice and policy on the effectiveness of school psychological services. While their findings reveal moderate and large effects for various types of school psychological services, several questions remain unanswered with respect to the measurement of the above-mentioned effects. Burns et al. (2013) charted the available evidence in great detail, focusing on the type of intervention (e.g., domain of practice: academic, social and health wellness, etc.; tier of intervention: universal, targeted, intensive, etc.) and target population (e.g., preschool, elementary, middle or high school students). However, their focus did not lie on the measurement of effects itself, such as distinguishing between effects measured from the perspective of school psychologists, service users or objective assessment measures. This is important, because the efficacy of an intervention might depend on the measurement used to capture results (e.g., consumer satisfaction survey vs. standardized test). Furthermore, the evidence summarized by Burns et al. (2013) was not limited to research explicitly conducted in the field of school psychology, but rather in the broader context of educational psychology. While there are undoubtedly several relevant studies that nurture the field of school psychology without explicitly mentioning it, it is also important to highlight research that explicitly focuses on school psychology to strengthen the adoption of evidence-based practices in this field.

Building on this work, in the present review we aimed to address these gaps and contribute to a better understanding of the measurement of effects of school psychological services by systematically investigating the state of past research on this topic. We decided to adopt a scoping review approach, a type of knowledge synthesis to summarize research findings, identify the main concepts, definitions, and studies on a topic as well as to determine research gaps to recommend or plan future research (Peters et al., 2015; Tricco et al., 2018). Compared to systematic reviews (e.g., Liberati et al., 2009), scoping reviews mostly address a broader research question and summarize different types of evidence based on heterogeneous methods and designs. There is no need to examine the risk of bias of the included evidence to characterize the extent and content of research on a topic (Peters et al., 2015; Tricco et al., 2018). Thus, scoping reviews can be used to generate specific research questions and hypotheses for future systematic reviews (Tricco et al., 2016). Our review was guided by four research questions: (1) What percentage of articles in these journals focus on the measurement of effects of school psychological services? (2) What type of articles (i.e., empirical, theoretical/conceptual, meta-analysis) have been published on this topic and what is their content? (3) How did the authors define effects of school psychological services in past research? and (4) Which instruments are used to operationalize measurement of effects?

Methods and Procedure

We followed the PRISMA extension for scoping reviews (PRISMA-ScR; Tricco et al., 2018) and provide details in the following sections.

Eligibility Criteria

We followed a similar procedure as Villarreal et al. (2017) and limited our search to eight major peer-reviewed school psychology journals: Canadian Journal of School Psychology, International Journal of School and Educational Psychology, Journal of Applied School Psychology, Journal of School Psychology, Psychology in the Schools, School Psychology International, School Psychology Quarterly, and School Psychology Review. Furthermore, following common practices in systematic review methodology, we focused on articles published between 1998 (the year after the National Association of School Psychologists of the U.S. published the Blueprint for Training to guide training and practice, Ysseldyke et al., 1997) and September 2018. In this way, we aimed to capture past research published in the last 20 years. We only focused on reports written in English, as the review team is not proficient enough in other languages (e.g., French reports in the Canadian Journal of School Psychology) to assess eligibility for this review.

Empirical research studies (i.e., articles reporting new data from quantitative or qualitative studies using either a sample of school psychologists or a sample of teachers, principals, students or other users of school psychological services), theoretical/conceptual contributions (i.e., articles on school psychological services based on the literature), or meta-analyses/systematic reviews (i.e., articles systematically analyzing results from multiple studies within the field of school psychology) were included. Editorials, commentaries, book and test reviews were excluded. Furthermore, to meet eligibility criteria for this review, articles had to explicitly refer to “school psychology” or “counseling” in the school context in their title and/or abstract and needed to address effects of school psychological services by naming at least one of the following keywords in the abstract: “evaluation/evaluate,” “effect/effects,” “effectivity,” “efficacy” or “effectiveness.” Abstracts whose only reference to school psychology appeared in a closing remark about the discussion (e.g., “consequences for school psychology will be discussed”) were excluded. To address our third and fourth research questions, at the full-text screening stage only articles that provided definitions and/or operationalizations of the effects of school psychological services were included.

To identify the range of evidence published on the effects of school psychological services we included studies conducted with school psychologists as well as articles related to all kinds of direct and indirect services. No selection limitations were imposed regarding the method, design, participants, or outcomes of the studies.

Information Sources, Search, and Selection Process

The first author and a research assistant hand screened the journals' homepages from June to September 2018. The selection process consisted of three steps. First, screening of titles and abstracts of all published articles was completed through independent double ratings by the first author and a research assistant to identify papers explicitly related to school psychology (search strategy: “school psychology” OR “counseling” in the school context). Articles were coded as included or excluded in an Excel sheet. Moreover, the included articles were coded as empirical, theoretical/conceptual, or meta-analysis/systematic review. Inter-rater reliability values based on Cohen's kappa were estimated with the software package psych for R (Revelle, 2018). Second, the abstracts of the selected articles, even those with inclusion ratings from only one rater, were screened once again by the first author to identify articles on effects related to school psychological services (search strategy: evaluate* OR effect* OR effect* OR efficacy). Third, full texts of all included articles were screened by the first author.
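For readers unfamiliar with the statistic, the following is a minimal sketch of how Cohen's kappa and percent agreement can be computed for two raters' include/exclude codes. It is written in plain Python for illustration only and is not the psych package routine used in the review; the function name `cohens_kappa` and the ten example ratings are hypothetical and are not data from this study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical codes on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items that both raters coded identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions,
    # summed over all categories used by either rater.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    p_chance = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if p_chance == 1.0:
        return 1.0  # both raters used one and the same category throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical include/exclude codes for ten abstracts (illustration only).
rater_1 = ["in", "in", "out", "out", "out", "in", "out", "out", "in", "out"]
rater_2 = ["in", "out", "out", "out", "out", "in", "out", "in", "in", "out"]

kappa = cohens_kappa(rater_1, rater_2)
percent_agreement = 100 * sum(a == b for a, b in zip(rater_1, rater_2)) / len(rater_1)
print(f"kappa = {kappa:.2f}, percent agreement = {percent_agreement:.0f}%")
```

Because kappa corrects for agreement expected by chance, it can be noticeably lower than percent agreement when one category (here, exclusion) dominates.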

If access to full texts was restricted through the journals' homepages, we used electronic databases (e.g., PsycARTICLES), search engines (e.g., Google), interlibrary loan services (e.g., Subito documents library), or contacted the authors via ResearchGate to gain full access.

Data Charting Process, Data Items, and Synthesis of Results

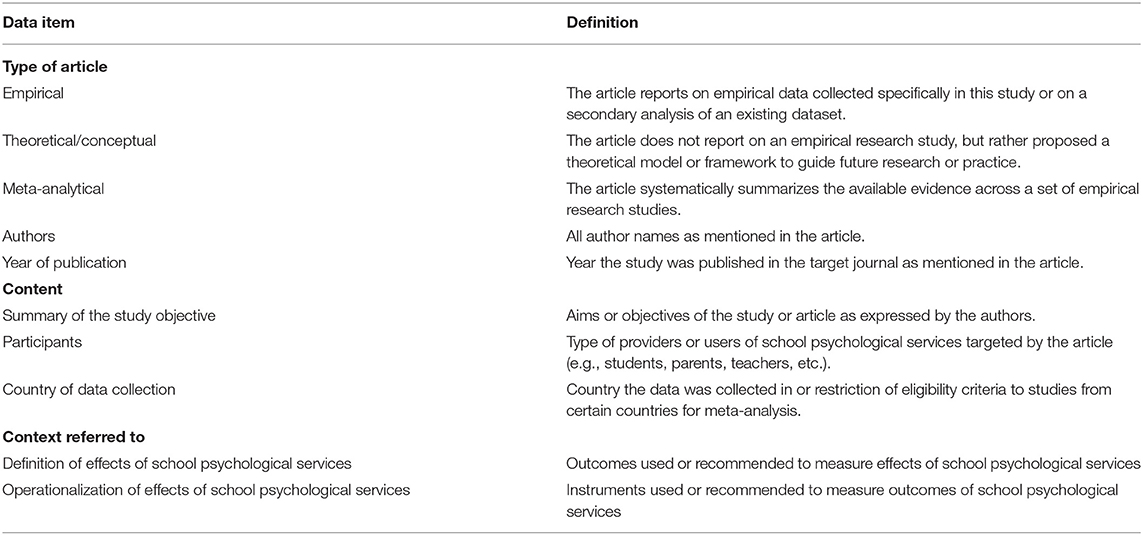

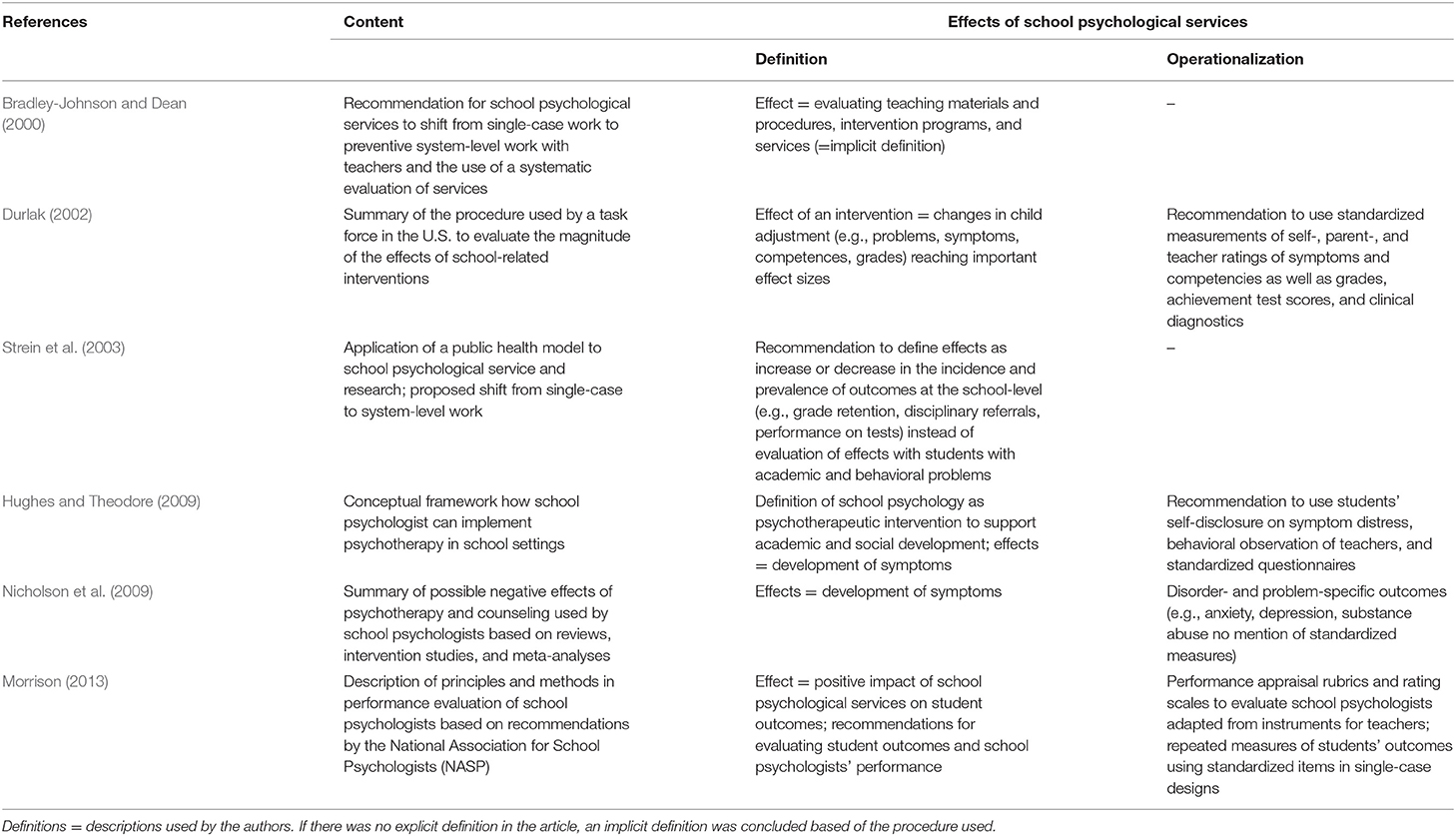

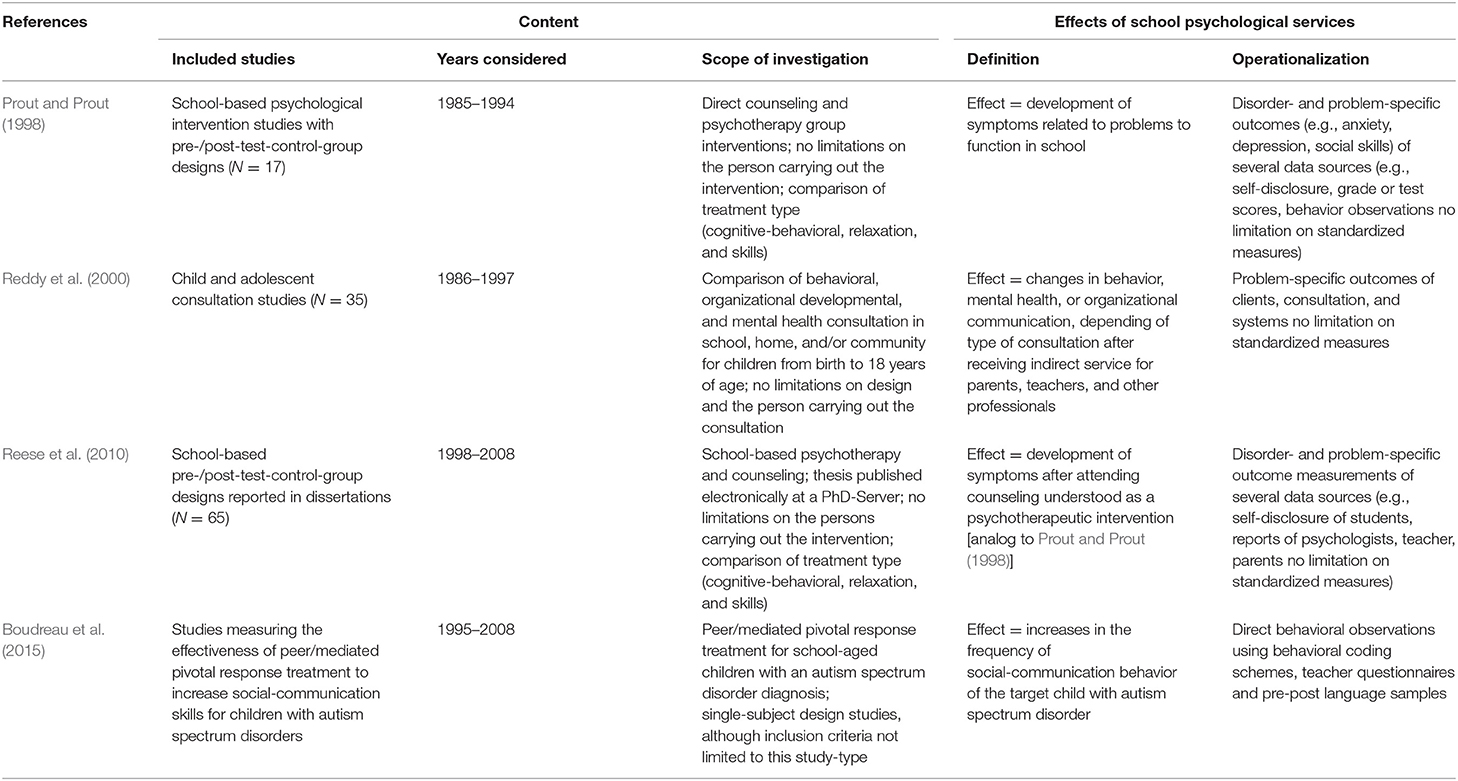

The first author charted data from the included articles in an Excel sheet consisting of the following data items: type of article (i.e., empirical, theoretical/conceptual, or meta-analytical), authors, year of publication, content (i.e., summary of the study objective, participants and country of data collection or context referred to), definition of effects of school psychological services (i.e., explanation of outcomes used or recommended to measure effects of school psychological services), and operationalization of effects of school psychological services (i.e., instruments used or recommended to measure outcomes). Table 1 provides further details on each of these data items.

Based on the data charting form, we summarized our findings in a narrative manner and identified research clusters and gaps addressing our research questions. Specifically, for the category “effects of school psychological services,” we proposed a first categorization into thematic clusters grouping different types of definitions, based on the information we extracted from full texts during data charting. This categorization was then double-checked by revising the full texts to make sure that each of the included articles was accurately allocated to one of the proposed thematic clusters.

Results

Search and Selection Process

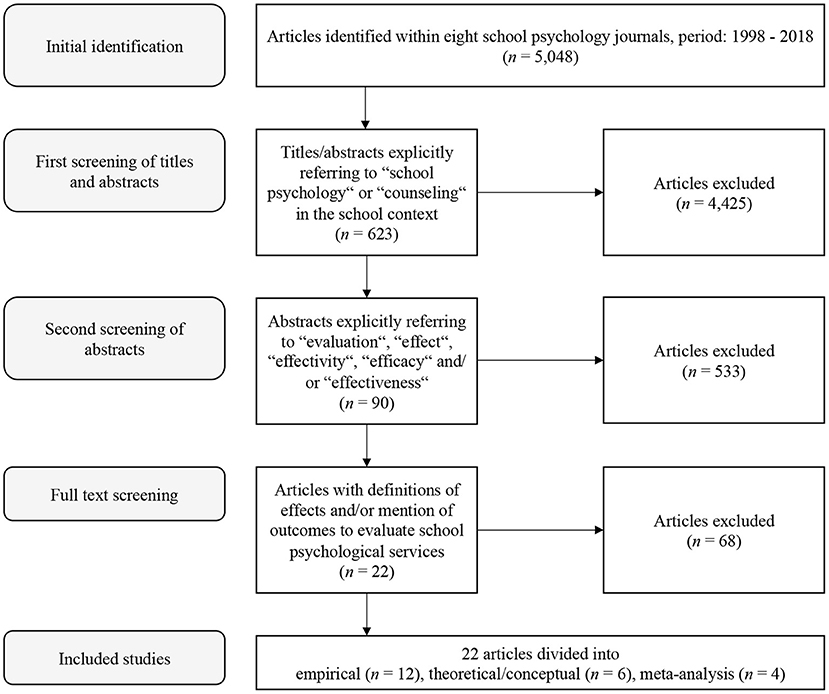

Figure 1 shows the results of the search and selection process. Of the 5,048 references that we initially identified, 4,425 were excluded during the first title and abstract screening round.

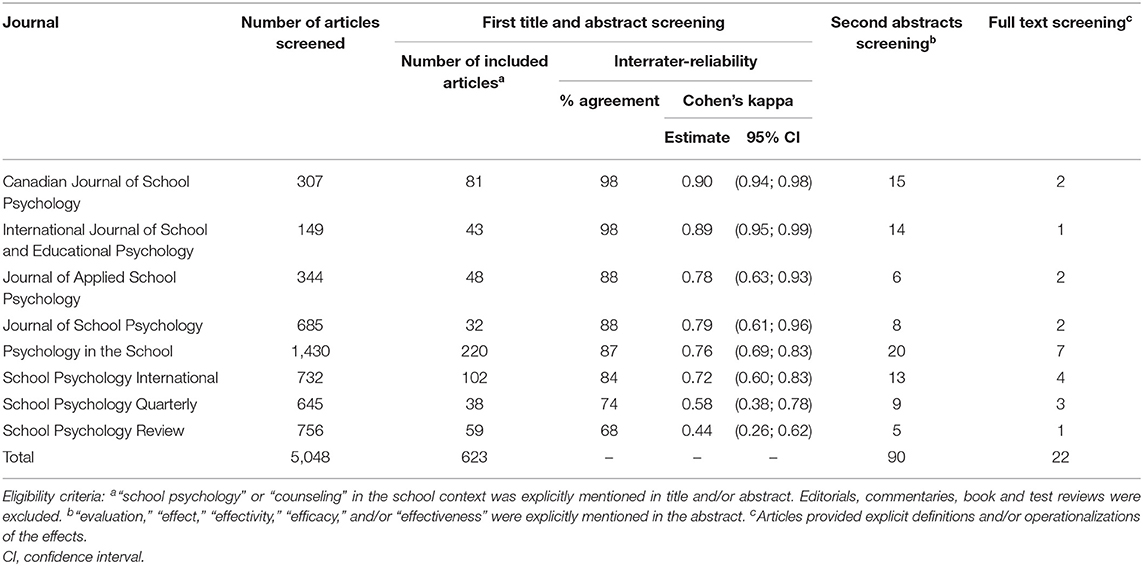

Table 2 presents further information on the articles screened from each of the eight journals targeted in this review, as well as inter-rater reliability values based on percent agreement and Cohen's kappa (two independent ratings on 5,048 reports). There was almost perfect agreement between the two raters' judgements on two journals (κ = 0.89–0.90), substantial agreement on four journals (κ between 0.72 and 0.79), and moderate agreement on the remaining two journals (κ = 0.44 and 0.58), following the benchmarks of Landis and Koch (1977). Percent agreement ranged from 68 to 98%.
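The verbal labels above follow the benchmark bands proposed by Landis and Koch (1977). As an illustrative sketch, the mapping from a kappa value to its label can be written as follows; the function name `landis_koch_label` is hypothetical, and the band boundaries are the conventional ones (0.20, 0.40, 0.60, 0.80).

```python
def landis_koch_label(kappa):
    """Map a kappa value to the Landis and Koch (1977) agreement label."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0:
        return "poor"
    # Upper bound of each band, in ascending order.
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper_bound, label in bands:
        if kappa <= upper_bound:
            return label


# Kappa values reported in the text for the journal-level ratings.
for value in (0.89, 0.90, 0.72, 0.79, 0.44, 0.58):
    print(value, landis_koch_label(value))
```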

In the second screening stage, a further 533 articles were excluded as they did not meet the inclusion criteria for this review (see Figure 1). The remaining 90 articles moved on to the full text screening stage. In this final step of the selection process, we identified 22 articles with explicit definitions or mention of effects of school psychological services. The remaining 68 articles were excluded (see Figure 1).

Percentage of Articles on the Effects of School Psychological Services

To answer research question 1, two rounds of title and abstract screening revealed that 1.78% of articles (i.e., 90 of 5,048) published between January 1998 and September 2018 in the eight peer-reviewed journals targeted by this review explicitly referred to the measurement of effects of school psychological services in their title or abstract. However, only 0.44% (i.e., 22 of 5,048) included definitions of effects of school psychological services or described outcomes used to evaluate school psychological services based on full text screening. See Table 2 for the number of included articles per journal.
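The counts reported for the selection process can be cross-checked with simple arithmetic. The sketch below uses only the figures stated in the text; the variable names and the helper `share_percent` are hypothetical and introduced for illustration.

```python
# Counts reported in the search and selection process.
identified = 5048
excluded_first_screening = 4425   # first title and abstract screening
excluded_second_screening = 533   # second abstract screening
excluded_full_text = 68           # full text screening

# Articles remaining after each stage.
after_first = identified - excluded_first_screening
after_second = after_first - excluded_second_screening
included = after_second - excluded_full_text


def share_percent(part, whole):
    """Share of `part` in `whole`, expressed as a percentage."""
    return 100 * part / whole


print(after_first, after_second, included)
print(f"{share_percent(after_second, identified):.3f}% reached full text screening")
print(f"{share_percent(included, identified):.3f}% were included")
```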

Type and Content of Articles on the Effects of School Psychological Services

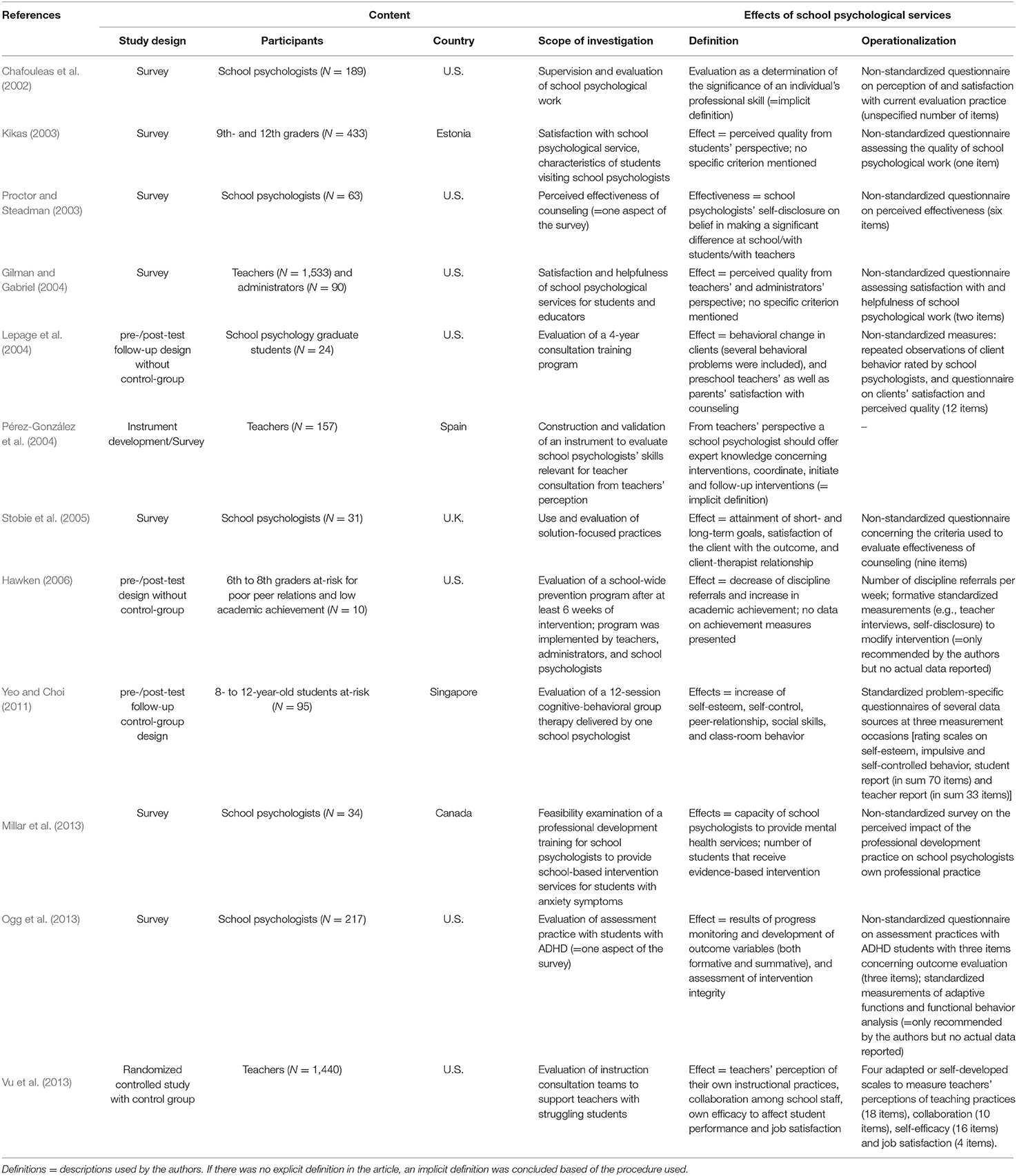

Research question 2 intended to explore what type of articles had been published on the measurement of effects of school psychological services. Of the 22 articles that met inclusion criteria for this review, we identified 12 empirical research reports, six theoretical/conceptual articles, and four meta-analyses published between 1998 and 2015. The content covered varied considerably from article to article and is described with a short summary of each report in Tables 3A–C.

Within the 12 empirical articles, eight articles used a survey technique (in one article the survey is part of an instrument development method) and four articles described longitudinal intervention studies. The survey participants were mostly school psychologists (5 of 8 articles); one survey was conducted with students and two with teachers. The four (quasi-)experimental longitudinal studies investigated the effects of interventions for students (2 of 4), school psychology students (1 of 4) and teachers (1 of 4). Based on different aspects of the effects of school psychological services investigated by each study, the content covered across articles can be summarized as follows: (1) articles about school psychological evaluation practices in general from school psychologists' perspective (4 of 12: Chafouleas et al., 2002; Stobie et al., 2005; Millar et al., 2013; Ogg et al., 2013), (2) one article on the evaluation of school psychological skills from teachers' perspective (Pérez-González et al., 2004), (3) articles on the efficacy and/or effectiveness of special interventions or trainings (3 of 12: Lepage et al., 2004; Hawken, 2006; Yeo and Choi, 2011), and (4) articles about satisfaction or perceived effectiveness of school psychological services (4 of 12: Kikas, 2003; Proctor and Steadman, 2003; Gilman and Gabriel, 2004; Vu et al., 2013).

Of the six theoretical/conceptual articles, three reports contained conceptual frameworks of the effects of school psychological services (Bradley-Johnson and Dean, 2000; Strein et al., 2003; Hughes and Theodore, 2009), two articles recommended methodological proposals to assess effects of services (Durlak, 2002; Morrison, 2013), and one critically discussed possible positive and negative effects of school psychological services (Nicholson et al., 2009).

Within the category of meta-analyses, three reports summarized effect sizes for direct school-based psychological services: Prout and Prout (1998) drew on 17 empirical group studies, Reese et al. (2010) on 65 empirical group studies, and Boudreau et al. (2015) on five single-subject-design studies. The fourth investigated the overall effect of 35 studies on indirect services (Reddy et al., 2000). No limitation was placed on the persons carrying out the intervention, type of services, or the setting. Hence, there was no explicit focus on school psychology in any of the meta-analyses.

Definitions of Effects of School Psychological Services

The definitions used to operationalize effects of school psychological services within the included 22 articles (see Table 3) indicate four thematic clusters (research question 3): (1) development of various psychological outcomes at the student level, (2) development of school/system-level outcomes, (3) consumer satisfaction, and (4) no explicit definition.

In relation to the first thematic cluster, the largest share of articles (i.e., 11 of 22) defined the effects of school psychological services as development of psychological outcomes at the student level. Three empirical articles (Lepage et al., 2004; Yeo and Choi, 2011; Ogg et al., 2013), four theoretical/conceptual articles (Durlak, 2002; Hughes and Theodore, 2009; Nicholson et al., 2009; Morrison, 2013), and all four meta-analyses (Prout and Prout, 1998; Reddy et al., 2000; Reese et al., 2010; Boudreau et al., 2015) fell into this category. However, given the fact that the four meta-analyses included counseling and consultation studies not necessarily delivered by school psychologists, the results should be interpreted with caution. Psychological outcomes addressed in the included articles predominantly focused on symptoms of mental health and/or academic or social competencies of students with disorders or at-risk students, depending on the context of the study. Mostly, development was equated to a positive change of these outcomes as evidenced through an increase or decrease of outcome scores based on data provided by pre-/post-test study designs. While some articles focused on development in a single subject or participant group receiving an intervention, others compared the development of an intervention group to a control group.

The second thematic cluster defined effects of school psychological services as the development of school/system-level outcomes and was represented by four of the 22 included articles (empirical: Hawken, 2006; Millar et al., 2013; Vu et al., 2013; theoretical/conceptual: Strein et al., 2003). These articles focused on the number of disciplinary referrals, grade retention frequency data, the capacity of school psychologists to provide services and the number of students receiving evidence-based interventions, and teachers' perceptions of the effects of consultation teams on their work and job satisfaction. A positive development indicating the effectiveness of school psychological services was therefore equated with a decrease or increase of these variables. The meta-analysis by Reddy et al. (2000), which was already categorized in the first cluster, can also be included within this category of school-level effects as it measures the overall effect of consultation on organizational development (e.g., improvement in communication or climate) in addition to other outcomes.

The third thematic cluster that defined effects as consumer satisfaction was solely represented by three empirical articles. While two studies focused on clients' perceived quality of the received service (Kikas, 2003; Gilman and Gabriel, 2004), the remaining study used school psychologists' self-disclosure of service efficacy as an outcome measure (Proctor and Steadman, 2003).

Finally, three of the 22 articles did not give any explicit definition of the effects of school psychological services (empirical: Chafouleas et al., 2002; Pérez-González et al., 2004; theoretical/conceptual: Bradley-Johnson and Dean, 2000). Implicitly, these articles referred to consumer satisfaction and evaluation practices as part of school psychological professional standards. Another article that we were unable to allocate into one of the categories was the study by Stobie et al. (2005). The authors defined effects of school psychological services in a broad manner as the attainment of goals without naming specific outcome variables.

Operationalization of Effects of School Psychological Services

The instruments used to investigate effects (research question 4) were mainly disorder- and problem-specific measurements or satisfaction surveys. Questionnaires with rating scales were the most commonly used instruments. Common questions, for example, asked users about the helpfulness of and satisfaction with school psychological services for children and/or educators (e.g., Gilman and Gabriel, 2004) or the overall quality of the work of school psychologists (e.g., Kikas, 2003). Similarly, school psychologists' self-perception was measured by investigating, for example, whether practitioners believed that they were making a difference at their school, that they were effective with students and teachers, and whether they thought that teachers, parents, and administrators were knowledgeable about their skills and abilities (e.g., Proctor and Steadman, 2003). Ten of the 12 empirical articles used non-standardized questionnaires specifically developed or adapted for each study. The number of items used to evaluate effects varied widely, from one item in Kikas (2003) to 18 items in Vu et al. (2013). Only one empirical paper used standardized questionnaires (Yeo and Choi, 2011), and one study used a behavioral observation technique (Lepage et al., 2004). All four meta-analyses included standardized and non-standardized outcome measures from several data sources (questionnaires, behavioral observation, school or clinical reports). The two theoretical/conceptual articles describing procedures and methods for evaluating school psychological services and school-related interventions recommended using standardized measurements (Durlak, 2002; Morrison, 2013).

Discussion

The present study aimed to contribute to a better understanding of the measurement of effects of school psychological services by conducting a scoping review of scientific articles published between 1998 and 2018 in eight leading school psychology journals. Only about 0.44% of all articles published within this period (i.e., 22 of 5,048) met the inclusion criteria for our review by providing definitions and/or operationalizations of the effects of school psychological services. This shows that measurement of effects of school psychological services has, to date, been addressed in a very limited way by the scholarly community in the field of school psychology. This is surprising given that professional practice guidelines ask school psychologists to evaluate the efficacy of their services to ensure quality standards. However, addressing this issue is a complex task given the multiple users, intervention methods, and psychological needs involved in school psychological practice (e.g., paradox of school psychology, Gutkin and Conoley, 1990). This might explain why such a limited percentage of past research has tried to tackle this issue. Moreover, given these difficulties, researchers might neglect to collect primary data on the effects of school psychology, opting instead to focus on aspects linked to school psychological work such as the effects of interventions or the usability of diagnostic tools. Consequently, if such studies did not use our keywords in their abstracts, they were not included in our review.
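The proportion of included articles can be recomputed directly from the two counts reported above. The following is a minimal sketch of that arithmetic; the variable names are illustrative and not part of the review's materials.

```python
# Counts as reported in the review; variable names are illustrative.
total_articles = 5048     # peer-reviewed articles published 1998-2018
included_articles = 22    # articles meeting the inclusion criteria

# Share of all published articles that met the inclusion criteria.
share = included_articles / total_articles
share_percent = round(share * 100, 2)

print(f"{included_articles} of {total_articles} articles = {share_percent}%")
```

Rounding to two decimals is a presentation choice; the unrounded value lies between 0.43% and 0.44%.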

Nevertheless, the 22 studies that we identified provide a starting point to guide school psychologists in evaluating the effects of some of the dimensions of their work. The six theoretical/conceptual articles lay the groundwork by proposing frameworks, recommending methodological steps, and highlighting critical aspects that need to be considered when evaluating the effects of school psychological services. Three empirical studies conducted by researchers in experimental settings as well as four meta-analyses report information on measuring effects of school psychological services, with the limitation that interventions were not necessarily carried out by school psychologists. In line with Andrews (1999), this evidence describes the impact school psychological services may have under ideal conditions in experimental settings. In fact, only nine of the 22 studies included in our review were conducted with school psychologists. This result highlights a research gap in the field of “effectiveness-studies” (Andrews, 1999), that is, the application of methods supported by experimental research findings in practical non-experimental real-world settings. If school psychologists are required to engage in evidence-based practices and evaluate the efficacy of their work (White and Kratochwill, 2005; Skalski et al., 2015), it is imperative that they can rely on scientific evidence obtained from real-world studies conducted with practicing school psychologists, not only on findings from experimental studies implemented by researchers. Empirical (quasi-)experimental studies conducted by researchers and/or practitioners with school-aged samples were the most frequently published papers in the eight leading school psychology journals from 2000 onward (Villarreal et al., 2017; cf. Begeny et al., 2018).
Further research should aim to confirm the effects of these (quasi-)experimental studies in non-experimental settings by including practicing school psychologists as potential professionals delivering the investigated interventions. The contributions by Forman et al. (2013) with respect to the development of implementation science in school psychology can provide a valuable starting point in this respect. Also, the ideas proposed by Nastasi (2000, 2004) of creating researcher-practitioner partnerships are important to move the field forward and enable school psychologists to function as scientist-practitioners (Huber, 2007). Only if researchers and practitioners work together to increase the adoption of evidence-based practices can common obstacles, such as the reduced importance of research in school psychological practice, be tackled to improve service delivery (Mendes et al., 2014, 2017).

In terms of the definitions used to refer to effects of school psychological services, most articles focused on the development of psychological outcomes at the student level such as symptoms, behaviors, or competences (i.e., 11 of 22). Only a few studies understood effects as the development of school/system-level outcomes such as discipline referrals (i.e., 4 of 22) or as consumer satisfaction of service users (i.e., 3 of 22), or provided no explicit definitions (i.e., 4 of 22). No studies with an economic perspective on the definition of effects were identified. Thus, available definitions capture single aspects of the multiple dimensions involved in school psychological practice. The effects of direct student-level services are undoubtedly a core component of the efficacy of school psychological services, but more research is needed to guide practitioners with respect to the evaluation of indirect services at the school level and indirect mediation effects of adults, who work with school psychologists, on outcomes at the student level (cf. Gutkin and Curtis, 2008). Following the suggestion by Strein et al. (2003) to adopt a public health perspective may help researchers take a broader view of the evaluation of effects of school psychological services.
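As a quick consistency check, the category counts given in this paragraph can be tallied to confirm that they add up to the 22 included articles and to express each category as a share. This is only an illustrative sketch: the dictionary labels paraphrase the categories named in the text and are not an official coding scheme.

```python
# Article counts per definition category, as reported in the paragraph above.
# The labels are shorthand chosen for this sketch.
definition_counts = {
    "student-level psychological outcomes": 11,
    "school/system-level outcomes": 4,
    "consumer satisfaction": 3,
    "no explicit definition": 4,
}

# The four categories should account for every included article.
total = sum(definition_counts.values())

# Share of included articles per category.
shares = {label: count / total for label, count in definition_counts.items()}

for label, count in definition_counts.items():
    print(f"{label}: {count} of {total} ({shares[label]:.0%})")
```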

Regarding the operationalization of effects of school psychological services, we identified a mismatch between theoretical and conceptual proposals of “what should be done” and “what has actually been done” as reported by the available evidence. While theoretical/conceptual articles recommended the use of standardized measures, in practice ten of 12 empirical studies used non-standardized instruments, reported the reliability of these instruments only in some cases, and occasionally included only one item. Also, the four meta-analyses did not limit their inclusion criteria to studies using standardized measures. This finding is concerning, as the methodological quality of instruments determines the extent to which effects of school psychological services may be captured. Thus, empirical research in this field should lead by example and use reliable and valid assessment instruments.

Our results need to be interpreted with caution, taking at least two limitations into account. First, to be included in our scoping review, studies had to explicitly refer to the concepts “school psychology” or “counseling” in the school context in their title and/or abstract. We were interested in exploring how the effects of school psychological services are conceptualized and measured within this applied field of psychological research. However, school psychology often relies on contributions from other related research fields (e.g., counseling research, clinical psychology). Given our eligibility criteria, this kind of research was not included in our review, although it also plays a role in guiding evaluations of the effects of school psychological services. Readers should, therefore, keep in mind that the evidence summarized in this review only refers to research explicitly associated with the field of school psychology. This limitation is especially important for international readers, as different professional titles are used worldwide to refer to psychologists who cater to the needs of students, teachers, and other school staff. In European countries, for example, the term “educational psychologist” is often used as a synonym of “school psychologist.” As our review focused on articles that explicitly mentioned the terms “school psychology” or “counseling” in the school context in their title and/or abstract, it is possible that we excluded relevant publications using derivations of the term “educational psychology.”

Second, our review is limited to past research published in eight leading school psychology journals that predominantly focus on contributions by researchers and samples located in the U.S. (see Begeny et al., 2018 for a similar approach). This decision certainly limits the generalizability of our results. Therefore, we ask readers to keep in mind that our findings might show an incomplete picture, and we encourage future research to replicate our study with additional information sources.

Conclusion

This scoping review contributes toward a better understanding of how the measurement of effects of school psychological services has been conceptualized by past research in major school psychology journals. The results show very limited research on this topic despite the relevance of evaluation in guidelines of school psychological practice. We systematically identified, summarized, and critically discussed the information from 22 articles that may serve as a guide for policymakers and school psychologists aiming to evaluate the effects of their services informed by scientific evidence. According to our results, the definitions of the effects of school psychological services only capture some aspects of the multiple dimensions involved in school psychological practice, and the methodological quality of the instruments used to assess efficacy needs to be improved in future studies. It seems that school psychologists can rely on a large number of interventions with experimental research support, but studies investigating fidelity and effects of these interventions in practical non-experimental school psychological settings are less common. Overall, the results represent a starting point to conceptualize measurement of effects of school psychological services. More research is urgently needed to provide school psychologists with evidence-based tools and procedures to assess the effects of their work and ensure the quality of school psychological services.

Author Contributions

The initial identification process on the journals' homepages and the first screening of titles and abstracts was done by BM. The second screening of the abstracts, the full text screening, and data charting was done by BM. Syntheses of our results were done by BM, AH, NV, and GB. All authors contributed to the article and approved the submitted version.

Funding

The review was supported by the positions of the authors at the Competence Centre School Psychology Hesse at the Goethe-University Frankfurt, Germany.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. Reports published in the Canadian Journal of School Psychology and the International Journal of School and Educational Psychology were screened in January 2021 during the review process, as recommended by one of the reviewers, to extend our search.

References

American Psychological Association (2020). School Psychology. Available online at: https://www.apa.org/ed/graduate/specialize/school (accessed September 10, 2020).

Andrews, G. (1999). Efficacy, effectiveness and efficiency in mental health service delivery. Aust. N. Z. J. Psychiatry 33, 316–322. doi: 10.1046/j.1440-1614.1999.00581.x

Anthun, R. (2000). Quality dimensions for school psychology services. Scand. J. Psychol. 41, 181–187. doi: 10.1111/1467-9450.00186

Bahr, M. W., Leduc, J. D., Hild, M. A., Davis, S. E., Summers, J. K., and Mcneal, B. (2017). Evidence for the expanding role of consultation in the practice of school psychologists. Psychol. Schools 54, 581–595. doi: 10.1002/pits.22020

Begeny, J. C., Levy, R. A., Hida, R., and Norwalk, K. (2018). Experimental research in school psychology internationally: an assessment of journal publications and implications for internationalization. Psychol. Schools 55, 120–136. doi: 10.1002/pits.22070

Boudreau, A. M., Corkum, P., Meko, K., and Smith, I. M. (2015). Peer-mediated pivotal response treatment for young children with autism spectrum disorders: a systematic review. Can. J. School Psychol. 30, 218–235. doi: 10.1177/0829573515581156

Braden, J. S., DiMarino-Linnen, E., and Good, T. L. (2001). Schools, society, and school psychologists: history and future directions. J. School Psychol. 39, 203–219. doi: 10.1016/S0022-4405(01)00056-5

Bradley-Johnson, S., and Dean, V. J. (2000). Role change for school psychology: the challenge continues in the new millennium. Psychol. Schools 37, 1–5. doi: 10.1002/(SICI)1520-6807(200001)37:1<1::AID-PITS1>3.0.CO;2-Q

Bramlett, R. K., Murphy, J. J., Johnson, J., Wallingsford, L., and Hall, J. D. (2002). Contemporary practices in school psychology: a national survey of roles and referral problems. Psychol. Schools 39, 327–335. doi: 10.1002/pits.10022

Bundesverband Deutscher Psychologinnen und Psychologen (Professional Association of German Psychologists) (2015). Schulpsychologie in Deutschland – Berufsprofil [School psychology in Germany – job profile]. Available online at: https://www.bdp-schulpsychologie.de/aktuell/2018/180914_berufsprofil.pdf (accessed September 10, 2020).

Burns, M. K. (2011). School psychology research: combining ecological theory and prevention science. School Psychol. Rev. 40, 132–139. doi: 10.1080/02796015.2011.12087732

Burns, M. K., Kanive, R., Zaslofsky, A. F., and Parker, D. C. (2013). Mega-analysis of school psychology blueprint for training and practice domains. School Psychol. Forum 7, 13–28.

Chafouleas, S. M., Clonan, S. M., and Vanauken, T. L. (2002). A national survey of current supervision and evaluation practices of school psychologists. Psychol. Schools 39, 317–325. doi: 10.1002/pits.10021

Durlak, J. A. (2002). Evaluating evidence-based interventions in school psychology. School Psychol. Q. 17, 475–482. doi: 10.1521/scpq.17.4.475.20873

Farrell, P., Jimerson, S. R., Kalambouka, A., and Benoit, J. (2005). Teachers' perception of school psychologists in different countries. School Psychol. Int. 26, 525–544. doi: 10.1177/0143034305060787

Forman, S. G., Shapiro, E. S., Codding, R. S., Gonzales, J. E., Reddy, L. A., Rosenfield, S. A., et al. (2013). Implementation science and school psychology. School Psychol. Q. 28, 77–100. doi: 10.1037/spq0000019

Gilman, R., and Gabriel, S. (2004). Perceptions of school psychological services by education professionals: results from a multi-state survey pilot study. School Psychol. Rev. 33, 271–286. doi: 10.1080/02796015.2004.12086248

Gutkin, T. B., and Conoley, J. C. (1990). Reconceptualizing school psychology from a service delivery perspective: implications for practice, training, and research. J. School Psychol. 28, 203–223. doi: 10.1016/0022-4405(90)90012-V

Gutkin, T. B., and Curtis, M. J. (2008). “School-based consultation: the science and practice of indirect service delivery,” in The Handbook of School Psychology, 4th Edn. eds T. B., Gutkin and C. R. Reynolds (Hoboken, NJ: John Wiley & Sons), 591–635.

Hawken, L. S. (2006). School psychologists as leaders in the implementation of a targeted intervention. School Psychol. Q. 21, 91–111. doi: 10.1521/scpq.2006.21.1.91

Hoagwood, K., and Johnson, J. (2003). School psychology: a public health framework: I. From evidence-based practices to evidence-based policies. J. School Psychol. 41, 3–21. doi: 10.1016/S0022-4405(02)00141-3

Huber, D. R. (2007). Is the scientist-practitioner model viable for school psychology practice? Am. Behav. Sci. 50, 778–788. doi: 10.1177/0002764206296456

Hughes, T. L., and Theodore, L. A. (2009). Conceptual framework for selecting individual psychotherapy in the schools. Psychol. Schools 46, 218–224. doi: 10.1002/pits.20366

International School Psychology Association (2021). A Definition of School Psychology. Available online at: https://www.ispaweb.org/a-definition-of-school-psychology/ (accessed September 10, 2020).

Jimerson, S. R., Graydon, K., Farrell, P., Kikas, E., Hatzichristou, C., Boce, E., et al. (2004). The international school psychology survey: development and data from Albania, Cyprus, Estonia, Greece and Northern England. School Psychol. Int. 25, 259–286. doi: 10.1177/0143034304046901

Jimerson, S. R., Graydon, K., Yuen, M., Lam, S.-F., Thurm, J.-M., Klueva, N., et al. (2006). The international school psychology survey. Data from Australia, China, Germany, Italy and Russia. School Psychol. Int. 27, 5–32. doi: 10.1177/0143034306062813

Jimerson, S. R., Oakland, T. D., and Farrell, P. T. (2007). The Handbook of International School Psychology. Thousand Oaks, CA: Sage.

Kikas, E. (2003). Pupils as consumers of school psychological services. School Psychol. Int. 24, 20–32. doi: 10.1177/0143034303024001579

Kratochwill, T. R., and Shernoff, E. S. (2004). Evidence-based practice: promoting evidence-based interventions in school psychology. School Psychol. Rev. 33, 34–48. doi: 10.1080/02796015.2004.12086229

Lam, S. F. (2007). “Educational psychology in Hong Kong,” in The Handbook of International School Psychology, eds S. Jimerson, T. Oakland, and P. Farrell (SAGE Publications), 147–157.

Landis, J. R., and Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics 33, 159–174. doi: 10.2307/2529310

Lepage, K., Kratochwill, T. R., and Elliott, S. N. (2004). Competency-based behaviour consultation training: an evaluation of consultant outcomes, treatment effects, and consumer satisfaction. School Psychol. Q. 19, 1–28. doi: 10.1521/scpq.19.1.1.29406

Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gøtzsche, P. C., Ioannidis, J. P. A., et al. (2009). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 6:e1000100. doi: 10.1371/journal.pmed.1000100

Manz, P. H., Mautone, J. A., and Martin, S. D. (2009). School psychologists' collaborations with families: an exploratory study of the interrelationships of their perceptions of professional efficacy and school climate, and demographic and training variables. J. Appl. School Psychol. 25, 47–70. doi: 10.1080/15377900802484158

Mendes, S. A., Abreu-Lima, I., Almeida, L. S., and Simeonsson, R. J. (2014). School psychology in Portugal: practitioners' characteristics and practices. Int. J. School Educ. Psychol. 2, 115–125. doi: 10.1080/21683603.2013.863171

Mendes, S. A., Lasser, J., Abreu-Lima, I. M., and Almeida, L. S. (2017). All different or all the same? Exploring the diversity of professional practices in Portuguese school psychology. Eur. J. Psychol. Educ. 32, 251–269. doi: 10.1007/s10212-016-0297-6

Millar, G. M., Lean, D., Sweet, S. D., Moraes, S. C., and Nelson, V. (2013). The psychology school mental health initiative: an innovative approach to the delivery of school-based intervention services. Can. J. School Psychol. 28, 103–118. doi: 10.1177/0829573512468858

Morrison, J. Q. (2013). Performance evaluation and accountability for school psychologists: challenges and opportunities. Psychol. Schools 50, 314–324. doi: 10.1002/pits.21670

Nastasi, B. K. (2000). School psychologists as health-care providers in the 21st century: conceptual framework, professional identity, and professional practice. School Psychol. Rev. 29, 540–554. doi: 10.1080/02796015.2000.12086040

Nastasi, B. K. (2004). Meeting the challenges of the future: integrating public health and public education for mental health promotion. J. Educ. Psychol. Consult. 15, 295–312. doi: 10.1207/s1532768xjepc153&4_6

Nicholson, H., Foote, C., and Grigerick, S. (2009). Deleterious effects of psychotherapy and counseling in the schools. Psychol. Schools 46, 232–237. doi: 10.1002/pits.20367

Ogg, J., Fefer, S., Sundman-Wheat, A., McMahan, M., Stewart, T., Chappel, A., et al. (2013). School-based assessment of ADHD: purpose, alignment with best practice guidelines, and training. J. Appl. School Psychol. 29, 305–327. doi: 10.1080/15377903.2013.836775

Pérez-González, F., Garcia-Ros, R., and Gómez-Artiga, A. (2004). A survey of teacher perceptions of the school psychologist's skills in the consultation process. An exploratory factor analysis. School Psychol. Int. 25, 30–41. doi: 10.1177/0143034304026778

Peters, M. D. J., Godfrey, C. M., Khalil, H., McInerney, P., Parker, D., and Soares, C. B. (2015). Guidance for conducting systematic scoping reviews. Int. J. Evid. Based Healthc. 13, 141–146. doi: 10.1097/XEB.0000000000000050

Phillips, B. N. (1998). Scientific standards of practice in school psychology: an emerging perspective. School Psychol. Int. 19, 267–278. doi: 10.1177/0143034398193006

Proctor, B. E., and Steadman, T. (2003). Job satisfaction, burnout, and perceived effectiveness of “in-house” versus traditional school psychologists. Psychol. Schools 40, 237–243. doi: 10.1002/pits.10082

Prout, S. M., and Prout, H. T. (1998). A meta-analysis of school-based studies of counseling and psychotherapy: an update. J. School Psychol. 36, 121–136. doi: 10.1016/S0022-4405(98)00007-7

Reddy, L. A., Barboza-Whitehead, S., Files, T., and Rubel, E. (2000). Clinical focus of consultation outcome research with children and adolescents. Special Serv. Schools 16, 1–22. doi: 10.1300/J008v16n01_01

Reese, R. J., Prout, H. T., Zirkelback, E. A., and Anderson, C. R. (2010). Effectiveness of school-based psychotherapy: a meta-analysis of dissertation research. Psychol. Schools 47, 1035–1045. doi: 10.1002/pits.20522

Revelle, W. (2018). psych: Procedures for Personality and Psychological Research. Evanston, Illinois, USA: Northwestern University. Available online at: https://CRAN.R-project.org/package=psych Version = 1.8.4 (accessed September 10, 2020).

Sandoval, J., and Lambert, N. M. (1977). Instruments for evaluating school psychologists' functioning and service. Psychol. Schools 14, 172–179. doi: 10.1002/1520-6807(197704)14:2<172::AID-PITS2310140209>3.0.CO;2-1

Sheridan, S. M., and Gutkin, T. B. (2000). The ecology of school psychology: examining and changing our paradigm for the 21st century. School Psychol. Rev. 29, 485–502. doi: 10.1080/02796015.2000.12086032

Skalski, A. K., Minke, K., Rossen, E., Cowan, K. C., Kelly, J., Armistead, R., et al. (2015). NASP Practice Model Implementation Guide. Bethesda, MD: National Association of School Psychologists.

Squires, G., and Farrell, P. (2007). “Educational psychology in England and Wales,” in The Handbook of International School Psychology, eds S. Jimerson, T. Oakland, and P. Farrell (SAGE Publications), 81–90.

Stobie, I., Boyle, J., and Woolfson, L. (2005). Solution-focused approaches in the practice of UK educational psychologists. A study of the nature of their application and evidence of their effectiveness. School Psychol. Int. 26, 5–28. doi: 10.1177/0143034305050890

Strein, W., Hoagwood, K., and Cohn, A. (2003). School psychology: a public health perspective. Prevention, populations, and systems change. J. School Psychol. 41, 23–38. doi: 10.1016/S0022-4405(02)00142-5

Tricco, A. C., Lillie, E., Zarin, W., O'Brien, K. K., Colquhoun, H., Kastner, M., et al. (2016). A scoping review on the conduct and reporting of scoping reviews. BMC Med. Res. Methodol. 16:15. doi: 10.1186/s12874-016-0116-4

Tricco, A. C., Lillie, E., Zarin, W., O'Brien, K. K., Colquhoun, H., Levac, D., et al. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann. Int. Med. 169, 467–473. doi: 10.7326/M18-0850

Villarreal, V., Castro, M. J., Umana, I., and Sullivan, J. R. (2017). Characteristics of intervention research in school psychology journals: 2010–2014. Psychol. Schools 54, 548–559. doi: 10.1002/pits.22012

Vu, P., Shanahan, K. B., Rosenfield, S., Gravois, T., Koehler, J., Kaiser, L., et al. (2013). Experimental evaluation of instructional consultation teams on teacher beliefs and practices. Int. J. School Educ. Psychol. 1, 67–81. doi: 10.1080/21683603.2013.790774

White, J. L., and Kratochwill, T. R. (2005). Practice guidelines in school psychology: issues and directions for evidence-based intervention in practice and training. J. School Psychol. 43, 99–115. doi: 10.1016/j.jsp.2005.01.001

Yeo, L. S., and Choi, P. M. (2011). Cognitive-behavioural therapy for children with behavioural difficulties in the Singapore mainstream school setting. School Psychol. Int. 32, 616–631. doi: 10.1177/0143034311406820

Keywords: efficacy, evaluation, school psychology, scoping review, measurement

Citation: Müller B, von Hagen A, Vannini N and Büttner G (2021) Measurement of the Effects of School Psychological Services: A Scoping Review. Front. Psychol. 12:606228. doi: 10.3389/fpsyg.2021.606228

Received: 14 September 2020; Accepted: 19 March 2021; Published: 16 April 2021.

Edited by:

Meryem Yilmaz Soylu, University of Nebraska-Lincoln, United States

Reviewed by:

Matthew Burns, University of Missouri, United States

Sofia Abreu Mendes, Lusíada University of Porto, Portugal

Copyright © 2021 Müller, von Hagen, Vannini and Büttner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bettina Müller, bettina.mueller@psych.uni-frankfurt.de
