Abstract

The world’s current model for economic development is unsustainable. It encourages high levels of resource extraction, consumption, and waste that undermine positive environmental outcomes. Transitioning to a circular economy (CE) model of development has been proposed as a sustainable alternative. Artificial intelligence (AI) is a crucial enabler for CE. It can aid in designing robust and sustainable products, facilitate new circular business models, and support the broader infrastructures needed to scale circularity. However, to date, the ethical implications of using AI to achieve a transition to CE have received limited consideration. This article addresses this gap. It outlines how AI is and can be used to transition towards CE, analyses the ethical risks associated with using AI for this purpose, and offers some recommendations to policymakers and industry on how to minimise these risks.


1 Introduction: artificial intelligence, circular economy, and their challenges

Over the past 50 years, natural resource extraction has tripled globally, with this trend accelerating since the turn of the century (Oberle et al. 2019). On the current trajectory, demand will require more than two planets’ worth of natural resources by 2030 and three by 2050 (Osborne 2006). Excessive demand for resources leads to higher greenhouse gas emissions from mining and extraction, the creation of monocultures that harm natural ecosystems, and deteriorating individual health due to environmental degradation, such as worsening air quality. Waste is also extensive. For instance, the global food system currently produces enough food to feed the world’s population, but roughly a third is wasted in the supply chain and the process of consumption (Ellen MacArthur Foundation and Google 2019). The “circular economy” (CE) has been proposed as an economic model that can help overcome these developmental challenges.

Although there is no agreed-upon definition of CE (Kirchherr et al. 2017), the term is generally used to refer to an economy “based on the principles of designing out waste and pollution, keeping products and materials in use, and regenerating natural systems” (Ellen MacArthur Foundation 2017). This contrasts with the so-called “linear economy”, the current and dominant consumption-based economic paradigm described above, which is characterised by taking resources, making goods to be sold, and disposing of everything one does not need, including the product at the end of its lifecycle (Sariatli 2017). Products are typically made to be consumed and discarded by the user once they are no longer considered valuable. Similarly, by-products in the creation process of products are frequently discarded rather than utilised.

To address the harms associated with a linear economy, public and private sector CE policies and initiatives have emerged since at least the 1990s. Germany was an early pioneer of integrating CE into national law by enacting the “Closed Substance Cycle and Waste Management Act” (1996), which placed waste-management responsibilities on those who produce, market, and consume goods. Since the turn of the century, interest in this model amongst policymakers and businesses has continued to grow (Geissdoerfer et al. 2017). For example, the Chinese government passed a CE promotion law in 2008, published a national strategy for achieving CE in 2013, and emphasised implementing CE in its previous four national five-year plans (Mathews and Tan 2016). Likewise, the European Commission announced a Circular Economy Action Plan in 2015 and the subsequent New Circular Economy Action Plan in 2020, which aim to transition the EU towards a regenerative growth model by enacting a “future-oriented agenda for achieving a cleaner and more competitive Europe” (New Circular Economy Action Plan 2020). In other states, such as Brazil, India, and the United States (US), industry has been leading CE initiatives (Geng et al. 2019), with companies such as Xerox and Caterpillar integrating CE principles into their business models (Stahel 2016).

Digital technologies are a crucial enabler of CE ambitions (Preston 2012). Policymakers and businesses recognise this. For instance, the European Commission’s Circular Economy Action Plan explicitly states that “digital technologies, such as the Internet of things, big data, blockchain, and artificial intelligence will… accelerate circularity” (New Circular Economy Action Plan 2020, p. 4). Similarly, numerous businesses across the globe have looked to digital technologies to further circularity, such as using sensors to more effectively monitor and maintain products (Nobre and Tavares 2017; Reuter 2016).

In this article, we centre our analysis on artificial intelligence (AI), understood here as a cluster of smart technologies, ranging from machine learning software, to natural language processing applications, to robotics, which have unprecedented capacities to reshape individual lives, societies, and the environment (Roberts et al. 2021). Two reasons determined our choice. First, AI technologies have several novel features, including an ability to process vast amounts of data, autonomously or semi-autonomously, in order to make inferences, predictions, and decisions, or to generate content. These features mean that, of all digital tools and technologies, those that utilise AI have the most transformational potential for CE; for instance, through facilitating widespread smart automation or breakthroughs in fundamental science that could scale CE solutions exponentially.Footnote 1 At the same time, these novel features mean that AI technologies pose unique ethical risks to fundamental rights that deserve special attention (Floridi and Taddeo 2016; Tsamados et al. 2021). Second, there has been significant growth in the use of these technologies in recent years (The State of AI in 2021 2021), with academics, businesses, and policymakers increasingly interested in applying these technologies to CE initiatives (Ellen MacArthur Foundation and Google 2019). Hence, the use of AI for CE is not merely a hypothetical prospect.

To date, research at the intersection of AI and CE has focused on how these technologies can be used to aid CE ambitions (Acerbi et al. 2021). By contrast, the potential for harms to emerge from using AI to achieve this circular transition has not been comprehensively analysed. This is a significant omission, given the well-documented ethical risks associated with many uses of AI, including uses to foster socially and environmentally good outcomes (Cowls et al. 2021; Taddeo et al. 2021; Tsamados et al. 2021). In this paper, we address this gap by (i) assessing the potential ethical issues associated with using AI to achieve CE targets, and (ii) providing policy recommendations designed to promote the ethical use of AI for achieving CE ambitions. The remainder of this paper is structured as follows. Section two establishes a background for the analysis with a brief overview of the history and concept of the circular economy, including common criticisms. Section three considers how AI can aid the transition to a circular economy. Section four assesses the ethical risks of using AI technologies to support CE strategies. Section five offers some policy recommendations for governments and industry that can be adopted to achieve more ethical outcomes when using AI for CE initiatives. A brief conclusion closes the article.

2 The concept of circular economy

CE is a model for economic development that seeks to decouple growth from the unrestrained consumption of finite resources through introducing regenerative practices (The Circular Economy In Detail, n.d. 2022). The CE concept has been touted in various forms since at least the late 1970s as a solution to the environmental sustainability issue (Geissdoerfer et al. 2017) and as a new model for economic prosperity (Kirchherr et al. 2017). Although perspectives on what CE is differ significantly, there are several commonalities at the heart of most understandings. CE typically centres around ideas of reduction, reuse (including repair), and recycling (hereafter the “3Rs”)Footnote 2 of products, components, and materials to minimise waste (Kirchherr et al. 2017). The 3Rs are often understood as functioning in a “waste hierarchy”: reduction is the top priority to ensure that resources are extracted at a level where nature can recover. Next, reuse is promoted, so that excessive waste is not created unnecessarily. Recycling is the last resort on account of being the most wasteful (Ellen MacArthur Foundation 2017).

Many understandings of CE distinguish between biological and technical cycles. Circularity is often the default in “natural” biological systems, with waste from specific processes becoming resources for others (Stahel 2016). The water and carbon cycles, and processes such as composting, are examples of circular living systems regenerating themselves (U.S. Chamber of Commerce Foundation 2017). CE initiatives seek to emulate “natural” cycles when using biological materials in production, such as through regenerating soil and using renewable resources in the manufacturing process (Ellen MacArthur Foundation and Google 2019). Technical cycles are for materials that have been processed by humans and cannot easily be returned to nature. A prominent example is the extraction of rare metals and their transformation into mass-market consumer electronics. These materials should be (re)used as efficiently as possible to minimise resource extraction and transformation (Gaustad et al. 2021; Silvestri et al. 2021). In practice, products are often a mix of biological and technical materials. To ensure that cycles are effectively maintained, it is essential to separate materials at the point of recycling, or to develop technical materials that emulate biological ones that can be more easily returned to nature.Footnote 3

Unlike the idea of sustainability, which has often been criticised as too vague to be implementable (Phillis and Andriantiatsaholiniaina 2001), CE provides a tangible model that can be adopted for reusing resources (Floridi 2019), cutting down waste, and promoting more environmentally friendly growth (Geissdoerfer et al. 2017).

2.1 Circular economy initiatives

Several policy initiatives have been released to promote a transition to a CE model. For example, the EU’s Waste Electrical and Electronic Equipment Directive (2013) stipulates that all producers of phones need to accommodate a take-back system, and the New Circular Economy Action Plan (2020) introduces a comprehensive set of product requirements for circularity. In China, the Circular Economy Promotion Law (2008) states that senior officials should be evaluated against CE targets and indicators, which were established in the country’s subsequent five-year plans (McDowall et al. 2017). Finally, although the US has been less active in promoting CE, the Environmental Protection Agency’s National Recycling Strategy (2021) explicitly focuses on circularity (National Recycling Strategy 2021), and a handful of local initiatives have been undertaken, including San Francisco’s Zero Waste scheme (Mathews and Tan 2016).

Industry initiatives are also being pioneered across private sectors. The technology company Philips has begun offering “lighting as a service”, where it focuses on selling maintenance and repair agreements rather than lighting products. The products sold as part of these agreements are often smart technologies that only provide lighting when needed (Achieving a Circular Economy 2015). Ikea has recently opened its first second-hand store and an associated buy-back scheme to encourage consumers to return their unwanted goods for reuse (Fleming 2020). Smaller private initiatives are also on the rise. Prominent examples include FairphoneFootnote 4 and BackMarket,Footnote 5 social enterprise companies that apply the CE model to the mobile phone and computer industries, by encouraging the refurbishing, repair, and reuse of phones and laptops.

These initiatives are promising, but the transition towards circularity is still nascent. Despite predictions that the circular economy will replace the linear economy by 2029 (Hippold 2019), a 2022 report showed that only 8.6% of the world economy was circular in 2020, which is, in fact, a decline from 2018, when 9.1% of the global economy was circular (de Wit and Haigh 2022)Footnote 6. This indicates that significant progress still needs to be made if a meaningful circular transition is to take place.

2.2 Circular economy criticisms

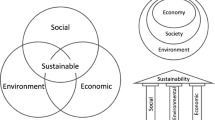

The concept of a CE is not without its critics. Notably, the focus on socially good outcomes is generally lacking in CE narratives (Barbier 1987; Purvis et al. 2019), while the idea of sustainability often includes economic, environmental, and social elements (Bibri 2018). Because of this, it is unclear whether the circular economy would prove beneficial for social outcomes, including whether it would improve individual well-being (Geissdoerfer et al. 2017), or lead to greater

“social equality, in terms of inter- and intra-generational equity, gender, racial and religious equality and other diversity, financial equality, or in terms of equality of social opportunity” (Murray et al. 2017, p. 376)

Even if CE proved to be socially beneficial, it remains unclear how to ensure or even facilitate these beneficial aspects.

Some scholarship has been critical of the theoretical robustness of the CE concept, which has predominantly been developed by policymakers and businesses. This includes questions over the foundational premise that the earth is a closed loop where materials and energy cycle through the system, with scientists pointing out that the earth is, in fact, an open system (Skene 2018). Other criticisms include the potentially damaging effects of the “Jevons paradox” (i.e. eco-efficiency leading to more consumption) (Korhonen et al. 2018), and the objection that long-lasting products may not be the most environmentally friendly choice on account of the difficulty of disposalFootnote 7 (Murray et al. 2017) or due to the prolonged use of eco-inefficient products (Blunck et al. 2019).

Perhaps, most problematically, the lack of theoretical robustness of the CE concept could lead organisations to appropriate the term without overhauling business practices in a meaningful way. For instance, many proposed definitions of CE do not include the idea of a waste hierarchy, meaning companies could make incremental improvements in recycling and claim that they are introducing circular and sustainable business practices, irrespective of the kind of material used (and disposed) in production processes (Kirchherr et al. 2017). Large transnational corporations leading the discourse on, and investments in, CE initiatives also create a risk of co-option, whereby powerful actors that are already prospering because of the current developmental model set the standards for CE, while also securing a position of capital accumulation within this new model (Mah 2021; Ponte 2019). This could undermine market competition and consumer choice.

Finally, some even question whether CE is sufficient to fix the current “take-make-waste” model, given that it still promotes consumption and growth (Blühdorn and Welsh 2007; Mah 2021). This problem has given rise to a “degrowth” movement amongst environmental activists and scholars, who advocate for a radical political economy reorganisation that concentrates mainly on the “reduction” principle that is also found in CE (Schröder et al. 2019).

Despite these documented drawbacks, it would be wrong to dismiss the CE model. While imperfect, in many circumstances, it offers a marked improvement on the status quo and can facilitate tangibly sustainable outcomes. Nonetheless, for a sustainable and effective implementation of CE to succeed, ongoing scrutiny and anticipation of potential harms are needed. This includes scrutinising the digital technologies that are being used to support CE as part of a “green plus blue” approach to global challenges in the twenty-first century (Floridi 2020). We undertake this analysis in the following sections, specifically focusing on AI.

3 AI and CE

AI is a key enabler of CE and the focal point of this paper on account of its potential to bring about significant benefits and risks.Footnote 8 However, it is important to acknowledge from the outset that AI does not function in a vacuum; AI systems are typically developed and deployed in tandem with other digital technologies. For example, Internet of things (IoT) devices may be used to collect data for an AI system to subsequently analyse (Askoxylakis 2018; Reuter 2016). Accordingly, while our analysis centres on the use of AI in support of CE, we consider other digital technologies insofar as they complement AI in relevant contexts. The remainder of this section considers how AI can aid CE goals by designing and maintaining circular products (Sect. 3.1) and by facilitating circular businesses (Sect. 3.2).

3.1 Designing, developing, and maintaining circular products

AI can support the design, development, and maintenance of circular products. This can happen in two notable ways.

First, a product needs to be designed and developed with the 3Rs in mind to meet CE parameters. In particular, products should be designed to ensure a long product life and in a way that enables the separation of components that are part of the biological cycle (e.g. cardboard) from those that are part of a technical cycle (e.g. plastic), which would enhance the product’s recycling potential (Ellen MacArthur Foundation and Google 2019). AI can support designers by suggesting initial designs for eco-friendly products or adjusting designs based on environmental parameters and/or considerations of other actors in the circular value chain (Acerbi et al. 2021; Gailhofer et al. 2021). For instance, parameters could be established for designing a product based on local or recycled materials, which would, in turn, lessen resource extraction and emissions associated with the transport of materials. Similarly, AI can help design new materials to substitute unsustainable resources, such as harmful chemicals. Such materials could enhance the durability of products and ease recycling at the end of the product lifecycle. An example is the “Accelerated Metallurgy” project, which used AI to develop novel metal alloy combinations that take into account circular economy principles such as non-toxicity, design for use and reuse, extending the use period, and minimising waste (Gailhofer et al. 2021). AI could also help predict how materials change over time, including their durability and potential toxicity (Ellen MacArthur Foundation and Google 2019). This information can be contained in a “product passport”, which would help facilitate reverse logistics (Charnley et al. 2019). These solutions could address some of the concerns outlined above about the long-term eco-inefficiency and disposal of circular products (Blunck et al. 2019; Murray et al. 2017).

Second, regarding the maintenance of CE products, AI could be used to monitor products and make data-driven decisions. For example, AI-powered digital twins—virtual models that accurately reflect physical objects—can help study performance over time and generate possible improvements (What Is a Digital Twin? n.d. 2022). These systems, which rely on IoT sensors to collect data on functionality, can help ensure the longevity of products through understanding product performance and condition in near-real-time (Askoxylakis 2018; Okorie et al. 2018). These data can then be used to make decisions about a product, such as whether interventions are needed, optimising performance and extending the product lifespan (Bressanelli et al. 2018). More generally, AI can be used to analyse data collected over a product’s lifecycle to either make real-time efficiency improvements or to determine whether a returned product should be reused, remanufactured, or recycled (Blunck et al. 2019). Since 2019, Apple has used on-device machine learning to predict the usage patterns of iPhone users, allowing more efficient battery charging, which it claims can extend the chemical age and thus the lifespan of the popular smartphone.Footnote 9 Meanwhile, Google and DeepMind have used AI to optimise battery usage based on predicted usage patterns and thus save power and potentially reduce charge cycles.Footnote 10 Beyond conventional business-to-consumer markets, these could be effective methods of maintaining product quality in a sharing economy business model.
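To make the reuse/remanufacture/recycle decision concrete, the following minimal sketch routes a returned product to the highest-priority option in the waste hierarchy that its lifecycle data permit. It is a rule-based stand-in for what would in practice be a learned model. The `ReturnedProduct` fields, the function name, and all thresholds are hypothetical assumptions. None of them are drawn from Blunck et al. (2019) or from any deployed system.

```python
from dataclasses import dataclass


@dataclass
class ReturnedProduct:
    # Hypothetical lifecycle summary, e.g. aggregated from IoT sensor data or a digital twin.
    lifespan_used: float   # fraction of rated lifespan already used (0.0 to 1.0)
    condition: float       # overall condition score (0.0 = broken, 1.0 = as new)
    core_parts_ok: bool    # whether the main structural components are intact


def route_returned_product(p: ReturnedProduct) -> str:
    """Return the highest-priority end-of-use option the data allow.

    Options are checked in waste-hierarchy order (reuse before remanufacture
    before recycling). All thresholds are illustrative assumptions.
    """
    if p.condition >= 0.8 and p.lifespan_used < 0.5:
        return "reuse"           # resell or redeploy as-is
    if p.core_parts_ok and p.lifespan_used < 0.9:
        return "remanufacture"   # replace worn parts, keep the core
    return "recycle"             # last resort: recover materials


print(route_returned_product(ReturnedProduct(lifespan_used=0.3, condition=0.9, core_parts_ok=True)))  # reuse
print(route_returned_product(ReturnedProduct(lifespan_used=0.6, condition=0.5, core_parts_ok=True)))  # remanufacture
```

A learned model would replace the hand-set thresholds with decision boundaries estimated from historical return and refurbishment data. Checking the options in a fixed order is one simple way such a system could still respect the waste hierarchy.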

3.2 Facilitating circular businesses

AI could also support circular businesses. Here, at least three points of intervention are promising.

First, AI can be used to develop innovative circular business models, such as AI-based dynamic pricing. If products are sold as a service, or recycled products are marketed, standardised pricing is unlikely to work, given the multitude of variables affecting the price of each item. Relying on individuals to price each returned product manually would be time-consuming and could not scale effectively. Dynamic pricing algorithms could be used to analyse the many variables that should be considered in pricing, such as the age of the product, wear and tear, and market conditions, to calibrate price points efficiently. Platforms like eBay already offer second-hand sellers price suggestions based on the current market for similar items in similar conditions.Footnote 11 Likewise, matching algorithms can help connect buyers and sellers more effectively (Gailhofer et al. 2021). These business models are already being tested in existing sharing economy models, such as for bikes, indicating the potential viability of exporting them into circular product markets (Ellen MacArthur Foundation and Google 2019). Indeed, product-as-a-service has been identified as a potentially significant opportunity for existing companies, not just market disruptors (Antikainen and Valkokari 2016).
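The following minimal sketch shows how a pricing rule might combine the variables mentioned above: age, wear and tear, and market conditions. The multiplicative form, the 15% annual depreciation rate, the wear discount, and the clamping range are all illustrative assumptions. The sketch does not describe how eBay or any other platform prices items. A production system would more plausibly learn these relationships from transaction data.

```python
def suggest_price(new_price: float, age_years: float, wear: float,
                  demand_supply_ratio: float) -> float:
    """Toy price suggestion for a returned product (all factors are assumptions).

    new_price: list price of an equivalent new item
    age_years: product age in years
    wear: 0.0 (as new) to 1.0 (heavily worn)
    demand_supply_ratio: buyers seeking similar items per similar item listed
    """
    depreciation = 0.85 ** age_years        # assumed 15% value loss per year
    condition_factor = 1.0 - 0.5 * wear     # heavy wear halves the value
    # Clamp the market adjustment so a thin market cannot swing prices wildly.
    market_factor = min(max(demand_supply_ratio, 0.7), 1.3)
    return round(new_price * depreciation * condition_factor * market_factor, 2)


print(suggest_price(100, 2, 0.2, 2.0))  # 84.53
```

Here two years of assumed depreciation and light wear lower the price, while strong demand raises it, up to the cap. Each factor is transparent, which matters for the auditing concerns raised in Sect. 4.2. A learned model would usually be less interpretable.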

Second, AI could facilitate circular businesses by supporting the recycling infrastructure needed for a functioning circular economy. Effective sorting is required, because CE involves reusing, repairing, and recycling products. AI-powered image recognition can identify and differentiate waste, minimising resource loss. For instance, Unilever and Alibaba recently partnered to trial an AI-enabled sorting machine that distinguishes between different types of plastic, with the project aiming to introduce large-scale, closed-loop, plastic recycling in China (Moore 2021). Similarly, in the electronic waste sector, robots are being integrated into disassembly lines to retrieve and recycle valuable and hazardous materials at the end of a product’s lifecycle (Renteria and Alvarez-de-los-Mozos 2019). For example, Apple’s Daisy robot can “take apart up to 200 iPhone devices per hour, removing and sorting components to recover materials that traditional recyclers can’t—and at a higher quality” (Apple Recycling Program 2018). This facilitates higher value recovery of materials, creating secondary product markets (Fletcher and Webb 2017; Renteria and Alvarez-de-los-Mozos 2019). This type of sorting is crucial for minimising waste at the end of a product’s lifecycle and providing the materials for new circular products.

Third, AI can help with necessary infrastructural elements, to ensure that the resources underpinning circular businesses are themselves sustainable. Energy consumption for storage and processing of data is a notable example. Data centres are heavily energy-intensive. Some predictions suggest that data centres could use as much as 13% of the world’s electricity by 2030, compared to 1% in 2010 (Andrae and Edler 2015). If data-intensive circular businesses require electricity consumption at this level, then many of the environmental aspirations of the circular economy could be undermined. This is a risk that has been recognised by some of the world’s largest data providers, many of whom are turning to AI to assist in areas such as cooling and optimising energy use. For instance, in 2016, DeepMind developed an AI system that tuned Google data centres’ cooling systems based on the weather and other factors, thus reducing the cooling energy bill by 40% (Jones 2018).
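The pace this projection implies can be calculated directly. Let $s$ denote the data-centre share of world electricity. The compound annual growth rate $g$ then follows from the two figures cited above (1% in 2010 and 13% in 2030). This is simple arithmetic on the cited projection, not an additional forecast:

$$
g = \left(\frac{s_{2030}}{s_{2010}}\right)^{1/(2030-2010)} - 1 = \left(\frac{13}{1}\right)^{1/20} - 1 \approx 0.137
$$

That is, the share would have to grow by roughly 14% per year, compounded over two decades.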

4 Ethical issues of using AI in CE

We have seen that using AI for developing CE products and businesses offers many potential benefits. However, without proper consideration or ethical scrutiny, these technologies could cause harm and be rejected by society, undermining their utility (Floridi et al. 2020). The unethical use of AI presents several plausible risks, as we detail in the remainder of this section. Sects. 4.1 and 4.2 focus on the potential direct harms from AI systems for CE, while Sects. 4.3 and 4.4 focus on broader structural considerations of using these technologies.

4.1 Data privacy

CE concerns relationships and processes between multiple parties. A single actor does not “close the loop” given the connectedness of supply chains; circularity can hardly be achieved without collaboration (Alexandris et al. 2018; Larsson and Lindfred 2019; Sankaran 2020). This poses a pressing need for cooperative networks, and data and interoperable systems are critical to this end (Ramadoss et al. 2018). Data fuel these intra- and inter-organisational networks by informing stakeholders about the various attributes of underlying assets, such as location, condition, and availability. At the same time, without AI, it would be extremely difficult to make sense of these data and use them to aid in designing and maintaining products, supporting circular businesses, or achieving a high degree of circularity in the economy. However, this data collection and analysis could also exacerbate privacy risks.

Regarding data collection, the proliferation of tracking and measurement devices, such as IoT devices, into personal spaces is often a prerequisite for AI-powered CE products. This poses a significant ethical risk (Bressanelli et al. 2018; Ramadoss et al. 2018). Take the collaboration between Cisco, Cranfield University, and The Clearing to develop a circular model for producing and consuming sports shoes. Each pair of shoes was fitted with an IoT component that tracked location and shoe condition to identify replacement and upgrade needs. At the end of the product’s life, customers were recommended a location to return the shoes for remanufacturing (Nobre and Tavares 2017). While this model sought to minimise environmental waste, it did so at the cost of revealing an individual’s geospatial data, which can act as a proxy for many other pieces of information about an individual, including their work, hobbies, and other behavioural patterns. Accordingly, by allowing data analysis to be scaled, the use of AI in support of CE tacitly encourages increased data collection, in turn threatening consumer privacy.

A potential retort to this ethical risk is that personal data, including geospatial data, are already collected and analysed by numerous applications on our phones (Binns et al. 2018). However, the fact that ethically contentious data collection is already taking place does not justify further collection. How the data from tracking-enabled CE devices are used, and by whom, are key questions that would need to be addressed if geospatial or other personal data are to be used ethically for CE products. A recent public engagement exercise by the Geospatial Commission (an expert committee housed within the UK Government’s Cabinet Office) revealed that individuals were concerned that geospatial data are not being used in their best interests, that they could not meaningfully control the use of these data, and that there were real risks of the data being misused or breached (Maxwell et al. 2021). The responses to this engagement exercise indicate that collecting geospatial data from circular products for subsequent analysis through AI applications poses a significant risk to public trust.

Regarding data analysis, the individual or group inferences that AI systems can make could also prove ethically problematic (Floridi 2014; Taylor et al. 2016). Consider the example of smart meters, which, as of 2020, accounted for over 30% of all energy meters in homes and small businesses in the UK (Smart Meter Statistics in Great Britain 2020). AI can analyse data from these meters to make energy consumption more efficient, resulting in lower costs for consumers and reduced waste. While energy data may not seem sensitive, patterns in energy usage can reveal when individuals wake up, go to sleep, go to work, are away, or have guests over, amongst many other things. Previous studies have indicated that it is even possible to infer how frequently an individual puts on the kettle and how much water is used to fill it (Murray et al. 2016). This example shows how AI can make precise inferences about individual behaviours, even through seemingly banal applications. Potential CE benefits could be undermined by pernicious uses of these data, such as unwanted targeted advertising, or punitive measures against customers who do not follow regimented usage policies, akin to the black-box trackers fitted to the cars of specifically profiled drivers.
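The following sketch shows how little analysis such inferences can require. It scans a per-minute power series for short bursts of high draw consistent with a kettle. Domestic kettles typically draw around 2 to 3 kW for a few minutes. The function name, the 1,800 W jump threshold, the five-minute limit, and the sample readings are all hypothetical. Research on this problem, often called non-intrusive load monitoring, uses considerably more sophisticated methods, frequently based on machine learning.

```python
def detect_kettle_events(watts, jump=1800, max_minutes=5):
    """Flag short bursts of high power draw in a per-minute smart-meter series.

    A burst starts when consumption rises by at least `jump` watts over the
    previous minute and ends when it falls back below that level. Bursts longer
    than `max_minutes` (e.g. an oven) are ignored. Thresholds are assumptions.
    Returns a list of (start_minute, duration_in_minutes) tuples.
    """
    events = []
    i = 1
    while i < len(watts):
        baseline = watts[i - 1]
        if watts[i] - baseline >= jump:
            start = i
            # Advance while the draw stays well above the pre-burst baseline.
            while i < len(watts) and watts[i] - baseline >= jump:
                i += 1
            if i - start <= max_minutes:
                events.append((start, i - start))
        else:
            i += 1
    return events


# Hypothetical per-minute readings in watts.
readings = [200, 210, 2500, 2510, 2490, 220, 200,
            3000, 3000, 3000, 3000, 3000, 3000, 3000, 210]
print(detect_kettle_events(readings))  # [(2, 3)]
```

On this sample, the rule flags one three-minute burst starting at minute 2. It ignores the seven-minute draw starting at minute 7, which might be an oven or heater. Each flagged index is, in effect, a timestamp of household activity, which is precisely the privacy concern raised above.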

The above examples refer to intra-organisational data collection and analysis. The situation can become even more contested when inter-organisational data sharing is considered. As mentioned, this connectivity is a necessary step for “closing the loop”, yet it raises questions over how organisations can share data in a meaningful way while still ensuring privacy (Antikainen et al. 2018). Data security and liability risks are heightened within a highly interoperable ecosystem where one compromised node could impact many others (Allam and Dhunny 2019; Luthra and Mangla 2018), with the effects of security breaches especially damaging in these complex and interdependent systems involving multiple stakeholders. If left unaddressed, these risks could hinder the successful adoption of a connected circular economy, or make its implementation more problematic than it needs to be by undermining public trust.
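A simple reachability check over a data-sharing network illustrates this cascading risk. The following sketch assumes, as a deliberate simplification, that a compromise spreads along every outgoing data-sharing link, for example via shared credentials or automated feeds. The organisations, the links, and the function name are hypothetical.

```python
from collections import deque


def exposed_parties(sharing: dict[str, list[str]], compromised: str) -> set[str]:
    """Breadth-first search: every party reachable from a compromised node.

    `sharing` maps each organisation to the organisations it sends data to.
    Assumes (simplistically) that compromise propagates along every link.
    """
    seen = {compromised}
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for nxt in sharing.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {compromised}


# Hypothetical circular supply chain: the recycler feeds data back to the manufacturer.
network = {
    "manufacturer": ["retailer", "repair_shop"],
    "retailer": ["logistics"],
    "logistics": ["recycler"],
    "recycler": ["manufacturer"],
    "repair_shop": [],
}
print(sorted(exposed_parties(network, "logistics")))
# ['manufacturer', 'recycler', 'repair_shop', 'retailer']
```

In this toy network, closing the loop connects the recycler back to the manufacturer. As a result, a breach at the logistics provider reaches every other participant, whereas a breach at a party with no outgoing links stays contained.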

4.2 Algorithmic bias

CE literature is generally positive about adopting algorithmic-based business models, such as automated dynamic pricing and matching. AI can be used to scale circular business practices by pricing reused products and/or matching them with potential consumers automatically, for example, based on demand, the condition of the product, or the profile of consumers. However, many existing experiments with automated dynamic pricing and algorithmic profiling in the wider economy have led to unfair or discriminatory outcomes. Here, we will focus our discussion on the former.Footnote 12 Recent examples of unethical outcomes from automated dynamic pricing include an online Scholastic Assessment Test (SAT) preparatory course provider discriminating based on ZIP codes, which act as a proxy for ethnicity, leading to Asians being almost twice as likely to be offered higher prices than non-Asians (Angwin et al. 2015); the dating app Tinder’s pricing algorithm discriminating against individuals over 30 (Heikkila 2022); and Uber charging higher fare prices to individuals in Chicago neighbourhoods that have larger non-white and higher poverty level populations (Pandey and Caliskan 2021).

Taking this last case of Uber as an example, fare pricing is generally determined by duration and length of trips and a “surge multiplier”, which is based on relative demand and supply within a specific location. Uber’s current algorithmic model is influenced by drivers’ preferences and biases, such as whether to collect individuals from some areas of a city or specific passengers based on information provided, like name and rating. Ge et al. (2020, p. 1) found that in Boston, US

“Uber drivers were twice as likely to cancel an accepted ride when travellers were using [an] African American-sounding name”.

Pandey and Caliskan (2021) argue that one possible reason specific neighbourhoods, and thus demographic groups, are charged higher prices is that a lower proportion of drivers are willing to provide services in some areas, which pushes up surge pricing. These examples show how harmful biases can creep into dynamic pricing business models and suggest that applying such models to CE poses a significant ethical risk. While using personal characteristics to price CE products may seem implausible at first, there are clear precedents in personalised marketing (Miller and Hosanagar 2019). Moreover, risks could materialise even if protected characteristics, like race or gender, are excluded, because other attributes can act as proxies, as in the examples above. It is therefore not unreasonable to imagine tailored pricing and advertising of circular products that includes a variable correlating with a protected characteristic and thereby inadvertently leads to indirect discrimination (e.g. the inclusion of consumers’ ZIP codes as a variable in order to minimise transport emissions).
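To make the proxy mechanism concrete, the following Python sketch implements a toy fare rule in which a surge multiplier scales with the ratio of ride requests to available drivers. All constants and function names (`surge_multiplier`, `fare`, `BASE_FARE` and so on) are hypothetical and are not Uber's actual pricing model; the sketch only assumes, following Pandey and Caliskan (2021), that fewer drivers are willing to serve some neighbourhoods.

```python
# Hypothetical fare parameters for illustration only; not any real company's model.
BASE_FARE = 2.0    # flat charge per trip
PER_MINUTE = 0.3   # charge per minute of trip duration
PER_KM = 1.0       # charge per kilometre of trip length
MAX_SURGE = 3.0    # cap on the surge multiplier


def surge_multiplier(requests: int, available_drivers: int) -> float:
    """Toy surge rule: the demand/supply ratio, floored at 1.0 and capped at MAX_SURGE."""
    if available_drivers <= 0:
        return MAX_SURGE
    ratio = requests / available_drivers
    return min(max(1.0, ratio), MAX_SURGE)


def fare(minutes: float, km: float, requests: int, available_drivers: int) -> float:
    """Base fare from trip duration and length, scaled by the local surge multiplier."""
    base = BASE_FARE + PER_MINUTE * minutes + PER_KM * km
    return round(base * surge_multiplier(requests, available_drivers), 2)


if __name__ == "__main__":
    # Same trip and same demand in two neighbourhoods; only driver supply differs,
    # e.g. because fewer drivers accept pick-ups in neighbourhood B.
    print("Neighbourhood A:", fare(15, 5, requests=20, available_drivers=20))
    print("Neighbourhood B:", fare(15, 5, requests=20, available_drivers=10))
```

No demographic attribute appears anywhere in this rule, yet the same trip costs 11.5 in neighbourhood A and 23.0 in neighbourhood B (in the toy units). If driver supply is lower in areas with particular demographic profiles, the price gap tracks those profiles, which is the indirect discrimination risk described above.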

It should be stressed that harmful biases are not unique to automated pricing and other algorithmic business models. Individuals manually pricing CE products may show similar biases, as has been documented in many other sectors (Ayres 1991; Chander 2017). However, AI systems could standardise specific types of harmful bias at scale, and the “black box” nature of some of these systems exacerbates this risk by making harms less traceable (Pasquale 2015). Additionally, the proliferation of AI-as-a-service (off-the-shelf AI systems that organisations can buy) and the complex allocation of responsibilities it entails could make redressing these biases extremely challenging. Companies that adopt automated dynamic pricing therefore need careful ex-ante design and regular ex-post monitoring if their systems are to be ethically sound.

4.3 Economic inequality and exclusion

On top of the direct harms to individuals from AI systems for CE, these technologies could also have negative structural impacts. In terms of social and economic outcomes, significant risks are associated with the current realities of AI development and deployment. This is true both internationally and domestically.

Internationally, while several nations in Europe, North America, and Asia pilot circular projects, including smart cities, Global South countries have fewer resources to promote an AI-powered circular transition. A study by McKinsey estimates that leading countries could capture an additional 20–25% in net economic benefits from AI adoption, compared with only about 5–15% for Global South countries (Notes from the AI Frontier 2018). Thus, the application of AI may widen the digital divide between nations rather than close it.

As a solution, Ghoreishi and Happonen (2020) propose that Global North countries could use their AI technologies to help developing countries move towards CE. However, this approach would merely paper over the broader issue of how contemporary AI value chains are structured. The roles that the Global South performs in the AI value chain, while critical, centre on extracting raw materials, manufacturing hardware, and low-skilled tasks such as data labelling (Crawford and Joler 2018; Gray and Suri 2019). In contrast, the underlying research, design, and maintenance of products typically take place in the Global North (Weber 2017). Accordingly, relying merely on AI exports to the Global South for a CE transition could further exacerbate the problematic dependency-agency issues that characterise current AI dynamics (Weber 2017), and which have long been criticised by international development scholars (Frank 1986). Indeed, without a wider restructuring of the AI value chain that enables high value-added tasks to be carried out in the Global South, simply exporting AI could create further risks, such as the use of harmfully biased or non-robust models deployed in contexts for which they were not trained (Danks and London 2017).

Domestic inequalities and exclusion could also materialise within Global North states, where most AI-driven CE initiatives presently occur. By reducing linear consumption, CE will shift the labour structure from creating products to maintaining products-as-a-service. This will mean a greater emphasis on higher skilled work designing and maintaining products, and less emphasis on factory-floor production. The result would be a polarisation in the types of occupations available, leading to greater wage inequality (Lawrence et al. 2017). In countries like the U.S., where nearly 50% of digital service jobs are concentrated in only ten metropolitan areas, a shift from manufacturing to design roles may also deepen geographic inequality (Muro 2020).

Domestically, social exclusion is also a real possibility. At the city level, Stockholm, Copenhagen, Amsterdam, and Reykjavik have created digital suggestion platforms where citizens can provide information about the city’s infrastructure and environment. These platforms are intended to collect data and encourage civic engagement while improving social and environmental conditions in support of a circular economy. However, such tools tend to “exclude non-digital people like the elderly or simply less-informed” (Blunck et al. 2019). In the UK, for example, 99% of adults aged 16–44 years were recent internet users, compared with 54% of adults aged 75 years and over; likewise, 81% of disabled adults were internet users, compared with 91% of adults overall (Internet Users UK: 2020, 2021). Similarly, the growing attention and resources devoted to smart cities, which leverage the most innovative technologies for social, economic, and environmental activities, could come at the expense of suburban and rural areas (Allam and Dhunny 2019; Ziosi et al. 2022). While the use of AI in support of CE would not be solely to blame for these domestic inequalities, which are already materialising because of the development and deployment of other digital technologies, its potential to exacerbate them is an important ethical consideration.

4.4 Epistemological risks

A final ethical consideration concerns whether the current state of relevant scientific knowledge makes the use of AI in CE inherently risky. Nature is a complex and balanced ecosystem. The risk is that one may over-simplify this ecosystem by adopting a reductionist approach: formalising what cannot be reduced to formulae and using mathematical models from which crucial variables have been removed (Murray et al. 2017). This is problematic because, for AI to benefit the environment, one needs to “ask the right ecological questions to have a clear understanding of the problem” and how to tackle it (Blunck et al. 2019, p. 31). Much is still unknown about the environmental dimensions of CE (Larsson and Lindfred 2019). For example, water reuse is an excellent opportunity to apply CE principles, yet there are many concerns and unknowns about the impact of recycled water quality, specifically on human health (Voulvoulis 2018). Similar observations have been made about plastic recycling, which consumes resources and produces its own waste and emissions (Korhonen et al. 2018; Mah 2021). More generally, existing work on the environmental aspects of the circular economy is unbalanced, with scholars pointing out that biodiversity is often a forgotten element of CE narratives (Geissdoerfer et al. 2017). This creates a risk of inadvertently deploying AI or optimising algorithms in a harmful manner because of a flawed or narrow understanding of what a good environmental outcome looks like (Murray et al. 2017).

Designing or optimising for specific notions of good environmental outcomes is ethically risky because of the complex balances and trade-offs inevitably involved. The rapid development and manufacturing of advanced machinery for CE will lead to extensive use of resources, causing emissions and pollution (Blunck et al. 2019). For instance, many “clean” technologies, such as hybrid car engines, rely on rare-earth metals that are mined at considerable environmental cost (Tremblay 2016). If handled incorrectly, the disposal of these products could also prove challenging, with electrical and electronic equipment being one of the fastest-growing waste streams in the EU (New Circular Economy Action Plan 2020). Likewise, data collection, analysis, and storage in AI development require substantial computational power, which consumes enormous amounts of energy (Cowls et al. 2021; Kouhizadeh et al. 2019). This energy consumption is increasing with the development of ever larger AI models: the computing power used to train state-of-the-art models increased more than 300,000-fold from 2012 to 2018.Footnote 13 Consequently, using AI to fulfil a narrow set of CE ambitions could come at the cost of other environmental priorities. Indeed, because AI standardises and scales specific decisions, the risk associated with using these systems is particularly high if trade-offs are not adequately understood and considered.
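The 300,000-fold figure can be translated into an implied doubling time. The derivation below is our own back-of-the-envelope arithmetic, assuming steady exponential growth over the full six calendar years (about 72 months) from 2012 to 2018; the exact start and end points of the underlying measurement are not given here, so the result is approximate.

```latex
\begin{align*}
n &= \log_2(300\,000) \approx 18.19 \ \text{doublings},\\
T_{\text{double}} &= \frac{\Delta t}{n} \approx \frac{72\ \text{months}}{18.19} \approx 3.96\ \text{months}.
\end{align*}
```

Under this assumption, the compute used to train state-of-the-art models roughly doubled every four months; a shorter measurement window would imply an even shorter doubling time.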

5 Policy recommendations

CE literature often considers how a transition from a linear economy can occur at three levels: micro, meso, and macro (Acerbi et al. 2021; Ghisellini et al. 2016; McDowall et al. 2017; Milios 2018). These categories are used slightly differently across the academic literature; our usage is as follows. Micro recommendations refer to intra-organisation policies. Meso recommendations refer to industry-specific policies and/or inter-organisation relationships. Macro recommendations refer to national or global-level policies. To help avoid or mitigate the potential risks outlined above, we offer policy recommendations at each level. These recommendations are intended to be realistically implementable and to help guide the policy and practice of AI in support of CE in the near and medium term.

5.1 Micro-level

Organisations looking to develop circular products or transition to a circular business model should look to the debates in AI and digital ethics literature. Several innovative solutions can be found within this field for mitigating the harms outlined above. Here, we will focus on two practices that can support the ethical use of AI: privacy-enhancing technologies and AI auditing. These practices align with addressing the ethical risks outlined in Sects. 4.1 and 4.2, respectively.

Privacy-enhancing technologies (PETs) is a catch-all term for technical solutions that protect individual privacy or personal data (Privacy Enhancing Technologies for Trustworthy Use of Data 2021). They range from simple tools such as ad-blockers to more advanced techniques like homomorphic encryption. PETs can minimise the risks associated with data collection from devices, including IoT-embedded CE products, and with subsequent inferences made by AI technologies. Regarding data collection, IoT and similar devices could be combined with privacy-enhancing measures such as federated analytics, which analyses data locally on the device so that only aggregated results are shared. Given that attempts to apply PETs to IoT devices are still immature (Garrido et al. 2021), this is likely a medium-term solution that technology companies will need to pioneer.Footnote 14 For inferences about those using circular products, privacy-preserving techniques such as differential privacy (Dwork 2008) and synthetic data can offer protection by obscuring the individual within datasets. The former does so by adding calibrated noise so that only population-level insights can be reliably drawn, and the latter by augmenting datasets with realistic, generated data. These techniques will likely involve a trade-off between the degree of privacy preserved and the granularity of insights provided about products. Accordingly, whether to use PETs should be decided case by case, based on factors such as the level of risk.
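As an illustration of how these two ideas can be combined, the Python sketch below lets each device reduce its raw usage readings to a single clipped total (the federated analytics step) and has a server release only a noisy fleet-wide sum using the Laplace mechanism from differential privacy. It is a minimal sketch under stated assumptions: the function names, the `cap` clipping bound, and the `epsilon` privacy budget are hypothetical choices, and the server here still sees each device's clipped summary, so a real deployment would likely add secure aggregation or noise applied on the device.

```python
import random
from typing import List


def local_summary(usage_hours: List[float], cap: float) -> float:
    """Runs on the device: collapse raw readings into one total clipped to [0, cap].
    Only this summary, not the raw readings, is sent onwards."""
    return min(max(sum(usage_hours), 0.0), cap)


def laplace_noise(scale: float, rng: random.Random) -> float:
    """The difference of two independent exponentials with mean `scale`
    follows a Laplace(0, scale) distribution."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_fleet_total(summaries: List[float], cap: float, epsilon: float,
                        rng: random.Random) -> float:
    """Server side: sum device summaries and add Laplace noise.
    Adding or removing one device changes the sum by at most `cap`,
    so noise with scale cap / epsilon gives epsilon-differential privacy."""
    if epsilon <= 0 or cap <= 0:
        raise ValueError("epsilon and cap must be positive")
    return sum(summaries) + laplace_noise(cap / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical raw usage readings (hours) held on three devices.
    devices = [[1.0, 2.0], [5.0], [30.0]]
    cap = 10.0
    summaries = [local_summary(readings, cap) for readings in devices]  # [3.0, 5.0, 10.0]
    print("Noisy fleet total:", private_fleet_total(summaries, cap, epsilon=1.0, rng=rng))
```

Smaller values of `epsilon` add more noise and give stronger privacy, while the clipping bound limits how much detail any single device can contribute; both choices embody the privacy-granularity trade-off described above.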

AI auditing can mitigate the risk of harmful algorithmic bias in CE, such as that posed by circular business models that incorporate automated dynamic pricing. Several auditing approaches can detect whether AI systems exhibit bias. Governance audits can determine whether appropriate organisational measures are in place for the use of AI systems; empirical audits can assess the inputs and/or outputs of an algorithm for signs of bias; and technical audits can assess features of the dataset and/or model (Auditing Algorithms 2022). These audits could be undertaken internally, based on regulator, academic, or industry guidance (Raji et al. 2020; Mökander et al. 2021; Mökander and Floridi 2021),Footnote 15 or by an external organisation offering AI auditing services. Detecting harmful biases allows CE businesses to modify their systems to mitigate these harms. It is important to stress that AI auditing is still a relatively nascent field, so further research is needed to determine which framework is appropriate for a specific product or organisation. That said, regulatory guidance on auditing should become clearer as policy measures like the EU’s AI Act take shape.
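A very simple empirical audit of a dynamic pricing system's outputs might compare average offered prices across groups. The Python sketch below does this; the function names and the 1.1 tolerance on the ratio of the highest to the lowest group mean are hypothetical choices rather than a regulatory standard, and the sketch assumes the auditor has a lawful way to attach group labels to pricing records.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def mean_price_by_group(records: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average offered price for each group label in (group, price) records."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for group, price in records:
        totals[group] += price
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}


def price_disparity_audit(records: Iterable[Tuple[str, float]],
                          max_ratio: float = 1.1) -> Tuple[float, bool]:
    """Return the ratio of the highest to the lowest group mean price,
    and whether it exceeds the (hypothetical) tolerance `max_ratio`."""
    means = mean_price_by_group(records)
    if len(means) < 2:
        raise ValueError("need prices for at least two groups")
    lowest = min(means.values())
    if lowest <= 0:
        raise ValueError("prices must be positive")
    ratio = max(means.values()) / lowest
    return ratio, ratio > max_ratio


if __name__ == "__main__":
    # Hypothetical audit log of (group label, offered price) pairs.
    log = [("A", 10.0), ("A", 12.0), ("B", 14.0), ("B", 16.0)]
    ratio, flagged = price_disparity_audit(log)
    print(f"ratio={ratio:.2f}, flagged={flagged}")
```

Here group A's mean price is 11.0 and group B's is 15.0, so the audit reports a ratio of about 1.36 and flags the system. A flag is a prompt for investigation rather than proof of discrimination: the gap may reflect legitimate cost differences, and a technical audit of the features driving it (for instance, a ZIP code acting as a proxy) would be a natural next step.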

5.2 Meso-level

Addressing the structural inequalities associated with a shift to CE is challenging. Developing new industry-wide norms, particularly within the technology sector, could be a beneficial first step. An open source approach offers a possible mitigation for the international geographic inequalities that currently characterise the use of AI in CE. Patent waivers (e.g. as done by Tesla), open source software (e.g. PyTorch, originally developed by Meta and now hosted by the Linux Foundation), and open data (e.g. Google’s Dataset Search) could all support organisations that currently lack access to large datasets or the capabilities to integrate AI into their processes (Zhang et al. 2019). This opening of capabilities could stimulate circular businesses and higher quality jobs in the Global South. Moreover, it could improve the overall innovation ecosystem, speeding up the transition to CE. However, the limitations of this proposal should also be stressed. Structural inequalities, such as those relating to education and infrastructure, mean that any claim that geography is a thing of the past is fallacious (Anwar and Graham 2022). Likewise, open source does not mean that capabilities are democratised, given that the underlying designs and logics of systems and datasets are controlled by very few entities (Crawford and Joler 2018). Accordingly, open source can provide a promising first step towards reducing global inequalities, but the limited change it can make within wider power structures must be recognised.

The private sector should also develop best practices for an inclusive CE transition. One aspect of this is reskilling. Current reskilling initiatives are left mainly to individual organisations; these can help meet immediate business needs but are inadequate for managing longer-term occupational shifts, because they are often disconnected from one another and narrow in focus. Sector skills councils, non-profit organisations that help a single sector identify and close its specific skill gaps, could provide a strong foundation for the structural transition that CE requires (Chopra-McGowan and Reddy 2020). A second element of an inclusive use of AI for CE is promoting diversity in the development of applications and products. An important first step is to ensure that diversity, equity, and inclusion are prioritised when undertaking public engagement on policies or products. More generally, for AI-powered CE products to be designed inclusively, those creating the systems must reflect a society’s diversity. While this is true for all digital technologies, it is particularly important for AI, given that many systems are (semi-)autonomous and opaque, making it more difficult to detect and rectify issues ex-post. Correcting the diversity gap in the technology sector is necessary and will require a range of industry-wide remedies, including funding university outreach and scholarships, partnering with and supporting interest groups that represent minorities within the sector, and transparently reporting diversity statistics. Failing to do so will lead to the development of AI products that work well only for specific demographic groups.

5.3 Macro-level

At a macro-level, governments can help address the epistemological risks associated with AI for CE through supporting research and developing wide-ranging guidance. Regarding the former, the concept of CE has generally been developed and progressed by policymakers and industry, with several scientists questioning some fundamental premises around CE (Korhonen et al. 2018; Skene 2018). The first port of call is for governments to increase the funding available for research into foundational questions associated with CE and how to operationalise it in a way that minimises harmful trade-offs. There are already promising signs of such investment beginning to materialise. For instance, in 2021, UK Research and Innovation (UKRI) pledged £30 million to support a major research programme into CE, encompassing 30 universities and over 200 industry partners.Footnote 16

Guidance can help ensure that scientifically supported best practice is followed when using AI for CE. The needed guidance ranges from repositories of existing successful uses, to codes of practice, to standards defining appropriate variables when optimising AI for different CE problems. Examples of best practice for guidance or standards can be drawn from several adjacent fields, including environmental governance. For instance, the European Commission is set to introduce a standard methodology for quantifying the environmental footprint of private sector products and services in the first half of 2022, which is designed to mitigate “greenwashing”.Footnote 17 Similar methodologies could be proposed for measuring the carbon cost of AI systems, so as to understand the environmental trade-offs associated with their use for CE.
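One common back-of-the-envelope approach to estimating the operational carbon cost of an AI workload multiplies the energy drawn by the hardware, scaled up by the data centre's power usage effectiveness (PUE), by the carbon intensity of the local electricity grid. The Python sketch below implements that arithmetic. It is not the European Commission's methodology; the function name and all inputs are hypothetical, and it ignores embodied emissions from manufacturing hardware, which Sect. 4.4 flags as a further trade-off.

```python
def estimate_operational_emissions_kg(num_devices: int, avg_power_watts: float,
                                      hours: float, pue: float,
                                      grid_kg_co2e_per_kwh: float) -> float:
    """Rough operational emissions (kg CO2e) of a compute job.

    energy_kwh = num_devices * avg_power_watts * hours / 1000 * pue
    emissions  = energy_kwh * grid carbon intensity (kg CO2e per kWh)
    """
    if num_devices < 0 or avg_power_watts < 0 or hours < 0 or grid_kg_co2e_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    energy_kwh = num_devices * avg_power_watts * hours / 1000.0 * pue
    return energy_kwh * grid_kg_co2e_per_kwh


if __name__ == "__main__":
    # Hypothetical job: 8 accelerators at 300 W for 100 hours, PUE 1.5, 0.2 kg CO2e/kWh.
    print(estimate_operational_emissions_kg(8, 300.0, 100.0, 1.5, 0.2))
```

Under these assumed inputs the job draws 360 kWh and emits about 72 kg CO2e; the same job on a grid with half the carbon intensity would emit half as much, which is why the location and timing of computation matter for any such methodology.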

Finally, the epistemic risks that we highlighted in Sect. 4.4 call for a joined-up and flexible approach to the governance of AI for CE and CE in general. As our scientific understanding of complex environmental dynamics is still evolving, governance mechanisms aiming at supporting sustainable practices need to be able to accept and adapt quickly to new knowledge to ensure that AI for CE is a success. The risk here is that governance policies may standardise the wrong trade-offs and thus scale harms. Deep collaboration between governments, academia, and industry, potentially through new and dynamic institutions, will be necessary for overcoming this risk.

6 Conclusion

CE offers an alternative vision to the current linear economic model. Circularity would facilitate more environmentally sustainable development and a broader societal shift from consumption to quality experiences and relationships. AI will be crucial to realising this transition. It can support the design and maintenance of circular products and the creation of circular business models. Policymakers, industry, and academics are all taking a keen interest in these potential opportunities.

However, there has been little scrutiny of the ethical consequences of using AI to transition to CE and how to address potential risks. Using AI to develop and maintain circular products and businesses may pose significant challenges. Privacy, equality, and well-being could all be harmed through the unethical use of AI. Moreover, positive social and environmental outcomes could be undermined by a disjointed, uneven, or misguided application of AI in transitioning to a circular economy. These risks can be minimised and, in some cases, avoided altogether. To this end, we have proposed three sets of recommendations that can guide the ethical adoption of AI for fulfilling circular economy ambitions.

At the micro-level, adopting AI ethics best practices within organisations, such as privacy-enhancing technologies and ethics-based AI auditing, will help mitigate potential risks from privacy infringements and harmful biases. At the meso-level, promoting open source, industry-wide collaboration on reskilling, and inclusive design could help minimise the exacerbation of social and economic inequalities, both internationally and domestically. At the macro-level, governments can help to address some of the epistemological questions associated with using AI for CE by providing further funding for research and by developing guidance and standards collaboratively. Adopting these recommendations would help realise AI’s potential to foster CE. AI and CE can be mutually supportive, and an ethical “AI4CE” is an important project. It must also become an urgent priority.

Data availability

Not Applicable

Notes

For instance, the development of new types of plastic that are more environmentally friendly. See https://www.deepmind.com/blog/putting-the-power-of-alphafold-into-the-worlds-hands

The 3Rs are typically adopted, though many scholars go further through adding other ‘Rs’ pertaining to CE behaviours.

This point will be discussed in greater depth later in the paper.

One important caveat is that this does not necessarily suggest that CE initiatives are shrinking in real terms. Instead, this figure may be indicative of higher levels of overall consumption.

For example, bamboo chopsticks are less energy-intensive to produce than a highly specialised plastic fork. When both of these products are inevitably disposed of, the bamboo chopsticks can be easily re-assimilated into nature through biodegradation, while the fork may require multiple processes and machines for its recycling (Murray et al. 2017).

As mentioned in the introduction, this is on account of the novel features of AI which include (i) an ability to process vast amounts of data, (ii) autonomously or semi-autonomously, and (iii) to make inferences, predictions, decisions, or to generate content.

This is not to say that algorithmic profiling is not problematic. A significant amount of literature has considered how biases can emerge during profiling; see, for instance, Sweeney (2013). Rather, we chose to focus only on dynamic pricing to keep the analysis concise.

An example of an initiative already pioneering this type of technology is tinyML. See https://www.tinyml.org/

References

A new Circular Economy Action Plan for a cleaner and more competitive Europe (2020) European Commission. https://eur-lex.europa.eu/legal-content/EN/TXT/?qid=1583933814386&uri=COM:2020:98:FIN. Accessed 25 Jun 2021

Acerbi F, Forterre DA, Taisch M (2021) Role of artificial intelligence in circular manufacturing: a systematic literature review. IFAC-PapersOnLine 54(1):367–372. https://doi.org/10.1016/j.ifacol.2021.08.040

Achieving a circular economy: how the private sector is reimagining the future of business (2015) US Chamber of Commerce. https://www.uschamberfoundation.org/sites/default/files/Circular%20Economy%20Best%20Practices.pdf. Accessed 23 Nov 2021

Alexandris G, Katos V, Alexaki S, Hatzivasilis G (2018) Blockchains as enablers for auditing cooperative circular economy networks. In: 2018 IEEE 23rd International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD). IEEE, pp 1–7

Allam Z, Dhunny ZA (2019) On big data, artificial intelligence and smart cities. Cities 89:80–91. https://doi.org/10.1016/j.cities.2019.01.032

Andrae ASG, Edler T (2015) On global electricity usage of communication technology: trends to 2030. Challenges 6(1):117–157. https://doi.org/10.3390/challe6010117

Angwin J, Larson J, Mattu S (2015) The tiger mom tax: Asians are nearly twice as likely to get a higher price from Princeton review. ProPublica. https://www.propublica.org/article/asians-nearly-twice-as-likely-to-get-higher-price-from-princeton-review?token=UnpQVP7I8tJbGlMfvfYJisVdJ7aaZ7jl. Accessed 01 Jan 2022

Antikainen M, Uusitalo T, Kivikytö-Reponen P (2018) Digitalisation as an enabler of circular economy. Procedia CIRP 73:45–49. https://doi.org/10.1016/j.procir.2018.04.027

Antikainen M, Valkokari K (2016) A framework for sustainable circular business model innovation. Technol Innov Manag Rev 6(7):5–12

Anwar MA, Graham M (2022) The digital continent: placing Africa in planetary networks of work. Oxford University Press

Apple adds Earth Day donations to trade-in and recycling program (2018) Apple Newsroom. https://www.apple.com/uk/newsroom/2018/04/apple-adds-earth-day-donations-to-trade-in-and-recycling-program/. Accessed 03 Dec 2021

Askoxylakis I (2018) A framework for pairing circular economy and the internet of things. IEEE Int Conf Commun (ICC) 2018:1–6. https://doi.org/10.1109/ICC.2018.8422488

Auditing algorithms: the existing landscape, role of regulators and future outlook (2022) GOV.UK. https://www.gov.uk/government/publications/findings-from-the-drcf-algorithmic-processing-workstream-spring-2022/auditing-algorithms-the-existing-landscape-role-of-regulators-and-future-outlook. Accessed 10 Jun 2022

Ayres I (1991) Fair driving: gender and race discrimination in retail car negotiations. Harv Law Rev 104(4):817–872. https://doi.org/10.2307/1341506

Barbier EB (1987) The concept of sustainable economic development. Environ Conserv 14(2):101–110. https://doi.org/10.1017/S0376892900011449

Bibri SE (2018) Smart sustainable cities of the future: the untapped potential of big data analytics and context-aware computing for advancing sustainability. Springer

Binns R, Lyngs U, Van Kleek M, Zhao J, Libert T, Shadbolt N (2018) Third party tracking in the mobile ecosystem. In: Proceedings of the 10th ACM Conference on Web Science, pp 23–31. https://doi.org/10.1145/3201064.3201089

Blühdorn I, Welsh I (2007) Eco-politics beyond the paradigm of sustainability: a conceptual framework and research agenda. Environ Polit 16(2):185–205. https://doi.org/10.1080/09644010701211650

Blunck E, Salah Z, Kim J (2019) Industry 4.0, AI and Circular Economy—Opportunities and Challenges for a Sustainable Development. Global Trends and Challenges in the Era of the Fourth Industrial Revolution (The Industry 4.0)

Bressanelli G, Adrodegari F, Perona M, Saccani N (2018) Exploring how usage-focused business models enable circular economy through digital technologies. Sustainability 10(3):639. https://doi.org/10.3390/su10030639

Chander A (2017) The racist algorithm? Mich Law Rev 115(6):1023–1045. https://doi.org/10.3316/agispt.20190905016562

Charnley F, Tiwari D, Hutabarat W, Moreno M, Okorie O, Tiwari A (2019) Simulation to enable a data-driven circular economy. Sustainability 11(12):3379. https://doi.org/10.3390/su11123379

Chopra-McGowan A, Reddy SB (2020) What would it take to reskill entire industries? Harvard Business Review. https://hbr.org/2020/07/what-would-it-take-to-reskill-entire-industries

Cowls J, Tsamados A, Taddeo M, Floridi L (2021) The AI gambit: leveraging artificial intelligence to combat climate change—opportunities, challenges, and recommendations. AI Soc. https://doi.org/10.1007/s00146-021-01294-x

Crawford K, Joler V (2018) Anatomy of an AI System: The Amazon Echo as an anatomical map of human labor, data and planetary resources. Anatomy of an AI System. http://www.anatomyof.ai

Danks D, London AJ (2017) Algorithmic bias in autonomous systems. In: Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, pp 4691–4697. https://doi.org/10.24963/ijcai.2017/654

de Wit M, Haigh L (2022) The Circularity Gap Report 2022. Circularity Gap Reporting Institution. https://www.circularonline.co.uk/wp-content/uploads/2022/01/Circularity-Gap-Report-2022.pdf. Accessed 26 Feb 2022

Dwork C (2008) Differential privacy: a survey of results. In: Agrawal M, Du D, Duan Z, Li A (eds) Theory and applications of models of computation. Springer, pp 1–19. https://doi.org/10.1007/978-3-540-79228-4_1

Ellen MacArthur Foundation (2017) What is a Circular Economy? Ellen MacArthur Foundation. https://www.ellenmacarthurfoundation.org/circular-economy/concept. Accessed 15 Mar 2021

Ellen MacArthur Foundation, Google (2019) Artificial intelligence and the circular economy. Ellen MacArthur Foundation

Fleming S (2020) 4 creative ways companies are embracing the circular economy. World Economic Forum. https://www.weforum.org/agenda/2020/12/circular-economy-examples-ikea-burger-king-adidas/. Accessed 17 Nov 2021

Floridi L (2014) Open data, data protection, and group privacy. Philos Technol 27(1):1–3. https://doi.org/10.1007/s13347-014-0157-8

Floridi L (2019) The green and the blue: naïve ideas to improve politics in a mature information society. Springer, pp 183–221

Floridi L (2020) The green and the blue: a new political ontology for a mature information society. Philos Jahrb 127(2):307–338

Floridi L, Taddeo M (2016) What is data ethics? Philos Trans R Soc 374(2083):20160360. https://doi.org/10.1098/rsta.2016.0360

Floridi L, Cowls J, King TC, Taddeo M (2020) How to design AI for social good: seven essential factors. Sci Eng Ethics 26(3):1771–1796. https://doi.org/10.1007/s11948-020-00213-5

Gailhofer P, Herold A, Schemmel JP, Scherf C-S, Urrutia C, Köhler AR, Braungardt S (2021) The role of Artificial Intelligence in the European Green Deal. Policy Department for Economic, Scientific and Quality of Life Policies Directorate-General for Internal Policies, 70

Garrido GM, Sedlmeir J, Uludağ Ö, Alaoui IS, Luckow A, Matthes F (2021) Revealing the landscape of privacy-enhancing technologies in the context of data markets for the IoT: A Systematic Literature Review. ArXiv:2107.11905 [Cs]. http://arxiv.org/abs/2107.11905

Gaustad G, Williams E, Leader A (2021) Rare earth metals from secondary sources: review of potential supply from waste and byproducts. Resour Conserv Recycl 167:105213. https://doi.org/10.1016/j.resconrec.2020.105213

Ge Y, Knittel CR, MacKenzie D, Zoepf S (2020) Racial discrimination in transportation network companies. J Public Econ 190:104205. https://doi.org/10.1016/j.jpubeco.2020.104205

Geissdoerfer M, Savaget P, Bocken NMP, Hultink EJ (2017) The circular economy—a new sustainability paradigm? J Clean Prod 143:757–768. https://doi.org/10.1016/j.jclepro.2016.12.048

Geng Y, Sarkis J, Bleischwitz R (2019) How to globalize the circular economy. Nature 565(7738):153–155. https://doi.org/10.1038/d41586-019-00017-z

Ghisellini P, Cialani C, Ulgiati S (2016) A review on circular economy: the expected transition to a balanced interplay of environmental and economic systems. J Clean Prod 114:11–32. https://doi.org/10.1016/j.jclepro.2015.09.007

Ghoreishi M, Happonen A (2020) Key enablers for deploying artificial intelligence for circular economy embracing sustainable product design: Three case studies. 050008. https://doi.org/10.1063/5.0001339

Global survey: the state of AI in 2021 (2021). McKinsey. https://www.mckinsey.com/business-functions/mckinsey-analytics/our-insights/global-survey-the-state-of-ai-in-2021. Accessed 13 Feb 2022

Gray ML, Suri S (2019) Ghost work: how to stop silicon valley from building a new global underclass. Houghton Mifflin Harcourt

Heikkila M (2022) UK consumer group: Tinder’s pricing algorithm discriminates against over-30s. POLITICO. https://www.politico.eu/article/uk-consumer-group-tinders-pricing-algorithm-discriminates-against-gay-users-and-over-30s/. Accessed 07 Apr 2022

Hippold S (2019) Gartner Predicts Circular Economies Will Replace Linear Economies in 10 Years. Gartner. https://www.gartner.com/en/newsroom/press-releases/2019-09-26-gartner-predicts-circular-economies-will-replace-line. Accessed 10 Nov 2021

Internet users, UK: 2020 (2021) Office for National Statistics. https://www.ons.gov.uk/businessindustryandtrade/itandinternetindustry/bulletins/internetusers/2020. Accessed 23 Jan 2022

Jones N (2018) How to stop data centres from gobbling up the world’s electricity. Nature 561(7722):163–166. https://doi.org/10.1038/d41586-018-06610-y

Kirchherr J, Reike D, Hekkert M (2017) Conceptualizing the circular economy: an analysis of 114 definitions. Resour Conserv Recycl 127:221–232. https://doi.org/10.1016/j.resconrec.2017.09.005

Korhonen J, Honkasalo A, Seppälä J (2018) Circular economy: the concept and its limitations. Ecol Econ 143:37–46. https://doi.org/10.1016/j.ecolecon.2017.06.041

Kouhizadeh M, Sarkis J, Zhu Q (2019) At the nexus of blockchain technology, the circular economy, and product deletion. Appl Sci 9(8):1712. https://doi.org/10.3390/app9081712

Larsson A, Lindfred L (2019) Digitalization, circular economy and the future of labor. In: Larsson A, Teigland R (eds) The digital transformation of labor: automation, the gig economy and welfare, 1st edn. Routledge, London

Lawrence M, Roberts C, King L (2017) Managing automation: employment, inequality and ethics in the digital age. IPPR Commission on Economic Justice. https://www.ippr.org/research/publications/managing-automation. Accessed 03 Jan 2022

Luthra S, Mangla SK (2018) Evaluating challenges to Industry 4.0 initiatives for supply chain sustainability in emerging economies. Process Saf Environ Prot 117:168–179. https://doi.org/10.1016/j.psep.2018.04.018

Mah A (2021) Future-proofing capitalism: the paradox of the circular economy for plastics. Glob Environ Polit 21(2):121–142. https://doi.org/10.1162/glep_a_00594

Mathews JA, Tan H (2016) Circular economy: lessons from China. Nature 531(7595):440–442. https://doi.org/10.1038/531440a

Maxwell M, McCool S, Peppin A, Spittle K, Stocks T (2021) Public dialogue on location data ethics: engagement report. Geospatial Commission, Ada Lovelace Institute & Traverse

McDowall W, Geng Y, Huang B, Barteková E, Bleischwitz R, Türkeli S, Kemp R, Doménech T (2017) Circular economy policies in China and Europe. J Ind Ecol 21(3):651–661. https://doi.org/10.1111/jiec.12597

Milios L (2018) Advancing to a circular economy: three essential ingredients for a comprehensive policy mix. Sustain Sci 13(3):861–878. https://doi.org/10.1007/s11625-017-0502-9

Miller AP, Hosanagar K (2019) How targeted ads and dynamic pricing can perpetuate bias. Harvard Business Review. https://hbr.org/2019/11/how-targeted-ads-and-dynamic-pricing-can-perpetuate-bias

Mökander J, Floridi L (2021) Ethics-based auditing to develop trustworthy AI. Mind Mach. https://doi.org/10.1007/s11023-021-09557-8

Mökander J, Morley J, Taddeo M, Floridi L (2021) Ethics-based auditing of automated decision-making systems: nature, scope, and limitations. Sci Eng Ethics 27(4):44. https://doi.org/10.1007/s11948-021-00319-4

Moore D (2021) China trials AI-enabled sorting machines to keep plastic in circular economy. Circular Online. https://www.circularonline.co.uk/news/china-trials-ai-enabled-sorting-machines-to-keep-plastic-in-circular-economy/. Accessed 10 Jan 2022

Muro M (2020) No matter which way you look at it, tech jobs are still concentrating in just a few cities. https://www.brookings.edu/research/tech-is-still-concentrating/. Accessed 29 Jan 2022

Murray D, Liao J, Stankovic L, Stankovic V (2016) Understanding usage patterns of electric kettle and energy saving potential. Appl Energy 171:231–242. https://doi.org/10.1016/j.apenergy.2016.03.038

Murray A, Skene K, Haynes K (2017) The circular economy: an interdisciplinary exploration of the concept and application in a global context. J Bus Ethics 140(3):369–380. https://doi.org/10.1007/s10551-015-2693-2

National Recycling Strategy (2021) Part one of a series on building a circular economy for all. EPA; National Recycling Strategy. 68

Nobre GC, Tavares E (2017) Scientific literature analysis on big data and internet of things applications on circular economy: a bibliometric study. Scientometrics 111(1):463–492. https://doi.org/10.1007/s11192-017-2281-6

Notes from the AI Frontier (2018) Modeling the impact of AI on the world economy. McKinsey Global Institute, pp 3–4

Oberle B, Bringezu S, Hatfield-Dodds S, Hellweg S, Schandl H, Clement J (2019) Global Resources Outlook 2019. UN Environment. https://www.resourcepanel.org/reports/global-resources-outlook. Accessed 01 Nov 2021

Okorie O, Salonitis K, Charnley F, Moreno M, Turner C, Tiwari A (2018) Digitisation and the circular economy: a review of current research and future trends. Energies 11(11):3009. https://doi.org/10.3390/en11113009

Osborne H (2006) Humans using resources of two planets, WWF warns. The Guardian. http://www.theguardian.com/environment/2006/oct/24/conservation.internationalnews. Accessed 01 Feb 2022

Pandey A, Caliskan A (2021) Disparate impact of artificial intelligence bias in ridehailing economy’s price discrimination algorithms. In: Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society. Association for Computing Machinery, pp 822–833. https://doi.org/10.1145/3461702.3462561

Pasquale F (2015) The black box society: the secret algorithms that control money and information. Harvard University Press. https://doi.org/10.4159/harvard.9780674736061

Phillis YA, Andriantiatsaholiniaina LA (2001) Sustainability: an ill-defined concept and its assessment using fuzzy logic. Ecol Econ 37(3):435–456. https://doi.org/10.1016/S0921-8009(00)00290-1

Ponte S (2019) Business, power and sustainability in a world of global value chains. Zed Books. https://doi.org/10.5040/9781350218826

Preston F (2012) A global redesign? Shaping the circular economy (p. 20). Chatham House. https://www.chathamhouse.org/sites/default/files/public/Research/Energy,%20Environment%20and%20Development/bp0312_preston.pdf. Accessed 18 Nov 2022

Privacy enhancing technologies for trustworthy use of data (2021) Centre for Data Ethics and Innovation. https://cdei.blog.gov.uk/2021/02/09/privacy-enhancing-technologies-for-trustworthy-use-of-data/. Accessed 17 Jan 2022

Purvis B, Mao Y, Robinson D (2019) Three pillars of sustainability: in search of conceptual origins. Sustain Sci 14(3):681–695. https://doi.org/10.1007/s11625-018-0627-5

Raji ID, Smart A, White RN, Mitchell M, Gebru T, Hutchinson B, Smith-Loud J, Theron D, Barnes P (2020) Closing the AI accountability gap: defining an end-to-end framework for internal algorithmic auditing. In: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp 33–44. https://doi.org/10.1145/3351095.3372873

Ramadoss TS, Alam H, Seeram R (2018) Artificial intelligence and internet of things enabled circular economy. Int J Eng Sci (IJES) 7(9 Ver III):55–63

Reuter MA (2016) Digitalizing the circular economy: circular economy engineering defined by the metallurgical internet of things. Metall Mater Trans B 47(6):3194–3220. https://doi.org/10.1007/s11663-016-0735-5

Roberts H, Cowls J, Hine E, Mazzi F, Tsamados A, Taddeo M, Floridi L (2021) Achieving a ‘good AI society’: comparing the aims and progress of the EU and the US. Sci Eng Ethics 27(6):68. https://doi.org/10.1007/s11948-021-00340-7

Sankaran K (2020) Carbon emission and plastic pollution: how circular economy, blockchain, and artificial intelligence support energy transition? J Innov Manag 7(4):7–13. https://doi.org/10.24840/2183-0606_007.004_0002

Sariatli F (2017) Linear economy versus circular economy: a comparative and analyzer study for optimization of economy for sustainability. Visegrad J Bioecon Sustain Dev 6(1):31–34. https://doi.org/10.1515/vjbsd-2017-0005

Schröder P, Bengtsson M, Cohen M, Dewick P, Hofstetter J, Sarkis J (2019) Degrowth within—aligning circular economy and strong sustainability narratives. Resour Conserv Recycl 146:190–191. https://doi.org/10.1016/j.resconrec.2019.03.038

Silvestri L, Forcina A, Silvestri C, Traverso M (2021) Circularity potential of rare earths for sustainable mobility: recent developments, challenges and future prospects. J Clean Prod 292:126089. https://doi.org/10.1016/j.jclepro.2021.126089

Skene KR (2018) Circles, spirals, pyramids and cubes: Why the circular economy cannot work. Sustain Sci 13(2):479–492. https://doi.org/10.1007/s11625-017-0443-3

Smart meter statistics in Great Britain: quarterly report to end March 2020 (2020) Department for Business, Energy and Industrial Strategy

Stahel WR (2016) The circular economy. Nature 531(7595):435–438. https://doi.org/10.1038/531435a

Sweeney L (2013) Discrimination in online ad delivery. Commun ACM 56(5):44–54. https://doi.org/10.1145/2447976.2447990

Taddeo M, Tsamados A, Cowls J, Floridi L (2021) Artificial intelligence and the climate emergency: opportunities, challenges, and recommendations. One Earth 4(6):776–779. https://doi.org/10.1016/j.oneear.2021.05.018

Taylor L, Floridi L, van der Sloot B (2016) Group privacy: new challenges of data technologies. Springer

The circular economy in detail (n.d.) Ellen MacArthur Foundation. https://archive.ellenmacarthurfoundation.org/explore/the-circular-economy-in-detail. Accessed 19 Feb 2022

Tremblay J-F (2016) How rare earths bring clean technologies, dirty landscapes. Chem Eng News 94(32):24

Tsamados A, Aggarwal N, Cowls J, Morley J, Roberts H, Taddeo M, Floridi L (2021) The ethics of algorithms: key problems and solutions. AI Soc. https://doi.org/10.1007/s00146-021-01154-8

U.S. Chamber of Commerce Foundation (2017) Circularity vs. sustainability. U.S. Chamber of Commerce Foundation. https://www.uschamberfoundation.org/circular-economy-toolbox/about-circularity/circularity-vs-sustainability. Accessed 11 Nov 2021

Voulvoulis N (2018) Water reuse from a circular economy perspective and potential risks from an unregulated approach. Curr Opin Environ Sci Health 2:32–45. https://doi.org/10.1016/j.coesh.2018.01.005

Weber S (2017) Data, development, and growth. Bus Polit 19(3):397–423. https://doi.org/10.1017/bap.2017.3

What is a digital twin? (n.d.) IBM. https://www.ibm.com/topics/what-is-a-digital-twin. Accessed 21 Jan 2022

Zhang A, Venkatesh VG, Liu Y, Wan M, Qu T, Huisingh D (2019) Barriers to smart waste management for a circular economy in China. J Clean Prod 240:118198. https://doi.org/10.1016/j.jclepro.2019.118198

Ziosi M, Hewitt B, Juneja P, Taddeo M, Floridi L (2022) Smart cities: mapping their ethical implications. SSRN Scholarly Paper ID 4001761. Soc Sci Res Netw. https://doi.org/10.2139/ssrn.4001761

Renteria A, Alvarez-de-los-Mozos E (2019) Human-robot collaboration as a new paradigm in circular economy for WEEE management. Procedia Manuf 38:375–382. https://doi.org/10.1016/j.promfg.2020.01.048

Fletcher SR, Webb P (2017) Industrial robot ethics: the challenges of closer human collaboration in future manufacturing systems. In: Aldinhas Ferreira MI, Silva Sequeira J, Tokhi MO, Kadar EE, Virk GS (eds) A world with robots, vol 84. Springer, Cham, pp 159–169. https://doi.org/10.1007/978-3-319-46667-5_12

Frank AG (1986) The development of underdevelopment. In: Promise of development. Routledge

Acknowledgements

Huw Roberts’ research is supported by a research grant for the AI*SDG project at the University of Oxford’s Saïd Business School. Mariarosaria Taddeo wishes to acknowledge that she serves as non-executive president of the board of directors of Noovle Spa.

Author information

Contributions

Conceptualisation: LF, MT, and HR. Data collection and analysis: HR and JZ. Paper writing: HR and JZ. Paper review and revisions: HR, JZ, BB, JC, BG, PJ, AT, MZ, MT, and LF. Supervision: LF and MT.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Roberts, H., Zhang, J., Bariach, B. et al. Artificial intelligence in support of the circular economy: ethical considerations and a path forward. AI & Soc (2022). https://doi.org/10.1007/s00146-022-01596-8
