Abstract

In July 2017, China’s State Council released the country’s strategy for developing artificial intelligence (AI), entitled ‘New Generation Artificial Intelligence Development Plan’ (新一代人工智能发展规划). This strategy outlined China’s aims to become the world leader in AI by 2030, to monetise AI into a trillion-yuan (ca. 150 billion dollars) industry, and to emerge as the driving force in defining ethical norms and standards for AI. Several reports have analysed specific aspects of China’s AI policies or have assessed the country’s technical capabilities. Instead, in this article, we focus on the socio-political background and policy debates that are shaping China’s AI strategy. In particular, we analyse the main strategic areas in which China is investing in AI and the concurrent ethical debates that are delimiting its use. By focusing on the policy backdrop, we seek to provide a more comprehensive and critical understanding of China’s AI policy by bringing together debates and analyses of a wide array of policy documents.


1 Introduction

In March 2016, a Google DeepMind artificial intelligence (AI) designed for playing the board game Go (AlphaGo) defeated Lee Sedol, a South Korean professional Go player. At the time, Sedol had the second-highest number of Go international championship victories, yet lost against AlphaGo by four games to one (Borowiec 2016). While the match received some coverage in the West, it was a major event in China, where over 280 million people watched it live. Two government insiders described this match as a ‘Sputnik moment’ for the development of AI within China (Lee 2018, p. 3). Although there had been AI policy initiatives in the country previously, AlphaGo’s victory contributed to a sharper policy focus on AI, as indicated by the 2017 ‘New Generation Artificial Intelligence Development Plan’ (AIDP). The AIDP set out strategic aims and delineated the overarching goal of making China the world leader in AI by 2030.Footnote 1

A limited number of reports have attempted to assess the plausibility of China’s AI strategy given China’s current technical capabilities (Ding 2018; “China AI Development Report” 2018). Others have sought to understand specific areas of development, for instance, security or economic growth (Barton et al. 2017; “Net Impact of AI on Jobs in China?” 2018; Allen 2019). However, to grasp the ramified implications and direction of the AIDP, it is insufficient to analyse specific elements in isolation or to consider only technical capabilities. Instead, what is required is a more comprehensive and critical analysis of the driving forces behind China’s AI strategy, its political economy, cultural specificities, and the current relevant policy debates. This is the task we undertake in this article.

To provide this contextualised understanding, Sect. 2 maps relevant AI legislation in China. We argue that, although previous policy initiatives have stated an intent to develop AI, these efforts have been fragmented and have treated AI as one of many tools for achieving other policy goals. In contrast, the AIDP is the first national-level legislative effort that focuses explicitly on the development of AI as a unified strategy. Following this, Sect. 3 analyses the interventions and impact of the AIDP on three strategic areas identified in the document, namely: international competition, economic growth, and social governance. Section 4 focuses on China’s aim to develop ethical norms and standards for AI. There we argue that, although the debate is in its early stages, the desire to define normative boundaries for acceptable uses of AI is present and pressing. Altogether, this article seeks to provide a detailed and critical understanding of the reasons behind, and the current trajectory of, China’s AI strategy. It emphasises that the Chinese government is aware of the potential benefits, practical risks, and the ethical challenges that AI presents, and that the direction of China’s AI strategy will largely be determined by the interplay of these factors and by the extent to which the government’s interests may outweigh ethical concerns. Section 5 concludes the paper by summarising the key findings of our analysis.

2 AI legislation in China

Since 2013, China has published several national-level policy documents, which reflect the intention to develop and deploy AI in a variety of sectors. For example, in 2015, the State Council released guidelines on China’s ‘Internet +’ action. It sought to integrate the internet into all elements of the economy and society. The document clearly stated the importance of cultivating emerging AI industries and investing in research and development. In the same year, the 10-year plan ‘Made in China 2025’ was released, with the aim to transform China into the dominant player in global high-tech manufacturing, including AI (McBride and Chatzky 2019). Another notable example is the Central Committee of the Communist Party of China’s (CCP) 13th 5-year plan,Footnote 2 published in March 2016. The document mentioned AI as one of the six critical areas for developing the country’s emerging industries (CCP 2016), and as an important factor in stimulating economic growth. When read together, these documents indicate that there has been a conscious effort to develop and use AI in China for some time, even before ‘the Sputnik moment’. However, prior to 2016, AI was presented merely as one technology among many others, which could be useful in achieving a range of policy goals. This changed with the release of the AIDP.

2.1 The New Generation Artificial Intelligence Development Plan (AIDP)

Released in July 2017 by the State Council (which is the chief administrative body within China), the ‘New Generation Artificial Intelligence Development Plan’ (AIDP) acts as a unified document that outlines China’s AI policy objectives. Chinese media have referred to it as ‘year one of China’s AI development strategy’ (“China AI Development Report” 2018, p. 63). The overarching aim of the policy, as articulated by the AIDP, is to make China the world centre of AI innovation by 2030, and make AI ‘the main driving force for China’s industrial upgrading and economic transformation’ (AIDP 2017). The AIDP also indicates the importance of using AI in a broader range of sectors, including defence and social welfare, and focuses on the need to develop standards and ethical norms for the use of AI. Altogether, the Plan provides a comprehensive AI strategy and challenges other leading powers in many key areas.

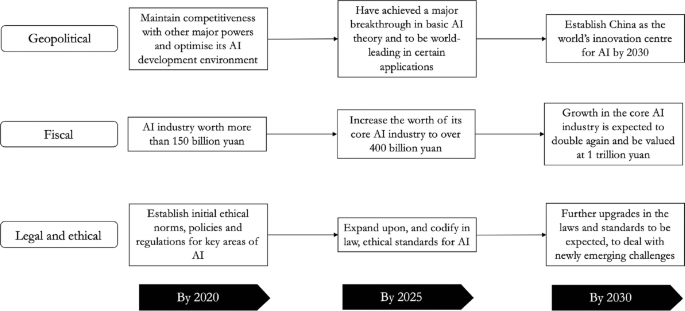

The AIDP delineates three key steps, each of which contains a series of goals, some of which are tightly defined, while others are vaguer. They are summarised below and in Fig. 1:

1. By 2020, China aims to maintain competitiveness with other major powers and optimise its AI development environment. In monetary terms, China intends to create an AI industry worth more than 150 billion yuan (ca. 21 billion dollars). Lastly, it seeks to establish initial ethical norms, policies, and regulations for vital areas of AI.

2. By 2025, China aims to have achieved a ‘major breakthrough’ (as stated in the document) in basic AI theory and to be world-leading in some applications (‘some technologies and applications achieve a world-leading level’). China also targets an increase in the worth of its core AI industry to over 400 billion yuan (ca. 58 billion dollars), and plans to expand upon, and codify in law, ethical standards for AI.

3. By 2030, China seeks to become the world’s innovation centre for AI. By then, growth in the core AI industry is expected to more than double again, reaching a value of 1 trillion yuan (ca. 147 billion dollars), and further upgrades to the laws and standards are expected, to deal with newly emerging challenges.
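As a quick arithmetic check on the monetary targets in the three steps above, the implied growth multiples and average annual growth rates can be worked out as follows. This is an illustrative calculation, not a figure stated in the AIDP: it takes the thresholds at face value (values in billions of yuan), assumes each target is met exactly at the end of its five-year period, and assumes smooth compound growth within each period.

```latex
\begin{aligned}
\text{2020--2025:}\quad & \frac{400}{150} \approx 2.67, & \left(\frac{400}{150}\right)^{1/5} - 1 &\approx 21.7\%\ \text{per year} \\
\text{2025--2030:}\quad & \frac{1000}{400} = 2.5, & \left(\frac{1000}{400}\right)^{1/5} - 1 &\approx 20.1\%\ \text{per year}
\end{aligned}
```

The second multiple (2.5) is what ‘more than double again’ refers to; under these assumptions, both intervals imply sustained growth of roughly a fifth per year.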

2.2 Implementing the AIDP

The Plan will be guided by a new AI Strategy Advisory Committee, established in November 2017, and will be coordinated by the Ministry of Science and Technology (MOST), alongside the AI Plan Promotion Office, and other relevant bodies (“AI Policy-China” n.d.).Footnote 3 Although these bodies will provide central guidance, the Plan is not meant to act as a centrally enacted initiative. The AIDP instead functions as a stamp of approval for de-risking and actively incentivising local projects that make use of AI. Recognising this point is important: the AIDP is an ambitious strategy set by the central government, but the actual innovation and transformation are expected to be driven by the private sector and local governments. In other words, it is more appropriate to view the AIDP as a highly incentivised ‘wish list’ to nudge and coordinate other relevant stakeholders, rather than a central directive (Sheehan 2018). This is why the 3-year plan promoting the AIDP (2018–2020) emphasises coordination between provinces and local governments.

With regard to the private sector, China has selected ‘AI national champions’: businesses endorsed by the government to focus on developing specific sectors of AI. For example, Baidu has been tasked with the development of autonomous driving, Alibaba with the development of smart cities, and Tencent with computer vision for medical diagnoses (Jing and Dai 2017). Being endorsed as a national champion involves a deal whereby private companies agree to focus on the government’s strategic aims. In return, these companies receive preferential contract bidding, easier access to finance, and sometimes market share protection. Although other companies can compete in these fields, historically the status of ‘national champion’ has helped larger companies dominate their respective sectors (Graceffo 2017).

With this said, the new AI ‘national team’ differs from previous state-sponsored national champions in that its members are already internationally successful in their respective fields, independently of this preferential treatment. Furthermore, there is extensive domestic competition in the areas where national champions have been selected. This suggests that competition may not be stymied in the traditional manner. For instance, all the companies selected as AI national champions are developing technologies in Alibaba’s designated area of smart cities (Ding 2019). In parallel with this, patronage does not prevent smaller companies from benefiting from the financial incentive structure. Technology start-ups within China often receive government support and subsidies for developing AI technologies. As an example, Zhongguancun Innovation Town is a purpose-built, government-subsidised incubator workspace that provides a suite of services to help Chinese technology start-ups succeed, often in the sectors where national champions have been selected. Finally, there are also cases where there is no specific endorsement. For example, while the AIDP promotes smart courts, with a stated desire to develop AI for evidence collection, case analysis, and legal document reading, as of April 2020 there was no national champion selected for developing AI applications for the administration of justice.

Concerning local governments, the political structure within China creates a system of incentives for fulfilling national government policy aims. Short tenures for provincial politicians and promotions based on economic performance provide strong incentives for following centrally defined government initiatives (Li and Zhou 2005; Persson and Zhuravskaya 2016). Thus, local governments become hotbeds for testing and developing central government policy. The strength of this incentive system can be seen in the decision made by the administration of the city of Tianjin to establish a $5 billion fund for the development of AI, around the same time as the publication of the AIDP (Mozur 2017). At the same time, it is important to recognise how the absence of an effective accountability review of local government spending creates problems within this system. Notably, it has facilitated a mindset in which local politicians know that the central government will bail them out for failed projects, leading to poor budget management (Ji 2014). Clear examples of this are the large-scale port-building initiatives developed by east-coast provincial governments, which were based more on prestige than on any economic rationale and led to overcapacity and disorderly competition (Zhu 2019).

These incentive structures contain a subtle distinction. A national team has been selected to lead the research and development in a handful of designated strategic areas. Beyond these selected companies, there are few specific guidelines provided to industry and local state agents as to which items to pursue on the AIDP’s ‘wish list’. This enables companies to cherry-pick the technologies they want to develop and provides local governments with a choice of private sector partners for integrating AI into city infrastructure or governance (Sheehan 2018). Subsequent documentation has emphasised the importance of strengthening organisation and implementation,Footnote 4 including between provinces and ministries, yet it is unclear how this coordination would function in practice. Thus, the AIDP may work as a ‘wish list’, but the exact guidance, incentivisation and risk differ depending on the type of stakeholder.

The AIDP should not be read in isolation when considering China’s AI strategy (Ding 2018), but it does provide the most transparent and influential indication of the driving forces behind China’s AI strategy. Because of the AIDP’s significance (in terms of policy) and importance (in terms of strategy), in the rest of this article, we shall use it as the organisational skeleton for explaining the drivers and ethical boundaries shaping China’s approach to AI.

3 China’s AI strategic focus

The AIDP provides a longitudinal perspective on China’s strategic situation regarding AI, including its comparative capabilities, the opportunities offered, and the potential risks. Following a technology-first approach, it may be tempting to concentrate one’s attention on the stated capabilities of AI, to gain an insight into the types of technologies in which China is investing. However, this would likely offer only a short-term perspective and would soon be out of date as technological innovation advances rapidly. Furthermore, it would do little to explain why China is seeking to develop a strong AI sector in the decades to come. To this end, it is more useful to try to understand China’s strategic focus from a policy-first approach, by analysing the areas where China considers that AI presents opportunities. In this section, we focus on these areas of particular importance to China, on how and what China expects to gain from developing AI in each of them, and on some of the perceived risks present in each of these areas. The AIDP highlights three areas where AI can make a substantial difference within China: international competition, economic development, and social governance. They are closely interrelated but, for the sake of clarity, we shall analyse them separately, and contextualise each of them by discussing the relevant literature surrounding the broader political backdrop and contemporary policy debates.

3.1 International competition

The AIDP states that AI has become a new focus of international competition and that ‘the development of AI [is] […] a major strategy to enhance national competitiveness and protect national security’ (AIDP 2017). It emphasises that China should take the strategic opportunity afforded by AI to make ‘leapfrog developments’Footnote 5 in military capabilities. Although China and the US are regularly portrayed as geopolitical rivals (Mearsheimer 2010; Zhao 2015), the military budgets of the two powers remain significantly different. China has the world’s second-largest military budget, with $175 billion allocated in 2019 (Chan and Zhen 2019), but its spending is still only a third of the US budget (Martina and Blanchard 2019). Rather than trying to outspend the US on conventional weaponry, China sees investment in AI as an opportunity to make radical breakthroughs in military technologies and thus overtake the US.

Attempts to use technologies to challenge US hegemony are nothing new within China’s military strategy. Since the late 1990s, the country has been following a policy of ‘shashoujian’ (杀手锏), which roughly translates as ‘trump card’ (Bruzdzinski 2004). Rather than directly competing with the US, China has sought to develop asymmetric capabilities, which could provide a critical advantage in warfare and credible deterrence in peacetime (Blasko 2011). This trump-card strategy seeks to use unorthodox technologies against enemies’ weaknesses to gain the initiative in war (Peng and Yao 2005). The trump-card approach was echoed by the former General Secretary of the CCP, Jiang Zemin, who emphasised that technology should be the foremost focus of the military, especially the technology that the ‘enemy fears [the] most’ (Cheung et al. 2016).

One area in which China has been developing these asymmetric tactics is cyber warfare, where capabilities have been developed for targeting the US military’s battle-critical networks if needed (Kania 2017a). Alongside this, evidence points to the persistent use of cyberattacks to collect scientific, technological and commercial intelligence (Inkster 2010). The Chinese position on these capabilities is ambivalent. On the one hand, China has officially promoted international initiatives for regulating hostile state-run activities in cyberspace and for filling the existing regulatory gap for state behaviour in this domain (Ku 2017; Austin 2016; Taddeo 2012; Taddeo 2016). For example, China co-sponsored the International Code of Conduct for Information Security at the UN General Assembly in September 2011, which sought a commitment against using information technologies in acts of aggression, and it has provided continued support for dialogue by the UN Group of Governmental Experts on preventing cyber conflicts (Meyer 2020). On the other hand, China has also run cyber operations targeting US infrastructure, aimed at extracting commercial and scientific information and at acquiring relevant intelligence, against targets in several places, including Australia, the Philippines, Hong Kong, and the US.Footnote 6

The desire to leapfrog the US is echoed in statements from China’s political and military leadership. For instance, President Xi Jinping stated in 2017 that ‘under a situation of increasingly fierce international military competition, only the innovators win’ (Kania 2020, p. 2). This sentiment is shared by Lieutenant General Liu Guozhi, deputy of the 19th National Congress and director of the Science and Technology Committee of the Central Military Commission, who stated in an interview that AI presented a rare opportunity for taking shortcuts to achieve innovation and surpass rivals (“AI military reform” 2017). In parallel, academics affiliated with the People’s Liberation Army (PLA) highlight that AI will be used to predict battlefield situations and identify optimal approaches, facilitating ‘winning before the war’ (Li 2019). Some members of the PLA go further than this in anticipating a battlefield ‘singularity’, where AI outpaces human decision-making (Kania 2017a). These statements emphasise the belief, which is widespread throughout China’s military and defence circles, in the importance of utilising emergent technologies including AI to achieve a competitive military advantage.

As China has developed economically and militarily, the focus of the country’s military strategy has also matured. Over the past few years, China’s strategy has coalesced around efforts to develop ‘new concept weapons’ to surpass the US’s military capabilities. These are not limited to AI alone, and are applicable to China’s investments in other fields of emerging military technologies, like hypersonic weaponry (Kania 2017b). Therefore, China’s efforts to use technology to gain an advantage in military affairs should not be seen as something new, but instead understood within a broader historical context of finding innovative ways to challenge the hegemony of the US.

Although the push for leapfrog developments marks a continuation of previous policy, there are strong concurrent indications that Chinese officials are also concerned about AI causing an arms race and potential military escalation. Statements of senior officials seem to suggest a belief in cooperation and arms control to mitigate the risks that AI’s military development poses. In particular, three major risks are central to the debate:

(i) ensuring human involvement and control once AI-based weapons are deployed;

(ii) the absence of well-defined norms for state behaviour and the use of AI weapons; and

(iii) the resulting increase in the likelihood of misperceptions or unintentional conflict escalation (Taddeo and Floridi 2018; Allen 2019).

These concerns underpin China’s support for restricting the use of autonomous weapons, as expressed at the Fifth Review Conference of the Convention on Certain Conventional Weapons (“Chinese Position Paper” 2016) and, more recently, its stated desire to ban lethal autonomous weapons (Kania 2018a). Despite concerns (i)–(iii), it is crucial to stress that China is the actor pursuing the most aggressive strategy for developing AI for military uses among the major military powers (Pecotic 2019).

Digging more deeply into China’s actions on the international stage is revealing. The ban that China advocated encompassed only usage and not the development or production of lethal autonomous weapon systems. Thus, it would not prevent the existence of lethal autonomous weapons serving as a deterrent, in much the same way that China has a putative ‘no first use’ (NFU) doctrine for nuclear weapons. Furthermore, the definition of autonomy embraced by China is extremely narrow, including only fully autonomous weapons (“UN Seeks Human Control Over Force” 2018). Some commentators argue that this juxtaposition of cautious concerns about deployment, on the one hand, and an aggressive approach to development, on the other, can be explained by Chinese efforts to exert pressure on other militaries whose democratic societies are more sensitive to the controversies of using autonomous weapons (Kania 2018a). This is a reasonable claim: a continuation of propaganda may be part of the explanation. For instance, China was the first nuclear power to pledge ‘no first use’ of nuclear weapons (so far only India has a similar pledge; other countries, including the US and the UK, have pledged to use nuclear weapons only defensively). But rather than offering a genuine commitment to NFU, this pledge was meant as an internal and external propaganda tool, which could be circumvented by semantics if needed (Schneider 2009).

Taken together, China’s focus on military AI can be considered as a continuation of a longer-term strategy, which privileges developing (with the threat of deploying) technology to gain a military advantage. There remains a conscious recognition, by several actors in China, that developing AI presents an especially fraught risk of igniting an arms race or causing unintentional escalation due to the autonomy of these technologies (Taddeo and Floridi 2018; Allen 2019). But at the political level, efforts to curtail the use of military AI internationally may also be seen as part of a propaganda strategy.

3.2 Economic development

Economic development is the second strategic opportunity explicitly mentioned in the AIDP. It is stated that AI will be the driving force behind a new round of industrial transformation, which will ‘inject new kinetic energy into China’s economic growth’ (AIDP 2017). The reconstruction of economic activity is targeted in all sectors, with manufacturing, agriculture, logistics, and finance being the examples promoted in the AIDP.

China’s rapid growth has frequently been referred to as an ‘economic miracle’, due to the country’s shift from having a slow-growth economy to enjoying some of the world’s highest growth rates for almost three decades (Ray 2002; Naughton and Tsai 2015). A number of factors facilitated this economic growth, of which the demographic dividend is one. A large workforce, in combination with a small dependent population, fostered high levels of savings and heavy investment (Cai and Lu 2013). Structural changes, including a conscious shift from a predominantly agricultural to a manufacturing economy, and the opening up of markets, are additional, critical factors. By 2012, China’s labour force growth had dropped to around zero, and its shift from an agricultural to a manufacturing economy had largely matured. These trends have led Chinese policymakers to the realisation that an alternative development model is necessary for maintaining high rates of growth. This model rests on a shift from heavy investment in industry to growth stimulated by an innovative society (Naughton and Tsai 2015). Recently, science and technology have been put forward as a crucial means for achieving this type of innovative growth (Zhang 2018).

Some commentators have argued that maintaining these high levels of growth is particularly important for China because of an implicit bargain in which citizens trade political freedoms for economic growth and embourgeoisement (Balding 2019). Research has highlighted that support for the party and a relatively lacklustre desire for democracy stem from satisfaction with employment and material aspects of life, particularly within the middle classes (Chen 2013). Slowing economic growth would likely sow dissatisfaction within the populace and make inherent features within the Chinese political system, such as corruption, less tolerable (Diamond 2003; Pei 2015). A lack of a democratic outlet for this frustration could lower the overall support that the government currently receives. Some maintain that this creates a ‘democratise or die’ dynamic (Huang 2013); however, democratisation may be unfeasible, given China’s political control (Dickson 2003; Chin 2018).

Against this backdrop, a report by PwC suggested that China is the country with the most to gain from AI, with a boost in GDP of up to 26% by 2030 (“Sizing the Prize” 2017). Estimates also suggest that AI could increase employment by 12% over the next two decades (“Net Impact of AI on Jobs in China?” 2018). Because of these potential benefits, President Xi has frequently spoken of the centrality of AI to the country’s overall economic development (Hickert and Ding 2018; Kania 2018b). China has been pursuing the potential economic benefits of AI concretely and proactively for some time. For example, annual installations of industrial robots have increased by 500% since 2012. This rate is staggering, especially when compared with a rate of just over 100% in Europe, and China now installs more than twice as many robots each year as Europe (Shoham et al. 2018).

AI can be a double-edged sword, because the benefits and improvements brought about by AI come with the risk, amongst others, of labour market disruptions. This is a concern explicitly stated in the AIDP. Although the aforementioned PwC report predicts that automation will increase the net number of jobs in China, disruption will likely be unevenly spread (“Net Impact of AI on Jobs in China?” 2018). Smarter automation will most immediately affect low- and medium-skilled jobs while creating opportunities for higher-skilled technical roles (Barton et al. 2017). China has been active in its efforts to adapt to such AI-related risks, especially with an education overhaul promoted by the ‘National Medium- and Long-term Education Reform and Development Plan (2010–2020)’. This plan has the goal of supporting the skilled labour required in the information age (“Is China Ready for Intelligent Automation” 2018). In the same vein, China is addressing the shortage in AI skills specifically by offering higher education courses on the subject (Fang 2019). Accordingly, China seems to be preparing better than other middle-income countries to deal with the longer-term challenges of automation (“Who is Ready for Automation?” 2018).

Although these efforts will help to develop the skill set required in the medium and long term, they do little to ease the short-term structural changes. Estimates show that, by 2030, automation in manufacturing might have displaced a fifth of all jobs in the sector, equating to 100 million workers (“Is China Ready for Intelligent Automation” 2018). These changes are already underway, with robots having replaced up to 40% of workers in several companies in China’s export-manufacturing provinces of Zhejiang, Jiangsu and Guangdong (Yang and Liu 2018). In the southern city of Dongguan alone, reports suggest that 200,000 workers have been replaced with robots (“Is China Ready for Intelligent Automation” 2018). Combined with China’s low international ranking in workforce transition programmes for vocational training (“Is China Ready for Intelligent Automation” 2018), this suggests that the short-term consequence of an AI-led transformation is likely to be significant disruption to the workforce, potentially exacerbating China’s growing inequality (Barton et al. 2017).

3.3 Social governance

Social governance, or more literally in Chinese ‘social construction’,Footnote 7 is the third area in which AI is promoted as a strategic opportunity for China. Alongside an economic slowdown, China is facing emerging social challenges, hindering its pursuit of becoming a ‘moderately prosperous society’ (AIDP 2017). An ageing population and constraints on the environment and other resources are explicit examples provided in the AIDP of the societal problems that China is facing. Thus, the AIDP outlines the goal of using AI within a variety of public services to make the governance of social services more precise and, in doing so, mitigate these challenges and improve people’s lives.

China has experienced some of the most rapid structural changes of any country in the past 40 years. It has been shifting from a planned to a market economy and from a rural to an urban society (Naughton 2007). These changes have helped facilitate economic development, but also introduced a number of social issues. One of the most pressing social challenges China is facing is the absence of a well-established welfare system (Wong 2005). Under the planned economy, workers were guaranteed cradle-to-grave benefits, including employment security and welfare benefits, which were provided through local state enterprises or rural collectives (Selden and You 1997). China’s move towards a socialist market economy since the 1990s has accelerated a shift of these provisions from enterprises and local collectives to state and societal agencies (Ringen and Ngok 2017). In practice, China has struggled to develop mature pension and health insurance programmes, creating gaps in the social safety net (Naughton 2007). Although several initiatives have been introduced to alleviate these issues (Li et al. 2013), the country has found it difficult to implement them (Ringen and Ngok 2017).

The serious environmental degradation that has taken place in the course of China’s rapid development is another element of concern. For most of China’s development period, the focus has been on economic growth, with little or no incentive provided for environmental protection (Rozelle et al. 1997). As a result, significant negative externalities and several human-induced environmental disasters have occurred, with detrimental consequences for society. One of the most notable is very poor air quality, which has been linked to an increased risk of illness and is now the fourth leading cause of death in China (Delang 2016). In parallel, 40% of China’s rivers are polluted by industry, causing 60,000 premature deaths per year (Economy 2013). Environmental degradation of this magnitude damages the health of the population, lowers the quality of life, and places further strain on existing welfare infrastructure.

The centrality of these concerns was visible at the 19th National Party Congress in 2017, where President Xi declared that the ‘principal contradiction’ in China had changed. Whereas the previous ‘contradiction’ focused on ‘the ever-growing material and cultural needs of the people and backward social production,’ Xi stated ‘what we now face is the contradiction between unbalanced and inadequate development and the people’s ever-growing needs for a better life’ (Meng 2017). Coming after years of focus on untempered economic growth, President Xi’s remarks signal a broader shift in China’s approach to dealing with the consequences of economic liberalisation.

These statements are mirrored in several government plans, including the State Council Initiative, ‘Healthy China 2030’, which seeks to overhaul the healthcare system. Similar trends can be seen in China’s efforts to clean up its environment, with a new 3-year plan building on previous relevant initiatives (Leng 2018). China has recently focused on AI as a way of overcoming these problems and improving the welfare of citizens. It has been pointed out that China’s major development strategies rely on solutions driven by big data (Heilmann 2017). For example, ‘Healthy China 2030’ explicitly stresses the importance of technology in achieving China’s healthcare reform strategy, and emphasises a switch from treatment to prevention, with AI development as a means to achieve the goal (Ho 2018). This approach also shapes environmental protection, where President Xi has been promoting ‘digital environmental protection’ (数字环保) (Kostka and Zhang 2018). Within this, AI is being used to predict and mitigate air pollution levels (Knight 2015), and to improve waste management and sorting (“AI-powered waste management underway in China” 2019).

The administration of justice is another area where the Chinese government has been advancing the use of AI to improve social governance. Under Xi Jinping, there has been an explicit aim to professionalise the legal system, which suffers from a lack of transparency, issues of local protectionism, and interference in court cases by local officials (Finder 2015). A variety of reforms have been introduced in an attempt to curtail these practices, including transferring responsibility for the management of local courts from local to provincial governments, creating a database where judges can report attempts at interference by local politicians, and establishing a case registration system that makes it more difficult for courts to reject complex or contentious cases (Li 2016).

Of particular interest, when focusing on AI, is the ‘Several Opinions of the Supreme People’s Court on Improving the Judicial Accountability System’ (2015), which requires judges to reference similar cases in their judicial reasoning. Furthermore, it stipulates that decisions conflicting with previous similar cases should trigger a supervision mechanism involving more senior judges. To help judges minimise inconsistencies, efforts have been made to introduce AI technologies that facilitate making ‘similar judgements in similar cases’ (Yu and Du 2019). Two overarching types of system have emerged. The first is a ‘similar cases pushing system’, in which AI is used to identify judgements from similar cases and present them to judges for reference. This type of system has been introduced by, amongst others, Hainan’s High People’s Court, which has also encouraged the use of AI systems in lower-level courts across the province (Yuan 2019). The second type uses AI techniques to provide an ‘abnormal judgement warning’ that detects whether a judgement departs from those made in similar cases. If an inconsistent judgement does occur, the system alerts the judge’s superiors, prompting an intervention. Despite the potential that AI-assisted sentencing holds for reducing the workload of judges and lessening corruption, feedback from those who have used the systems suggests that the technology is currently too imprecise (Yu and Du 2019). Some legal theorists have gone further in their criticism, highlighting the inhumane effects of using technology in sentencing and the detriment that it could cause for ‘legal hermeneutics, legal reasoning techniques, professional training and the ethical personality of the adjudicator’ (Ji 2013, p. 205).
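The logic of the two system types can be illustrated with a deliberately simplified sketch. It is not based on any documented Chinese court system: the encoding of each case as a numeric feature vector, the use of cosine similarity, the number of retrieved cases `k`, the relative `tolerance`, and all function and field names are hypothetical assumptions made purely for illustration. The sketch retrieves the most similar past cases (the ‘similar cases pushing’ step) and flags a proposed sentence that departs from their average by more than the tolerance (the ‘abnormal judgement warning’ step).

```python
# Hypothetical sketch of the two judicial-assistance system types.
# Assumptions (not drawn from any deployed system): each case is encoded as a
# numeric feature vector, similarity is cosine similarity, and a judgement is
# flagged when it deviates from the mean sentence of the k most similar past
# cases by more than a relative tolerance.
import math
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    features: list[float]   # hypothetical encoding of the facts of a case
    sentence_months: float  # sentence handed down, in months


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def push_similar_cases(query: list[float], archive: list[Case], k: int = 3) -> list[Case]:
    """'Similar cases pushing': return the k past cases most similar to the query."""
    ranked = sorted(archive, key=lambda c: cosine(query, c.features), reverse=True)
    return ranked[:k]


def abnormal_judgement_warning(query: list[float], proposed_months: float,
                               archive: list[Case], k: int = 3,
                               tolerance: float = 0.5) -> bool:
    """'Abnormal judgement warning': True if the proposed sentence departs from
    the mean of the k most similar cases by more than tolerance * mean."""
    similar = push_similar_cases(query, archive, k)
    if not similar:
        raise ValueError("archive is empty")
    baseline = sum(c.sentence_months for c in similar) / len(similar)
    return abs(proposed_months - baseline) > tolerance * baseline


# Invented illustrative archive: three comparable cases and one unrelated case.
EXAMPLE_ARCHIVE = [
    Case("A", [1.0, 0.0, 0.0], 12),
    Case("B", [0.9, 0.1, 0.0], 14),
    Case("C", [0.8, 0.2, 0.0], 10),
    Case("D", [0.0, 0.0, 1.0], 60),
]
EXAMPLE_QUERY = [1.0, 0.05, 0.0]
```

With this invented data, the three comparable cases average 12 months, so a proposed 13-month sentence passes, whereas a 30-month sentence would trigger the warning. Even in this toy form, the outcome depends entirely on how cases are encoded and where the tolerance is set, which is one way of reading practitioners’ complaints that such systems are currently too imprecise.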

Looking forward, the focus on China’s use of AI in governance seems most likely to centre on the widely reported ‘Social Credit’ System, which is premised upon developing the tools required to address China’s pressing social problems (Chorzempa et al. 2018). To do this, the system broadly aims at increasing the state’s governance capacity, promoting the credibility of state institutions, and building a viable financial credit base (Chai 2018). Currently, ‘the’ Social Credit System is not one unified nationwide system but rather comprises national blacklists that collate data from different government agencies, individual social credit systems run by local governments, and private company initiatives (Liu 2019). These systems are fragmented and, in many cases, the local trials lack technical sophistication, with some versions relying on little more than pen and paper (Gan 2019). Nonetheless, the ambitious targets of the Social Credit System provide a compelling example of the government’s intent to rely on digital technology for social governance and also for more fine-grained regulation of the behaviour of its citizens.

3.4 Moral governance

Social governance/construction in China encompasses not just material and environmental features but also the behaviour of citizens. Scholars have argued that the disruption of the Maoist period followed by an ‘opening up’ has created a moral vacuum within China (Yan 2009; Lazarus 2016). These concerns are echoed by the Chinese public, with Ipsos Mori finding that concern over ‘moral decline’ in China was twice as high as the global average (Atkinson and Skinner 2019).Footnote 8 This has been recognised by the Chinese government, with high-level officials, including President Xi, putting forward the idea of a ‘minimum moral standard’ within society (He 2015). This goal is not limited to ensuring ‘good’ governance in the traditional sense; it extends to regulating the behaviour of citizens and enhancing their moral integrity, which is considered a task within the government’s remit (“Xi Jinping’s report at 19th CPC National Congress” 2017). In the government’s view, AI can be used to this end.

The AIDP highlights AI’s potential for understanding group cognition and psychology (2017). The intention to rely on AI for moral governance can also be seen in other policy documents, perhaps most clearly in the State Council’s ‘Outline for the Establishment of a Social Credit System’, released in 2014. This document underscored that the Social Credit System aimed to regulate not only the financial and corporate actions of businesses and citizens, but also the social behaviour of individuals. It outlines several social challenges that the plan seeks to alleviate, including tax evasion, food safety scares, and academic dishonesty (Chorzempa et al. 2018). As highlighted above, current efforts to implement these systems have been fragmented, yet a number of them already include moral elements, such as publicly shaming bad debtors (Hornby 2019).

Further concrete examples of how China has been utilising AI in social governance can be seen in the sphere of internal security and policing. China has been at the forefront of the development of smart cities, with approximately half of the world’s smart cities located within China. The majority of resources that have gone into developing these cities have focused on surveillance technologies, such as facial recognition and cloud computing for ordinary policing (Anderlini 2019). The use of advanced ‘counterterrorism’Footnote 9 surveillance programmes in the autonomous region of Xinjiang offers clearer and more problematic evidence of governmental efforts to use AI for internal surveillance. This technology is not limited to facial recognition, but also includes mobile phone applications to track the local Uyghur population, who are portrayed by the government as potential dissidents or terrorists (“China’s Algorithms of Repression” 2019). When government statements are read in parallel with these developments, it seems likely that some form of the Social Credit System(s) will play a central role in the future of China’s AI-enabled governance (Ding 2018), putting the rights of citizens under sharp devaluative pressure. For example, most citizens generate large data footprints: nearly all day-to-day transactions in cities are cashless and conducted through mobile apps (Morris 2019), and internet providers enforce ‘real-name registration’, which links all online activity to the individual (Sonnad 2017). Together, these practices enable the government to identify, and access the digital profile of, every citizen who uses mobile internet services.

The significant and likely risks related to implementing AI for governance stem from the intertwining of the material aspects of social governance with surveillance and moral control. Articles in the Western media often emphasise the problematic nature of ‘the’ Social Credit System, due to the authoritarian undertones of this pervasive control (Clover 2016; Botsman 2017). Examples of public dissatisfaction with specific features of locally run social credit systems appear to support this viewpoint (Zhang and Han 2019). In some instances, public backlash has even led to revisions in the rating criteria of local social credit systems. In contrast, some commentators have emphasised that, domestically, a national social credit system may be positively received as a response to the perception of moral decline in China, and a concomitant desire to build greater trust; indeed, it has been suggested that the system may be better named the ‘Social Trust’ system (Song 2018; Kobie 2019). Among the punishments distributed by social credit systems, some measures, including blacklisting citizens from travelling due to poor behaviour on trains, have received a positive response on Chinese social media. Government censorship and a chilling effect could account for this support, but there is currently no evidence of censors specifically targeting posts concerning social credit systems (Koetse 2018).

Efforts have also been made to understand public opinion on the systems as a whole, rather than just specific controversies or cases of blacklisting. A nationwide survey by a Western academic on China’s social credit systems found high levels of approval within the population (Kostka 2019). That said, problems with the methodology of the paper, in particular with the translation of ‘social credit system’, indicate that the results may be better read as reflecting a general lack of awareness than a widespread sentiment of support (‘Beyond Black Mirror’ 2019). These points indicate that it is too early to measure public sentiment in China surrounding the development of the Social Credit System(s).

It is important to recognise that, despite the relative mundanity of current applications of the Social Credit System (Daum 2019; Lewis 2019), substantial ethical risks and challenges remain for the future in relation to the criteria for inclusion on a blacklist or receiving a low score, and the exclusion that this could cause. In terms of the former, national blacklists are composed of those who have broken existing laws and regulations, with a clear rationale for inclusion provided (Engelmann et al. 2019). However, the legal documents on which these lists are built are often ill-defined and function within a legal system that is subordinate to the Chinese Communist Party (Whiting 2017). As a result, legislation such as the law prohibiting the spread of information that seriously disturbs the social order could be used to punish individuals for politically undesirable actions, including free speech (Arsène 2019). Still, it is more appropriate to consider this a problem of the political-legal structure than of a social credit system per se. The fundamental ethical issue of an unacceptable approach to surveillance remains unaddressed.

In relation to local score-based systems that do not solely rely on illegality, assessment criteria can be even vaguer. For instance, social credit scores in Fuzhou account for ‘employment strength’, which is based on the loosely defined ‘hard-working/conscientious and meticulous’ (Lewis 2019). This is ethically problematic because of the opaque and arbitrary inclusion standards that are introduced for granting people certain benefits. In tandem with inclusion is the exclusion that punishments from these systems can cause. At present, most social credit systems are controlled by separate entities and do not connect with each other (Liu 2019), limiting excessive punishment and social exclusion. Nonetheless, memorandums of understanding are emerging between social credit systems and private companies for excluding those blacklisted from activities such as flying (Arsène 2019). As a result, it is important to emphasise that whilst the Social Credit System(s) is still evolving, its inclusion criteria and the exclusion it may cause raise serious ethical questions.

4 The debate on digital ethics and AI in China

Alongside establishing material goals, the AIDP outlines a specific desire for China to become a world leader in defining ethical norms and standards for AI. Following the release of the AIDP, the government, public bodies, and industry within China were relatively slow to develop AI ethics frameworks (Lee 2018; Hickert and Ding 2018). However, there has been a recent surge in attempts to define ethical principles. In March 2019, China’s Ministry of Science and Technology established the National New Generation Artificial Intelligence Governance Expert Committee. In June 2019, this body released eight principles for the governance of AI. The principles emphasised that, above all else, AI development should begin from enhancing the common well-being of humanity. Respect for human rights, privacy and fairness were also underscored within the principles. Finally, they highlighted the importance of transparency, responsibility, collaboration, and agility to deal with new and emerging risks (Laskai and Webster 2019).

In line with this publication, the Standardization Administration of the People’s Republic of China, the national-level body responsible for developing technical standards, released a white paper on AI standards. The paper contains a discussion of the safety and ethical issues related to the technology (Ding and Triolo 2018). Three key principles for setting the ethical requirements of AI technologies are outlined. First, the principle of human interest states that the ultimate goal of AI is to benefit human welfare. Second, the principle of liability emphasises the need to establish accountability as a requirement for both the development and the deployment of AI systems and solutions. Subsumed within this principle is transparency, which supports the requirement of understanding what the operating principles of an AI system are. Third, the principle of consistency of [sic] rights and responsibilities emphasises that, on the one hand, data should be properly recorded and oversight present but, on the other hand, that commercial entities should be able to protect their intellectual property (Ding and Triolo 2018).

Government-affiliated bodies and private companies have also developed their own AI ethics principles. For example, the Beijing Academy of Artificial Intelligence, a research and development body including China’s leading companies and Beijing universities, was established in November 2018 (Knight 2019). This body then released the ‘Beijing AI Principles’ to be followed for the research and development, use, and governance of AI (“Beijing AI Principles” 2019). Similar to the principles put forward by the AIDP Expert Committee, the Beijing Principles focus on doing good for humanity, using AI ‘properly’, and having the foresight to predict and adapt to future threats. In the private sector, one of the most high-profile ethical frameworks has come from the CEO of Tencent, Pony Ma. This framework emphasises the importance of AI being available, reliable, comprehensible, and controllable (Si 2019). Finally, the Chinese Association for Artificial Intelligence (CAAI)Footnote 10 has yet to establish ethical principles but did form an AI ethics committee in mid-2018 with this purpose in mind (“AI association to draft ethics guidelines” 2019).

The aforementioned principles bear some similarity to those supported in the Global North (Floridi and Cowls 2019), yet institutional and cultural differences mean that the outcome is likely to be significantly different. China’s AI ethics needs to be understood in terms of the country’s culture, ideology, and public opinion (Triolo and Webster 2017). Although a full comparative analysis is beyond the scope of this article, it might be anticipated, for example, that the principles which emerge from China place a greater emphasis on social responsibility and group and community relations, with relatively less focus on individualistic rights, thus echoing earlier discussions about Confucian ethics on social media (Wong 2013).

In the following sections, we shall focus on the debate about AI ethics as it is emerging in connection with privacy and medical ethics because these are two of the most mature areas where one may grasp a more general sense of the current ‘Chinese approach’ to digital ethics. The analysis of the two areas is not meant to provide an exhaustive map of all the debates about ethical concerns over AI in China. Instead, it may serve to highlight some of the contentious issues that are emerging, and inform a wider understanding of the type of boundaries which may be drawn in China when a normative agenda in the country is set.

4.1 Privacy

All of the sets of principles for ethical AI outlined above mention the importance of protecting privacy. However, there is a contentious debate within China over exactly what types of data should be protected. China has historically had weak data protection regulations, which have allowed public and private actors to collect and share enormous amounts of personal information, and little protection for individual privacy. In 2018, Robin Li, co-founder of Baidu, stated that ‘the Chinese people are more open or less sensitive about the privacy issue. If they are able to trade privacy for convenience, safety and efficiency, in a lot of cases, they are willing to do that’ (Liang 2018). This viewpoint, which is compatible with the apparently not overly negative responses to the Social Credit System, has led some Western commentators to misconstrue public perceptions of privacy in their evaluations of China’s AI strategy (Webb 2019). However, Li’s understanding of privacy is not widely shared, and his remarks sparked a fierce backlash on Chinese social media (Liang 2018). This concern for privacy is reflected in survey data from the Internet Society of China, with 54% of respondents stating that they considered the problem of personal data breaches to be ‘severe’ (Sun 2018). Given the extent of data misuse, this figure is unsurprising: a China Consumers Association survey, for example, revealed that 85% of people had experienced a data leak of some kind (Yang 2018). Thus, contrary to what may be inferred from some high-profile statements, there is a general sentiment of concern within the Chinese public over the misuse of personal information.

As a response to these serious concerns, China has been implementing privacy protection measures, leading one commentator to refer to the country as ‘Asia’s surprise leader on data protection’ (Lucas 2018). At the heart of this effort has been the Personal Information Security Specification (the Specification), a privacy standard released in May 2018. This standard was meant to elaborate on the broader privacy rules established in the 2017 Cybersecurity Law. In particular, it focused on both protecting personal data and ensuring that people are empowered to control their own information (Hong 2018). A number of its provisions were particularly all-encompassing, including a broad definition of sensitive personal information that covers, for example, information whose disclosure could cause reputational damage. The language used in the standard led one commentator to argue that some of the provisions were more onerous than those of the European Union’s (EU) General Data Protection Regulation (GDPR) (Sacks 2018).

Despite these provisions, the nature of the standard means that it is not directly comparable to the GDPR. On the one hand, rather than being a piece of formally enforceable legislation, the Specification is merely a ‘voluntary’ national standard created by the China National Information Security Standardization Technical Committee (TC260). It is on this basis that one of the drafters stated that the standard was not comparable to the GDPR, as it is only meant as a guiding accompaniment to previous data protection legislation, such as the 2017 Cybersecurity Law (Hong 2018). On the other hand, a tension remains that is difficult to resolve: although standards are formally voluntary, in China they carry substantive clout for enforcing government policy aims, often through certification schemes (Sacks and Li 2018). In June 2018, a certification standard for privacy measures was established, with companies such as Alipay and Tencent Cloud receiving certification (Zhang and Yin 2019). Further, the Specification spells out how the enforceable Cybersecurity Law is to be applied, and both Baidu and Alipay were forced to overhaul their data policies for not ‘complying with the spirit of the Personal Information Security Standard’ (Yang 2019).

In reality, the weakness of China’s privacy legislation stems less from its ‘non-legally binding’ status than from its many loopholes, the weakness of China’s judicial system, and the power of the government, which is often the final authority and is not held accountable through democratic mechanisms. In particular, significant and problematic exemptions exist for the collection and use of data, including when related to security, health, or the vague and flexibly interpretable ‘significant public interests’. It is these large loopholes that are most revealing of China’s data policy. Some broad consumer protections may be present, but they do not extend to the government (Sacks and Laskai 2019). Thus, the strength of privacy protection is likely to be determined by the government’s decisions surrounding data collection and usage, rather than by legal and practical constraints. This is alarming.

It is important to recognise that the GDPR contains a similar ‘public interest’ basis for lawfully processing personal data where consent or anonymisation is impractical, and that these conditions are poorly defined in legislation and often neglected in practice (Stevens 2017). But the crucial and stark difference between the Chinese and EU examples concerns the legal systems underpinning the two approaches. The EU’s judicial branch has substantive influence, including the capacity to interpret legislation and to use judicial review mechanisms to determine the permissibility of legislation more broadly.Footnote 11 In contrast, under the Chinese legal system, the judiciary is subject to supervision and interference from the legislature, which has de jure legislative supremacy (Ji 2014); this gives de facto control to the Party (Horsley 2019). Thus, the strength of privacy protections in China may be, and often is, determined by the government’s decisions surrounding data collection and usage rather than by legal and practical constraints. As has been remarked, ‘The function of law in governing society has been acknowledged since 2002, but it has not been regarded as essential for the CCP. Rather, morality and public opinion concurrently serve as two alternatives to the law for the purpose of governance. As a result, administrative agencies may ignore the law on the basis of party policy, morality, public opinion, or other political considerations’ (Wang and Liu 2019, p. 6).

Relating this back to AI policy, China has benefited from the abundance of data that historically lax privacy protections have facilitated (Ding 2018). On the surface, China’s privacy legislation seems to contradict other development commitments, such as the Social Credit System, which requires extensive personal data. This situation creates a dual ecosystem whereby the government is increasingly willing to collect masses of data, respecting no privacy, while simultaneously admonishing tech companies for the measures they employ (Sacks and Laskai 2019). Recall that private companies, such as those in the AI National Team, are relied upon for governance at both national and local levels, and therefore may receive tacit endorsement rather than admonishment where the government’s interests are directly served. As a result, the ‘privacy strategy’ within China appears to aim to protect the privacy of a specific type of consumer, rather than that of citizens as a whole, allowing the government to collect personal data wherever and whenever it may be merely useful (not even strictly necessary) for its policies. From an internal perspective, one may remark that, when viewed against a backdrop of high levels of trust in the government and frequent private-sector leaks and misuses, this trade-off seems more intelligible to the Chinese population. The Specification has substantial scope for revising this duality, with a number of loopholes closed since its initial release (Zhang and Yin 2019), but it seems unlikely that privacy protections from government intrusion will be codified in the near future. The ethical problem remains unresolved.

4.2 Medical ethics

Medical ethics is another significant area impacted by the Chinese approach to AI ethics. China’s National Health Guiding Principles have been central to the strategic development and governance of its national healthcare system for the past 60 years (Zhang and Liang 2018). They have been rewritten several times, as the healthcare system has transitioned from being a single-tier system, prior to 1978, to a two-tier system that was reinforced by healthcare reform in 2009 (Wu and Mao 2017). The last rewrite of the Guiding Principles was in 1996, and the following principles still stand (Zhang and Liang 2018):

(a) People in rural areas are the top priority

(b) Disease prevention must be placed first

(c) Chinese traditional medicine and Western medicine must work together

(d) Health affairs must depend on science and education

(e) Society as a whole should be mobilised to participate in health affairs, thus contributing to the people’s health and the country’s overall development.

All five principles are relevant for understanding China’s healthcare system as a whole but, from the perspective of analysing the ethics of China’s use of AI in the medical domain, principles (a), (b), and (e) are the most important. They highlight that—in contrast to the West, where electronic healthcare data are predominantly focused on individual health, and thus AI techniques are considered crucial to unlock ‘personalised medicine’ (Nittas et al. 2018)—in China, healthcare is predominantly focused on the health of the population. In this context, the ultimate ambition of AI is to liberate data for public health purposesFootnote 12 (Li et al. 2019a, b). This is evident from the AIDP, which outlines the ambition to use AI to ‘strengthen epidemic intelligence monitoring, prevention and control,’ and to ‘achieve breakthroughs in big data analysis, Internet of Things, and other key technologies’ for the purpose of strengthening community intelligent health management. The same aspect is even clearer in the State Council’s 2016 official notice on the development and use of big data in the healthcare sector, which explicitly states that health and medical big data sets are a national resource and that their development should be seen as a national priority to improve the nation’s health (Zhang et al. 2018).Footnote 13

From the perspective of ethical analysis, the promotion of healthcare data as a public good throughout public policy, including in documents such as the Measures on Population Health Information and the Guiding Opinions on Promoting and Regulating the Application of Big Medical and Health Data (Chen and Song 2018), is crucial. This approach, combined with lax rules about data sharing within China (Liao 2019; Simonite 2019) and the encouragement of the open sharing of public data between government bodies (“Outline for the Promotion of Big Data Development” 2015), promotes the collection and aggregation of health data without the need for individual consent, by positioning group beneficence above individual autonomy. This is best illustrated with an example. As part of China’s ‘Made in China 2025’ plan, 130 companies, including ‘WeDoctor’ (backed by Tencent, one of China’s AI national champions), signed co-operation agreements with local governments to provide medical check-ups, consisting of blood pressure, electrocardiogram (ECG), urine and blood tests, free of charge to rural citizens (Hawkins 2019). The data generated by these tests were automatically (that is, without the individual’s consent) linked to a personal identification number and then uploaded to the WeDoctor cloud, where they were used to train WeDoctor’s AI products. These products include the ‘auxiliary treatment system for general practice’, which is used by village doctors to provide suggested diagnoses and treatments from a database of over 5000 symptoms and 2000 diseases. Arguably, the sensitive nature of the data can make ‘companies—and regulators—wary of overseas listings, which would entail greater disclosure and scrutiny’ (Lucas 2019). Although this and other similar practices do involve anonymisation, they stand in stark contrast with the European and US approaches to the use of medical data, which prioritise individual autonomy and privacy rather than social welfare.

A fair balance between individual and societal needs is essential for an ethical approach to personal data, but there is an asymmetry: an excessively individualistic approach may be easily rectified with the consent of the individuals concerned, whereas a purely societal approach remains unethical insofar as it overrides individual rights too easily and cannot itself be easily rectified.

Societal welfare may end up justifying the sacrifice of individual rights as a means to an end. This remains unethical. However, how this is perceived within China remains a more open question. One needs to recall that China has very poor primary care provision (Wu and Mao 2017); that it achieved 95% health coverage (via a national insurance scheme) only in 2015 (Zhang et al. 2018); that it has approximately 1.8 doctors per 1000 citizens, compared with the OECD average of 3.4 (Liao 2019); and that its society is founded on Confucian values that promote group-level equality. It is within this context that the ethical principle of the ‘duty of easy rescue’ may be interpreted more insightfully. This principle prescribes that, if an action can benefit others and poses little threat to the individual, then the ethical option is to perform the action (Mann et al. 2016). In this case, from a Chinese perspective, one may argue that sharing healthcare data poses little immediate threat to the individual, especially as Article 6 of the Regulations on the Management of Medical Records of Medical Institutions, Article 8 of the Management Regulations on Application of Electronic Medical Records, Article 6 of the Measures for the Management of Health Information, the Cybersecurity Law of the People’s Republic of China, and the new Personal Information Security Specification all provide specific and detailed instructions to ensure data security and confidentiality (Wang 2019). At the same time, such sharing could deliver significant benefits to the wider population.

The previous ‘interpretation from within’ does not imply that China’s approach to the use of AI in healthcare is acceptable or raises no ethical concerns. The opposite is actually true. In particular, the Chinese approach is undermined by at least three main risks.

First, there is a risk of creating a market for human care. China’s two-tiered medical system provides state-insured care for all, with the option for individuals to pay privately for quicker or higher-quality treatment. This is in keeping with Confucian thought, which encourages the use of private resources to benefit oneself and one’s family (Wu and Mao 2017). Ping An [sic] Good Doctor has introduced unmanned ‘1-min clinics’ across China (as many as 1,000 are now in place), where patients can walk in, provide their symptoms and medical history, and receive an automated diagnosis and treatment plan, which is followed up by human clinical advice only for new customers. It is therefore entirely possible to foresee a scenario in which only those who are able to pay will have access to human clinicians. In a field where emotional care and involvement in decision making are often as important as the logical deduction of a ‘diagnosis’, this could have a significantly negative impact on the level and quality of care accessed across the population and on the integrity of the self (Andorno 2004; Pasquale 2015),Footnote 14 at least for those who are unable to afford human care.

Second, in the context of a population that is still rapidly expanding yet also ageing, China is investing significantly in the social informatisation of healthcare. Since at least 2015, it has been linking emotional and behavioural data extrapolated from social media, as well as daily healthcare data (generated from ingestibles, implantables, wearables, carebots, and Internet of Things devices), to Electronic Health Records (Li et al. 2019a, b), with the goal of enabling community care of the elderly. This further adds to China’s culture of state-run mass surveillance. In the age of the Social Credit System, it also suggests that the same technologies designed to enable people to remain independent in the community as they age may one day be used as a means of social control (The Medical Futurist 2019), to reduce the incidence of ‘social diseases’ such as obesity and type II diabetes (Hawkins 2019), under the guise of ‘improving people’s lives’ through the use of AI to improve the governance of social services (as stated in the AIDP).

The third ethical risk is associated with CRISPR gene modification and AI. CRISPR is a controversial gene-modification technique that can be used to alter genes in living organisms, for example to cure or prevent genetic diseases. It is closely related to AI, as machine learning techniques can be used to identify which gene or genes need to be altered with the CRISPR method. The controversies, and potentially significant ethical issues, associated with research in this area stem from the fact that it is not always possible to tell where the line lies between unmet clinical need and human enhancement or genetic control (Cohen 2019). This became clear in November 2018, when biophysics researcher He Jiankui revealed that he had successfully genetically modified babies using the CRISPR method to limit their chances of ever contracting HIV (Cohen 2019). The announcement was met with international outcry, and He’s experiment was condemned by the Chinese government at the time (Belluz 2019). However, the drive to be seen as a world leader in medical care (Cheng 2018), combined with the promise that gene editing offers for the treatment of diseases, suggests that a different response may be possible in the future (Cyranoski 2019; “China opens a Pandora’s Box”, 2018). Such a change in government policy is especially likely as global competition in this field heats up. The US has announced that it is enrolling patients in a trial to cure an inherited form of blindness (Marchione 2019), and the UK has launched the Accelerating Detection of Disease challenge to create a five-million-patient cohort whose data will be used to develop new AI approaches to early diagnosis and biomarker discovery (UK Research and Innovation 2019). These announcements create strong incentives for researchers in China to push regulatory boundaries to achieve quick successes (Tatlow 2015; Lei et al. 2019).
China has filed more patents for gene editing in animals than any other country (Martin-Laffon et al. 2019). Close monitoring will be essential if further ethical misdemeanours are to be avoided.

5 Conclusion

In this article, we analysed the nature of AI policy within China and the context in which it has emerged, by mapping the major national-level policy initiatives that express the intention to utilise AI. We identified three areas of particular relevance: international competitiveness, economic growth, and social governance (construction). The development and deployment of AI in each of these areas have implications for China and for the international community. For example, although the ‘trump-card’ policy of seeking a military advantage may not be new, its application to AI technologies risks igniting an arms race and undermining international stability (Taddeo and Floridi 2018). Efforts to counteract this trend seem largely hollow. Our analysis indicates that China has some of the greatest opportunities for economic benefit in areas such as automation, and that the country is pushing forward substantially in AI-related areas. Nonetheless, efforts to cushion the disruptions that emerge from using AI in industry are currently lacking. Thus, AI can help foster increased productivity and high levels of growth, but its use is likely to intensify the inequalities present within society and even to decrease support for the government and its policies. The AIDP also promotes AI as a way to help deal with some of the major social problems, ranging from pollution to standards of living. However, positive impacts in this area seem to come with increased control over individuals’ behaviour, with governance extending into the realm of moral behaviour, and with further erosion of privacy.

Ethics also plays a central role in the Chinese policy effort on AI. The AIDP outlines a clear intention to define ethical norms and standards, yet efforts to do so are at a fledgling stage, broadly limited to high-level principles that lack implementation. Analyses of existing Chinese approaches and emerging debates in the areas of privacy and medical ethics provide an insight into the types of frameworks that may emerge. With respect to privacy, recently introduced protections may seem robust on the surface, with definitions of sensitive personal information even broader than that used within the GDPR. However, a closer look exposes the many loopholes and exceptions that enable the government (and companies implicitly endorsed by the government) to bypass privacy protection, as well as fundamental issues concerning the lack of accountability and the government’s unrestrained decisional power over mass surveillance.

In the same vein, when focusing on medical ethics, it is clear that, although China may agree with the West on bioethical principles, its focus on the health of the population, in contrast to the West’s focus on the health of the individual, may easily lead to unethical outcomes (the sacrifice imposed on one for the benefit of many) and is creating a number of risks as AI encroaches on the medical space. These risks are likely to evolve over time, but the most significant at present are unequal care between those who can afford a human clinician and those who cannot, the control of social diseases, and unethical medical research.

China is a central actor in the international debate on the development and governance of AI. It is important to understand China’s internal needs, ambitions in the international arena, and ethical concerns, all of which are shaping the development of China’s AI policies. It is also important to understand all this not just externally, from a Western perspective, but also internally, from a Chinese perspective. However, some ethical safeguards, constraints and desiderata are universal and are universally accepted and cherished, such as the nature and scope of human rights.Footnote 15 They enable one to evaluate, after having understood, China’s approach to the development of AI. This is why in this article we have sought to contribute to a more comprehensive and nuanced analysis of the structural, cultural and political factors that ground China’s stance on AI, as well as an indication of its possible trajectory, while also highlighting where ethical problems remain, arise, or are likely to be exacerbated. These problems should be addressed as early as the context allows.

Notes

In the rest of this article, we shall use ‘China’ or ‘Chinese’ to refer to the political, regulatory, and governance approach decided by the Chinese national government concerning the development and use of AI capabilities.

It should be noted that, although MIST has been tasked with coordinating the AIDP, it was the Ministry of Industry and Information Technology (MIIT) that released the guidance for implementing the first step of the AIDP.

To accompany the three steps outlined earlier, the Ministry of Industry and Information Technology (MIIT) provides documents to flesh out these aims. The first of these, ‘Three-Year Action Plan for Promoting Development of a New Generation Artificial Intelligence Industry (2018–2020)’, has already been released.

This term refers to ‘an actor, which lags behind its competitors in terms of development, coming up with a radical innovation that will allow it to overtake its rivals’ (Brezis et al. 1993).

The Chinese text (社会建设) directly translates to ‘society/community’ and ‘build/construction’.

It is worth highlighting, however, that Chinese respondents ranked first, at more than double the world average, when asked “whether the country is going in the right direction”, with 94% of the respondents in agreement.

The word ‘counterterrorism’ started to be used after 9/11, with the phrase ‘cultural integration’ favoured before this (“Devastating Blows” 2005).

The Chinese Association for Artificial Intelligence (CAAI) is the only state-level science and technology organization in the field of artificial intelligence under the Ministry of Civil Affairs.

As a practical example of this, the Court of Justice of the European Union gave a judgment in Rīgas Case (2017) that has been used to define what is meant by ‘legitimate interest’.

This is not to imply that the West is not interested in using AI for population health management purposes, or that China is not interested in using AI for personalised health purposes. China is, for example, also developing an integrated data platform for research into precision medicine (Zhang et al. 2018). We simply mean to highlight that the order of priority between these two goals seems to differ.

The challenges section outlines some concrete benefits of implementing AI, illustrating some perceived gains to China. A separate (though more technological than ethical) point substantiated by the article is that there is a lot of medical data which could potentially be beneficial, but the data are spread out among hospitals, not used for research, and largely unstructured.

Note that the emphasis on individual wellbeing must also be contextualised culturally.

For arguments on the universality of human rights coming from within cultural perspectives, see Chan (1999) on Confucianism and human rights.

References

AI association to draft ethics guidelines (2019) Xinhua. https://www.xinhuanet.com/english/2019-01/09/c_137731216.htm. Accessed 1 May 2019

AI Policy-China (n.d.) Future of Life Institute. https://futureoflife.org/ai-policy-china/. Accessed 14 Mar 2019

AI-powered waste management underway in China (2019) People’s Daily Online. https://en.people.cn/n3/2019/0226/c98649-9549956.html. Accessed 13 Apr 2019

Allen G (2019) Understanding China’s AI Strategy. Center for a New American Security. https://www.cnas.org/publications/reports/understanding-chinas-ai-strategy. Accessed 13 Mar 2019

Anderlini J (2019) How China’s smart-city tech focuses on its own citizens. Financial Times. https://www.ft.com/content/46bc137a-5d27-11e9-840c-530737425559. Accessed 25 Apr 2019

Arsène S (2019) China’s Social Credit System: A Chimera with Real Claws. Asie.Visions, No. 110. https://www.ifri.org/sites/default/files/atoms/files/arsene_china_social_credit_system_2019.pdf. Accessed 12 Mar 2020

Atkinson S, Skinner G (2019) What worries the world? IPSOS Public Affairs, Paris

Austin G (2016) International legal norms in cyberspace: evolution of China’s National Security Motivations. In: Osula A, Rõigas H (eds) International cyber norms: legal, policy and industry perspectives. NATO CCD COE Publications, Tallinn

Balding C (2019) What’s causing China’s economic slowdown. Foreign Affairs. https://www.foreignaffairs.com/articles/china/2019-03-11/whats-causing-chinas-economic-slowdown. Accessed 20 Mar 2019

Barton D, Woetzel J, Seong J, Tian Q (2017) Artificial intelligence: implications for China. McKinsey Global Institute, San Francisco

Beijing AI Principles (2019) Beijing Academy of Artificial Intelligence. https://www.baai.ac.cn/blog/beijing-ai-principles. Accessed 5 June 2019

Belluz J (2019) Is the CRISPR baby controversy the start of a terrifying new chapter in gene editing? VOX. https://www.vox.com/science-and-health/2018/11/30/18119589/crispr-gene-editing-he-jiankui. Accessed 12 Apr 2019

Beyond Black Mirror-China’s Social Credit System (2019) Re:publica. https://19.re-publica.com/de/session/beyond-black-mirror-chinas-social-credit-system. Accessed 30 June 2019

Blasko DJ (2011) ‘Technology Determines Tactics’: the relationship between technology and doctrine in Chinese military thinking. J Strat Stud 34(3):355–381. https://doi.org/10.1080/01402390.2011.574979

Borowiec S (2016) Google’s AI machine v world champion of ‘Go’: everything you need to know. The Guardian. https://www.theguardian.com/technology/2016/mar/09/googles-ai-machine-v-world-champion-of-go-everything-you-need-to-know. Accessed 29 Mar 2019