- 1Unit for Visually Impaired People (U-VIP), Italian Institute of Technology, Genoa, Italy

- 2Istituto David Chiossone, Genoa, Italy

Spatial memory relies on encoding, storing, and retrieval of knowledge about objects’ positions in their surrounding environment. Blind people have to rely on sensory modalities other than vision to memorize items that are spatially displaced; however, to date, very little is known about the influence of early visual deprivation on a person’s ability to remember and process sound locations. To fill this gap, we tested sighted and congenitally blind adults and adolescents in an audio-spatial memory task inspired by the classical card game “Memory.” In this research, subjects (blind, n = 12; sighted, n = 12) had to find pairs among sounds (i.e., animal calls) displaced on an audio-tactile device composed of loudspeakers covered by tactile sensors. To accomplish this task, participants had to remember the spatialized sounds’ position and develop a proper mental spatial representation of their locations. The test was divided into two experimental conditions of increasing difficulty dependent on the number of sounds to be remembered (8 vs. 24). Results showed that sighted participants outperformed blind participants in both conditions. Findings were discussed considering the crucial role of visual experience in properly manipulating auditory spatial representations, particularly in relation to the ability to explore complex acoustic configurations.

Highlights

- A novel task, the Audio-Memory, presented in the form of a game to evaluate audio-spatial memory abilities in sighted and blind individuals.

- Sighted participants outperformed blind participants.

- Blind people encounter limitations ascribed to congenital blindness in processing auditory spatial representations and exploring complex acoustic configurations.

Introduction

In everyday life, abilities such as comprehension, reasoning, or learning are achieved through memory processes that allow the human brain to retain spatial and non-spatial information. The cognitive system devoted to the temporary storage and manipulation of information is the working memory system (WM) (Palmer, 2000). Historically, the most supported model of WM was proposed by Baddeley (1992), who divided WM into three separate subsystems: the central executive component (involved in high-order cognitive functions), the phonological loop and the visuo-spatial sketchpad (VSSP) that are used for the storage and processing of verbal and visuo-spatial information, respectively. Logie (1995) proposed an additional division of the VSSP into two subcomponents: the “inner scribe,” which refers to spatial components of information, and the “visual cache” for processing visual features of objects. One of the main functions ascribed to the VSSP of WM is mental imagery, a cognitive function that leads to internal representations (Cornoldi and Vecchi, 2003) of the objects composing the surrounding space. This function corresponds to a quasi-perceptual experience occurring in the absence of actual stimuli for the relevant perception (Kosslyn, 1980; Finke and Freyd, 1989; Rinck and Denis, 2004). Mental imagery is directly involved in cognitive functions such as learning (Yates, 1966), problem-solving, reasoning (Féry, 2003) and original and creative thought (LeBoutillier and Marks, 2003). The nature of these representations has long been the subject of research and debate. Kosslyn’s theory (Kosslyn, 1980), the most supported in this context, posits that mental images are “picture-like” representations, as confirmed by studies involving mental rotation and mental scanning of haptic spatial layouts (Farah et al., 1988; Vingerhoets et al., 2002). 
Although Kosslyn’s initial theory assumed that imagery processes partially overlap with perceptual mechanisms, evidence has shown that imagery cannot be equated with visual perception (Cornoldi and Vecchi, 2003). Visual mental images are not mere copies of visual input but rather the end product of a series of constructive processes based on memory retrieval mechanisms (Pietrini et al., 2004). Therefore, visuo-spatial mental imagery can originate from different sensory and perceptual inputs (e.g., visual, haptic, acoustic and verbal) (Cornoldi and Vecchi, 2003). Supporting this view, neuroimaging and electrophysiological studies generally indicate that the maintenance of information in spatial WM is not modality-specific and does not strictly depend on the encoding sensory modality (Lehnert and Zimmer, 2006, 2008).

Studies with congenitally blind individuals can provide fundamental insights into the role of vision in spatial memory abilities within the imagery debate. Visually impaired individuals can generate and manipulate mental images through long-term memory, haptic exploration, or verbal description (Zimler and Keenan, 1983; Lederman and Klatzky, 1990; Carreiras and Codina, 1992). Visual features such as dimension, shape, or texture can be perceived through touch and conveyed in internal images. Thus, the absence of sight does not impede an efficient visuospatial system functioning.

However, blind individuals show deficits in memory tasks requiring large sequences of mental manipulation of stored information, namely active memory tasks (Vecchi et al., 1995, 2004; Vecchi, 1998). When the experimental demand instead requires only maintaining small amounts of information (i.e., passive memory tasks), their abilities usually do not differ significantly from those of sighted people (Cornoldi and Vecchi, 2003; Setti et al., 2018, 2019). Nevertheless, blind individuals might also show limitations when only passive memory processes are involved, such as memorizing 2D spatial layouts (Vecchi, 1998). In fact, vision remains the preferred sensory modality that facilitates the accomplishment of visuospatial working memory tasks, especially when great demands on memory are required.

Blind individuals do show limitations when asked to continuously process the mental image of a previously learned spatial layout (Juurmaa and Lehtinen-Railo, 1994) or when performance can be enhanced through active manipulation of spatial information (Setti et al., 2018). Moreover, blind individuals encounter difficulties using perspective in mental representations (Arditi and Dacorogna, 1988) and in elaborating the third dimension when learning a haptic spatial layout (Vecchi, 1998). When increasing the number of items to be actively processed, thus increasing task demand, blind individuals demonstrate inferior performance compared to sighted individuals (Vecchi, 1998; Vanlierde and Wanet-Defalque, 2004; Cattaneo et al., 2008). Vision is the best sensory modality through which the brain processes several items simultaneously (De Beni and Cornoldi, 1988), and as such, lack of vision impacts this ability (Cornoldi and Vecchi, 2003).

There is evidence that vision plays a crucial role in guiding the maturation of spatial cognition (Hart and Moore, 2017). In early visual deprivation, the remaining intact sensory modalities are recruited to process spatial information. In tactile tasks such as object recognition and immediate hand-pointing localization, visually impaired individuals perform as well as or better than sighted controls (Morrongiello et al., 1994; Rossetti et al., 1996; Sunanto and Nakata, 1998). In the auditory processing of space, blind individuals exhibit enhanced abilities for azimuthal localization (King and Parsons, 1999; Roder et al., 1999; Gougoux et al., 2004; Doucet et al., 2005) and relative distance discrimination (Voss et al., 2004; Kolarik et al., 2013). At the same time, blind people show significant impairments for auditory spatial tasks such as vertical localization, absolute distance discrimination and spatial bisection (Zwiers et al., 2001; Lewald, 2002; Gori et al., 2014). Thus, early visual deprivation affects performance in tasks requiring a complex representation of space, and it has been argued that these deficits reflect a lack of visual calibration over touch and audition in processing spatial information (Gori et al., 2014). According to the cross-sensory calibration hypothesis, vision calibrates the other sensory modalities to process spatial information. In other words, the brain learns from vision how to evaluate objects’ orientation and proprioceptive position through alternate sensory modalities such as audition and touch (Cappagli et al., 2017; Cuturi et al., 2017). Another explanation for the decreased performance of visually impaired individuals in complex spatial tasks is that it originates from a compromised spatial memory. This hypothesis is supported by studies demonstrating that blind children have limitations in spatial recall (Millar, 1976) and the simultaneous processing of multiple representations (Puspitawati et al., 2014).
These results do not suggest that mnemonic skills in general are impaired in blind individuals. Blind individuals ably perform temporal tasks that require participants to understand and remember the temporal order of sound presentations (Vercillo et al., 2016). They show limitations only in tasks requiring complex spatial judgments (Bertonati et al., 2020) where the spatial presentation of stimuli position is fundamental to accomplish the task (Gori et al., 2014).

Lack of visual experience may also lead to the differential use of spatial reference frames to encode the information to be memorized. The two main frames of reference used to represent the location of entities in space are egocentric and allocentric (Ruggiero and Iachini, 2010). The first defines locations of items in the surrounding environment from the observer’s perspective and in relation to the observer’s position. Conversely, allocentric reference frames encode spatial information by considering external landmarks and spatial relationships among the items regardless of the observer’s position. In the context of spatial memory, previous research has demonstrated that spatial information is organized according to reference frames defined by the layout itself and not by egocentric experience (Mou and McNamara, 2002). Depending on the task to be accomplished, sighted individuals can rely on allocentric frames of reference to orient themselves or to represent and memorize spatial information (Ruggiero et al., 2012; Pasqualotto et al., 2013; Iachini et al., 2014). In contrast, early visual deprivation results in significant impairments in tasks that require an allocentric representation of space (Thinus-Blanc and Gaunet, 1997; Arnold et al., 2013; Gori et al., 2014).

Most research investigating spatial memory in vision loss has been carried out in the haptic domain (Vecchi et al., 1995; Bonino et al., 2008, 2015). Although haptic information plays a substantial role in processing objects proximal to the observer, auditory information allows visually impaired individuals to process surrounding information, including items that are not directly reachable by the observer. For instance, in spatial navigation, sensory substitution devices can aid the ability to build mental maps by integrating auditory and self-motion information (Jicol et al., 2020). In the study, visually impaired individuals could take advantage of visual information converted to acoustic cues more efficiently than sighted participants when performing both egocentric and allocentric navigation tasks, thus indicating that multisensory cueing of space may reduce blindness-related deficits. Conversely, in the context of spatial memory, Setti et al. (2018) demonstrated that congenitally blind individuals show limitations when asked to manipulate spatial information in recalling sequences of spatialized sounds in the acoustic sensory domain.

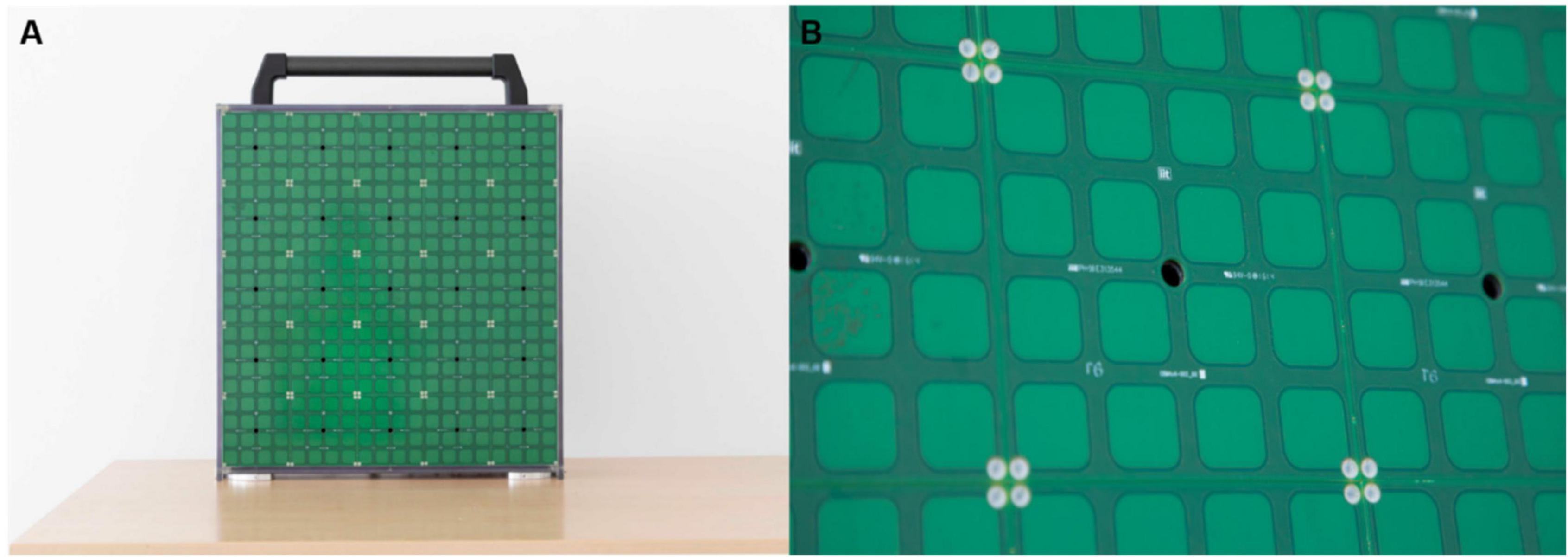

To investigate audio-spatial memory and exploration strategies in blindness in depth, we compared how blind and sighted individuals construct and manipulate a dynamic auditory structure in a spatial memory task. In the context of this study, the term “dynamic” refers to a spatial structure whose configuration needs to be continuously updated. This aspect reflects everyday life experiences where surrounding acoustic information constantly changes and provides a fundamental sensory cue for blind people to represent the surrounding environment. We tested the ability to hold spatialized sounds in memory and update the mental representation of their locations. With this goal in mind, inspiration was taken from the card game “Memory,” which works on attention, memory and concentration (da Cunha et al., 2016). By playing this card game, it is possible to improve concentration, train short-term memory, strengthen associations between concepts, and classify objects grouped by similar traits. In its original form, the game consists of covered cards lying on a table, and the goal is to find pairs among the cards. We adapted the “Memory” game to the auditory domain by employing a vertical array of speakers named ARENA2D (see Figure 1). We call this novel task “Audio-Memory.” Participants were required to match sounds that were spatially displaced over the audio-tactile device. To investigate the impact of memory load on performance, we designed two experimental conditions that differed in the number of sound pairs to be matched: four pairs (4-pair condition) and 12 pairs (12-pair condition). With the Audio-Memory task, we addressed the following research questions:

Figure 1. ARENA2D at two levels of detail. (A) Presents the device. ARENA2D is a vertical surface (50 × 50 cm) composed of 25 haptic blocks, each with a loudspeaker in the center, arranged in the form of a matrix. (B) Shows a single haptic block in detail. The black hole is the speaker from which the sound is emitted. The blocks are covered by 16 (4 × 4 matrix) tactile sensors (2 × 2 cm²) that register the position of each touch.

1. To what extent does early visual deprivation influence audio-spatial memory skills?

2. What is the exploration strategy used by the two groups when asked to explore a complex auditory structure to construct a spatial representation of sound dispositions?

Since vision is crucial for spatial processing (Alais and Burr, 2004; Burr et al., 2009; Hart and Moore, 2017), we hypothesized that a lack of visual experience would affect audio-spatial memory skills in blind individuals. Due to the great cognitive load required to manipulate spatial information and the difficulties in using the spatial relations among the sounds, we expected sighted participants to outperform blind participants. Furthermore, in the context of spatial exploration, we hypothesized that congenitally blind participants would explore the layout of speakers differently compared to the sighted group. Considering how the lack of visual experience affects spatial processing in blind individuals, we expected them to show a slower and more sequential exploration of the spatially distributed items compared to sighted participants.

Results

We tested blind and sighted adolescents and adults. All groups performed two experimental tasks, consisting of four and twelve pairs of sounds to be matched (Figure 2). To deliver sounds, we used ARENA2D, a 5-by-5 matrix of speakers covered by tactile sensors, each constituting a haptic block (Ahmad et al., 2019; Setti et al., 2019; Figure 1).
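The geometry of the device described here and in Figure 1 (a 5-by-5 matrix of haptic blocks on a 50 × 50 cm surface, each block covered by a 4 × 4 matrix of tactile sensors) can be made concrete with a short Python sketch. This is only an illustration and not the device's actual software: the row-major block numbering, the global sensor grid, the top-left origin, and all function names are assumptions introduced here.

```python
BLOCKS_PER_SIDE = 5    # 5-by-5 matrix of haptic blocks (from the text)
SENSORS_PER_SIDE = 4   # 4 x 4 tactile sensors per block (Figure 1B)
BLOCK_SIZE_CM = 10.0   # 50 cm surface divided into 5 blocks per side


def block_index(row, col):
    """Row-major index (0..24) of a haptic block; this numbering is an assumption."""
    if not (0 <= row < BLOCKS_PER_SIDE and 0 <= col < BLOCKS_PER_SIDE):
        raise ValueError("block outside the 5 x 5 matrix")
    return row * BLOCKS_PER_SIDE + col


def sensor_to_block(sensor_row, sensor_col):
    """Map a sensor on a hypothetical global 20 x 20 sensor grid to its (row, col) block."""
    side = BLOCKS_PER_SIDE * SENSORS_PER_SIDE
    if not (0 <= sensor_row < side and 0 <= sensor_col < side):
        raise ValueError("sensor outside the surface")
    return sensor_row // SENSORS_PER_SIDE, sensor_col // SENSORS_PER_SIDE


def speaker_center_cm(row, col):
    """(x, y) centre of a block's loudspeaker in cm, measured from an assumed top-left origin."""
    return ((col + 0.5) * BLOCK_SIZE_CM, (row + 0.5) * BLOCK_SIZE_CM)
```

Under these assumptions, a touch on any of the 16 sensors of a block resolves to the same block, which is the unit at which sounds are assigned in the task.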

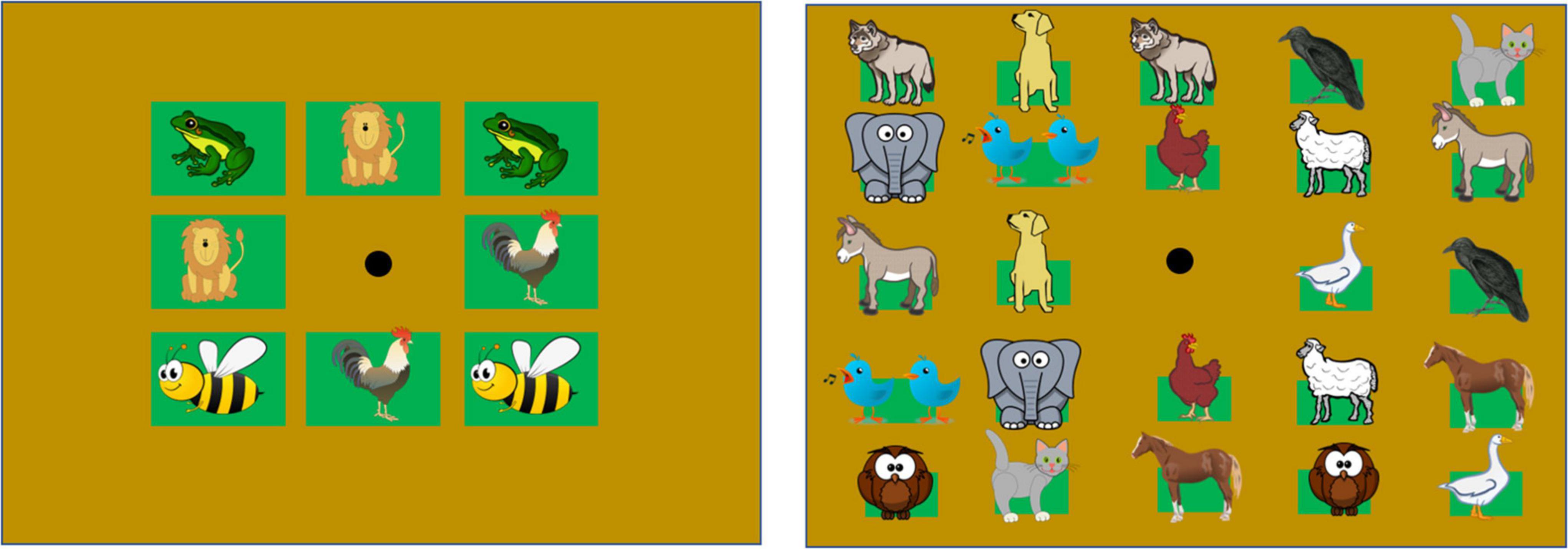

Figure 2. Grids used in experimental conditions. The two grids differ in the size of the apertures for each auditory item. The apertures on the grids represented in the left column are 10 cm × 10 cm, equal to the haptic block size. The apertures on the grids represented in the right column are 4 cm × 4 cm. The depicted animals placed inside the squares refer to the position of the animal calls in each grid (images downloaded from a royalty-free website, https://publicdomainvectors.org/). The black dot at the center indicates the speaker emitting feedback sounds.

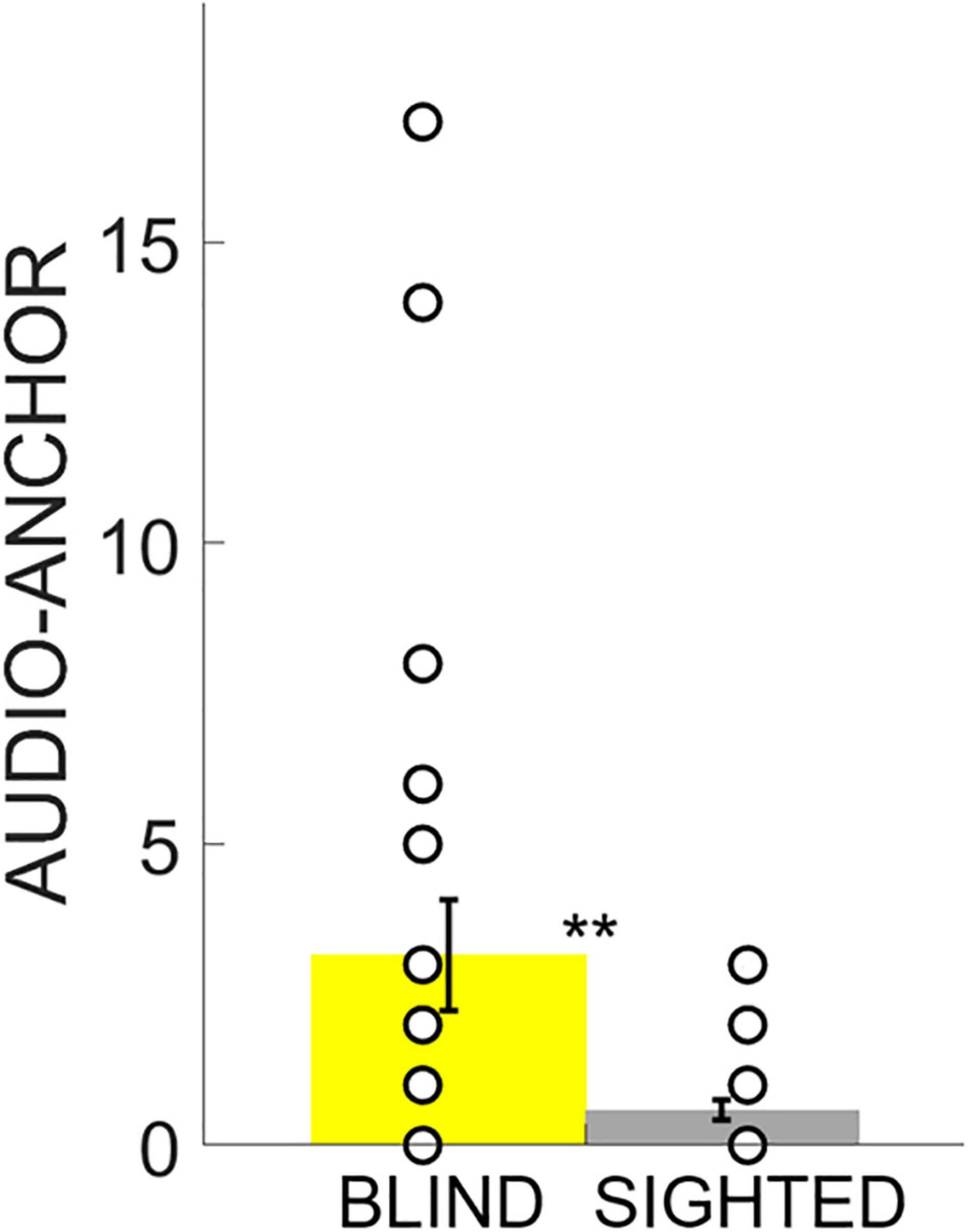

Participants sat facing ARENA2D at a distance of 30 cm. Memory performance was evaluated using the Score reached at the end of the test and the Number of attempts to pair the two stimuli once positions were discovered. Finally, we defined the Audio-Anchor index as an expression of spatial exploration strategy that measured how often participants started two consecutive attempts by touching the same haptic block (see “Data Analysis” for further details). Statistical analyses were conducted using RStudio (Version 1.1.463) and data are shown as means and standard error (Figures 3, 4, 5). Given the small sample size, statistical analyses were conducted with non-parametric tests based on permutations. We first ran a non-parametric MANCOVA (adonis2() R function) with Score, Number of attempts and Audio-Anchor as dependent variables, Group (either blind or sighted) as between-subject, Difficulty (either easy or hard) as within-subject and Age (the age of the participants in decimal number of years) as a covariate. Follow-up analyses were conducted only for the significant interactions with ANOVAs based on permutations (aovp() R function). Finally, post hoc analyses were run with unpaired Student’s t-tests based on permutations (perm.t.test() R function) and Bonferroni corrections were used to correct for multiple comparisons (see the Statistical Analyses section for further details).
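To illustrate the logic of the post hoc step described above (an unpaired t-test assessed by permutation, followed by a Bonferroni correction), the following Python sketch may help. It is not the authors' analysis, which was run in R with `perm.t.test()`; the function names, the default number of permutations, and the two-sided p-value estimator are assumptions made for this example.

```python
import math
import random
import statistics


def welch_t(a, b):
    """Welch's t statistic for two independent samples."""
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    if se == 0.0:
        # Degenerate case: both samples are constant.
        diff = mean_a - mean_b
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return (mean_a - mean_b) / se


def permutation_welch_test(a, b, n_perm=5000, seed=0):
    """Two-sided permutation p-value for Welch's t (hypothetical helper)."""
    rng = random.Random(seed)
    observed = welch_t(a, b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        # Randomly reassign group labels by shuffling the pooled data.
        rng.shuffle(pooled)
        t = welch_t(pooled[:len(a)], pooled[len(a):])
        if abs(t) >= abs(observed):
            extreme += 1
    # Add-one estimator so the p-value is never exactly zero.
    return observed, (extreme + 1) / (n_perm + 1)


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

A permutation approach of this kind makes no normality assumption, which is the motivation given in the text for using it with a small sample.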

Figure 3. Score. Data are presented as mean and standard error for each group. The white circles on the bars represent the individual data. The Score reached by the participants was lower in the 12-pair condition and the sighted outperformed the blind group in both experimental conditions. * indicates p < 0.05, ** indicates p < 0.01, *** indicates p < 0.001.

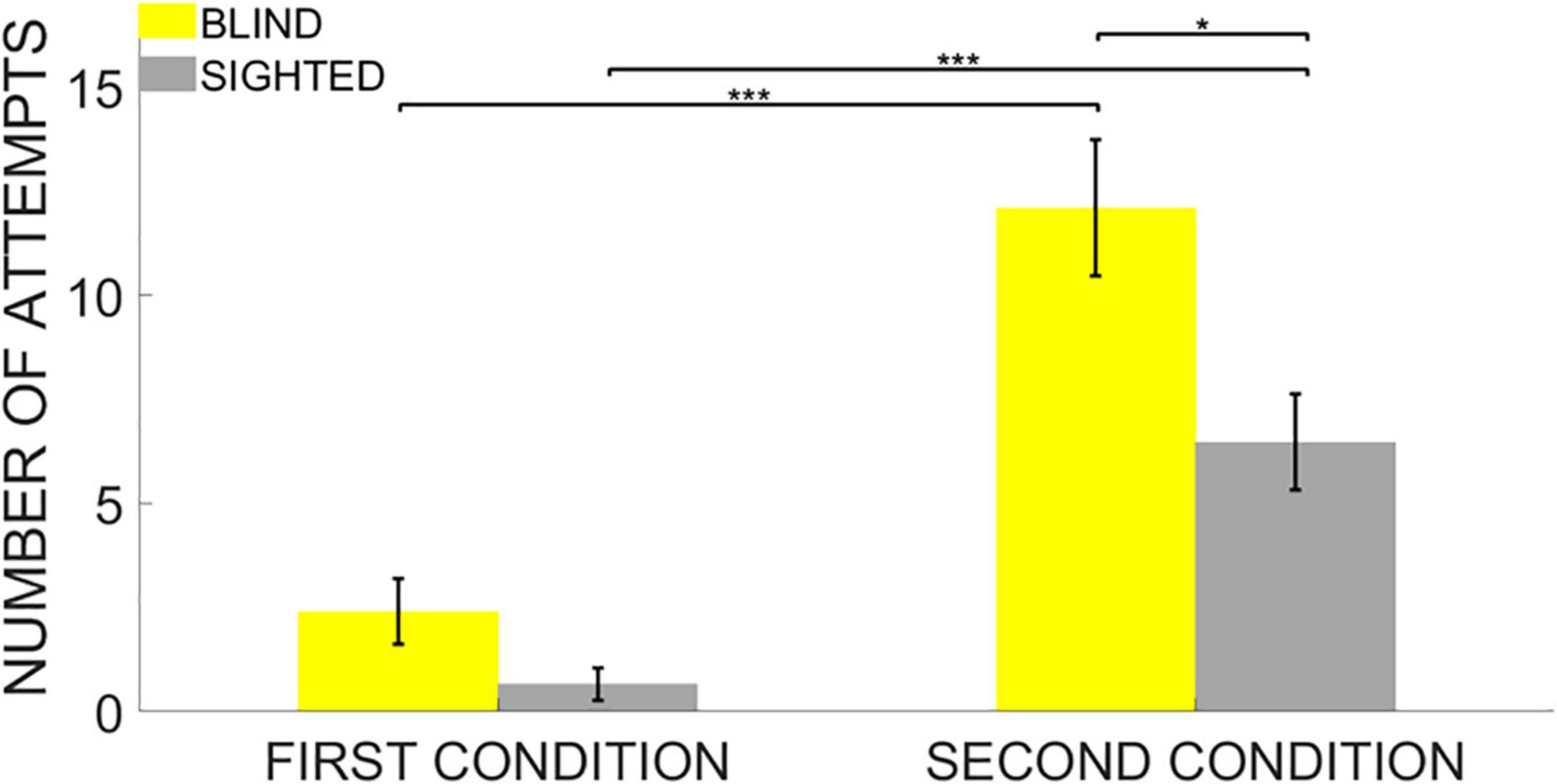

Figure 4. Number of Attempts. Data are presented as mean and standard error for each group. The white circles on the bars represent the individual data. Both groups needed more attempts to end the task in the 12-pair than in the 4-pair condition. The sighted group needed fewer attempts than the blind group to pair the items once their locations had been discovered on ARENA2D, but only in the second condition; no significant difference between the groups was found in the first condition. * indicates p < 0.05, *** indicates p < 0.001.

Figure 5. Audio-Anchor. Data are presented as the mean and standard error. The white circles on the bars represent the individual data. The blind group relied more on the use of the Audio-Anchor regardless of the experimental condition. ** indicates p < 0.01.

Results of the non-parametric MANCOVA (number of permutations = 999) highlighted a significant main effect of the Group [F(1,40) = 9.124, p = 0.001, η2 = 0.243], a significant main effect of Difficulty [F(1,40) = 72.62, p = 0.001, η2 = 0.909] and a significant interaction Group * Difficulty [F(1,40) = 3.35, p = 0.032, η2 = 0.202] but no significant main effect of Age [F(1,40) = 0.44, p = 0.626], nor significant interactions Group * Age [F(1,40) = 0.915, p = 0.367], Age * Difficulty [F(1,40) = 0.07, p = 0.939] nor Group * Age * Difficulty [F(1,40) = 1.87, p = 0.154]. Thus, given that the only significant interaction was Group * Difficulty, we ran a follow-up non-parametric ANOVA, separately for the Score, the Number of attempts and the Audio-Anchor, with Group as between-subject and Difficulty as within-subject factors, and by pooling adolescents and adults in both groups. The results of the MANCOVA indeed highlighted that performance in the task was not affected by participants’ age.

The ANOVA on the Score showed a significant main effect of the Group (iterations = 5,000, p < 0.001, η2 = 0.24), a significant main effect of the Difficulty (iterations = 5,000, p < 0.001, η2 = 0.76) and a significant interaction Group * Difficulty (iterations = 2,131, p = 0.041, η2 = 0.07). As expected, post hoc analyses first revealed that both blind and sighted participants reached a higher score in the first condition because it was easier in terms of number of stimuli to be paired (unpaired two-tailed t-test based on permutations: Welch’s t = 5.66, p < 0.001, Cohen’s d = 1.01, Welch’s t = 2.49, p < 0.001, Cohen’s d = 5.67 for the blind and sighted groups, respectively). Interestingly, we found that sighted participants reached a higher Score than blind participants in both the 4-pair (unpaired two-tailed t-test based on permutations: Welch’s t = 2.50, p = 0.031, Cohen’s d = 1.02) and 12-pair conditions (unpaired two-tailed t-test based on permutations: Welch’s t = 3.02, p = 0.01, Cohen’s d = 1.23) (Figure 3).

The results of the ANOVA on the Number of attempts also highlighted a significant main effect of the Group (iterations = 5,000, p < 0.001, η2 = 0.21), a significant main effect of the Difficulty (iterations = 5,000, p < 0.001, η2 = 0.53) and a significant interaction Group * Difficulty (iterations = 2,329, p = 0.04, η2 = 0.07). As expected, also in this case, post hoc analyses first revealed that both blind and sighted participants needed more attempts to end the task in the second condition due to the greater number of sounds to be paired (unpaired two-tailed t-test based on permutations: Welch’s t = 5.35, p < 0.001, Cohen’s d = 2.18, Welch’s t = 4.79, p < 0.001, Cohen’s d = 1.96 for the blind and sighted groups, respectively).

Post hoc analyses revealed no significant difference between the two groups in the Number of Attempts required to end the task in the first condition (unpaired two-tailed t-test based on permutations: Welch’s t = 1.98, p = 0.21). Nevertheless, the blind group needed more attempts to end the task in the second condition compared to the sighted group (unpaired two-tailed t-test based on permutations: Welch’s t = 2.815, p = 0.035, Cohen’s d = 1.15). Results for Number of Attempts confirmed that blind participants did not hold item locations in memory as efficiently as sighted participants, especially when the cognitive load of the task increased in the second condition (Figure 4).

Finally, we used the Audio-Anchor index to compare the strategies of the two groups when exploring the device and completing the task. The results of the ANOVA highlighted a main effect of the Group (iterations = 5,000, p < 0.001, η2 = 0.16) and of the Difficulty (iterations = 4,735, p < 0.001, η2 = 0.09), but no significant interaction between the two (iterations = 238, p = 0.3). Post hoc analysis revealed no significant difference between the first and second conditions in the tendency to use the Audio-Anchor as an exploration strategy, regardless of the experimental group (unpaired two-tailed t-test based on permutations: Welch’s t = 1.88, p = 0.063). However, the analyses indicated that blind participants were more prone to using this exploratory strategy in both the first and second conditions compared to the sighted group (unpaired two-tailed t-test based on permutations: Welch’s t = 2.76, p < 0.01, Cohen’s d = 0.56) (Figure 5).
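The Audio-Anchor index is described in the Results as a measure of how often participants started two consecutive attempts by touching the same haptic block. A minimal Python sketch of one way to compute such an index is given below. The exact definition belongs to the “Data Analysis” section, so the normalization used here (the proportion of consecutive attempt pairs that share the same first block), the data layout, and the function name are assumptions for illustration only.

```python
def audio_anchor_index(attempts):
    """Proportion of consecutive attempts that start on the same haptic block.

    attempts: chronological list of (first_block, second_block) tuples,
    where each block is any hashable identifier (hypothetical data layout).
    """
    if len(attempts) < 2:
        return 0.0
    repeats = sum(
        1
        for previous, current in zip(attempts, attempts[1:])
        if previous[0] == current[0]
    )
    return repeats / (len(attempts) - 1)
```

For example, a participant who starts three attempts in a row from block 3 and then moves to block 5 has two anchored transitions out of three under this assumed normalization.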

Discussion

This research investigated how blind people memorize, learn, and process acoustic spatial information and complex auditory spatial structures. To this aim, we adapted the card game “Memory” to be administered with blind and sighted participants in the form of an experimental paradigm with spatialized acoustic items instead of cards. Participants were asked to pair animal calls that were spatially displaced in two experimental conditions of increasing difficulty (the first one with eight and the second one with 24 items). In comparison to sighted individuals, we hypothesized that blind participants would encounter more difficulties when asked to process complex audio-spatial representations. In support of our hypotheses, we observed that the sighted group outperformed the blind group in both conditions by reaching a higher Score (Figure 3).

Furthermore, in the more difficult condition with twelve pairs to be matched, blind subjects needed more attempts to proceed with the task and returned more times on the same haptic blocks than the sighted group (Figure 4). This suggests that lack of vision may lead to difficulties in integrating the spatial positions of sounds into a coherent and functional spatial representation. Moreover, these results indicate that the absence of visual experience affects the employment of functional spatial exploration strategies in the discovery and memorization of non-visual auditory spatial structures regardless of the experimental condition (Figure 5).

Observed differences between blind and sighted participants can be related to difficulties in combining the spatial position of sound sources in a coherent and functional mental representation. The Audio-Memory task indeed requires the active manipulation of spatial information, a mental operation generally affected by congenital blindness. In the haptic modality, blind individuals can construct a mental representation of a tactile layout and remember the locations of the targets on their surface (Vecchi et al., 2005; Cattaneo et al., 2008). The same ability has been observed with acoustic items (Setti et al., 2018). Following the exploration of a complex and meaningful spatial auditory scene, when asked to recall the position of the items composing the layout one by one, blind and sighted participants performed equally well (Setti et al., 2018). Thus, after the exploration of a spatial arrangement, blind people should overall be able to recall the positions of all items composing a certain configuration (Vecchi, 1998; Cornoldi et al., 2000). Mental representations can indeed be built even in the absence of external visual inputs. In general, when the task demand is the simple memorization of items and the cognitive load imposed by the task is not high, blind and sighted individuals perform similarly (Cattaneo et al., 2008; Cattaneo and Vecchi, 2011). Nevertheless, good performance of blind individuals in spatial memory tests, even passive ones, strongly depends on the demands on memory and on the amount of spatial elaboration that is needed to perform the task. Visual perception is indeed the “preferred modality” in visuo-spatial working memory, and previous studies highlighted that, even with simple 2D patterns, blind participants performed poorly (Vecchi, 1998). In Setti et al. (2018), we found that blind participants could remember the spatial positions of the stimuli embedded in ARENA2D, the same device used in the current study.
The better performance of the sighted group was only ascribed to a better use of the spatial relations among the sounds and not to the simple memorization of their locations. The same pattern of results was confirmed in another work that relied on an acoustic virtual reality system (Setti et al., 2021a), where the blind group could easily remember sounds’ locations after a spatial exploration of the virtual environment. In the current study, the task required participants to generate a mental spatial image of the audio spatial structure of the items to be remembered. Thus, it is a more complex task than the simple memorization of items’ locations. Specifically, participants were required to memorize and manipulate the mental representation of a complex and dynamic acoustic layout. The dynamic aspect requires that a continuous updating process occur during the task while locations are maintained in memory. When a new item was discovered, participants had to remember its location and, at the same time, update the spatial representation of the scene by adding the uncovered sound’s position. Conversely, when two items were paired, their sites were covered by cardboard squares, thus removing them from the represented scene. These processes progressively increased the cognitive load imposed by the experimental paradigm as the subject proceeded toward the end of the task. In this sense, the differences observed between blind and sighted participants may reflect the greater need for blind individuals to use executive functions affected by increasing cognitive load. Along these lines, De Beni and Cornoldi (1988) observed that congenitally blind individuals experience more difficulties in spatial WM tasks that have high memory demands than sighted individuals.
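The updating process described above (a newly discovered sound is added to the represented scene, and a matched pair is covered and removed from it) can be sketched as a small state tracker in Python. This is a hypothetical illustration rather than the software used in the experiment: the class, its method names, and the use of the count of discovered but unmatched locations as a proxy for what must be held in memory are all assumptions.

```python
class AudioMemoryState:
    """Minimal sketch of the evolving scene in an Audio-Memory-like game."""

    def __init__(self, layout):
        # layout: dict mapping a block id to a sound label;
        # each label is assumed to appear on exactly two blocks.
        self.layout = dict(layout)
        self.discovered = set()  # touched at least once, not yet matched
        self.covered = set()     # matched and covered, out of the scene
        self.attempts = 0

    def attempt(self, first, second):
        """Touch two distinct uncovered blocks; return True if their sounds match."""
        if first == second:
            raise ValueError("an attempt needs two different blocks")
        if first in self.covered or second in self.covered:
            raise ValueError("covered blocks can no longer be selected")
        self.attempts += 1
        self.discovered.update((first, second))
        matched = self.layout[first] == self.layout[second]
        if matched:
            # A matched pair is covered and leaves the represented scene.
            self.covered.update((first, second))
            self.discovered.difference_update((first, second))
        return matched

    def open_locations(self):
        """Number of discovered but unmatched locations (assumed memory-load proxy)."""
        return len(self.discovered)

    def finished(self):
        return len(self.covered) == len(self.layout)
```

In this sketch the scene grows with each newly discovered block and shrinks with each match, which mirrors the continuous updating that the paragraph above attributes to the task.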

Previous research has also shown that blind people have difficulty dealing with multiple haptic stimuli presented simultaneously (Vecchi et al., 1995). Our results lead to similar conclusions in the context of spatial memory of acoustic items. In the study presented here, we observed that blind participants tended to use an audio-anchor strategy to explore the audio-spatial structure more than sighted participants (Figure 5). In other words, blind participants were more likely to build their spatial representation of the auditory structure piece-by-piece by referring all spatial locations to a previously explored position on ARENA2D. Thus, in comparison to sighted individuals, blind individuals seem to be less able to organize and maintain spatialized auditory information, suggesting that the absence of visual experience confines WM abilities to a more sequential and slower processing of spatial information (Pascual-Leone and Hamilton, 2001; Cattaneo et al., 2008; Ruggiero and Iachini, 2010). As a result of their visual experience, sighted people can better code spatial information in the form of global, externally based representations (Cornoldi et al., 1993; Cattaneo et al., 2008). In line with the calibration theory on the development of multisensory processing of spatial information (Gori et al., 2012), visual experience appears to be fundamental for developing a functional representation of spatial information in structured patterns (i.e., chunks). Previous research on the simultaneous manipulation of multiple stimuli suggests that visual experience is needed to acquire such ability even if stimuli are not visually conveyed (De Beni and Cornoldi, 1988). In this context, we interpret our results as evidence of the influence of visual experience in multisensory processing of simultaneous stimulation.
The ability to process different sounds simultaneously and represent spatial information in the form of structured patterns may have helped sighted participants in updating the spatial representation of stimuli’s locations during the execution of the Audio-Memory task. Such ability seems to be compromised in blind participants, as expressed by their greater tendency to start consecutive trials by exploring previously discovered items. Similar to previous observations in the context of haptic spatial memory (Ruggiero and Iachini, 2010), blind individuals show limited functional strategies to process spatial information, thus suggesting that their performance required a greater involvement of executive resources compared to sighted participants.

Since participants generated the auditory feedback through their arm movements, spatial information emerged from sensorimotor contingencies’ coupling. In other words, to touch the sensors and emit the sound corresponding to each haptic block, the participant had first to reach the location with their arm. The movement of the arm could have been used as a cue to identify and consequently remember sound positions because the stimulus was generated after the touch. Past research showed that audition provides informative feedback on limb movement, enhancing localization skills after training (Bevilacqua et al., 2016; Cuppone et al., 2019). In the Audio-Memory task presented here, participants coupled their arm movement with spatial acoustic feedback.

Finally, independent of the presence of visual disability, we did not observe significant differences in the performance between adults and adolescents. Given that 12-year-old pre-adolescents have already reached an adult-like performance in a variety of sensory and cognitive tasks (Vuontela et al., 2003; Peterson et al., 2006; Luna, 2009; Scheller et al., 2021), we did not expect age-related differences in performance on the Audio-Memory task. Our results confirm similar age-related achievements for sighted and blind individuals, but we cannot exclude that such differences might be present in a younger population tested with the Audio-Memory task. Moreover, given that the task is designed in the form of a game, our experimental paradigm would be suitable to pursue studies in this direction to elucidate the influence of blindness in the development of audio-spatial memory skills. As shown here, the adaptation of a game in an experimental protocol allows the use of such a procedure with visually impaired individuals across a wide age range, including those in late childhood and potentially also with younger children. Beyond scientific settings, the Audio-Memory may be adapted for educational purposes as a tool to speed learning and development of new concepts and associations, facilitating the inclusion of visually impaired individuals in educational contexts.

Conclusion

This study evaluated the spatial and memory skills of blind and sighted individuals and their strategies for exploring complex auditory structures. Early visual deprivation affects the processing and exploration of spatial items embedded in a complex acoustic structure. With higher cognitive demands (such as those required in the 12-pair condition), blind subjects needed more attempts than sighted participants to update the spatial information learned during the task. Furthermore, blind participants relied more on the audio-anchor strategy to explore ARENA2D and build a functional, unified and constantly updated spatial representation. In line with previous findings, limitations observed in the haptic domain (Vecchi et al., 2005) also held for the auditory modality, thus confirming the pivotal role of visual experience in the active manipulation of memorized spatial information. The current paradigm, designed in the form of a game, can be used as a starting point to define novel procedures for cognitive evaluation and rehabilitation. In addition, the Audio-Memory task might be suitable for developing multisensory training to enhance spatial representation through the coupling of auditory and proprioceptive cues. These procedures might be used in clinical conditions where the auditory modality can be more effective than vision, such as visual impairment or cognitive and neuropsychological impairments.

Materials and Methods

Participants

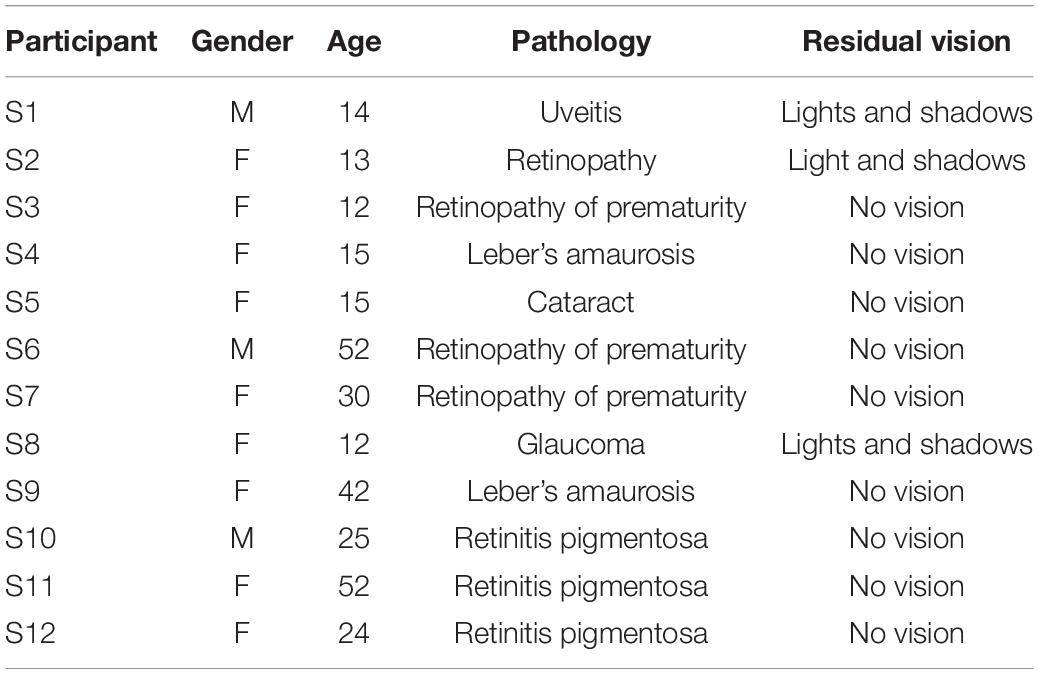

Twelve congenitally blind (nine females; age range: 12–52 years, mean age ± SD: 25.5 ± 15.29 years, ethnicity: Caucasian) and twelve sighted (nine females; age range: 12–54 years, mean age ± SD: 25.83 ± 15.86 years, ethnicity: Caucasian) individuals took part in the experiment. In the recruitment process, we used a broad age range because of general difficulties in recruiting congenitally blind individuals. Clinical details of the blind participants’ visual impairment are given in Table 1. Blind adults were recruited from our institute database and blind adolescents from the “Istituto David Chiossone” based in Genoa, Italy. The local health service ethics committee approved the experiments (Comitato Etico, ASL 3, Genoa, Italy). Parental or adult informed written consent for the study was obtained in all cases. All experiments were performed in accordance with the Declaration of Helsinki. None of the sighted or blind participants had any additional sensory or cognitive disabilities.

Setup and Stimuli

The test was performed using a vertical array of speakers, arranged in the form of a matrix (50 × 50 × 10 cm) called ARENA2D (see Figure 1 for details) that allowed for the serial emission of spatialized sounds.

This device comprises 25 haptic blocks (10 × 10 cm²), each covered by a 4 × 4 matrix of tactile sensors (2 × 2 cm² each) (see Figure 1 for details). When a tactile sensor of a haptic block is touched, the touch position is registered. For each haptic block, the sound is emitted from the speaker belonging to that block (see the black holes in Figure 1B); thus, sounds are spatially distributed over the surface of ARENA2D. All blocks are connected in cascade through USB cables [see technical details in Setti et al. (2019)]. Two cardboard grids were used to allow for haptic exploration of the device while performing the task. The grids were developed in collaboration with rehabilitators from the David Chiossone Institute (Genoa, Italy). Following the rehabilitators’ indications, apertures were as big as the haptic blocks in the 4-pair condition (10 × 10 cm²), while we used smaller apertures for the 12-pair condition (4 × 4 cm²) to facilitate haptic exploration and coding of each position on the device. To avoid performance being influenced by localization abilities, we chose aperture sizes larger than the auditory localization error previously observed in blind individuals, i.e., 3 cm (Cappagli et al., 2017).

The sounds chosen were distinctive animal calls to ensure ease of discrimination (Table 2). All sounds were downloaded from an online database of common licensed sounds1, equalized and reproduced from the speakers at the same volume. All sound clips lasted 3 s to support easy recognition by participants. Feedback sounds about the performance were emitted from the central speaker of ARENA2D (Figure 1): a “Tada!” sound when two items were matched and a recorded voice saying “NO” otherwise. At the end of the task, a jingle was played from the central speaker to make the test engaging. The sound pressure level (SPL) was maintained at 70 dB and the Root Mean Square (RMS) level was calibrated to be the same across the various signals.
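The text does not say how the RMS levels were matched, so the following is only a minimal sketch of one common approach: scale each clip by a single gain so that its RMS equals a shared target. The function names `rms` and `match_rms`, the target value and the toy sample lists are illustrative assumptions, not the authors' procedure.

```python
from math import sqrt


def rms(samples):
    """Root mean square of a sequence of audio samples."""
    return sqrt(sum(s * s for s in samples) / len(samples))


def match_rms(samples, target_rms):
    """Scale a clip by one gain factor so that its RMS equals target_rms.

    Hypothetical helper: a silent clip (RMS of zero) is returned unchanged
    because no gain can raise it to the target.
    """
    current = rms(samples)
    if current == 0:
        return list(samples)
    gain = target_rms / current
    return [s * gain for s in samples]


# Toy clips standing in for two animal calls (not real recordings).
quiet_clip = [0.05, -0.05, 0.05, -0.05]
loud_clip = [0.5, -0.5, 0.5, -0.5]
target = 0.1  # assumed common RMS level, in arbitrary linear units

equalized = [match_rms(clip, target) for clip in (quiet_clip, loud_clip)]
```

After this step both toy clips have the same RMS, which is the property the calibration described above aims for. The absolute playback level (70 dB SPL in the study) would still have to be set on the hardware.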

Experimental Procedure

The experimental protocol was an adapted audio version of the classic card game “Memory,” designed to be performed by blind individuals. Cards were replaced with sounds (animal calls) and two experimental conditions were considered (Figure 2). In the 4-pair condition, participants searched for four pairs of identical animal calls; in the 12-pair condition, there were 12 pairs to be matched. We used different sets of animal calls for each condition. To differentiate between experimental conditions, we used two cardboard grids that differed in the number and shape of their apertures (Figure 2). Overall, the 12-pair condition required increased memory load.

During the experiment, subjects sat on a chair at a distance of 30 cm from the device, whose position was adjusted to align the subject’s nose with the grid’s central aperture. None of the participants had previously interacted with ARENA2D, and the sighted participants entered the room already blindfolded. The experimenter guided the subject’s hands over the grid with eight apertures and counted the apertures together with the participant. After this phase, participants freely touched ARENA2D with both hands to familiarize themselves with the device. During the actual test, subjects were instructed to use the index finger of their dominant hand to select items and explore the device. Before starting the experiment, participants completed a practice session. Using the 4-pair condition grid, the experimenter guided the participant’s hand, first over two unpaired free slots (that emitted different sounds), and then over two paired items, to familiarize them with the task and the feedback sounds. After this practice session, participants listened to and identified each animal call. The experimenter confirmed that all participants recognized all animal calls. After the recognition phase, the test started with the 4-pair condition (Figure 2, left panel). Once this session had finished, the grid for the second experimental condition was placed over the device (Figure 2, right panel). Subjects explored this grid by counting the free apertures with the experimenter’s help and then exploring the device with no guidance. When the subjects were confident with the grid, the 12-pair condition started. All subjects were instructed not to move their head throughout the experiment. In the case of head movements, the experimenter stopped the test to readjust the participant’s head position. The test did not have a fixed duration since it was self-paced. However, each session lasted 25 min on average.

Data Analysis

In both conditions, three parameters were calculated to quantify subjects’ memory performance and exploration strategy. Details of each parameter follow.

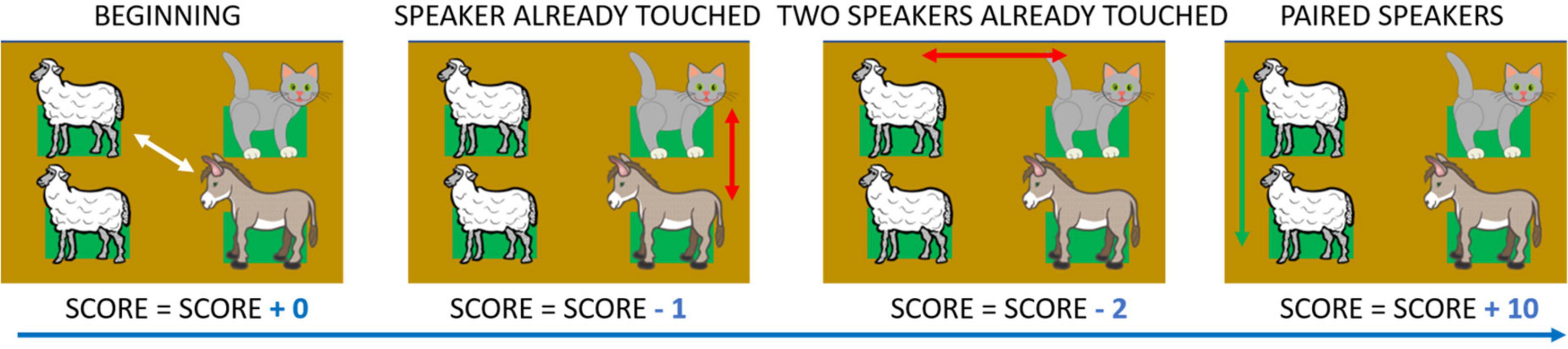

Score

Score reflects the number of touches on the same haptic block (Figure 6). The more often participants touched the same haptic block, the lower their Score. This parameter quantified the overall memory performance in the test and was calculated as follows. For each attempt, if both haptic blocks touched were selected for the first time, the total Score was neither increased nor decreased: in this case, memory processes could not influence item choice because the items had not previously been discovered. If, in an attempt, only one of the two haptic blocks had already been touched, the total Score was decreased by one. If both haptic blocks had already been touched in an attempt, the total Score was decreased by two. In both of these cases, the Score was decreased to account for inferior performance in recalling the position of a previously uncovered item. When a pair was found, regardless of the number of touches on each haptic block, the total Score was increased by 10. Figure 6 shows a detailed example of how Score is calculated.

Figure 6. Score, example of calculation. Score is an index that decreases when participants press a panel they have previously chosen. When two blocks are touched for the first time a Score of 0 is allocated. If they have already touched one or both blocks, the Score decreases by one or two, respectively. When a pair is found the Score increases by ten. In the example, if the starting value were equal to zero, the final Score would be: 0 – 1 – 2 + 10 = 7. Depicted animals were downloaded from a royalty-free images web archive (https://publicdomainvectors.org/).
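The Score rule can be sketched in a few lines of Python. This is an illustrative reading of the description rather than the authors' analysis code: the function name `compute_score`, the block labels and the attempt sequence are hypothetical, and the sketch assumes that a matching attempt only adds 10 points, with no penalty for previously touched blocks ("regardless of the number of touches").

```python
def compute_score(attempts, start=0):
    """Compute the Score from a sequence of attempts.

    Each attempt is a tuple (first_block, second_block, pair_found).
    Assumption: when a pair is found, only the +10 bonus is applied.
    """
    touched = set()  # blocks selected in any earlier attempt
    score = start
    for first, second, pair_found in attempts:
        if pair_found:
            score += 10
        else:
            # Lose one point for each block that had already been touched.
            score -= sum(1 for block in (first, second) if block in touched)
        touched.update((first, second))
    return score


# Hypothetical sequence reproducing the arithmetic of the Figure 6 caption:
# 0 (two new blocks) - 1 (one known block) - 2 (two known blocks) + 10 (pair).
example = [
    ("A", "B", False),
    ("A", "C", False),
    ("B", "C", False),
    ("A", "D", True),
]
final_score = compute_score(example)  # 0 - 1 - 2 + 10 = 7
```

Keeping the set of touched blocks separate from the running total makes it easy to swap in a different convention for matching attempts if the assumption above does not hold.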

Number of Attempts

This parameter quantifies how many attempts the subject required to pair two identical items once their positions had been discovered on ARENA2D. The higher the value, the more attempts were needed to pair the sounds and, therefore, the worse the performance. It thus reflects the ability to maintain the spatial locations of uncovered items in memory. The Number of Attempts to pair sounds once their locations were discovered on the device was calculated for each possible pairing. For each participant, we summed up the number of trials to pair each couple of sounds (four and twelve pairs for the 4-pair and 12-pair condition, respectively). Then, we averaged these numbers to obtain a mean number of trials to pair two sounds for each participant. Finally, these means were averaged across all subjects.
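As a rough sketch of this measure, the snippet below counts, for each sound, the attempts made after both of its positions have been touched at least once, up to and including the matching attempt. That counting convention is an assumption, since the text does not fix where the count starts. The names `attempts_to_pair` and `sound_at` and the toy layout are hypothetical.

```python
from statistics import mean


def attempts_to_pair(attempts, sound_at):
    """Attempts needed to pair each sound once both positions are known.

    attempts: list of (first_block, second_block) tuples in temporal order.
    sound_at: dict mapping each block to the sound it emits.
    Assumption: a pair matched in the same attempt that reveals its second
    position counts as zero additional attempts.
    """
    positions = {}  # sound -> set of blocks emitting it
    for block, sound in sound_at.items():
        positions.setdefault(sound, set()).add(block)

    touched = set()
    known_since = {}  # sound -> attempt index at which both positions were known
    needed = {}       # sound -> attempts from discovery to successful pairing
    for index, (first, second) in enumerate(attempts):
        sound = sound_at[first]
        if first != second and sound == sound_at[second] and sound not in needed:
            needed[sound] = index - known_since.get(sound, index)
        touched.update((first, second))
        for name, blocks in positions.items():
            if name not in known_since and blocks <= touched:
                known_since[name] = index
    return needed


# Toy layout with two pairs (hypothetical data, not from the study).
layout = {"A": "cat", "B": "dog", "C": "dog", "D": "cat"}
sequence = [("A", "B"), ("C", "D"), ("A", "C"), ("A", "D"), ("B", "C")]
per_pair = attempts_to_pair(sequence, layout)
participant_mean = mean(list(per_pair.values()))
```

Averaging `participant_mean` across participants would then give the group-level value described above.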

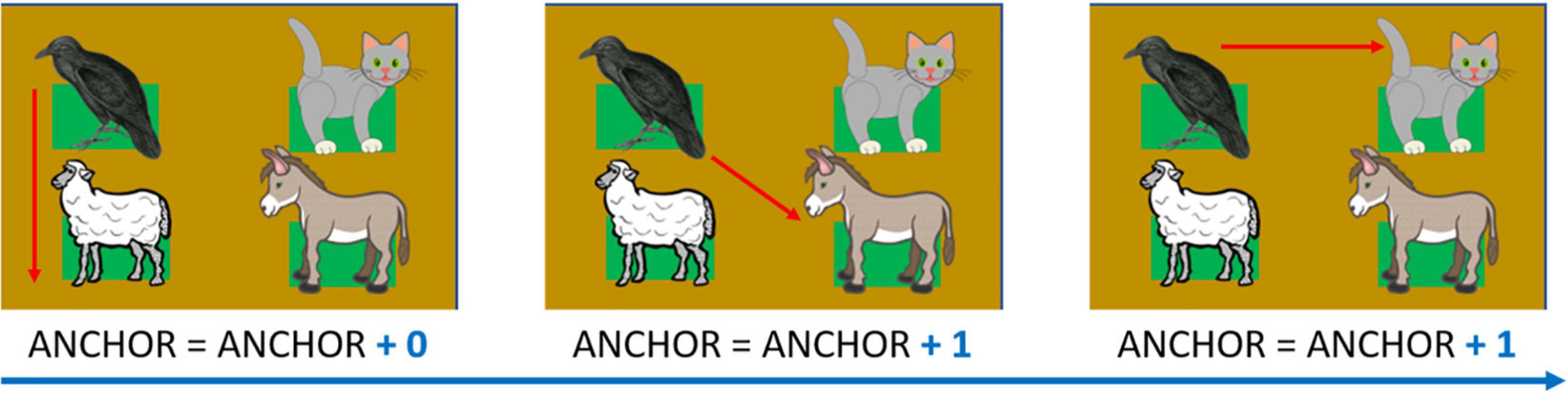

Audio-Anchor

This index counts how many consecutive attempts the participant begins from the same haptic block and thus measures exploration strategy. In other words, it evaluates how many times participants started consecutive attempts from the first haptic block of the last pair that they tapped. The more this strategy is adopted, the higher the index. For instance, suppose that the participant encounters, in an attempt, the cat’s meow first and the dog’s bark second. The Audio-Anchor index would increase by one if they began the subsequent attempt from the same cat’s meow position. The index keeps increasing until the participant starts an attempt by touching a different stimulus position. As in a previous study (Setti et al., 2021b), the Audio-Anchor provides a measure of how well the person constructs their spatial representation of the sound layout. Thus, the greater the final index, the less the mutual relationships among the stimuli are used (see Figure 7 for details).

Figure 7. Audio-Anchor, example of calculation. The index equals zero at the beginning of the test. The index increases with more attempts starting with the same haptic block. In the presented example, the final value would be: 0 + 1 + 1 = 2. Depicted animals were downloaded from a royalty-free images web archive (https://publicdomainvectors.org/).
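A minimal sketch of the Audio-Anchor count, assuming the index is cumulative over the whole test and increases by one whenever an attempt starts from the same block as the immediately preceding attempt. The function name `audio_anchor_index` and the block labels are hypothetical.

```python
def audio_anchor_index(attempts):
    """Count attempts that start from the same block as the previous attempt.

    attempts: list of (first_block, second_block) tuples in temporal order.
    """
    index = 0
    for previous, current in zip(attempts, attempts[1:]):
        if current[0] == previous[0]:
            index += 1
    return index


# Hypothetical sequence: three attempts anchored on block "A", then a change.
sequence = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C")]
anchor = audio_anchor_index(sequence)  # 0 + 1 + 1 = 2
```

Only the first block of each attempt enters the comparison, which matches the cat and dog example: returning to the cat's position raises the index, whatever is touched second.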

Statistical Analyses

All analyses were carried out in RStudio, Version 1.1.463 (R Core Team, 2020), with non-parametric tests given the small sample size. We first ran a two-way repeated measures MANCOVA based on permutations [adonis2() function in the R package “vegan” (Dixon, 2003)] with Score, Number of Attempts and Audio-Anchor as dependent variables, Group (blind or sighted) as between-subject factor, Difficulty (easy or hard, i.e., the 4-pair or 12-pair condition) as within-subject factor and Age (i.e., the age of the participants in decimal years) as covariate to check for the influence of age on overall performance. Since we did not find any effect of age or any interaction involving age, follow-up ANOVAs, conducted only for the significant interactions, were run with permutation tests [aovp() function in the R package “lmPerm” (Wheeler and Torchiano, 2016)]. The lmPerm package uses permutation tests to obtain p-values for linear models when data do not follow a normal distribution (Wheeler, 2010). For non-normally distributed data, permutation test p-values are reported. Finally, post hoc analyses were run with two-tailed unpaired Student’s t-tests based on permutations (perm.t.test() R function). Effect sizes were calculated as partial eta-squared (η²) for ANCOVAs (small, ≥ 0.01; medium, ≥ 0.06; large, ≥ 0.14) and as Cohen’s d for the t-tests (small, ≥ 0.2; medium, ≥ 0.5; large, ≥ 0.8). Bonferroni correction was applied to the multiple-comparison post hoc tests (p < 0.05 was considered significant).
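The analyses themselves were run in R. The Python sketch below only illustrates the logic of the post hoc step and is not a port of `perm.t.test()`. It uses the absolute difference in group means as the permutation statistic, which for a fixed pooled sample orders the permutations in the same way as the pooled-variance t statistic. All function names, the number of permutations, the seed and the toy data are assumptions made for illustration.

```python
import random
from math import sqrt
from statistics import mean, variance


def permutation_p_value(x, y, n_permutations=2000, seed=0):
    """Two-tailed permutation p-value for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(x) - mean(y))
    pooled = list(x) + list(y)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # random reassignment of group labels
        diff = abs(mean(pooled[:len(x)]) - mean(pooled[len(x):]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


def cohens_d(x, y):
    """Cohen's d using the pooled standard deviation."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var)


def effect_label(d):
    """Label |d| with the thresholds quoted in the text (0.2, 0.5, 0.8)."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"  # label chosen here; the text names no such category


def bonferroni(p_value, n_comparisons):
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p_value * n_comparisons)


# Toy values only; these are not data from the study.
group_a = [1, 2, 3, 4, 5, 6]
group_b = [101, 102, 103, 104, 105, 106]
p = permutation_p_value(group_a, group_b)
adjusted = bonferroni(p, n_comparisons=2)
```

A fixed seed makes the Monte Carlo p-value reproducible; with real data the number of permutations would normally be chosen large enough for the estimate to be stable.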

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Comitato Etico, ASL 3, Genoa, Italy. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

Author Contributions

WS, LC, and MG conceived the studies and designed the experiments. EC helped in the recruitment of blind participants. All authors wrote and reviewed the manuscript.

Funding

This research was supported by the “Istituto David Chiossone,” based in Genoa. This research was also partially supported by the MYSpace project (PI MG), which has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 Research and Innovation Program (grant agreement No 948349).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank Claudio Campus for his technical assistance. Finally, special thanks go to all the people who took part in the experiments.

Footnotes

References

Ahmad, H., Setti, W., Campus, C., Capris, E., Facchini, V., Sandini, G., et al. (2019). The Sound of Scotoma: audio Space Representation Reorganization in Individuals With Macular Degeneration. Front. Integr. Neurosci. 2019:44. doi: 10.3389/fnint.2019.00044

Alais, D., and Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257–262. doi: 10.1016/j.cub.2004.01.029

Arditi, R., and Dacorogna, B. (1988). Optimal foraging on arbitrary food distributions and the definition of habitat patches. Am. Natural. 1988:284825. doi: 10.1086/284825

Arnold, A. E. G. F., Burles, F., Krivoruchko, T., Liu, I., Rey, C. D., Levy, R. M., et al. (2013). Cognitive mapping in humans and its relationship to other orientation skills. Exp. Brain Res. 224, 359–372. doi: 10.1007/s00221-012-3316-0

De Beni, R., and Cornoldi, C. (1988). Imagery limitations in totally congenitally blind subjects. J. Exp. Psychol. 14, 650–655. doi: 10.1037/0278-7393.14.4.650

Bertonati, G., Tonelli, A., Cuturi, L. F., Setti, W., and Gori, M. (2020). Assessment of spatial reasoning in blind individuals using a haptic version of the Kohs Block Design Test. Curr. Res. Behav. Sci. 1:100004. doi: 10.1016/j.crbeha.2020.100004

Bevilacqua, F., Boyer, E. O., Françoise, J., Houix, O., Susini, P., Roby-Brami, A., et al. (2016). Sensori-motor learning with movement sonification: perspectives from recent interdisciplinary studies. Front. Neurosci. 2016:385. doi: 10.3389/fnins.2016.00385

Bonino, D., Ricciardi, E., Bernardi, G., Sani, L., Gentili, C., Vecchi, T., et al. (2015). Spatial imagery relies on a sensory independent, though sensory sensitive, functional organization within the parietal cortex: a fMRI study of angle discrimination in sighted and congenitally blind individuals. Neuropsychologia 68, 59–70. doi: 10.1016/j.neuropsychologia.2015.01.004

Bonino, D., Ricciardi, E., Sani, L., Gentili, C., Vanello, N., Guazzelli, M., et al. (2008). Tactile spatial working memory activates the dorsal extrastriate cortical pathway in congenitally blind individuals. Archives Italiennes de Biologie 146, 133–146. doi: 10.1162/jocn_a_00208

Burr, D., Banks, M. S., and Morrone, M. C. (2009). Auditory dominance over vision in the perception of interval duration. Exp. Brain Res. 198, 49–57. doi: 10.1007/s00221-009-1933-z

Cappagli, G., Cocchi, E., and Gori, M. (2017). Auditory and proprioceptive spatial impairments in blind children and adults. Dev. Sci. 20:3. doi: 10.1111/desc.12374

Carreiras, M., and Codina, B. (1992). Spatial cognition of the blind and sighted: visual and amodal hypotheses. Cahiers de Psychologie Cognitive. 12:1.

Cattaneo, Z., and Vecchi, T. (2011). Blind Vision: The Neuroscience of Visual Impairment. Cambridge, MA: MIT Press. doi: 10.1007/s00221-007-0982-4

Cattaneo, Z., Vecchi, T., Cornoldi, C., Mammarella, I., Bonino, D., Ricciardi, E., et al. (2008). Imagery and spatial processes in blindness and visual impairment. Neurosci. Biobehav. Rev. 32, 1346–1360. doi: 10.1016/j.neubiorev.2008.05.002

Cornoldi, C., Bertuccelli, B., Rocchi, P., and Sbrana, B. (1993). Processing Capacity Limitations in Pictorial and Spatial Representations in the Totally Congenitally Blind. Cortex 29, 675–689. doi: 10.1016/S0010-9452(13)80290-0

Cornoldi, C., Rigoni, F., Venneri, A., and Vecchi, T. (2000). Passive and active processes in visuo-spatial memory: double dissociation in developmental learning disabilities. Brain Cogn. 43, 117–120.

Cornoldi, C., and Vecchi, T. (2003). Visuo-spatial working memory and individual differences. Book 2003, 1–182. doi: 10.4324/9780203641583

Cuppone, A. V., Cappagli, G., and Gori, M. (2019). Audio-Motor Training Enhances Auditory and Proprioceptive Functions in the Blind Adult. Front. Neurosci. 2019:1272. doi: 10.3389/fnins.2019.01272

Cuturi, L., Cappagli, G., Finocchietti, S., Cocchi, E., and Gori, M. (2017). New rehabilitation technology for visually impaired children and adults based on multisensory integration. J. Vis. 2017:592. doi: 10.1167/17.10.592

da Cunha, S. N. S., Travassos Junior, X. L., Guizzo, R., and de Sousa Pereira-Guizzo, C. (2016). The digital memory game: an assistive technology resource evaluated by children with cerebral palsy. Psicologia 2016:9. doi: 10.1186/s41155-016-0009-9

Dixon, P. (2003). VEGAN, a package of R functions for community ecology. J. Veget. Sci. 14:927. doi: 10.1658/1100-92332003014[0927:vaporf]2.0.co;2

Doucet, M. E., Guillemot, J. P., Lassonde, M., Gagné, J. P., Leclerc, C., and Lepore, F. (2005). Blind subjects process auditory spectral cues more efficiently than sighted individuals. Exp. Brain Res. 160, 194–202. doi: 10.1007/s00221-004-2000-4

Farah, M. J., Hammond, K. M., Levine, D. N., and Calvanio, R. (1988). Visual and spatial mental imagery: dissociable systems of representation. Cogn. Psychol. 1988:6. doi: 10.1016/0010-0285(88)90012-6

Féry, Y. A. (2003). Differentiating visual and kinesthetic imagery in mental practice. Can. J. Exp. Psychol. 2003:87408. doi: 10.1037/h0087408

Finke, R. A., and Freyd, J. J. (1989). Mental Extrapolation and Cognitive Penetrability: reply to Ranney and Proposals for Evaluative Criteria. J. Exp. Psychol. 1989:403. doi: 10.1037/0096-3445.118.4.403

Gori, M., Giuliana, L., Sandini, G., and Burr, D. (2012). Visual size perception and haptic calibration during development. Dev. Sci. 15, 854–862. doi: 10.1111/j.1467-7687.2012.01183.x

Gori, M., Sandini, G., Martinoli, C., and Burr, D. C. (2014). Impairment of auditory spatial localization in congenitally blind human subjects. Brain 2014:311. doi: 10.1093/brain/awt311

Gougoux, F., Lepore, F., Lassonde, M., Voss, P., Zatorre, R. J., and Belin, P. (2004). Neuropsychology: pitch discrimination in the early blind. Nature 430, 309–309. doi: 10.1038/430309a

Hart, R. A., and Moore, G. T. (2017). The development of spatial cognition: a review. Image Env. 2017:26. doi: 10.4324/9780203789155-26

Iachini, T., Ruggiero, G., and Ruotolo, F. (2014). Does blindness affect egocentric and allocentric frames of reference in small and large scale spaces? Behav. Brain Res. 273, 73–81. doi: 10.1016/j.bbr.2014.07.032

Jicol, C., Lloyd-Esenkaya, T., Proulx, M. J., Lange-Smith, S., Scheller, M., O’Neill, E., et al. (2020). Efficiency of Sensory Substitution Devices Alone and in Combination With Self-Motion for Spatial Navigation in Sighted and Visually Impaired. Front. Psychol. 2020:1443. doi: 10.3389/fpsyg.2020.01443

Juurmaa, J., and Lehtinen-Railo, S. (1994). Visual Experience and Access to Spatial Knowledge. J. Vis. Impair. Blind. 88, 157–170.

King, A. J., and Parsons, C. H. (1999). Improved auditory spatial acuity in visually deprived ferrets. Eur. J. Neurosci. 1999:821. doi: 10.1046/j.1460-9568.1999.00821.x

Kolarik, A. J., Cirstea, S., and Pardhan, S. (2013). Evidence for enhanced discrimination of virtual auditory distance among blind listeners using level and direct-to-reverberant cues. Exp. Brain Res. 224, 623–633. doi: 10.1007/s00221-012-3340-0

Kosslyn, S. M. (1980). Image and Mind. Cambridge, MA: Harvard University Press. doi: 10.1017/CBO9780511551277

LeBoutillier, N., and Marks, D. F. (2003). Mental imagery and creativity: a meta-analytic review study. Br. J. Psychol. 2003:712603762842084. doi: 10.1348/000712603762842084

Lederman, S. J., and Klatzky, R. L. (1990). Haptic classification of common objects: knowledge-driven exploration. Cogn. Psychol. 1990:90009. doi: 10.1016/0010-0285(90)90009-S

Lehnert, G., and Zimmer, H. D. (2006). Auditory and visual spatial working memory. Mem. Cogn. 34, 1080–1090. doi: 10.3758/BF03193254

Lehnert, G., and Zimmer, H. D. (2008). Modality and domain specific components in auditory and visual working memory tasks. Cogn. Proc. 2008:6. doi: 10.1007/s10339-007-0187-6

Lewald, J. (2002). Vertical sound localization in blind humans. Neuropsychologia 40, 1868–1872. doi: 10.1016/S0028-3932(02)00071-4

Luna, B. (2009). Developmental Changes in Cognitive Control through Adolescence. Adv. Child Dev. Behav. 2009:9. doi: 10.1016/S0065-2407(09)03706-9

Millar, S. (1976). Spatial representation by blind and sighted children. J. Exp. Child Psychol. 1976:6. doi: 10.1016/0022-0965(76)90074-6

Morrongiello, B. A., Humphrey, G. K., Timney, B., Choi, J., and Rocca, P. T. (1994). Tactual object exploration and recognition in blind and sighted children. Perception 1994:230833. doi: 10.1068/p230833

Mou, W., and McNamara, T. P. (2002). Intrinsic frames of reference in spatial memory. J. Exp. Psychol. 28, 162–170. doi: 10.1037/0278-7393.28.1.162

Palmer, S. (2000). Working memory: a developmental study of phonological recoding. Memory 8, 179–193. doi: 10.1080/096582100387597

Pascual-Leone, A., and Hamilton, R. (2001). The metamodal organization of the brain. Prog. Brain Res. 2001:1. doi: 10.1016/S0079-6123(01)34028-1

Pasqualotto, A., Spiller, M. J., Jansari, A. S., and Proulx, M. J. (2013). Visual experience facilitates allocentric spatial representation. Behav. Brain Res. 236, 175–179. doi: 10.1016/j.bbr.2012.08.042

Peterson, M. L., Christou, E., and Rosengren, K. S. (2006). Children achieve adult-like sensory integration during stance at 12-years-old. Gait Post. 2006:003. doi: 10.1016/j.gaitpost.2005.05.003

Pietrini, P., Furey, M. L., Ricciardi, E., Gobbini, M. I., Wu, W. H. C., Cohen, L., et al. (2004). Beyond sensory images: object-based representation in the human ventral pathway. Proc. Natl. Acad. Sci. U S A 2004:400707101. doi: 10.1073/pnas.0400707101

Puspitawati, I., Jebrane, A., and Vinter, A. (2014). Local and Global Processing in Blind and Sighted Children in a Naming and Drawing Task. Child Dev. 2014:12158. doi: 10.1111/cdev.12158

R Core Team (2020). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing.

Rinck, M., and Denis, M. (2004). The metrics of spatial distance traversed during mental imagery. J. Exp. Psychol. 2004:1211. doi: 10.1037/0278-7393.30.6.1211

Roder, B., Teder-Sälejärvi, W., Sterr, A., Rosler, F., Hillyard, S. A., and Neville, H. J. (1999). Improved auditory spatial tuning in blind humans. Nature 400, 162–166. doi: 10.1038/22106

Rossetti, Y., Gaunet, F., and Thinus-Blanc, C. (1996). Early visual experience affects memorization and spatial representation of proprioceptive targets. NeuroReport 1996:25. doi: 10.1097/00001756-199604260-00025

Ruggiero, G., and Iachini, T. (2010). The Role of Vision in the Corsi Block-Tapping Task: Evidence From Blind and Sighted People. Neuropsychology 24, 674–679. doi: 10.1037/a0019594

Ruggiero, G., Ruotolo, F., and Iachini, T. (2012). Egocentric/allocentric and coordinate/categorical haptic encoding in blind people. Cogn. Proc. 2012:6. doi: 10.1007/s10339-012-0504-6

Scheller, M., Proulx, M. J., de Haan, M., Dahlmann-Noor, A., and Petrini, K. (2021). Late- but not early-onset blindness impairs the development of audio-haptic multisensory integration. Dev. Sci. 2021:13001. doi: 10.1111/desc.13001

Setti, W., Cuturi, L. F., Cocchi, E., and Gori, M. (2018). A novel paradigm to study spatial memory skills in blind individuals through the auditory modality. Sci. Rep. 2017, 1–10. doi: 10.1038/s41598-018-31588-y

Setti, W., Cuturi, L. F., Engel, I., Picinali, L., and Gori, M. (2021a). The Influence of Early Visual Deprivation on Audio-Spatial Working Memory. Neuropsychology. 2021:776. doi: 10.1037/neu0000776

Setti, W., Cuturi, L. F., Sandini, G., and Gori, M. (2021b). Changes in audio-spatial working memory abilities during childhood: the role of spatial and phonological development. PLoS One 16:260700. doi: 10.1371/journal.pone.0260700

Setti, W., Cuturi, L. F., Maviglia, A., Sandini, G., and Gori, M. (2019). ARENA: a novel device to evaluate spatial and imagery skills through sounds. Med. Measur. Appl. 2019:8802160. doi: 10.1109/MeMeA.2019.8802160

Sunanto, J., and Nakata, H. (1998). Indirect Tactual Discrimination of Heights by Blind and Blindfolded Sighted Subjects. Percept. Motor Skills 86, 383–386. doi: 10.2466/pms.1998.86.2.383

Thinus-Blanc, C., and Gaunet, F. (1997). Representation of space in blind persons: vision as a spatial sense? Psychol. Bull. 121, 20–42. doi: 10.1037/0033-2909.121.1.20

Vanlierde, A., and Wanet-Defalque, M. C. (2004). Abilities and strategies of blind and sighted subjects in visuo-spatial imagery. Acta Psychol. 116, 205–222. doi: 10.1016/j.actpsy.2004.03.001

Vecchi, T. (1998). Visuo-spatial imagery in congenitally totally blind people. Memory 6, 91–102. doi: 10.1080/741941601

Vecchi, T., Monticelli, M. L., and Cornoldi, C. (1995). Visuo-spatial working memory: structures and variables affecting a capacity measure. Neuropsychologia 33, 1549–1564. doi: 10.1016/0028-3932(95)00080-M

Vecchi, T., Richardson, J. T. E., and Cavallini, E. (2005). Passive storage versus active processing in working memory: evidence from age-related variations in performance. Eur. J. Cogn. Psychol. 17, 521–539. doi: 10.1080/09541440440000140

Vecchi, T., Tinti, C., and Cornoldi, C. (2004). Spatial memory and integration processes in congenital blindness. Neuroreport 15, 2787–2790.

Vercillo, T., Burr, D., and Gori, M. (2016). Early visual deprivation severely compromises the auditory sense of space in congenitally blind children. Dev. Psychol. 52, 847–853. doi: 10.1037/dev0000103

Vingerhoets, G., De Lange, F. P., Vandemaele, P., Deblaere, K., and Achten, E. (2002). Motor imagery in mental rotation: an fMRI study. NeuroImage. 2002:1290. doi: 10.1006/nimg.2002.1290

Voss, P., Lassonde, M., Gougoux, F., Fortin, M., Guillemot, J. P., and Lepore, F. (2004). Early- and late-onset blind individuals show supra-normal auditory abilities in far-space. Curr. Biol. 2004:51. doi: 10.1016/j.cub.2004.09.051

Vuontela, V., Steenari, M., and Koivisto, J. (2003). Audiospatial and Visuospatial Working Memory in 6–13 Year Old School Children. Learn. Memory 10, 74–81. doi: 10.1101/lm.53503

Wheeler, B., and Torchiano, M. (2016). lmPerm: Permutation tests for linear models. R package version 1.1-2. In Cran.

Yates, A. J. (1966). Psychological Deficit. Annu. Rev. Psychol. 1966:551. doi: 10.1146/annurev.ps.17.020166.000551

Zimler, J., and Keenan, J. M. (1983). Imagery in the congenitally blind: how visual are visual images? J. Exp. Psychol. 9, 269–282. doi: 10.1037/0278-7393.9.2.269

Keywords: audio-spatial skills, blindness, development, working memory, user-friendly technologies, acoustic perception

Citation: Setti W, Cuturi LF, Cocchi E and Gori M (2022) Spatial Memory and Blindness: The Role of Visual Loss on the Exploration and Memorization of Spatialized Sounds. Front. Psychol. 13:784188. doi: 10.3389/fpsyg.2022.784188

Received: 27 September 2021; Accepted: 21 April 2022;

Published: 24 May 2022.

Edited by:

Hong Xu, Nanyang Technological University, Singapore

Reviewed by:

Antonio Prieto, National University of Distance Education (UNED), Spain

Nora Turoman, Université de Genève, Switzerland

Copyright © 2022 Setti, Cuturi, Cocchi and Gori. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Walter Setti, walter.setti@iit.it
