Erratum: An other-race effect for configural and featural processing of faces: upper and lower face regions play different roles

- 1Department of Psychology, Zhejiang Sci-Tech University, Hangzhou, China

- 2Department of Psychological and Brain Sciences, University of Delaware, Newark, DE, USA

- 3Department of Psychology, University of Victoria, Victoria, BC, Canada

- 4Dr. Eric Jackman Institute of Child Study, University of Toronto, Toronto, ON, Canada

- 5School of Computer and Information Technology, Beijing Jiaotong University, Beijing, China

- 6Laboratoire de Psychologie et Neurocognition, Université Grenoble Alpes, Grenoble, France

We examined whether Asian individuals would show differential sensitivity to configural vs. featural changes to own- and other-race faces and whether such sensitivity would depend on whether the changes occurred in the upper vs. lower regions of the faces. We systematically varied the size of key facial features (eyes and mouth) of own-race Asian faces and other-race Caucasian faces, and the configuration (spacing) between the eyes and between the nose and mouth of the two types of faces. Results revealed that the other-race effect (ORE) is more pronounced when featural and configural spacing changes are in the upper region than in the lower region of the face. These findings reveal that information from the upper vs. lower region of the face contributes differentially to the ORE in face processing, and that processing of face race is influenced more by information location (i.e., upper vs. lower) than by information type (i.e., configural vs. featural).

Introduction

Observers are generally better at recognizing and discriminating own-race faces than other-race faces (see Meissner and Brigham, 2001; Anzures et al., 2013, for reviews). This phenomenon is called the other-race effect (ORE). In a standard recognition procedure, participants have to discriminate target faces learned in a study phase from distractor faces shown in a test phase. The ORE is reflected by a crossover interaction in discrimination accuracy or response time between the race of participants and the race of stimulus faces (Chance and Goldstein, 1987; Valentine, 1991; Michel et al., 2006b).

A holistic face processing view has been proposed to account for the ORE. This view begins with the idea that a face “schema” forms a holistic representation that selectively integrates face information into a perceived whole (Galton, 1879; Goldstein and Chance, 1980; Sergent, 1984; Tanaka and Farah, 1993). It has been claimed that own-race faces are processed more holistically than other-race faces (see Rossion and Michel, 2011, for a review). The holistic account of the ORE has been assessed using the established measures of holistic processing (Tanaka and Gordon, 2011): the part-whole task, the composite-face task, and the face inversion task. Using the part-whole paradigm (Tanaka and Farah, 1993), Tanaka et al. (2004) reported that Caucasian individuals from a mono-racial background showed a greater benefit of testing face parts in a whole-face context, rather than in isolation, for Caucasian faces than for Asian faces. Michel et al. (2006a) found that both Belgian and Asian participants showed an ORE in an old/new recognition task and greater interference for own-race than other-race faces in the composite-face task. Finally, Hancock and Rhodes (2008) found that, for both Caucasian and Asian participants, inversion disrupted the recognition of own-race faces more severely than the recognition of other-race faces. Taken together, these findings suggest that holistic information plays an important role in the ORE.

What additional facial information is crucial for the ORE? Traditionally, configural information (the metric relations that separate features) as opposed to featural information (the shape and size of the eyes, nose, and mouth) has been thought to play the predominant role in face processing in general and the ORE of face processing in particular. For example, it has been hypothesized that inversion (Yin, 1969) disproportionately disrupts the processing of configural information (Freire et al., 2000; see Maurer et al., 2002, for a review). However, subsequent studies have shown that featural information does not necessarily play a lesser role than configural information in face processing in general (e.g., Taschereau-Dumouchel et al., 2010; Tanaka et al., 2014a) and the ORE in particular. With regard to the ORE, Rhodes et al. (2006) used a sequential matching task and found that Caucasian and Asian participants showed better recognition of both individual features and their spatial relations for upright own-race faces than for upright other-race faces. Likewise, Hayward et al. (2008) and Rhodes et al. (2009) used blurred and scrambled faces in a recognition task to dissociate and manipulate the structure and component information in a face and found that participants performed better for own-race than other-race faces for both the blurred wholes and scrambled components. These findings suggest that the ORE is sensitive to both configural and featural information in a face, rather than selectively relying on only one type of processing.

However, because faces are perceived on multiple featural and spatial dimensions, it is unlikely that own-race faces are processed better than other-race faces on every dimension. One relevant dimension is face location, with multiple lines of evidence suggesting that the upper vs. lower regions of a face are processed differentially. For example, “top-heavy” face-like patterns have been shown to direct preferential looking in newborn infants (Turati et al., 2002). In addition, sensitivity to information around the eyes begins and matures earlier than sensitivity to information around the nose and mouth in infants (Taylor et al., 2001; Liu et al., 2013). Similarly, eyes are better recognized than the nose or mouth in children’s face recognition and discrimination (Goldstein and Mackenberg, 1966; Hay and Cox, 2000; Pellicano and Rhodes, 2003; Pellicano et al., 2006; Ge et al., 2008; Tanaka et al., 2014b). Moreover, eye features are more heavily utilized than nose or mouth features in face recognition by adults (Walker-Smith, 1978; Sergent, 1984; Tanaka and Farah, 1993), and the eye region has been shown to be the most diagnostic region for face identification in a gaze-tracking study (Peterson and Eckstein, 2012) and in image-based computational analyses (Sekuler et al., 2004; Vinette et al., 2004; Keil, 2008). Functional neuroimaging studies with adults have further shown that the right FFA is tuned to process curvilinear symmetrical patterns with high-contrast elements in the upper region (Caldara et al., 2006; Caldara and Seghier, 2009). Clinically, individuals with autism (Wolf et al., 2008) or prosopagnosia (Caldara et al., 2005; Bukach et al., 2008; Rossion et al., 2009), who show specific face recognition deficits, retain the ability to detect changes in the mouth region but are impaired at detecting changes in the eye region.

To measure sensitivity to configural and featural changes in own- and other-race faces, we used the Face Dimensions Test designed by Bukach et al. (2008) and Tanaka et al. (2009). This test has been used previously to document face processing performance with behavioral and eye tracking measures in healthy children and adults (Xu and Tanaka, 2013; Tanaka et al., 2014a,b), individuals with autism (Wolf et al., 2008), prosopagnosic patients (Bukach et al., 2008; Rossion et al., 2009), and infants (Quinn and Tanaka, 2009; Quinn et al., 2013).

In the current study, participants were asked to detect changes in own- and other-race faces that differed in: (1) eye spacing (distance between the eyes), (2) mouth spacing (distance between nose and mouth), (3) eye feature (size of the eyes), or (4) mouth feature (size of the mouth), at easy, moderate, and difficult discrimination levels (Tanaka and Gordon, 2011). Half of the faces were own-race faces and half were other-race. In this way, we aimed to determine how the configural and featural aspects of the upper and lower regions contribute to the ORE. In particular, we sought to answer the following questions: (1) What types of information contribute to the ORE: configural (spacing), featural (size), or both? and (2) Which regions in the face give rise to an ORE: upper, lower, or both?

Expectations for performance can be derived from three different views of face processing. By the configural view (i.e., configural information in a face plays the predominant role in generating the ORE), participants should show an ORE in conditions where the spacing between the two eyes or between the nose and mouth is manipulated, but not in conditions where the size of the eyes or mouth is manipulated. Alternatively, by the configural + featural view (i.e., configural and featural information are equally important to the ORE), participants should show an ORE in both the spacing and size conditions, regardless of whether information is manipulated in the upper or lower regions. Finally, if the upper and lower regions of a face make different contributions to the ORE (i.e., changes in the upper region are more important than changes in the lower region in producing the ORE), participants should show a significantly larger ORE, for both spacing and size changes, in the upper face region than in the lower face region.

Experiment 1: Detection of Spacing Differences

Method

Participants

Nineteen Chinese college students (seven females) with normal or corrected-to-normal vision served as participants (mean age = 21.1 years, SD = 2.2, age range: 18–25). The students had no direct contact with any foreign individuals. The Institutional Review Board of Zhejiang Sci-Tech University approved the experiment.

Materials

Eight Asian and eight Caucasian male face photos were selected from 36 Asian and 36 Caucasian male faces by a typicality-rating task (with a 7-point Likert scale). The mean (and SD) typicality ratings of the selected eight Asian and Caucasian faces were 5.29 (0.17) and 5.17 (0.19), respectively. There was no jewelry, glasses, or makeup on these faces. Facial markings, if any, were removed. We transformed these photographs into gray-scale faces (approximately 250 pixels in width and 320 pixels in height). Each face subtended a visual angle of approximately 7.9° × 9.5° on the PC screen.

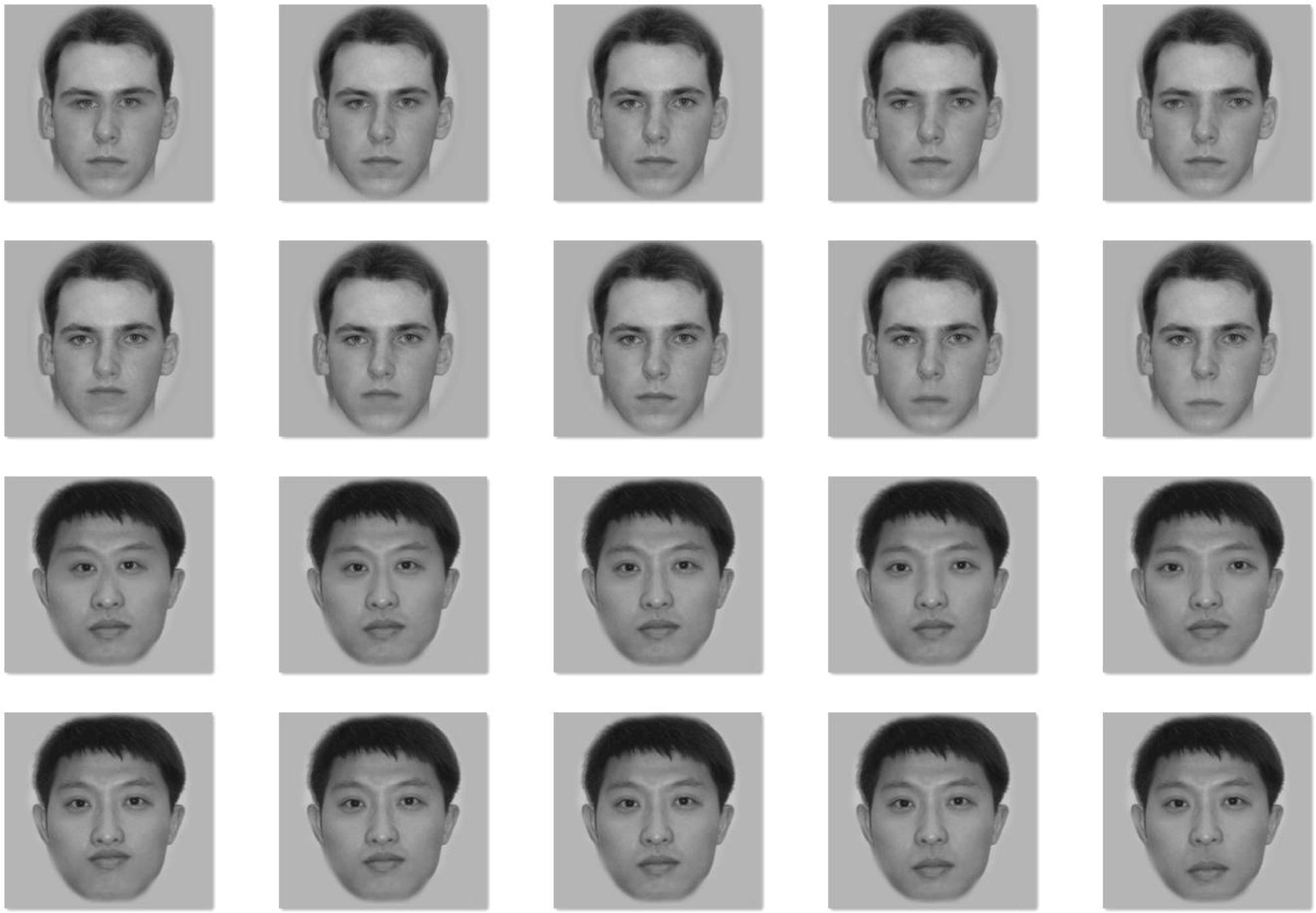

The mean distances (and SDs) between the eyes of the original Caucasian and Asian faces were 85 (3.4) and 87 (2.1) pixels, respectively, without significant difference between the two races, t(14) = 1.59, p = 0.133. The mean distances (and SDs) between the nose and mouth of the original Caucasian and Asian faces were 47 (4.0) pixels and 49 (5.0) pixels, respectively, without significant difference between the two races, t(14) = 0.88, p = 0.393. Using the same manipulations as those in the Face Dimensions Test, we created eight different versions of each face by changing the amount of spacing between the eyes in the upper region (i.e., moving the two eyes 5 or 10 pixels closer or farther away from each other) and between the nose and mouth in the lower region (i.e., moving the mouth closer or farther away by 5 or 10 pixels from the nose) of the face. Figure 1 shows example variants for an Asian and Caucasian male face.
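The eight spacing variants per face described above can be enumerated in a minimal sketch. The function name `spacing_variants`, the region labels, and the dictionary keys are illustrative assumptions, not part of the Face Dimensions Test, and the image editing itself is not shown.

```python
from itertools import product

# Pixel shifts described in the text: negative = closer together,
# positive = farther apart.
SHIFTS_PX = (-10, -5, 5, 10)

# Hypothetical labels for the two manipulated distances.
REGIONS = ("eyes", "nose_mouth")


def spacing_variants(face_id):
    """List the eight spacing variants for one original face."""
    return [
        {"face": face_id, "region": region, "shift_px": shift}
        for region, shift in product(REGIONS, SHIFTS_PX)
    ]


if __name__ == "__main__":
    for variant in spacing_variants("asian_01"):
        print(variant)
```

Four variants change the inter-eye distance and four change the nose-mouth distance, giving the eight versions per face stated in the text.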

FIGURE 1. Examples of spacing change between the two eyes (Rows 1 and 3) or between the nose and mouth (Rows 2 and 4) in a Caucasian face (Rows 1 and 2) and an Asian face (Rows 3 and 4). The middle face of each row is the original face upon which the manipulations were made. The leftmost face has the distance between eyes or between nose and mouth 10 pixels smaller than the original (the middle face), the face located second from the left has the distance between eyes or between nose and mouth 5 pixels smaller than the original, the rightmost face has the distance between eyes or between nose and mouth 10 pixels larger than the original, and the face located second from the right has the distance between eyes or between nose and mouth 5 pixels larger than the original.

Procedure and Design

Participants were asked to judge whether the two faces were the ‘same’ or ‘different’ with the instruction that a ‘same’ response indicates that the faces were judged to be physically identical. The same pairs presented two identical faces. The different pairs included three levels of difficulty in detecting the change: Easy, Medium, and Hard, with a 15-, 10-, or 5-pixel spacing difference, respectively, in the upper region (between the two eyes) or in the lower region (between the nose and mouth) of the two faces. On each trial, following a 150 ms fixation cross located in the screen center, a pair of photos was presented, side-by-side, with a time limit of 3,000 ms or until the participant pressed one of two keys responding ‘same’ or ‘different’, and this was followed by a 200 ms blank screen.

A full 2 (Race: Own or Other) × 2 (Region: Upper or Lower) × 3 (Level: Easy, Medium, or Hard) within-subject design was used. For each of the three levels of “different” manipulations in both the upper and lower regions, there were 40 trials presented (the 10 Asian and 10 Caucasian faces were both used twice). In total, 240 “different” pairs (as experimental trials) and 240 “same” pairs (as control trials) were mixed and presented randomly.
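The trial counts above can be checked with a short sketch that builds the trial list. It follows the counts given in this paragraph (10 faces per race, each used twice per region and level). How the 240 "same" trials were divided among faces is not stated, so the even split used here (`SAME_REPEATS = 12`) is an assumption, as are all names in the code.

```python
import random
from itertools import product

RACES = ("own", "other")
REGIONS = ("upper", "lower")
LEVELS = ("easy", "medium", "hard")
FACES_PER_RACE = 10   # count given in the Procedure and Design paragraph
DIFF_REPEATS = 2      # each face used twice per region x level cell
SAME_REPEATS = 12     # assumption: 240 "same" trials spread evenly over faces


def build_trials(seed=0):
    """Return a shuffled list of 240 'different' and 240 'same' trials."""
    different = [
        {"pair": "different", "race": race, "region": region,
         "level": level, "face": face, "rep": rep}
        for race, region, level in product(RACES, REGIONS, LEVELS)
        for face in range(FACES_PER_RACE)
        for rep in range(DIFF_REPEATS)
    ]
    same = [
        {"pair": "same", "race": race, "face": face, "rep": rep}
        for race in RACES
        for face in range(FACES_PER_RACE)
        for rep in range(SAME_REPEATS)
    ]
    trials = different + same
    random.Random(seed).shuffle(trials)  # mixed and presented in random order
    return trials


if __name__ == "__main__":
    trials = build_trials()
    print(len(trials), "trials in total")
```

Each Region × Level cell contains 2 races × 10 faces × 2 repetitions = 40 "different" trials, and the six cells sum to 240.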

Results and Discussion

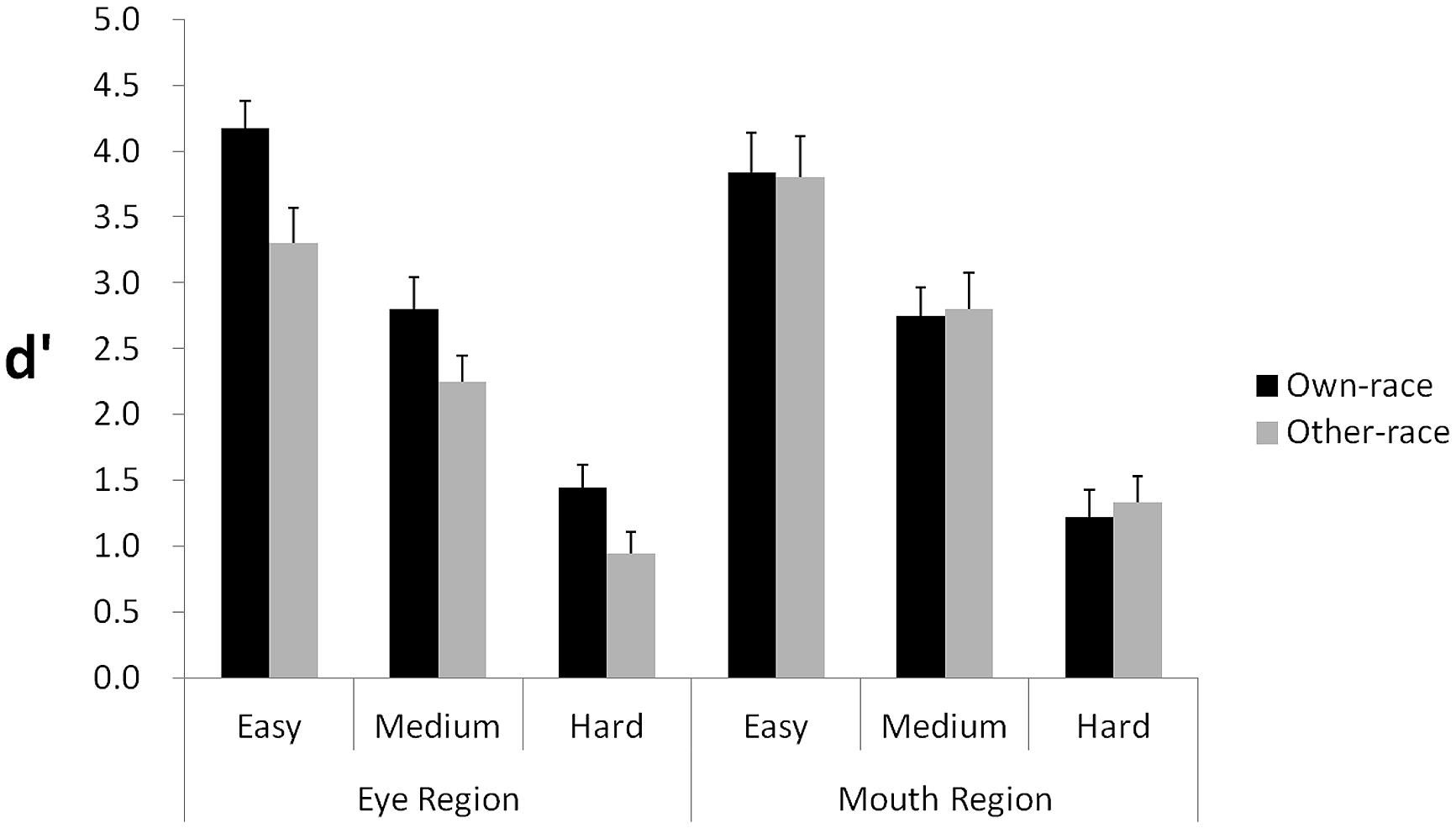

Treating “different” responses to different pairs as Hits (H) and “different” responses to same pairs as False Alarms (FA), d′ scores were calculated as the dependent measure from the equations $H = P(\text{``different''} \mid \text{Different}) = \Phi[(-k + d')/\sqrt{2}] + \Phi[(-k - d')/\sqrt{2}]$ and $FA = P(\text{``different''} \mid \text{Same}) = 2\Phi(-k/\sqrt{2})$ (Macmillan and Creelman, 2005, p. 197). Results are shown in Figure 2.
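As an illustration of how d′ can be recovered from these two equations, the sketch below first solves the false-alarm equation for the criterion k and then finds d′ numerically by bisection. This is a minimal sketch, not the authors' analysis code: the function names, the bisection bounds, and the handling of rates of 0 or 1 (rejected here, since the paper does not describe a correction) are assumptions.

```python
from math import sqrt
from statistics import NormalDist

_PHI = NormalDist()  # standard normal distribution


def hit_rate(d_prime, k):
    """H = Phi[(-k + d')/sqrt(2)] + Phi[(-k - d')/sqrt(2)]."""
    return (_PHI.cdf((-k + d_prime) / sqrt(2))
            + _PHI.cdf((-k - d_prime) / sqrt(2)))


def d_prime_differencing(hit, fa, tol=1e-6):
    """Estimate d' for a same-different task from hit and false-alarm rates."""
    if not (0.0 < hit < 1.0 and 0.0 < fa < 1.0):
        raise ValueError("rates must lie strictly between 0 and 1")
    if hit <= fa:
        return 0.0  # no measurable sensitivity
    # FA = 2 * Phi(-k / sqrt(2))  =>  k = -sqrt(2) * Phi^{-1}(FA / 2)
    k = -sqrt(2) * _PHI.inv_cdf(fa / 2)
    lo, hi = 0.0, 10.0  # assumed search range for d'
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if hit_rate(mid, k) < hit:  # H increases with d' for d' >= 0
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    print(d_prime_differencing(hit=0.80, fa=0.20))
```

Bisection is used because H has no closed-form inverse in d′; any one-dimensional root finder would serve equally well.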

FIGURE 2. Experiment 1: manipulating the spacing distance of facial features. The graph shows d′ scores for detecting spacing differences across three levels of change (easy, medium, hard) in the eye and mouth regions, respectively. Error bars represent SEs in each condition.

A 2 (Race: Own, Other) × 2 (Region: Upper, Lower) × 3 (Level: Easy, Medium, Hard) repeated-measures analysis of variance (ANOVA) was performed, with all three independent variables as within-subjects factors. The main effect of Race was significant, F(1,18) = 8.16, p = 0.010, ηp² = 0.312, demonstrating that discrimination performance was better for own-race faces than for other-race faces. The main effect of Level was also significant, F(2,36) = 192.98, p < 0.001, ηp² = 0.915, indicating that performance varied according to the three levels of discrimination. The main effect of Region was not significant, F(1,18) = 0.39, p = 0.541, showing that discriminations in the upper face region did not differ from discriminations in the lower face region. Crucially, we found a significant interaction between Race and Region, F(1,18) = 9.33, p = 0.007, ηp² = 0.341. No other two-way interactions were significant. The three-way interaction of Race, Region, and Level was not significant, F(2,36) = 0.20, p = 0.823.
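The effect sizes reported alongside each F statistic are consistent with partial eta squared, which can be recovered from F and its degrees of freedom as F × df_effect / (F × df_effect + df_error). The short sketch below applies this standard identity to two of the values above; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # Main effect of Race: F(1,18) = 8.16
    print(round(partial_eta_squared(8.16, 1, 18), 3))
    # Main effect of Level: F(2,36) = 192.98
    print(round(partial_eta_squared(192.98, 2, 36), 3))
```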

To explore the significant two-way interaction between Race and Region, we performed ANOVAs on the data for the upper and lower regions separately. For the upper region, a 2 (Race: Own vs. Other) × 3 (Level: Easy, Medium, Hard) repeated-measures ANOVA was performed. The main effect of Race was significant, F(1,18) = 32.24, p < 0.001, ηp² = 0.642, indicating that participant response accuracy was higher for own-race faces than for other-race faces, thereby providing evidence of an ORE in the upper region. The main effect of Level was significant, F(2,36) = 108.48, p < 0.001, ηp² = 0.858, indicating that the larger the spacing difference between two faces, the better participants differentiated them. The interaction of Race and Level was not significant, F(2,36) = 1.25, p > 0.29, indicating that the magnitude of the ORE in the upper region of the face did not change reliably with the magnitude of the spacing difference.

For the lower region, a 2 (Race: Own vs. Other) × 3 (Level: Easy, Medium, Hard) repeated-measures ANOVA was performed. The main effect of Race was not significant, F(1,18) = 0.053, p > 0.82, indicating that participant response accuracy did not differ for own- versus other-race faces, thereby providing no evidence of an ORE. However, the main effect of Level was significant, F(2,36) = 138.44, p < 0.001, ηp² = 0.885, indicating that the larger the spacing difference between two faces, the better participants differentiated them. The interaction of Race and Level was not significant, F(2,36) = 0.096, p > 0.90.

Collectively, the findings indicate that the ORE in response accuracy was manifested in sensitivity to spacing change in the upper region; however, spacing difference in the lower region did not produce an ORE.

Experiment 2: Detection of Feature Size Differences

Experiment 1 showed that spacing change in the upper face, but not in the lower face, influenced the ORE. However, it is still unclear whether this location effect would also be observed for featural (i.e., size) changes in the face. Experiment 2 examined this issue by manipulating eye and mouth size on multiple levels and comparing participant performance for detecting the difference between own-race faces and other-race faces.

Method

Participants

Nineteen Chinese college students (14 females) with normal or corrected-to-normal vision served as participants (mean age = 20.32 years, SD = 1.87, age range: 18–24). The students had no direct contact with any foreign individuals. The Institutional Review Board of Zhejiang Sci-Tech University approved the experiment.

Materials

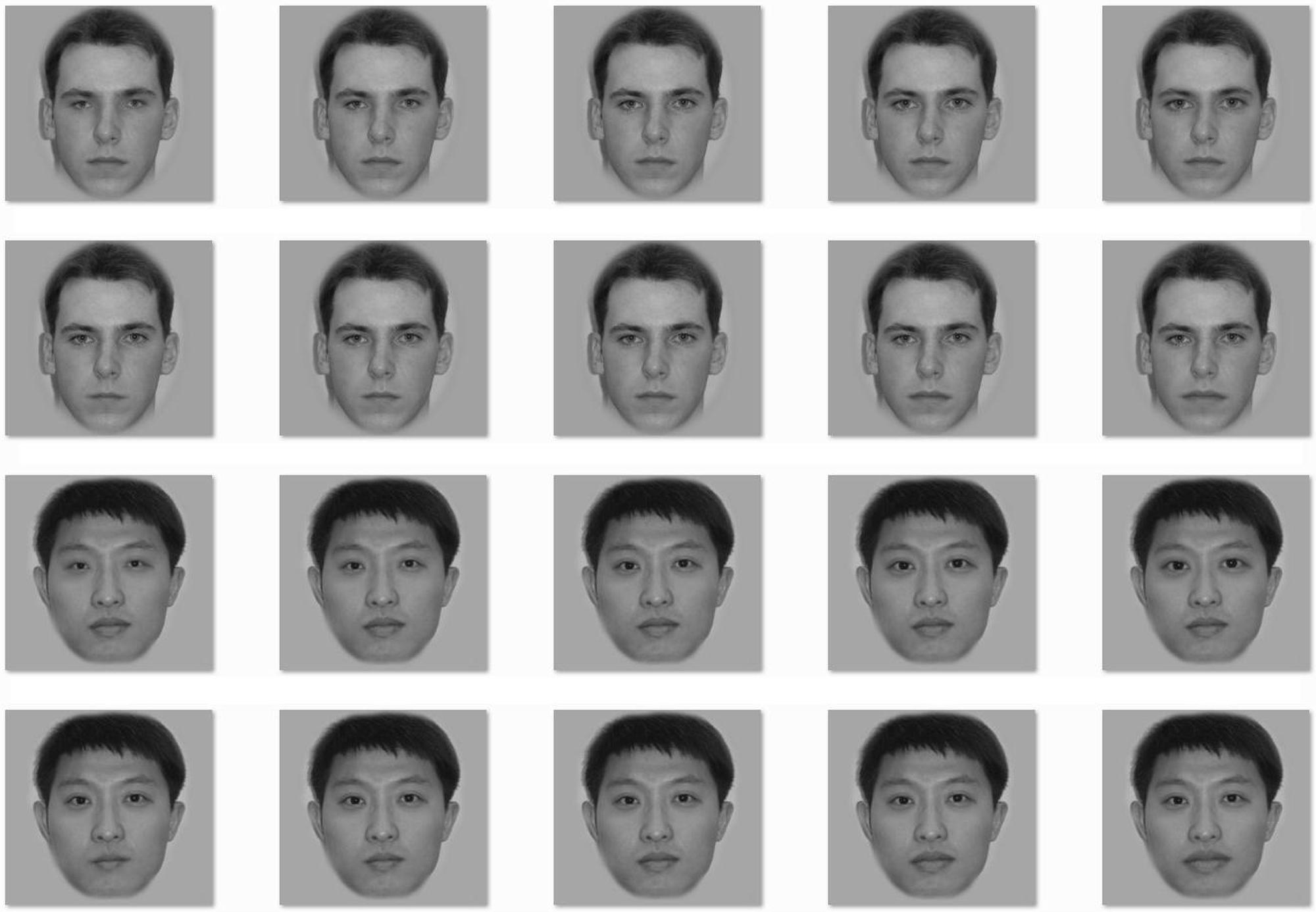

Stimuli were created in the same fashion as in Experiment 1. However, the manipulation was of size instead of spacing (i.e., increasing or decreasing the size of the eyes or mouth by 10 or 20%). The means (and SDs) of eye size (width) of the original Caucasian and Asian faces were 39 (3.2) and 38 (2.8) pixels, respectively, without significant difference between the two races, t(14) = 1.17, p = 0.262. The means (and SDs) of mouth size (width) of the original Caucasian and Asian faces were 69 (6.9) pixels and 70 (6.6) pixels, respectively, without significant difference between the two races, t(14) = –0.30, p = 0.771. Figure 3 shows example variants for an Asian and Caucasian male face.

FIGURE 3. Examples of size change of the two eyes (Rows 1 and 3) or the mouth (Rows 2 and 4) in a Caucasian face (Rows 1 and 2) and an Asian face (Rows 3 and 4). The middle face of each row is the original face upon which the manipulations were made. The leftmost face has eyes or mouth 20% smaller than the original (the middle face), the face located second from the left has eyes or mouth 10% smaller than the original, the rightmost face has eyes or mouth 20% larger than the original, and the face located second from the right has eyes or mouth 10% larger than the original.

Procedure and Design

The procedure and design were the same as in Experiment 1.

Results and Discussion

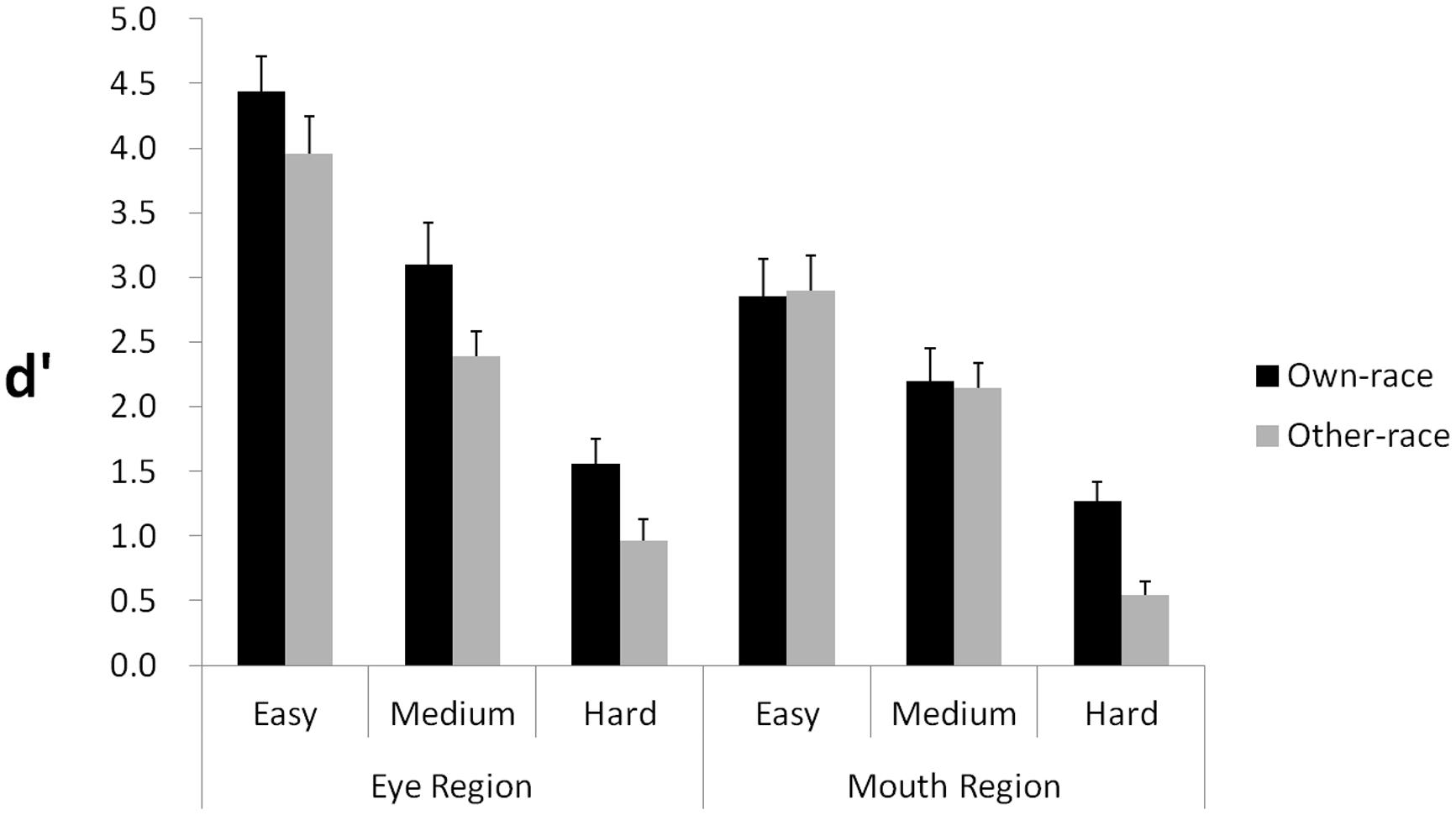

Treating “different” responses to different pairs as Hits (H) and “different” responses to same pairs as False Alarms (FA), d′ scores were calculated as the dependent measure from the equations $H = P(\text{``different''} \mid \text{Different}) = \Phi[(-k + d')/\sqrt{2}] + \Phi[(-k - d')/\sqrt{2}]$ and $FA = P(\text{``different''} \mid \text{Same}) = 2\Phi(-k/\sqrt{2})$ (Macmillan and Creelman, 2005, p. 197). Results are shown in Figure 4.

FIGURE 4. Experiment 2: manipulating the size of facial features. The graph shows d′ scores for detecting size differences across three levels of change (easy, medium, hard) in the eye and mouth regions, respectively. Error bars represent SEs in each condition.

A 2 (Race: Own, Other) × 2 (Region: Upper, Lower) × 3 (Level: Easy, Medium, Hard) repeated-measures ANOVA was performed, with all three independent variables as within-subjects factors. The main effect of Race was significant, F(1,18) = 44.60, p < 0.0001, ηp² = 0.712, demonstrating an advantage for discriminating own-race size change over other-race size change. The main effect of Region was also significant, F(1,18) = 14.60, p = 0.001, ηp² = 0.448, showing that discriminating size change in the upper region was easier than in the lower region. The main effect of Level was additionally significant, F(2,36) = 144.60, p < 0.001, ηp² = 0.889, indicating that performance varied according to the three levels of discrimination.

With respect to interactions, Region interacted with Level, F(2,36) = 9.42, p = 0.001, ηp² = 0.344, showing that the effect of discrimination level on detecting size differences depended on the region of the face. Race did not interact with Level, F(2,36) = 2.40, p = 0.105. The interaction between Race and Region was marginally significant, F(1,18) = 4.24, p = 0.054, ηp² = 0.191. The three-way interaction of Race, Region, and Level was significant, F(2,36) = 3.91, p = 0.029, ηp² = 0.178.

To explore the two-way and three-way interactions further, we also performed ANOVAs on the data for the two regions separately. For the upper region, a 2 (Race: Own vs. Other) × 3 (Level: Easy, Medium, Hard) repeated-measures ANOVA was performed. The main effect of Race was significant, F(1,18) = 25.06, p < 0.001, ηp² = 0.58, indicating that participants performed better for own-race faces than for other-race faces, thereby providing evidence that eye size differences contribute to the ORE. The main effect of Level was also significant, F(2,36) = 121.44, p < 0.001, ηp² = 0.87, indicating that the larger the eye size difference between two faces, the better participants differentiated them. The interaction of Race and Level was not significant, F(2,36) = 0.29, p > 0.75.

For the lower region, a 2 (Race: Own vs. Other) × 3 (Level: Easy, Medium, Hard) repeated-measures ANOVA was performed. The main effect of Level was significant, F(2,36) = 59.46, p < 0.001, ηp² = 0.768, indicating that the larger the mouth size difference between two faces, the better participants differentiated them. The main effect of Race was significant, F(1,18) = 7.36, p = 0.014. The interaction of Race and Level was also significant, F(2,36) = 8.41, p < 0.001, ηp² = 0.32, showing that the main effect of Race was due to an own-race advantage observed at the level of small mouth size change.

Collectively, the findings indicate that the ORE was manifested in sensitivity to size change, but was stronger and more stable for changes in the upper region relative to changes in the lower region. In the lower region of a face, participants showed an inconsistent ORE at different levels of mouth size change.

General Discussion

In the current study, we examined whether participants are differentially sensitive to configural vs. featural changes or differentially sensitive to such changes in the upper vs. lower regions for own- and other-race faces. First, we found that in the upper region, both configural and featural changes in own-race faces were differentiated significantly better than in other-race faces, across multiple change levels. Second, in the lower region, we found that the featural changes resulted in an inconsistent race difference in participant performance, and the configural changes did not result in any ORE. Taken together, these findings provide direct evidence that individuals are selectively more sensitive to changes around the eye region on own-race faces than on other-race faces.

Our findings show that the ORE is region-selective in a face discrimination task. Both types of information (i.e., size and spacing) contribute to the ORE, but their effects are localized primarily in the upper region rather than the lower region of a face. These findings demonstrate that the ORE in face information processing is more influenced by the location of the featural and configural changes than by the type of processing (i.e., featural vs. configural). Extending previous findings showing that participants performed better for blurred or scrambled own-race faces than other-race faces (e.g., Schwaninger et al., 2002; Rhodes et al., 2006; Hayward et al., 2008), the current study indicates that the own-race advantage is due to superior processing of featural and configural information in the upper region of the face. In this way, the region-dependency of face processing noted for face perception (e.g., Turati et al., 2002; Peterson and Eckstein, 2012; Quinn et al., 2013; Tanaka et al., 2014b) and face perception deficits (e.g., Caldara et al., 2005; Bukach et al., 2008; Wolf et al., 2008) has been extended to explain the difference between own- and other-race face processing, suggesting that the location of information in a face should be considered as a key factor in investigating and theorizing about face perception and face processing expertise.

Analogously, judgments of featural and configural variations are similarly affected by the face inversion effect, once processing difficulty or participant expectations for variation-change are equated for the two types of information (Riesenhuber et al., 2004; Sekuler et al., 2004; Yovel and Kanwisher, 2005; McKone and Yovel, 2009). Particularly, Tanaka et al. (2014a) demonstrated that both featural and configural information processing in the eye region were preserved, but both featural and configural information processing were impaired in the mouth region when participants observed an inverted face. To understand these findings and our current results in an integrated way, we would suggest that participants have an expertise area (i.e., the eye region), where the processing resolution is higher for (1) information inside relative to information outside, so that eye region processing is preserved, but mouth region processing is impaired during face inversion, and (2) own-race face processing relative to other-race face processing, so that an own-race advantage across information type (i.e., both configural and featural) is shown consistently in the eye region, but not in the mouth region.

It is still an open question as to whether the ORE is sensitive to changes around the nose, relative to changes around the mouth and eyes. Future studies could manipulate not only the eyes and mouth, but also the nose as an isolated feature. We would speculate that the nose area plays a different role in Caucasian versus Asian participants when they respond to Caucasian and Asian faces. This speculation is based on recent eye-tracking data showing that Asian participants spend a significantly greater proportion of fixation time on the nose of Asian faces than on the nose of Caucasian faces (e.g., Blais et al., 2008; Caldara et al., 2010; Kelly et al., 2010; Fu et al., 2012). Also, since the current study tested only Asian participants, it will become important to test both Asian and Caucasian participants to firmly rule out the influence of low-level visual properties of the stimuli (Vizioli et al., 2010a,b) and determine whether the effect of location may extend across multiple races of perceivers. Given that the effects of spacing and size on the key facial features were investigated separately in the current study, the question of whether these effects might interact also remains open. Future studies might therefore vary spacing and size at the same time to test the effect of the interaction between these two factors.

In summary, we examined the sensitivity of observers to configural and featural changes in the upper and lower regions of own- and other-race faces. Our data reveal that the ORE is more pronounced when featural and configural changes are in the upper region than in the lower region. These findings indicate that information from the upper vs. lower region of the face contributes differentially to the ORE in face processing, and that processing of face race is influenced more by information location (i.e., upper vs. lower) than by information type (i.e., configural vs. featural).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was supported by grants from the Natural Science and Engineering Research Council of Canada, National Institutes of Health (R01 HD046526), National Science Foundation of China (31100737 and 31371032), Zhejiang Provincial Natural Science Foundation of China (LY13C090005) and the Research Foundation of Educational Department of Zhejiang Province of China (No. Z201122112).

References

Anzures, G., Quinn, P. C., Pascalis, O., Slater, A. M., Tanaka, J. W., and Lee, K. (2013). Developmental origins of the other-race effect. Curr. Dir. Psychol. Sci. 22, 173–178. doi: 10.1177/0963721412474459


Blais, C., Jack, R. E., Scheepers, C., Fiset, D., and Caldara, R. (2008). Culture shapes how we look at faces. PLoS ONE 3:e3022. doi: 10.1371/journal.pone.0003022


Bukach, C. M., Le Grand, R., Kaiser, M. D., Bub, D. N., and Tanaka, J. W. (2008). Preservation of mouth region processing in two cases of prosopagnosia. J. Neuropsychol. 2, 227–244. doi: 10.1348/174866407X231010


Caldara, R., Schyns, P., Mayer, E., Smith, M. L., Gosselin, F., and Rossion, B. (2005). Does prosopagnosia take the eyes out of face representations? Evidence for a defect in representing diagnostic facial information following brain damage. J. Cogn. Neurosci. 17, 1652–1666. doi: 10.1162/089892905774597254


Caldara, R., and Seghier, M. L. (2009). The fusiform face area responds automatically to statistical regularities optimal for face categorization. Hum. Brain Mapp. 30, 1615–1625. doi: 10.1002/hbm.20626


Caldara, R., Seghier, M. L., Rossion, B., Lazeyras, F., Michel, C., and Hauert, C. A. (2006). The fusiform face area is tuned for curvilinear patterns with more high-contrasted elements in the upper part. NeuroImage 31, 313–319. doi: 10.1016/j.neuroimage.2005.12.011


Caldara, R., Zhou, X., and Miellet, S. (2010). Putting culture under the “spotlight” reveals universal information use for face recognition. PLoS ONE 5:e9708. doi: 10.1371/journal.pone.0009708


Chance, J. E., and Goldstein, A. G. (1987). Retention interval and face recognition: response latency measures. Bull. Psychon. Soc. 25, 415–418. doi: 10.3758/BF03334728

Freire, A., Lee, K., and Symons, L. A. (2000). The face-inversion effect as a deficit in the encoding of configural information: direct evidence. Perception 29, 159–170. doi: 10.1068/p3012


Fu, G., Hu, C. S., Wang, Q., Quinn, P. C., and Lee, K. (2012). Adults scan own- and other-race faces differently. PLoS ONE 7:e37688. doi: 10.1371/journal.pone.0037688


Galton, F. (1879). Composite portraits made by combining those of many different persons into a single resultant figure. J. Anthropol. Inst. Great Britain Irel. 8, 132–144. doi: 10.2307/2841021

Ge, L., Anzures, G., Wang, Z., Kelly, D. J., Pascalis, O., Quinn, P. C., et al. (2008). An inner face advantage in children’s recognition of familiar peers. J. Exp. Child Psychol. 101, 124–136. doi: 10.1016/j.jecp.2008.05.006


Goldstein, A. G., and Chance, J. E. (1980). Memory for faces and schema theory. J. Psychol. 104, 47–59. doi: 10.1080/00223980.1980.9915131

Goldstein, A. G., and Mackenberg, E. J. (1966). Recognition of human faces from isolated facial features: a developmental study. Psychon. Sci. 6, 149–150. doi: 10.3758/BF03328001

Hancock, K. J., and Rhodes, G. (2008). Contact, configural coding and the other-race effect in face recognition. Br. J. Psychol. 99, 45–56. doi: 10.1348/000712607X199981

Hay, D. C., and Cox, R. (2000). Developmental changes in the recognition of faces and facial features. Infant Child Dev. 9, 199–212. doi: 10.1002/1522-7219(200012)9:4<199::AID-ICD231>3.0.CO;2-K

Hayward, W. G., Rhodes, G., and Schwaninger, A. (2008). An own-race advantage for components as well as configurations in face recognition. Cognition 106, 1017–1027. doi: 10.1016/j.cognition.2007.04.002

Keil, M. S. (2008). Does face image statistics predict a preferred spatial frequency for human face processing? Proc. R. Soc. B Biol. Sci. 275, 2095–2100. doi: 10.1098/rspb.2008.0486

Kelly, D. J., Miellet, S., and Caldara, R. (2010). Culture shapes eye movements for visually homogeneous objects. Front. Psychol. 1:6. doi: 10.3389/fpsyg.2010.00006

Liu, S., Anzures, G., Ge, L., Quinn, P. C., Pascalis, O., Slater, A. M., et al. (2013). Development of recognition of face parts from unfamiliar faces. Infant Child Dev. 22, 165–179. doi: 10.1002/icd.1781

Macmillan, N. A., and Creelman, C. D. (2005). Detection Theory: A User’s Guide, 2nd Edn. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

Maurer, D., Le Grand, R., and Mondloch, C. J. (2002). The many faces of configural processing. Trends Cogn. Sci. 6, 255–260. doi: 10.1016/S1364-6613(02)01903-4

McKone, E., and Yovel, G. (2009). Why does picture-plane inversion sometimes dissociate perception of features and spacing in faces, and sometimes not? Toward a new theory of holistic processing. Psychon. Bull. Rev. 16, 778–797. doi: 10.3758/PBR.16.5.778

Meissner, C. A., and Brigham, J. C. (2001). Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychol. Public Policy Law 7, 3–35. doi: 10.1037//1076-8971.7.1.3

Michel, C., Caldara, R., and Rossion, B. (2006a). Same-race faces are perceived more holistically than other-race faces. Vis. Cogn. 14, 55–73. doi: 10.1080/13506280500158761

Michel, C., Rossion, B., Han, J., Chung, C.-S., and Caldara, R. (2006b). Holistic processing is finely tuned for faces of one’s own race. Psychol. Sci. 17, 608–615. doi: 10.1111/j.1467-9280.2006.01752.x

Pellicano, E., and Rhodes, G. (2003). Holistic processing of faces in preschool children and adults. Psychol. Sci. 14, 618–622. doi: 10.1046/j.0956-7976.2003.psci_1474.x

Pellicano, E., Rhodes, G., and Peters, M. (2006). Are preschoolers sensitive to configural information in faces? Dev. Sci. 9, 270–277. doi: 10.1111/j.1467-7687.2006.00489.x

Peterson, M. F., and Eckstein, M. P. (2012). Looking just below the eyes is optimal across face recognition tasks. Proc. Natl. Acad. Sci. U.S.A. 109, E3314–E3323. doi: 10.1073/pnas.1214269109

Quinn, P. C., and Tanaka, J. W. (2009). Infants’ processing of featural and configural information in the upper and lower halves of the face. Infancy 14, 474–487. doi: 10.1080/15250000902994248

Quinn, P. C., Tanaka, J. W., Lee, K., Pascalis, O., and Slater, A. M. (2013). Are faces special to infants? An investigation of configural and featural processing for the upper and lower regions of houses in 3- to 7-month-olds. Vis. Cogn. 21, 23–37. doi: 10.1080/13506285.2013.764370

Rhodes, G., Ewing, L., Hayward, W. G., Maurer, D., Mondloch, C. J., and Tanaka, J. W. (2009). Contact and other-race effects in configural and component processing of faces. Br. J. Psychol. 100, 717–728. doi: 10.1348/000712608X396503

Rhodes, G., Hayward, W., and Winkler, C. (2006). Expert face coding: configural and component coding of own-race and other-race faces. Psychon. Bull. Rev. 13, 499–505. doi: 10.3758/BF03193876

Riesenhuber, M., Jarudi, I., Gilad, S., and Sinha, P. (2004). Face processing in humans is compatible with a simple shape-based model of vision. Proc. R. Soc. B Biol. Sci. 271, S448–S450. doi: 10.1098/rsbl.2004.0216

Rossion, B., Kaiser, M. D., Bub, D., and Tanaka, J. W. (2009). Is the loss of diagnosticity of the eye region of the face a common aspect of acquired prosopagnosia? J. Neuropsychol. 3, 69–78. doi: 10.1348/174866408X289944

Rossion, B., and Michel, C. (2011). “An experience-based holistic account of the other-race face effect,” in Oxford Handbook of Face Perception, eds G. Rhodes, A. Calder, M. Johnson, and J. V. Haxby (Oxford: Oxford University Press), 215–244.

Schwaninger, A., Lobmaier, J., and Collishaw, S. M. (2002). Role of featural and configural information in familiar and unfamiliar face recognition. Lecture Notes Comput. Sci. 2525, 643–650. doi: 10.1007/3-540-36181-2_64

Sekuler, A. B., Gaspar, C. M., Gold, J. M., and Bennett, P. J. (2004). Inversion leads to quantitative, not qualitative, changes in face processing. Curr. Biol. 14, 391–396. doi: 10.1016/j.cub.2004.02.028

Sergent, J. (1984). An investigation into component and configural processes underlying face perception. Br. J. Psychol. 75, 221–242. doi: 10.1111/j.2044-8295.1984.tb01895.x

Tanaka, J. W., and Farah, M. J. (1993). Parts and wholes in face recognition. Q. J. Exp. Psychol. A 46, 225–245. doi: 10.1080/14640749308401045

Tanaka, J. W., and Gordon, I. (2011). “Features, configuration, and holistic face processing,” in Oxford Handbook of Face Perception, eds G. Rhodes, A. Calder, M. Johnson, and J. V. Haxby (Oxford: Oxford University Press), 177–194.

Tanaka, J. W., Kaiser, M. D., Hagen, S., and Pierce, L. J. (2014a). Losing face: impaired discrimination of featural and configural information in the mouth region of an inverted face. Atten. Percept. Psychophys. 76, 1000–1014. doi: 10.3758/s13414-014-0628-0

Tanaka, J. W., Quinn, P. C., Xu, B., Maynard, K., Huxtable, N., Lee, K., et al. (2014b). The effects of information type (features vs. configuration) and location (eyes vs. mouth) on the development of face perception. J. Exp. Child Psychol. 124, 36–49. doi: 10.1016/j.jecp.2014.01.001

Tanaka, J. W., Kaiser, M., Bub, D., and Pierce, L. (2009). Generalized impairment of featural and configural information in the lower region of the face through inversion. J. Vis. 9, 531. doi: 10.1167/9.8.531

Tanaka, J. W., Kiefer, M., and Bukach, C. M. (2004). A holistic account of the own-race effect in face recognition: evidence from a cross-cultural study. Cognition 93, B1–B9. doi: 10.1016/j.cognition.2003.09.011

Taschereau-Dumouchel, V., Rossion, B., Schyns, P. G., and Gosselin, F. (2010). Interattribute distances do not represent the identity of real world faces. Front. Psychol. 1:159. doi: 10.3389/fpsyg.2010.00159

Taylor, M. J., Edmonds, G. E., McCarthy, G., and Allison, T. (2001). Eyes first! Eye processing develops before face processing in children. Neuroreport 12, 1671–1676. doi: 10.1097/00001756-200106130-00031

Turati, C., Simion, F., Milani, I., and Umiltà, C. (2002). Newborns’ preference for faces: what is crucial? Dev. Psychol. 38, 875–882. doi: 10.1037/0012-1649.38.6.875

Valentine, T. (1991). A unified account of the effects of distinctiveness, inversion, and race in face recognition. Q. J. Exp. Psychol. A 43, 161–204. doi: 10.1080/14640749108400966

Vinette, C., Gosselin, F., and Schyns, P. G. (2004). Spatio-temporal dynamics of face recognition in a flash: it’s in the eyes. Cogn. Sci. 28, 289–301. doi: 10.1016/j.cogsci.2004.01.002

Vizioli, L., Foreman, K., Rousselet, G. A., and Caldara, R. (2010a). Inverting faces elicits sensitivity to race on the N170 component: a cross-cultural study. J. Vis. 10, 1–23. doi: 10.1167/10.1.15

Vizioli, L., Rousselet, G. A., and Caldara, R. (2010b). Neural repetition suppression to identity is abolished by other-race faces. Proc. Natl. Acad. Sci. U.S.A. 107, 20081–20086. doi: 10.1073/pnas.1005751107

Walker-Smith, G. J. (1978). The effects of delay and exposure duration in a face recognition task. Percept. Psychophys. 24, 63–70. doi: 10.3758/BF03202975

Wolf, J. M., Tanaka, J. W., Klaiman, C., Cockburn, J., Herlihy, L., Brown, C., et al. (2008). Specific impairment of face-processing abilities in children with autism spectrum disorder using the Let’s Face It! skills battery. Autism Res. 1, 329–340. doi: 10.1002/aur.56

Xu, B., and Tanaka, J. W. (2013). Does face inversion qualitatively change face processing: an eye movement study using a face change detection task. J. Vis. 13, 1–16. doi: 10.1167/13.2.22

Yin, R. K. (1969). Looking at upside-down faces. J. Exp. Psychol. 81, 141–145. doi: 10.1037/h0027474

Yovel, G., and Kanwisher, N. (2005). The neural basis of the behavioral face-inversion effect. Curr. Biol. 15, 2256–2262. doi: 10.1016/j.cub.2005.10.072

Keywords: face processing, face recognition, other-race effect, Face Dimensions Test, configural information, featural information, upper vs. lower face

Citation: Wang Z, Quinn PC, Tanaka JW, Yu X, Sun Y-HP, Liu J, Pascalis O, Ge L and Lee K (2015) An other-race effect for configural and featural processing of faces: upper and lower face regions play different roles. Front. Psychol. 6:559. doi: 10.3389/fpsyg.2015.00559

Received: 26 September 2014; Accepted: 17 April 2015; Published online: 08 May 2015

Edited by:

Frédéric Gosselin, University of Montreal, Canada

Reviewed by:

Roberto Caldara, University of Fribourg, Switzerland

Carl M. Gaspar, Hangzhou Normal University, China

Caroline Blais, Université du Québec en Outaouais, Canada

Copyright © 2015 Wang, Quinn, Tanaka, Yu, Sun, Liu, Pascalis, Ge and Lee. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yu-Hao P. Sun, Department of Psychology, Zhejiang Sci-Tech University, Hangzhou 310018, Zhejiang, China; Dr. Eric Jackman Institute of Child Study, University of Toronto, Toronto, ON M5R 2X2, Canada, sunyuhao@zstu.edu.cn; Kang Lee, Dr. Eric Jackman Institute of Child Study, University of Toronto, Toronto, ON M5R 2X2, Canada, kang.lee@utoronto.ca

Zhe Wang, Paul C. Quinn, James W. Tanaka, Xiaoyang Yu, Yu-Hao P. Sun, Jiangang Liu, Olivier Pascalis, Kang Lee