Abstract

The paper introduces a new type of rule into Natural Deduction: elimination rules by composition. Elimination rules by composition replace the usual elimination rules in the style of disjunction elimination and give a more direct treatment of additive disjunction, multiplicative conjunction, the existential quantifier and the possibility modality. Elimination rules by composition have an enormous impact on the proof structures of deductions: they do not produce segments, deduction trees remain binary branching, there is no vacuous discharge, and there is little need for permutations. This new type of rule is especially suited to substructural issues, so it is presented for Lambek Calculus, i.e. intuitionistic non-commutative linear logic, and for its extensions by structural rules such as permutation, weakening and contraction. From a complexity perspective, Natural Deduction formulated with elimination rules by composition is superior to other calculi.


1 Introduction

Since their appearance in Gentzen's work of 1934/35, the rules of Natural Deduction have played an epistemological role, because their discoverer, or inventor, claimed that they 'set up a formalism that reproduces as exactly as possible the real logical reasoning in mathematical proofs' Gentzen (1934/35, p.183). Yet although, in Gentzen's eyes, the calculus of Natural Deduction formulated 'real logical reasoning', he turned to another calculus, the sequent calculus, which served him as the preferred mathematical setting in which his basic and famous theorem, the cut elimination theorem, was proved. Perhaps owing to Gentzen's technical preferences, the calculus of Natural Deduction lay dormant for a while; it was neither modified nor really used until Prawitz (1965) showed in his famous Natural Deduction the proof-theoretical subtleties of this calculus: cut elimination can be proved in Natural Deduction not merely by a translation via the sequent calculus, but as a result in its own right, in the form of normalising, i.e. converting maximum formulas. Gentzen's idea that Natural Deduction somehow mirrors 'the real logical reasoning in mathematical proofs' was further fostered, since it seemed to be confirmed by the BHK (Brouwer-Heyting-Kolmogorov) interpretation of logic, e.g. Heyting (1956). And the introduction of types into the \(\lambda \)-calculus, as a way of understanding the syntax of propositional and predicate logic, led to a very close relation between Natural Deduction and the typed \(\lambda \)-calculus, formulated as the Curry-Howard Isomorphism.

A further important step in the short history of Natural Deduction was Schroeder-Heister's discovery of general schemata of rules. He remarked in Schroeder-Heister (1984) that the form of the elimination rule for intuitionistic disjunction in particular could be applied to other eliminations as well, for instance to intuitionistic conjunction, and may serve as a generator for arbitrary connectives. Following such ideas, several lines of investigation started. von Plato (2003) discovered that general elimination rules are the missing link for a much better understanding of the relation between the sequent calculus and the calculus of Natural Deduction. Avron (1988), Troelstra (1995) and Tennant (2007) started to apply general elimination rules to formulate substructural logics such as linear logic and relevant logic in the framework of Natural Deduction. The author of this paper went a step further and gave in Zimmermann (2007, 2010) an exposition of rules in the style of general elimination rules for substructural logics including Lambek Calculus, i.e. for intuitionistic non-commutative linear logic.

In this paper, however, it is shown that there is yet another type of rule in Natural Deduction worth considering, here called elimination rules by composition, presented by the author in Zimmermann (2017, 2019). These rules form an alternative to elimination rules in the style of disjunction elimination, and they provide elimination rules at least for intuitionistic additive disjunction, the existential quantifier, multiplicative conjunction and possibility. These rules have an enormous impact on the proof structures of derivations: they do not produce segments, so they do not give rise to reductions like permutations; they are only binary branching, at least for binary connectives; they do not allow vacuous discharge, so they do not give rise to immediate simplifications or to the non-confluence phenomena caused by immediate simplifications; and, last but not least, they allow a better understanding of substructural logics, i.e. logics without structural rules.

1.1 Rules for Intuitionistic Disjunction \(\vee \)

The new type of rule, elimination by composition, is best shown by example. Intuitionistic disjunction is chosen as a starter. The rules for this connective, disjunction introduction and disjunction elimination, go back to Gentzen, and, as mentioned, Schroeder-Heister showed that the rule of disjunction elimination in particular deserves extra attention, since it can be regarded as a general schema for the rules of other connectives; for instance, conjunction elimination can be formulated in the style of disjunction elimination. On the other hand, Girard made some critical comments on the rule of disjunction elimination, so one may be interested in considering alternatives to this rule:

’The elimination rules are very bad. What is catastrophic about them is the parasitic presence of a formula C which has no structural link with the formula which is eliminated. C plays the role of a context, and the writing of these rules is a concession to sequent calculus.’ Girard (1989), p.73.

As usual, the elimination rule for intuitionistic disjunction \(\vee E\) constructs a new deduction from given deductions, which, spelled out, look as follows:

Under the presupposition of these deductions, however, the following elimination rules for intuitionistic disjunction, \(\vee ER\) and \(\vee EL\), construct deductions as well:

In contrast to \(\vee EL\) and \(\vee ER\), the traditional \(\vee E\) allows a disjunction to be eliminated with completely empty (vacuous) discharge, whereas elimination by composition always needs a non-empty set of active formulas to proceed.

Elimination by composition complies much better with the very idea of Natural Deduction, that every connective has introduction rules and elimination rules: in introduction rules the connective formula is the conclusion and its components are the premisses, whereas in elimination rules the connective formula is the premiss and its components are the conclusions.

The full advantage of elimination rules by composition becomes apparent when substructural logics and their rules are considered. So we turn to Lambek Calculus, which is intuitionistic non-commutative linear logic. This is a logic without structural rules such as weakening, contraction and exchange, formulated very early in Lambek (1958) and extended in Lambek (1993), when Lambek recognized that cut elimination in the sequent calculus still holds if the structural rules are removed, given appropriate formulations of the connective rules. We first stick to disjunction, the additive case.

1.2 Rules for Additive Disjunction \(\vee \) in Lambek Calculus

The rules sketched above, by abuse of notation, modify the intuitionistic case insofar as the elimination rule \(\vee E\) is defined more carefully. Since the order and the number of open assumptions in the context \(\Gamma , \Delta \) of the active formulas A, B matter, A and B are required to have the same context up to order, and this context has to be discharged carefully, such that its order and number are kept constant. Rule \(\vee E\) can now be replaced by two rules, \(\vee EL\) and \(\vee ER\), both again eliminating a connective, here additive disjunction \(\vee \), by composition. The two rules are translatable into each other, so one of them suffices for a complete calculus. Examples with these rules are shown in the sequel.

1.3 Rule Assignment

Rules like elimination rules by composition presuppose careful rule assignment for unique readability, since the last applied rule in a deduction is not necessarily the rule at the end (the bottom) of the deduction; the last applied rule may instead be a rule at the top. A careful rule assignment by natural numbers reveals the order in which rules are applied in a deduction. To every instance of a rule in a deduction a natural number k, its step, is assigned inductively. Instances of the base rule, stating assumptions, are assigned step 0. If a rule R is applied to deductions in which m is the largest step number of rule instances, then step \(m+1\) is assigned to R. The step number k of a rule is further assigned to its discharged assumptions \(A^k\) or discharged contexts \(\Gamma ^k\). This rule assignment is unique, and a given derivation can be uniquely decomposed according to its largest step number, because every deduction has exactly one largest step number. If the rule with the largest step number in a deduction is removed, the resulting deductions have lower step numbers, each again with exactly one largest step number.
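The inductive step assignment can be sketched in Python. The sketch assumes a hypothetical encoding in which each rule instance records only the subdeductions it is applied to, not where it is drawn in the tree; the names `RuleInstance`, `step` and `all_steps` are illustrative and not part of the calculus.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class RuleInstance:
    """Hypothetical encoding of one rule instance in a deduction.

    `name` is a label such as "BR" (base rule) or "impE"; `applied_to`
    lists the last-applied rule instances of the subdeductions the rule
    is applied to. The base rule is applied to nothing.
    """
    name: str
    applied_to: list[RuleInstance] = field(default_factory=list)


def step(r: RuleInstance) -> int:
    """Step number: 0 for the base rule, otherwise m + 1, where m is the
    largest step number in the subdeductions the rule is applied to."""
    if not r.applied_to:
        return 0
    return 1 + max(step(d) for d in r.applied_to)


def all_steps(r: RuleInstance) -> list[tuple[str, int]]:
    """List (rule name, step) for every rule instance in the deduction."""
    result = [(r.name, step(r))]
    for d in r.applied_to:
        result.extend(all_steps(d))
    return result


# Two assumptions (step 0), ->E applied to both (step 1), then ->I (step 2).
a_to_b, a = RuleInstance("BR"), RuleInstance("BR")
imp_e = RuleInstance("impE", [a_to_b, a])
imp_i = RuleInstance("impI", [imp_e])
print(all_steps(imp_i))  # [('impI', 2), ('impE', 1), ('BR', 0), ('BR', 0)]
```

Because the last applied rule always receives a number strictly larger than every step in its subdeductions, the largest step number occurs exactly once, which is what the unique decomposition relies on.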

Such assignments of natural numbers to rule instances are used, more or less occasionally, at least by van Dalen (2004) and Prawitz (1965). Natural Deduction with elimination rules by composition, however, uses natural numbers as rule assignment systematically. Strictly speaking, rule assignment by natural numbers is nothing new. The usual assignment of variables to rule instances amounts to the very same thing: in a large deduction with many rule instances, variables will very soon receive indices consisting of natural numbers, since the supply of distinct variables is limited.

1.4 Rules for Multiplicative Conjunction \(\bullet \) in Lambek Calculus

Finally, rules for multiplicative conjunction in Lambek Calculus are discussed to show the enormous effects of elimination rules by composition. The author showed in 2010 that multiplicative conjunction can be correctly defined for Lambek Calculus by means of symmetric general elimination rules. In the case of multiplicative conjunction, the symmetries of such rules make it possible to take care not only of the number but also of the order of assumptions, which is essential for Lambek Calculus. So, besides the unproblematic introduction rule, the elimination rule for multiplicative conjunction \(\bullet \) takes the following form:

Although \(\bullet E\) is a correct rule, it is immediately seen to be a source of non-confluence and even of non-deterministic conversion of maximum formulas of the form \(\bullet \). Such phenomena disappear if \(\bullet \) is formulated with elimination by composition. Again there are two rules, \(\bullet ER\) and \(\bullet EL\), which are translatable into each other, so one rule suffices.

2 Lambek Calculus - Intuitionistic Non-Commutative Linear Logic

Definition 1

Lambek Calculus LC, or intuitionistic non-commutative linear logic, is formulated in natural deduction with binary connectives \(\rightarrow , \Rightarrow , \bullet , \wedge , \vee \), constants \(\bot , \top , \mathbf{0}, \mathbf{1}\), quantifiers \(\forall , \exists \) and modals \(\Box , \Diamond \). Assumptions are considered as sequences of formulas, i.e. together with their order, and the order of assumptions in a deduction is their order in the deduction tree. \(B^k\) are discharged assumption singletons, \(\Gamma ^k\) are discharged sequences of formulas. Assumptions are open iff they are not discharged. \(qR+1\) assigns to rule instance R for operator q a natural number that exceeds by at least 1 every natural number assigned to the given rule instances. To an instance of the base rule BR, natural number 0 is assigned.

In \(\rightarrow I\) assumption singleton A is the rightmost of the open assumptions.

In \(\Rightarrow I\) assumption singleton A is the leftmost in the sequence of open assumptions.

In \(\bullet E\) there is no other open assumption between A, B.

In \(\wedge I\) premisses A, B have the same open assumptions, up to the order.

One context \(\Gamma \) is discharged to keep the number and the order of the context constant; one could discharge either the left or the right one, and \(\wedge I\) discharges the right context.

In \(\vee E\) lower contexts C have the same open assumptions, up to the order.

\(\bot , \mathbf{0}\) as conclusion have the meaning that \(\bot , \mathbf{0}\) or nothing is conclusion.

\(\Diamond E\): every open branch (branch with open assumption), except the branch with A, has a \(\Box \) or \(\bot \) node.

\(\Box I\): every open branch has a \(\Box \) or \(\bot \) node.

The modal rules pick up an idea of Bierman and de Paiva (2000), originally formulated for Natural Deduction in sequent style, that every branch has a certain modal configuration.

The stipulation for \(\mathbf{0}\) needs an explanation, since it is indeed meaningful although there is no rule for \(\mathbf{0}\). It says that \(\mathbf{0}\) as conclusion means that \(\mathbf{0}\) or nothing is the conclusion. Written in sequents: \(\Gamma \vdash \mathbf{0}\) iff \(\Gamma \vdash \). So having \(\mathbf{0}\) as conclusion is the same as having no conclusion at all.

Examples

Definition 2

In an instance of a rule for operator q, formulas A, B immediately above the rule line are the premisses and formula C immediately below it is the conclusion, except in \(qR = \vee E\). If \(R = I\), the rule is an introduction rule; if \(R = E\), it is an elimination rule. In \(\vee E\) only the connective formula \(A \vee B\) above the rule line is a premiss, and formula A below the rule line and discharged assumption B are conclusions; the other formula C above the rule line is a lower context, together with the pairwise occurring C at the end of the subdeduction from A. And conclusion D of lower context C (its premiss) in \(\vee E\) is also the conclusion of the corresponding lower context C, again its premiss. Finally, discharged formula B in \(\bullet E\) is also a conclusion of premiss \(A \bullet B\).


In \(\rightarrow E, \Rightarrow E\) the connective premiss is the major premiss, and in W rules the premiss that reappears as the conclusion is the major premiss; other premisses are minor.

In \(\wedge I\) with conclusion \(A \wedge B\) all open assumptions \(\Gamma \) of the subdeduction ending with A and all open assumptions \(\Gamma \) of the subdeduction ending with B are upper context formulas. In \(\vee E\) with premiss \(A \vee B\) all open assumptions \(\Gamma A \Delta \) of the subdeduction ending with C except A and all open assumptions \(\Gamma B \Delta \) of the subdeduction ending with C except B are upper context formulas.

To every instance of a rule R in a deduction a natural number k, its step, is assigned inductively, yielding Rk. Instances of the base rule, stating assumptions, are assigned step 0. If rule R is applied to deductions in which m is the largest step number of rule instances, then step \(m+1\) is assigned to R. The step numbers of the rule instances in a deduction thus serve as the parameter in proofs by induction on the so-called length of a deduction.

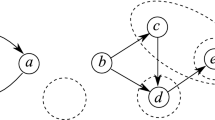

A branch in a deduction \(\mathcal {D}\) is a sequence of formulas \(\langle A_1, \ldots , A_k \rangle \) in \(\mathcal {D}\) s.t. \(A_1\) is an assumption of \(\mathcal {D}\), \(A_k\) is the conclusion of \(\mathcal {D}\) and \(A_{m+1}\) is the conclusion of a rule applied to premiss \(A_m\) for \(m+1 \le k\). So, if \(A_m = C \vee D\) and \(\vee E\) is applied to \(A_m\) as premiss, or \(A_m = C \bullet D\) and \(\bullet E\) is applied to \(A_m\) as premiss, there are two branches, one with \(A_{m+1} = C\) and the other with \(A_{m+1} = D\).
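Branches can be enumerated by a depth-first walk. The sketch below assumes a hypothetical encoding of a deduction as a map from each formula occurrence to the conclusions of the rule applied to it; an occurrence that is the premiss of \(\vee E\) or \(\bullet E\) has two such conclusions, which is where branches split. The occurrence labels are illustrative strings, and the example graph is a toy, not a deduction taken from the text.

```python
from __future__ import annotations


def branches(succ: dict[str, list[str]], assumptions: list[str],
             conclusion: str) -> list[list[str]]:
    """Enumerate every branch <A_1, ..., A_k>: A_1 an assumption, A_k the
    conclusion, and each A_{m+1} a conclusion of the rule applied to A_m."""
    found: list[list[str]] = []

    def walk(path: list[str]) -> None:
        node = path[-1]
        if node == conclusion:
            found.append(path)
            return
        for nxt in succ.get(node, []):   # two successors where orE or *E splits
            walk(path + [nxt])

    for a in assumptions:
        walk([a])
    return found


# Toy graph: "A|B" is the premiss of an orE whose conclusions are "A" and "B";
# both occurrences lead on to the end formula "C".
succ = {"A|B": ["A", "B"], "A": ["C"], "B": ["C"]}
print(branches(succ, ["A|B"], "C"))  # [['A|B', 'A', 'C'], ['A|B', 'B', 'C']]
```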

A maximum formula is a formula which is

-

the conclusion of an introduction rule and the premiss of an elimination rule or

-

the conclusion of \(\bot E\) and the premiss of an elimination rule or

-

the conclusion of an introduction rule and the premiss of \(\top I\) or

-

the minor premiss of any weakening rule if it is not an assumption.
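The four clauses can be read as a predicate on a single formula occurrence. The sketch assumes a hypothetical naming scheme in which introduction rules end in `I` (e.g. `andI`, `topI`), elimination rules end in `E`, `EL` or `ER` (e.g. `orEL`, `botE`), and weakening rules contain `W` (e.g. `1WL`); `Occurrence`, `kind` and `is_maximum` are illustrative names only.

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Optional


@dataclass
class Occurrence:
    concluded_by: Optional[str]  # rule with this occurrence as conclusion; None for an assumption
    premiss_of: Optional[str]    # rule applied to this occurrence as premiss
    minor: bool = False          # True if it is a minor premiss of premiss_of


def kind(rule: Optional[str]) -> Optional[str]:
    """Classify a rule name under the assumed naming scheme."""
    if rule is None:
        return None
    if "W" in rule:
        return "W"
    if rule.endswith("I"):
        return "I"
    if rule.endswith(("E", "EL", "ER")):
        return "E"
    return None


def is_maximum(o: Occurrence) -> bool:
    c, p = kind(o.concluded_by), kind(o.premiss_of)
    return (
        (c == "I" and p == "E")                        # introduced, then eliminated
        or (o.concluded_by == "botE" and p == "E")     # conclusion of botE, then eliminated
        or (c == "I" and o.premiss_of == "topI")       # introduced, then premiss of topI
        or (p == "W" and o.minor and o.concluded_by is not None)  # non-assumption minor premiss of W
    )


print(is_maximum(Occurrence("andI", "andE")))   # True
print(is_maximum(Occurrence(None, "andE")))     # False
```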

A segment is a sequence of occurrences \(\langle A_1, ..., A_k \rangle \) of one and the same formula A s.t. each pair \(A_j, A_{j+1}\) is an instance of a weakening rule, \(A_j\) as the major premiss and \(A_{j+1}\) as the conclusion.

A chain of applications of \(\vee E\) is a finite sequence of l applications of \(\vee E\) with lower context formulas of the same shape, s.t. the left lower context of application i is a lower context of application \(i+1\) for all \(i+1 \le l\) and the lower context formula of application l is major premiss of an elimination rule.

The degree of a formula is a natural number assigned to a formula by a recursive function d: \(d(\mathbf{P}(s_1,\ldots ,s_k)) = 0\) for atomic formulas, and \(d(A\,q\,B) = \max \{ d(A), d(B) \} +1\) for binary connectives q.
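The degree function transcribes directly into Python. The definition above covers only atomic formulas and binary connectives; treating the constants \(\bot , \top , \mathbf{0}, \mathbf{1}\) as atoms of degree 0 and giving unary operators (quantifiers, \(\Box \), \(\Diamond \)) the clause \(d(qA) = d(A) + 1\) are assumptions of this sketch, and the classes `Atom`, `Unary` and `Binary` are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Atom:
    """Atomic formula P(s_1, ..., s_k); constants are encoded as atoms (assumption)."""
    name: str
    args: tuple[str, ...] = ()


@dataclass(frozen=True)
class Unary:
    """Quantifier or modal operator applied to one formula (assumed encoding)."""
    op: str
    body: Formula


@dataclass(frozen=True)
class Binary:
    """A q B for a binary connective q."""
    op: str
    left: Formula
    right: Formula


Formula = Union[Atom, Unary, Binary]


def degree(f: Formula) -> int:
    if isinstance(f, Atom):
        return 0                                     # d(P(s_1,...,s_k)) = 0
    if isinstance(f, Unary):
        return degree(f.body) + 1                    # assumed clause for unary q
    return max(degree(f.left), degree(f.right)) + 1  # d(AqB) = max{d(A), d(B)} + 1


print(degree(Binary("->", Atom("A"), Binary("*", Atom("B"), Atom("C")))))  # 2
```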

2.1 Proposition on Unique Readability of Deductions

In every deduction \(\mathcal {D}\) of LC there is exactly one rule last applied, and this rule last applied can be uniquely detected.

Proof by induction on the length of a deduction, i.e. on its step number. Assume m to be the step number of \(\mathcal {D}\). If \(m=0\), \(\mathcal {D}\) consists of a single node, stated by the Base Rule, and the proposition holds. Now assume the proposition holds for deductions with step number m, and show that it holds for deductions with step number \(m+1\). So assume the proposition holds for three deductions \({\mathcal {D}}^1, {\mathcal {D}}^2, {\mathcal {D}}^3\) with step numbers \(k_1, k_2, k_3\), respectively, s.t. \(k_i = m\) for some i and \(k_j \le k_i\) for all j, and let an arbitrary rule R be applied to these deductions. Then the definition of step numbers guarantees that the step number of the deduction \({\mathcal {D}}^*\) resulting from R is \(m+1\); since every other rule instance in \({\mathcal {D}}^*\) has step number at most m, R is the unique rule last applied.

It should be said what this uniqueness of readability is not. It is not a one-to-one mapping between derivation trees and step numbers. There are identical derivation trees, with the same formulas as nodes, but with different step numbers, as the following simple example shows.

It is no accident that such different assignments of step numbers to one derivation tree exist: a translation into the sequent calculus shows, too, that the two objects are really different derivations.

2.2 Proposition on Closure Under Substitution

In general, substitution in Natural Deduction is the following combination of two derivations into one derivation:

In the calculus LC, with its explicit rule assignment for every rule application, substitution is more delicate. Simply substituting one derivation into another while leaving the rule assignments untouched yields, in most cases, no rule assignment for the combined derivation, i.e. no derivation. So, for the substitution of two given derivations the rule assignment has to be partially redefined. Assume the rule assignment for derivation \(\begin{array}{c} \Gamma \\ \vdots \\ A \end{array}\) to be defined, with n its largest step number. Then the substitution derivation and its rule assignment are constructed by induction on the step numbers k of derivation \(\begin{array}{c} A \\ \vdots \\ B \end{array}\).

Induction base: \(k = 0\), the base rule BR.

For \(k=0\) the largest step number of the substitution derivation is n.

For the induction step \(k+1\), assume as induction hypothesis that the substitution for k has been constructed, with m the largest step number resulting from substituting the derivation with step number n into the derivation with step number k, such that \(k \le m\) and \(n \le m\). The substitution in induction step \(k+1\) then splits into cases according to the different rules; some interesting cases are exemplified:

3 Adding Structural Rules

Adding structural rules such as permutation, contraction or weakening to Lambek Calculus gives intuitionistic versions of other substructural logics, such as linear, relevant or affine logic. In the sequel it is shown that such structural rules exist in Natural Deduction as well.

Proposition

Intuitionistic Linear Logic ILL is LC with the assumptions in derivations interpreted not as sequences but as multisets, i.e. with their order neglected; see Girard (1987).
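The difference between LC and ILL in how open assumptions are compared can be illustrated with Python's `collections.Counter`, which models a multiset. The function names are illustrative; the sketch only compares lists of assumption labels and does not check derivations.

```python
from __future__ import annotations
from collections import Counter


def same_assumptions_lc(gamma: list[str], delta: list[str]) -> bool:
    """LC: assumptions are sequences, so order matters."""
    return gamma == delta


def same_assumptions_ill(gamma: list[str], delta: list[str]) -> bool:
    """ILL: assumptions are multisets, so only multiplicities matter."""
    return Counter(gamma) == Counter(delta)


print(same_assumptions_lc(["A", "B"], ["B", "A"]))    # False
print(same_assumptions_ill(["A", "B"], ["B", "A"]))   # True
print(same_assumptions_ill(["A", "A"], ["A"]))        # False: multiplicity still counts
```

Multiplicities still count in ILL because contraction is only added later, for relevant logic.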

These deductions are characteristic of ILL; they implement commutativity. That is, the order of assumptions is free in ILL; for instance, discharge \(\rightarrow I3\) in the first example is not possible in LC, due to the restrictions on order.

3.1 Explicit Structural Rules

In Natural Deduction, explicit structural rules of Permutation, Contraction and Weakening can be formulated just as in the sequent calculus. Weak instances of explicit structural rules have already been used above: the \(\bot W, \top W, \mathbf{1} W\) rules are instances of full weakening. But full structural rules are definable in natural deduction too, for instance an explicit permutation rule P.

Proposition

Intuitionistic Linear Logic can be equivalently defined as LC+P, LC extended by explicit permutation rule P.

Two examples of applications of P in LC+P show commutativity of \(\bullet \) and \(\rightarrow \):

For the deductive equivalence of ILL and LC+P it is first shown that P is a derived rule in ILL: either by means of connective \(\bullet \), where permutations can be executed locally, or by means of connective \(\rightarrow \), where permuting two assumptions presupposes a whole sequence of reorderings of rule applications.

For the deductive equivalence of ILL and \(LC+P\) it is shown, secondly, that sequences of assumptions in deductions of LC+P can be rearranged into an arbitrary order by permutation rule P. The proof is by induction on the length k of a sequence of open assumptions. If \(k \le 2\), the proof is by at most one application of P. If \(k=n+1\) and the claim holds for length n, the argument is as follows. Let the sequence be \(\langle A_{n+1}, A_n, \ldots , A_i, \ldots , A_1 \rangle \). By the induction hypothesis the sequence \(\langle A_n, \ldots , A_i, \ldots , A_1 \rangle \) can be arranged into any order, in particular into \(\langle A_i, \ldots , A_n, \ldots , A_1 \rangle \), so any \(A_i\) can be moved directly next to \(A_{n+1}\). With permutation rule P the sequence \(\langle A_i, A_{n+1}, \ldots , A_n, \ldots , A_1 \rangle \) can then be constructed, and the remainder \(\langle A_{n+1}, \ldots , A_n, \ldots , A_1 \rangle \), without \(A_i\), can be arranged into any order by the induction hypothesis.
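The rearrangement claim can be illustrated computationally under one assumption not fixed by the text above: that a single application of P exchanges two adjacent open assumptions. The sketch uses the standard bubble-sort argument (every permutation is a product of adjacent transpositions) rather than the induction given above; `adjacent_swaps` is a hypothetical helper.

```python
from __future__ import annotations


def adjacent_swaps(seq: list[str], target: list[str]) -> tuple[list[int], list[str]]:
    """Return positions i (each meaning: exchange items i and i+1, i.e. one
    assumed application of P) that turn seq into target, plus the result."""
    if sorted(seq) != sorted(target):
        raise ValueError("target must be a rearrangement of seq")
    work = list(seq)
    swaps: list[int] = []
    for pos, wanted in enumerate(target):
        j = work.index(wanted, pos)      # first matching item not yet placed
        while j > pos:                   # bubble it leftwards one step at a time
            work[j - 1], work[j] = work[j], work[j - 1]
            swaps.append(j - 1)
            j -= 1
    return swaps, work


print(adjacent_swaps(["A", "B", "C"], ["C", "A", "B"]))  # ([1, 0], ['C', 'A', 'B'])
```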

Proposition

IRL, intuitionistic relevant logic, is ILL extended by contraction rule C.

For the sake of uniqueness, contraction rule C is subject to a convention when several assumption formulas of the same type occur in a derivation: formula \(A^m\) discharged by contraction rule Cm refers to the rightmost occurrence of A to the left of \(A^m\).
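The convention amounts to a simple leftward search. The sketch assumes that the assumption occurrences of the derivation are given as a left-to-right list of formula labels and that the position of \(A^m\) in that list is known; `contraction_partner` is a hypothetical helper.

```python
from __future__ import annotations
from typing import Optional


def contraction_partner(assumptions: list[str], m_pos: int) -> Optional[int]:
    """Position of the occurrence that A^m (at m_pos) refers to under the
    convention: the rightmost occurrence of the same formula to its left."""
    a = assumptions[m_pos]
    for i in range(m_pos - 1, -1, -1):   # scan leftwards from A^m
        if assumptions[i] == a:
            return i
    return None                          # no occurrence of the same formula to the left


print(contraction_partner(["A", "B", "A", "A"], 3))  # 2
```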

The following derivations show characteristic axioms of relevant logic.

Proposition

IAL, intuitionistic affine logic, or BCK logic is ILL extended by weakening rule W.

The derivations show characteristic axioms of affine logic.

Proposition

IL, intuitionistic logic, is ILL extended by rules weakening W and contraction C.

The derivation shows a characteristic axiom of IL, distributivity of additive \(\wedge \) over additive \(\vee \).

Of course, the rules defined for IL are highly redundant, and this redundancy starts even with ILL. For example, the weakening rules for the constants \(\bot WR, \bot WL, \top WR, \top WL\) are superfluous in the presence of full weakening W, and some of these weakening rules are superfluous in the presence of permutation P. But we neglect these considerations to keep the presentation straightforward.

4 Reductions

4.1 Conversions

For the conversions it is assumed that the instance of the elimination rule applied to the max formula to be converted is the last rule instance in the derivation, i.e. has the largest step number in the derivation.

In the sequel, reductions are shown only for implication \(\rightarrow \) in LC; reductions for \(\Rightarrow \) are left out, since they are simply symmetric.

A similar conversion holds for B as conclusion of max formula \(A \wedge B\).

A similar conversion holds for A as premiss of max formula \(A \vee B\).

4.2 \(\bot \)-Conversions

The rule assignments with their step numbers in the following derivations have to be read very carefully, since they cannot be read as open variables with a possible universal closure. Instead they have the following specific meaning: if there is a derivation \(\mathcal {D}\) with highest step number k, then there is a reduced (converted, permuted) derivation \(\mathcal {E}\) with highest step number m. In general not much can be said about m for a given k, although m is unique for a given \(\mathcal {E}\).

A similar conversion holds for B as conclusion of max formula \(A \wedge B\).

4.3 \(\top \)-Conversions

A similar conversion holds for B as premiss of max formula \(A \vee B\).

For \(q \in \{ \forall , \exists , \Box , \Diamond \}\).

4.4 \(\bot \)-W and \(\top \)-W Conversions

Such conversions hold for weakening rules \(\bot WL\), \(\top WR\) and \(\top WL\) too.

4.5 Conversions in Upper Contexts

If conclusions of maximum formulas are upper contexts in \(\wedge I\) or \(\vee E\), then there are corresponding contexts, and the substitutions in the converted derivation have to be carried out both in the contexts and in the corresponding contexts, as exemplified below. Moreover, one and the same formula occurrence can be a context not only in one application of \(\wedge I\) or \(\vee E\) but in several, so the substitution of derivations caused by conversion has to be carried out several times. This is shown below in an example of a context formula that is a context in two applications of \(\wedge I\).

Finally a concrete example of substitution in contexts caused by conversion.

4.6 Simultaneous Substitution Due to Conversion of \(\bullet \) Max Formulas

Substitution of derivations due to the conversion of a max formula \(\bullet \) is done simultaneously. This is possible without violating the determinacy of conversions, since below any step k of rule applications there may be more than one rule application of step \(k-1\). In the sequel an example is given of a derivation \({\mathcal {D}}^1\) with two max formulas \(\bullet \), converting in two ways to \({\mathcal {D}}^2\) and to \({\mathcal {D}}^3\), and of the final, confluent normal derivation \({\mathcal {D}}^4\).

4.7 Permutations

As usual, elimination rules qE having a lower context of \(\vee E\) as premiss can be permuted with this \(\vee E\), such that the conclusion of qE becomes a lower context of \(\vee E\), the order of assumptions being preserved. In the permutations below, the variables for formulas A, B, C, ... are open variables, i.e. schema variables, and can be instantiated arbitrarily. The step numbers of permutations, however, are existentially closed: if there are step numbers k, m for a derivation, then there are step numbers i, j, n for its permuted derivation. Elimination rules qE are assumed to have the largest step number in the derivation to be permuted.

A simple example of a derivation \({\mathcal {D}}^1\) reducing to a normal derivation \({\mathcal {D}}^5\) via conversions and a permutation: permutation of \({\mathcal {D}}^1\) gives \({\mathcal {D}}^2\), which can be converted in two ways, to \({\mathcal {D}}^3\) and to \({\mathcal {D}}^4\), and these converge by conversion to \({\mathcal {D}}^5\).

4.8 1-Conversion and 1-Permutation

Such conversions and permutations hold for \(\mathbf{1}WL\) too.

5 Normalisation

Weak normalisation for a calculus of Natural Deduction is the property that from every deduction of the calculus a normal deduction, i.e. a deduction without any maximum formula, can be constructed by reductions. The first published proof of this property was given by Prawitz (1965) for intuitionistic, classical and minimal predicate logic in natural deduction; it is briefly sketched here for intuitionistic logic. The proof is by double induction on the pair \(\langle l, s \rangle \), with an outer, major induction on l, the largest degree of max formulas in a given deduction, and an inner, minor induction on s, the sum of the lengths of the segments of max formulas of largest degree in this deduction. Prawitz further gives an algorithm for detecting a segment of max formulas of largest degree that is appropriate for reduction (conversion or permutation), such that the induction value decreases. For reduction, a segment \(\sigma \) of largest degree is chosen such that no other segment \(\kappa \) of largest degree a) is above \(\sigma \), b) is above a formula side-connected to the last formula of \(\sigma \), or c) contains a formula side-connected to the last formula of \(\sigma \). So no other segment \(\kappa \) of largest degree is above the conclusion of the last formula of \(\sigma \) or contains a formula side-connected to the last formula of \(\sigma \).

Formula A is side-connected to formula B iff A and B are premisses of one and the same rule instance. Segment \(\kappa \) is above segment \(\sigma \) iff the last formula occurrence of \(\kappa \) is above the first formula occurrence of \(\sigma \). Schwichtenberg and Troelstra (2000) call these conditions on the segments of largest degree chosen for reduction top critical and rightmost, assuming that major premisses are written on the left and minor premisses on the right in elimination rules. Prawitz's argument that such segments exist goes as follows: among the segments of largest degree that are topmost, there must be one that is rightmost.

This normalisation proof of Prawitz can be modified slightly by again taking the largest degree of max formulas as the major induction value, but the number of max formulas of largest degree as the minor value. The segments to be reduced are again, as in Prawitz's algorithm, the topmost and rightmost ones, but the segment chosen for reduction is reduced completely, to length zero. This can be done simply because the property of a segment being topmost and rightmost is preserved under permutation.

5.1 Lemma on Normalisation in LC - Lambek Calculus

From a deduction \(\mathcal {D}\) in LC a normal deduction \({\mathcal {D}}'\) without max formulas can be constructed, preserving the open assumptions, together with their order, and the conclusion.

Proof

The proof proceeds by induction on the pair \(\langle k, m \rangle \), ordered lexicographically, where k is the largest degree of max formulas in a given deduction \(\mathcal {D}\) and m is the number of max formulas of largest degree in \(\mathcal {D}\); so k is the major and m the minor induction value. Inspection of the conversions of operators \(\rightarrow , \Rightarrow , \bullet , \wedge , \vee , \forall , \exists , \Box , \Diamond , \bot , \top , \mathbf{1}, \mathbf{0}\) shows that every conversion of a max formula A of degree k in \(\mathcal {D}\) removes A, possibly generating max formulas of degree at most \(k-1\), while preserving the conclusion and the assumptions of \(\mathcal {D}\) together with their order. So a conversion applied to a max formula A of largest degree k in \(\mathcal {D}\) gives a \({\mathcal {D}}'\) with \(m-1\) max formulas of largest degree k or, if \(m=1\), a \({\mathcal {D}}'\) whose max formulas have degree at most \(k-1\).
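Why the pair \(\langle k, m \rangle \) decreases can be checked on a deliberately crude model: a derivation is reduced to the list of degrees of its max formulas, and a conversion removes one max formula of largest degree k while adding any number of new ones of degree at most \(k-1\). The model ignores permutations, 1W segments and chains of \(\vee E\), which the special cases below deal with; `measure`, `convert`, `normalise` and the `spawn` rule are hypothetical. Python tuples compare lexicographically, matching the major/minor induction.

```python
from __future__ import annotations
from typing import Callable


def measure(degrees: list[int]) -> tuple[int, int]:
    """<k, m>: largest degree of max formulas and how many have it."""
    if not degrees:
        return (0, 0)
    k = max(degrees)
    return (k, degrees.count(k))


def convert(degrees: list[int], new: list[int]) -> list[int]:
    """Remove one max formula of largest degree k; add max formulas `new`,
    each of which must have degree at most k - 1."""
    k = max(degrees)
    if any(d >= k for d in new):
        raise ValueError("a conversion may only create lower-degree max formulas")
    rest = list(degrees)
    rest.remove(k)
    return rest + new


def normalise(degrees: list[int],
              spawn: Callable[[int], list[int]]) -> list[tuple[int, int]]:
    """Convert until no max formulas remain, recording the measure each time."""
    trace = [measure(degrees)]
    while degrees:
        degrees = convert(degrees, spawn(max(degrees)))
        trace.append(measure(degrees))
        assert trace[-1] < trace[-2]   # lexicographic decrease of <k, m>
    return trace


# Hypothetical worst case: converting degree k creates two max formulas of degree k - 1.
print(normalise([2, 2, 1], lambda k: [k - 1, k - 1] if k > 1 else []))
# [(2, 2), (2, 1), (1, 5), (1, 4), (1, 3), (1, 2), (1, 1), (0, 0)]
```

The decrease does not depend on how many lower-degree max formulas a conversion creates; it would fail only if a conversion copied a max formula of largest degree, which is exactly the multiplication effect the following cases rule out.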

However, some cases of max formulas need special treatment.

If a max formula occurs as a segment of length l, the segment is due to applications of 1W, and it can be shortened to length \(l-1\) by a 1W-permutation, which moves an application of 1W upward. 1W-permutations affect neither subderivations nor assumptions.
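The shortening of a 1W-segment is a loop whose measure is the segment length. In this hypothetical encoding (not the paper's notation), a segment is the top-to-bottom list of rules concluding its successive occurrences, and each 1W-permutation is modelled only by its effect, namely removing one application of 1W from the segment. After \(l-1\) permutations a single max formula remains, ready for conversion.

```python
from typing import List, Tuple


def shorten_segment(segment: List[str]) -> Tuple[List[str], int]:
    """Permute until the segment has length 1; return it and the number of 1W-permutations.

    `segment` lists, top to bottom, the rules concluding the successive occurrences
    of the max formula, e.g. ["•I", "1W", "1W"] (hypothetical encoding)."""
    segment = list(segment)
    permutations = 0
    while len(segment) > 1:
        if segment[-1] != "1W":
            raise ValueError("in LC a segment of length > 1 is due to 1W")
        segment.pop()  # effect of one 1W-permutation: length l becomes l - 1
        permutations += 1
    return segment, permutations


result = shorten_segment(["•I", "1W", "1W"])  # (['•I'], 2)
```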

If a max formula occurs as the lower context of some \(\vee E\), or even as a lower context in a chain of \(\vee E\) applications, at least one permutation precedes the conversion. Evidently, a chain of l applications of \(\vee E\) can be shortened to length \(l-1\) by a permutation.

If several max formulas of largest degree occur in a derivation, the multiplication of subderivations during conversions and permutations has to be taken into account. In LC, subderivations \({\mathcal {D}}\) are multiplied during conversions because of the multiple occurrences of upper contexts in \(\wedge I\) and \(\vee E\): if a conversion forces multiple substitution of \({\mathcal {D}}\) into the upper contexts of \(\wedge I\) or \(\vee E\), and \({\mathcal {D}}\) contains a max formula X of largest degree, then X is converted first.

A subderivation \(\mathcal {D}\) may also be multiplied in LC when an elimination rule is permuted with \(\vee E\). If such a \(\mathcal {D}\) contains a max formula X of largest degree, again X is converted first.

The search in subderivations terminates, since the derivations considered here are finite and the relation "\(\mathcal {D}\) is a subderivation (subtree) of \(\mathcal {E}\)" is transitive, antisymmetric and acyclic.
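The rule of converting the max formula inside a multiplied subderivation first amounts to a descent through nested subderivations. The sketch below assumes a hypothetical record `MaxFormula` that lists the max formulas contained in the subderivations its conversion (or permutation) would copy. The loop descends as long as such a subderivation contains a max formula of the same largest degree k. Each step moves into a proper subtree, so, as argued above, the descent ends in a finite deduction.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MaxFormula:
    """Hypothetical record for a max formula of a deduction."""
    name: str
    degree: int
    # Max formulas inside the subderivations that reducing this one would copy.
    copied: List["MaxFormula"] = field(default_factory=list)


def next_to_convert(x: MaxFormula, k: int) -> MaxFormula:
    """Descend into copied subderivations as long as they contain a max formula of
    degree k, so that no max formula of largest degree gets multiplied."""
    while True:
        inner = [y for y in x.copied if y.degree == k]
        if not inner:
            return x
        x = inner[0]  # strictly smaller subtree: the descent is finite


z = MaxFormula("Z", 3)
y = MaxFormula("Y", 3, [z])
x = MaxFormula("X", 3, [y, MaxFormula("W", 2)])
chosen = next_to_convert(x, 3)  # Z: it lies in what Y would copy, and Y in what X would copy
```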

Finally, chains of \(\vee E\) may be lengthened during permutations, if the conclusion of the elimination rule that is permuted with a \(\vee E\) is itself a lower context in an elimination rule of a chain. In that case there are two chains i and j such that shortening i by permutation would lengthen j, but not vice versa; so chain j is permuted first.

For a collection of max formulas of largest degree occurring as lower contexts of \(\vee E\), we use Prawitz’ argument: first, we take the subclass of this collection for which permutations produce no multiplication effects; second, this subclass must contain a permutation that does not lengthen any chain, since of two chains i, j the bottom-most does not lengthen the top-most. q.e.d.
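Prawitz’ two-step argument can be sketched as a selection over a finite set of chains. The encoding is an assumption made for illustration: in the hypothetical function `choose_chain`, `lengthens[i]` is the set of chains that get longer when chain i is shortened by permutation, and `multiplies` is the set of chains whose permutation would copy a subderivation. Assuming, as in the text, that of two chains the bottom-most never lengthens the top-most, the relation is acyclic, so a non-empty pool always contains a chain that lengthens no other chain of the pool.

```python
from typing import Dict, Optional, Set


def choose_chain(lengthens: Dict[str, Set[str]], multiplies: Set[str]) -> Optional[str]:
    """Pick a chain of ∨E whose permutation neither multiplies subderivations nor
    lengthens another chain of the pool (hypothetical encoding)."""
    # Step 1: keep only chains whose permutation produces no multiplication effect.
    pool = {c for c in lengthens if c not in multiplies}
    # Step 2: a bottom-most chain of the pool lengthens no other chain of the pool.
    ready = sorted(c for c in pool if not (lengthens[c] & pool))
    return ready[0] if ready else None  # None: empty pool (or a cyclic relation)


# Shortening i would lengthen j (j lies below i), so j is permuted first.
first = choose_chain({"i": {"j"}, "j": set()}, multiplies=set())  # "j"
```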

5.2 Lemma on Normalisation in ILL - Intuitionistic Linear Logic

Normalisation in ILL, both its statement and its proof, is exactly the same as normalisation in LC: the order or disorder of assumptions does not affect reductions such as conversions and permutations, so the normalisation lemma of LC transfers immediately to ILL.

For the sake of uniqueness, the rules for the additives \(\wedge , \vee \) need some further specification. If a formula occurs more than once in the pairwise occurring multiset context \(\Gamma \) of \(\vee E\) or \(\wedge I\), say \(\Gamma = \{ A, A \}\), it must be specified which occurrences correspond to each other in the pair. We simply stipulate that multiple occurrences of one formula correspond to each other according to their natural order in the derivation trees: the left-most occurrences correspond to each other, then the second left-most, and so on. Such specifications are needed for unique substitution into contexts during reductions, which always take place in both components of the pairwise occurring context.
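The stipulation on corresponding occurrences can be made concrete as follows. In this sketch, an illustration rather than the paper's formalism, the two components of a pairwise occurring context are given as lists of formulas in their left-to-right order in the derivation tree. The hypothetical function `correspondence` pairs the k-th left-most occurrence of a formula in one component with the k-th left-most occurrence of the same formula in the other.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def correspondence(left: List[str], right: List[str]) -> List[Tuple[int, int]]:
    """Pair the positions of corresponding occurrences in the two components of
    a pairwise occurring multiset context (both given in left-to-right order)."""
    if sorted(left) != sorted(right):
        raise ValueError("both components must contain the same multiset")
    positions: Dict[str, List[int]] = defaultdict(list)
    for j, formula in enumerate(right):
        positions[formula].append(j)
    seen: Dict[str, int] = defaultdict(int)
    pairs = []
    for i, formula in enumerate(left):
        # The k-th left-most occurrence corresponds to the k-th left-most one.
        pairs.append((i, positions[formula][seen[formula]]))
        seen[formula] += 1
    return pairs


pairs = correspondence(["A", "B", "A"], ["A", "A", "B"])  # [(0, 0), (1, 2), (2, 1)]
```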

Finally, it must be specified for \(\vee E\) which assumption counts as the active subformula A or B of the major premiss \(A \vee B\) when these formulas occur several times as assumptions. Here the right-most occurrence is chosen for A and the left-most for B. Again, these specifications guarantee deterministic substitution during reductions.
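The choice of active assumptions in \(\vee E\) is likewise deterministic. Assuming, for illustration only, that the open assumptions in question are listed in their left-to-right order in the tree, the hypothetical function `active_occurrence` returns the right-most occurrence for A and the left-most occurrence for B.

```python
from typing import List


def active_occurrence(assumptions: List[str], formula: str, *, is_left_disjunct: bool) -> int:
    """Index of the assumption occurrence that counts as active in ∨E for major
    premiss A ∨ B: the right-most occurrence for A, the left-most for B."""
    indices = [i for i, f in enumerate(assumptions) if f == formula]
    if not indices:
        raise ValueError(f"{formula} is not an open assumption")
    return indices[-1] if is_left_disjunct else indices[0]


a_index = active_occurrence(["A", "C", "A"], "A", is_left_disjunct=True)   # 2
b_index = active_occurrence(["B", "C", "B"], "B", is_left_disjunct=False)  # 0
```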

5.3 Permutation of Weakening

5.4 Lemma on Normalisation in IAL - Intuitionistic Affine Logic

The only difference between normalisation in IAL and normalisation in ILL is the existence of additional segments of max formulas, due to the general weakening rule W. These segments are reduced to minimal length, as for 1W, by permuting applications of W upwards in preparation for conversion. Subderivations and multisets of assumptions are not affected by such W-permutations. With these additional W-permutations, the normalisation lemma of ILL transfers to IAL.

5.5 Substitution in Contraction

If deduction \(\mathcal {D}\) is substituted into deduction \({\mathcal {D}}'\) at substitution formula A, where A is a contraction formula, the substitution is done twice, and the open assumptions \(B_i\) of \(\mathcal {D}\), which now occur twice, are contracted, as shown below. If contraction is applied several times to one formula, the substitution is done correspondingly many times.
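The bookkeeping of open assumptions under this substitution can be sketched with multisets. The sketch assumes, for illustration only, that the contraction formula A of \({\mathcal {D}}'\) results from contracting `copies` occurrences of A, and it tracks only multisets of open assumptions, not the deduction trees themselves. In the hypothetical function `substitute_at_contraction`, \(\mathcal {D}\) is substituted once per contracted occurrence, so every \(B_i\) then occurs `copies` times, and the surplus copies are removed by contraction.

```python
from collections import Counter
from typing import Tuple


def substitute_at_contraction(outer: Counter, a: str, copies: int,
                              inner: Counter) -> Tuple[Counter, int]:
    """Open assumptions after substituting D (assumptions `inner`, conclusion `a`)
    into D' (assumptions `outer`) at a contraction formula `a` obtained from `copies`
    occurrences, together with the number of added contractions C."""
    if outer[a] < 1 or copies < 2:
        raise ValueError("a must be an open contraction formula of D'")
    # D is substituted once for each contracted occurrence of a ...
    duplicated = sum(inner.values()) * copies
    # ... and each surplus copy of some B_i is removed by one application of C.
    contractions = duplicated - sum(inner.values())
    result = outer.copy()
    result[a] -= 1
    result.update(inner)  # after contraction, each B_i occurs as often as in D
    return +result, contractions


assumptions, added = substitute_at_contraction(
    Counter({"A": 1, "E": 1}), "A", 2, Counter({"B1": 1, "B2": 1}))
# assumptions == Counter({"E": 1, "B1": 1, "B2": 1}), added == 2
```

Manifold contraction on one formula corresponds to a larger `copies`; the resulting assumptions are the same, only the number of contractions grows.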

5.6 Lemma on Normalisation in IRL - Intuitionistic Relevant Logic

Normalisation in IRL differs from normalisation in ILL in that additional multiplications of subdeductions occur whenever, in the course of conversions, a subdeduction \(\mathcal {D}\) is substituted several times within applications of the contraction rule C. If \(\mathcal {D}\) contains a max formula Y of largest degree, Y is converted first.

5.7 Lemma on Normalisation in IL - Intuitionistic Logic

Normalisation in IL, i.e. ILL extended by the rules W and C, simply combines the normalisation techniques of IRL and IAL.

6 Concluding Remark

The author thanks an anonymous referee for helpful comments.

References

Avron, A. (1988). The semantics and proof theory of linear logic. Theoretical Computer Science, 57(2–3), 161–184.

Bierman, G., & de Paiva, V. (2000). On an intuitionistic modal logic. Studia Logica, 65, 383–416.

Gentzen, G. (1934, 1935). Untersuchungen ueber das logische Schliessen. Mathematische Zeitschrift, 39, 176–210, 405–431.

Girard, J.-Y., et al. (1989). Proofs and types. Cambridge: Cambridge University Press.

Girard, J.-Y. (1987). Linear logic. Theoretical Computer Science, 50(1), 1–102.

Heyting, A. (1956). Intuitionism. Amsterdam: North-Holland Publishing Company.

Lambek, J. (1958). The mathematics of sentence structure. American Mathematical Monthly, 65, 154–170.

Lambek, J. (1993). From categorial grammar to bilinear logic. In P. Schroeder-Heister & K. Dosen (Eds.), Substructural logics (pp. 207–237). Oxford: Clarendon Press.

Negri, S. (2002). A normalizing system of natural deduction for intuitionistic linear logic. Archive for Mathematical Logic, 41(8), 789–810.

Prawitz, D. (1965). Natural deduction. Stockholm: Almqvist and Wiksell.

Schroeder-Heister, P. (1984). A natural extension of natural deduction. Journal of Symbolic Logic, 49, 1284–1300.

Schroeder-Heister, P., & Dosen, K. (Eds.). (1993). Substructural logics. Oxford: Clarendon Press.

Schroeder-Heister, P., & Piecha, T. (Eds.). (2019). Proof-theoretic semantics: Proceedings of the third Tuebingen conference. University of Tuebingen.

Schwichtenberg, H., & Troelstra, A. S. (2000). Basic proof theory (2nd ed.). Cambridge: CUP.

Shapiro, S. (Ed.). (2007). The Oxford handbook of philosophy of mathematics and logic. Oxford: Oxford University Press.

Tennant, N. (2007). Relevance in reasoning. In S. Shapiro (Ed.), The Oxford handbook of philosophy of mathematics and logic (pp. 696–726). Oxford: Oxford University Press.

Troelstra, A. S. (1995). Natural deduction for intuitionistic linear logic. Annals of Pure and Applied Logic, 73, 79–108.

van Dalen, D. (2004). Logic and structure (4th ed.). Berlin, Heidelberg, New York: Springer.

von Plato, J. (2003). Translations from natural deduction to sequent calculus. Mathematical Logic Quarterly, 49, 435–443.

Zimmermann, E. (2007). Substructural logics in natural deduction. Logic Journal of the IGPL, 15(3), 211–232.

Zimmermann, E. (2010). Full Lambek calculus in natural deduction. Mathematical Logic Quarterly, 56, 85–88.

Zimmermann, E. (2017). Elimination by composition in natural deduction. Talk at the workshop Proof, Complexity and Computation, Goettingen.

Zimmermann, E. (2019). Proof-theoretic semantics of natural deduction based on inversion. In P. Schroeder-Heister & T. Piecha (Eds.), Proof-theoretic semantics. Proceedings of the 3rd Tuebingen Conference (pp. 633–650). University of Tuebingen.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zimmermann, E. Natural Deduction Bottom Up. J of Log Lang and Inf 30, 601–631 (2021). https://doi.org/10.1007/s10849-021-09329-8

DOI: https://doi.org/10.1007/s10849-021-09329-8