Abstract

As the COVID-19 outbreak remains an ongoing issue, there are concerns about the disruption it causes, the extent of that disruption, how long the pandemic will last, and how innovative technological solutions such as Artificial Intelligence (AI) and expert systems can help in dealing with it. AI has the potential to provide highly accurate insights that allow an organization to make better decisions based on collected data. Despite the numerous advantages AI may offer, its use can be perceived differently across society, and moral and ethical issues may be raised, especially with regard to accessing and exploiting public data gathered from social media platforms. To better comprehend these concerns and ethical challenges, utilitarianism and deontology were used as business ethics frameworks to explore the challenges of AI in society. The frameworks assist in determining whether a given deployment of AI is ethically acceptable. The paper sets out policy recommendations for public and private organizations that embrace AI-based decision-making processes, so that they can avoid data privacy violations and maintain public trust.

1 Introduction

The coronavirus outbreak may be the most pressing topic of discussion today. The COVID-19 pandemic concerns not only the health sciences; almost all sectors are affected by it in one way or another. Economically, it has significantly affected tourism, oil and gas, automotive, consumer products and electronics. The effects of outbreaks and pandemics are not spread uniformly across business and the economy: while some businesses have struggled, such as the airline industry, agriculture, health insurance firms, tourism and hospitality, others have to some extent prospered, such as Amazon.com, pharmaceutical companies manufacturing vaccines and antibiotics, mask producers, and the like. There are also disparities in effect between the wealthy and the working class, and particularly the poor, who have less access to health insurance and fewer resources to shield them from financial catastrophe. The pandemic may also be a period in which both public and private organizations should apply technological innovations, such as deploying AI, to combat its negative effects.

A wide range of areas could benefit from the adoption of AI. Disaster prevention, rapid reaction, and improved communication among the government, the public, business groups, and other community stakeholders through digital expert platforms are all examples of AI applications in combating the COVID-19 epidemic. AI and expert systems allow the flow of real-time information with advanced data analytics by linking physical and cyber networks (Trotta et al. 2020). For instance, in healthcare services, AI-enabled telehealth makes possible automatic acute care triaging and chronic illness management, including remote monitoring, preventative treatment, patient intake, and referral help (Jadczyk et al. 2021). AI has also played an important role in the development of various activities in both public and private organizations, including strategies that use social media as a platform to engage the public or customers effectively and efficiently.

Adopting AI in social media allows public organizations to gain insights into, and a better understanding of, people’s perceptions and behaviours, and to influence them proactively through their preferred communication platform, thereby increasing public awareness and knowledge about COVID-19. However, despite its advantages, the deployment of AI may raise several concerns, especially for social media users, in terms of privacy, consent, and discrimination or unequal treatment (Anshari and Sumardi 2020). These three ethical issues need to be addressed properly in order to protect the interests of all users and to mitigate the ethical shortcomings and negative impacts of AI in the public sphere as a whole (Zulkarnain et al. 2021).

This study examines the ethical impacts of AI on social media strategies, with emphasis on data privacy, users’ consent, and discrimination. Before turning to these ethical implications, a brief explanation is provided of the role and process of AI, with particular reference to its adoption as part of social media strategies.

The philosophical framework of ethics is adopted because it is critical in making sound, ethical decisions. This study draws on two ethics frameworks, utilitarianism and ethics of duties (deontology), and uses both theories as guidelines to examine and address the chosen issues related to AI. Grounding the investigation in these theories helps the study to articulate the issues better and to evaluate whether the application of AI to social media strategies is consistent with, or contradicts, proper ethical conduct. In addition, by employing these theories, the study arrives at several policy recommendations, which serve as options for resolving the issues that arise. This paper is organized as follows: Section two presents the literature review; Section three describes the study’s methodology; Section four discusses the findings; and the last section contains the concluding remarks.

1.1 Literature review

Nowadays, it is rare to walk anywhere without seeing someone glancing at a smartphone; practically everyone is engrossed in their device (Mulyani et al. 2019). People with smart mobile devices, as well as machines and robots, generate big data through sensors, satellite images, GPS signals, CCTV, digital pictures and videos, and transaction records (Trotta et al. 2018). Big data are growing not just as a result of the increasing number of smartphone users, but also as a result of AI’s ability to turn big data into big value (Ahad et al. 2017). AI cannot function well without big data. To derive value from big data, i.e. patterns, trends or behaviour, data analytics processing is required. Indeed, without the extraction and use of data via AI, big data would have no significant value (Anshari 2020; Razzaq et al. 2018).

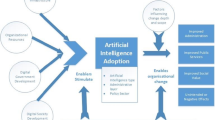

There are three types of data sources that can be used in a surveillance setting: directed, automated, and volunteered (Kitchin 2014). First, directed data are gathered by people on purpose, with obvious examples being CCTV systems and police searches of vehicle ownership records. Second, automated data are collected by AI or expert systems without people’s knowledge or consent. For instance, transactions with banks or consumer outlets, as well as communications through devices such as smartphones and other telephones, leave traces, and smart meters capture and send real-time data on energy consumption. Third, volunteered data are supplied by users who provide information on social media and similar sites, although social media users do not always think of their activities in terms of sharing data with third parties (Trottier 2014). These are the three scenarios for understanding surveillance data collection in this context. Figure 1 depicts how AI can compile data, extract information, enhance it, and provide recommendations for an organization using any data source, including social media data. Data can come from a variety of places (see Fig. 2), including what the general public likes on posts and pages, information sharing, post comments, and post views, which indicate what people are truly interested in, prefer, or want.

1.2 Artificial intelligence and social media

AI in social media has become an effective tool for advertising products and services. Advertisements work effectively on social media because the platforms hold an enormous amount of user information, which requires AI in order to understand users’ behaviour. Social media providers claim that users’ data are anonymous and are served according to demographic categories customised for advertisers. Advertisers can further tailor the information based on their branding objectives as well as the background of the users (Sharma 2018). Hence, AI has empowered advertisers to accurately target their advertisements at a specific group of people or customers who may be interested in their products and services (Marr 2015). Monnappa (2018) identified several ways in which AI-enabled social media gathers users’ data by analyzing their behavior: tracking cookies, which trace the sites users visit; facial recognition, which traces users across the internet and other profiles; tag suggestions, which suggest whom to tag in users’ photos; and the examination of users’ likes, which can predict their personal characteristics. Besides examining users’ behavior, social media may join forces with data brokers to collect more data (Hill 2013). When social media examines users’ behavior and collects their data, the main reason is often to sell such information to third parties or advertisers (Marr 2015).

To illustrate, in the case of Facebook, the company made a clear statement that it does not sell data to advertisers or anyone else (Gilbert 2018 cited in Business Insider). Rather, it allows advertisers to specify whom they want to reach, and Facebook then makes the placement. Although Facebook has consistently assured its users that their data were shared with advertisers only with the users’ approval, through agreement to the terms and conditions, privacy issues have still arisen among its users. Beninger et al. (2014) showed that accepting the terms and conditions does not necessarily amount to consent, since users typically sign or click ‘agree’ to open their accounts without fully reading these complex legal documents. Marr (2015) mentioned that Facebook had been accused of conducting an unethical psychological experiment on its users without their consent: it tried to change users’ moods by displaying specific posts, with either a positive or negative tone, and then measured the users’ responses. Apart from psychological research, another Facebook privacy scandal is linked to Cambridge Analytica (CA), a British political consulting firm, which used Facebook users’ data without their consent in an attempt to influence elections (Anderson 2018). This firm obtained Facebook users’ data through an application in order to construct psychological profiles that could be used for advertisements customized to Facebook users based on their online activity. Hence, questions have arisen about Facebook’s ability to protect users’ privacy, as well as about its misuse of personal information to fuel its profitable advertising business (Graham 2018).

Similarly, many government agencies are making efforts to develop effective mechanisms that empower the general public to participate in public services (Anshari et al. 2018). In traditional government practice, members of the public are perceived as recipients of services and hardly participate in the development of public services. Yet public participation is considered essential for good governance (Anshari et al. 2021). Social media has therefore evolved from connecting people for social interaction to serving a variety of uses and purposes, including acting as a platform for increasing public participation, digital business, and stakeholders’ input into policymaking. With the various social media platforms available today, such as Facebook, LinkedIn, Instagram, Twitter and others, people can take advantage of their functions to share or deliver messages and up-to-date information quickly, effectively and efficiently. For instance, public agencies use social media for public relations and as a source of information about their public service delivery. In fact, AI-enabled social media platforms can become an effective and efficient tool for public agencies to encourage public participation, innovative collaboration, and transparent management through the process of exchange. AI enables multi-channel interactivity that connects all stakeholders, such as the public, private organizations, government departments and NGOs, for the optimum benefit of all.

As the number of social media users increases each day, social media becomes a communication platform that allows organizations to interact directly with the public and to promote strategies in the public interest, which in turn boosts public acceptance and awareness and enhances reputation, recognition and image (Ahad and Anshari 2017). Nonetheless, the challenge for public agencies is to recognize that, although social media can reach a broad demographic, it cannot appeal to everyone, or at least not to everyone in the same way. This is because the public is numerous and varied in its requirements, preferences and practices (Karnik 2018; Anshari et al. 2019). Hence, public agencies need to understand their audiences and their behavioural patterns thoroughly beforehand, in order to identify and classify the targeted segment on which they will focus or, simply, to determine the public profiles that the organization can best serve. Figure 2 shows AI as a tool for data analytics that is not only quick but also cost effective, and that is able to turn large amounts of data into useful insights in full context for making better predictions and more informed decisions.

1.3 Artificial intelligence and decision making

The ultimate goal of machine ethics is to develop a machine that operates according to an ideal ethical principle or set of principles and is guided by those principles when deciding between alternative courses of action. Any organization, public or private, requires reliable data to make effective and efficient decisions on a daily basis (Anderson and Anderson 2007). Artificial Intelligence is a digital phenomenon involving the capture, use, analysis and storage of enormous amounts of data created by humans and devices, which can facilitate effective and efficient decision making (Richards and King 2014). Previously, generating and analyzing data was a time-consuming task; however, as the amount of data grew continuously, a new era of AI, expert systems, IoT, and big data began in order to meet the demands of gathering and analyzing these larger data sets (Richards and King 2014), and one of the data sources is social media (Zulkarnain and Anshari 2016). Figure 2 illustrates a scenario in which AI captures a massive quantity of data from any data source, whether structured or unstructured, such as users’ profiles and every interaction on social media platforms, including likes, comments, shares, followers, profile visits, search history, and purchase history (Almunawar et al. 2018). AI is able to extract and learn more than enough information about users, beyond the publicly available information on their profiles such as age, gender, race and location. Once these factors have been studied, the collected data are segmented with the help of AI into focused and precise subsets of the larger audience that are most likely to respond to what the business offers. Accurately identifying the targeted audience increases the chances of convincing potential audiences or customers (Almunawar and Anshari 2014).
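
The segmentation step described above can be illustrated with a minimal sketch. The field names (`likes`, `comments`, `shares`), the weights, and the threshold are hypothetical values chosen for illustration only; they are not taken from any platform or from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class UserActivity:
    """Hypothetical record of one user's interactions on a platform."""
    user_id: str
    likes: int
    comments: int
    shares: int


def engagement_score(user, w_like=1.0, w_comment=2.0, w_share=3.0):
    """Weighted sum of interactions; the weights are illustrative assumptions."""
    return w_like * user.likes + w_comment * user.comments + w_share * user.shares


def segment(users, threshold=10.0):
    """Split users into two audience segments by engagement score."""
    segments = {"high_engagement": [], "low_engagement": []}
    for user in users:
        if engagement_score(user) >= threshold:
            segments["high_engagement"].append(user.user_id)
        else:
            segments["low_engagement"].append(user.user_id)
    return segments
```

Even this simple rule shows why the ethical questions raised in this paper matter: the segments are derived entirely from behavioural traces that users may not realise are being scored.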

Traditionally, businesses kept track of customers’ behavior by means of interviews or surveys. Marketers also used to define their most valuable customers as those who spent the most money on their products, and assumed that people who shared characteristics with current customers would most likely exhibit similar interests. In contrast to these traditional approaches, AI can today not only identify customers specifically, based on details extracted from their online interactions and engagements, but also predict future trends and patterns that can result in higher profits for the businesses that use it. As mentioned by Gokalp et al. (2016), the competitive business environment forces businesses to process high-speed data and integrate valuable insights and information into their production processes. By deploying AI in their business strategies, organizations can therefore gain insights that guide them to meet customers’ expectations, improve competitive advantage, and make proactive, data-driven decisions.

During the COVID-19 outbreak, AI deployment provides various benefits, including making information available to the public in real time, improving public services, and enabling the public to contribute input and feedback to the public policy delivery process. Businesses may benefit from AI’s ability to extract data and share real-time data with the public, since this allows quick judgments that help to improve economic growth by speeding up corporate activities. In addition, as shown in Fig. 2, AI should ideally be able to filter out noise and hoax news. Hoax, fake, and unverified news have become rampant in the past few years and appear to be flourishing even more during the COVID-19 pandemic, as such information can easily spread through social media with a click of a ‘share’ button. Ilahi (2019) stated that the impact of hoax news is strongly supported by the massive usage of social media and instant messenger services (IMS). Social media and IMS such as WhatsApp and Telegram can easily spread unverified news and messages relating to COVID-19 without recipients knowing the original sender or the authenticity of the information shared. Many recipients tend to spread the news without even considering its reliability or authenticity. Furthermore, the speed at which news spreads increases dramatically because IMS features can cross-post messages, forwarding the same unverified content across multiple chat groups in text, audio, or video formats. Such amplification even goes as far as using deepfake technologies to create content that is misleading yet convincing. Hoax news has a few characteristics. For instance, Kumar et al. (2016) highlighted that fact-based false information or hoax news tends to have longer textual content, which can generate more confusion among readers, but fewer authentic references from the Web, while the information itself, with its strong and convincing claims, looks rather genuine. Such information is also often created by recently registered ‘new’ accounts that show a lack of editing skills. However, readers could also wrongly assume that non-hoax news is a hoax, since some of the news is shared or created by the same editor or source (Kumar et al. 2016). Hoax news also spreads deeper and quicker, both within one platform and across multiple platforms, but such news also gets deleted quickly once debunked (Kumar et al. 2016). This does not, however, resolve the issue of the wrongful and unethical spread of unverified and false information once such information has been consumed by readers, and even stored as data for reference on personal handheld devices and computers. Finally, search engines and website content authors are not always reliable in quickly deleting debunked news, and may not even bother to retract such information for fear of losing their credibility among followers.
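
As a rough illustration of how the characteristics attributed to Kumar et al. (2016) might be turned into a screening aid, the sketch below flags an item on three signals: long text, few web references, and a recently registered author account. The `Article` fields and all threshold values are hypothetical assumptions made for this example, not figures reported in the cited work, and a flagged item is only a candidate for human review.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical metadata about one news item."""
    word_count: int
    web_reference_count: int
    author_account_age_days: int


def hoax_signals(article, long_text_words=1000, min_references=3, new_account_days=30):
    """Return the names of the warning signals an article triggers.

    All thresholds are illustrative assumptions. A non-empty result does
    not prove the item is a hoax; genuine news can trigger these signals too.
    """
    signals = []
    if article.word_count >= long_text_words:
        signals.append("long_text")
    if article.web_reference_count < min_references:
        signals.append("few_web_references")
    if article.author_account_age_days <= new_account_days:
        signals.append("new_account")
    return signals
```

Because genuine news can share these surface features, a filter of this kind risks the misclassification of non-hoax news noted above, which is one reason automated filtering needs human oversight.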

1.4 Artificial intelligence & ethical challenges

As asserted by Jonathan and Neil (2014) in Forbes Magazine, the AI revolution creates many ethical issues concerning people’s privacy, confidentiality, transparency, and identity. AI-enabled applications work together to reveal valuable insights to the organization, either public or private, to which the data belong. Ethical violations may occur through improper use of data extracted by AI. The most common question arising today is to what extent AI is acceptable to society and meets current legal and ethical guidelines. Failure to meet legal and ethical criteria means that AI usage puts society’s values at risk for the sake of innovation and expediency. Apart from that, any unethical business activity conducted by a company will in turn put at risk its reputation and its relationship with customers, which further affects its revenues in the long run (Samantha 2016).

The biggest challenge in deploying AI-enabled applications relates to privacy concerns (Kumari 2016). Data privacy implies that there are boundaries that should not be crossed when processing the data acquired through AI, so as to protect the sensitive information the data contain. However, this requirement is challenging to meet, as it is nearly impossible to block sensitive content and prevent unwanted disclosure of the data (Kumari 2016). To tackle these security issues, it is crucial to consider the parties involved in the AI process: the data provider, data collector, data miner and decision maker.

Furthermore, AI is being used for making effective decisions (see Fig. 2), which may also intensify existing systems of racism, discrimination, and inequality. This point is supported by research conducted by Sweeney (2015), which revealed elements of discrimination against certain races when searching for people’s names via the Google search engine. Names associated with people of black ancestry were more likely than those of other races to return arrest-related content, regardless of whether the person had actually been arrested. This could lead to unjust and false perceptions of a person and their ethnic group. On top of that, in the business world, companies conducting unethical business also aggravate existing imbalances and disparities. Price discrimination and other exploitative marketing practices towards certain ethnic groups are made easy through algorithmic profiling (Newman and Nathan 2014).

Additionally, AI involves using data that are posted publicly on the Internet by many people who might not have given their full consent, or who were not fully aware that their information would be used by third parties for other purposes (Farfield and Shtein 2014). A massive amount of data is created: according to some estimates, over 20 petabytes of data are processed in a day by Google (Scott and Bracetti 2013), and each petabyte is equal to 250 billion pages of text (Vance 2012). Farfield and Shtein also stressed how commercial entities use End User License Agreements as a way to obtain users’ “consent”. However, they argued that this method is not tenable or ethically sound, owing to the vague and hidden language such agreements use. End User License Agreements could be improved by stating that users’ data may be used for research purposes and by disclosing which companies are using the data and for what motives.
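
To give a sense of scale, the two estimates quoted above can be combined. The short calculation below simply multiplies the cited figures (20 petabytes per day and 250 billion pages of text per petabyte); the variable names are illustrative, and the result is only as reliable as the estimates themselves.

```python
# Figures as cited in the text (Scott and Bracetti 2013; Vance 2012).
PETABYTES_PER_DAY = 20
PAGES_PER_PETABYTE = 250_000_000_000  # 250 billion pages of text

# Equivalent number of text pages processed per day under these estimates.
pages_per_day = PETABYTES_PER_DAY * PAGES_PER_PETABYTE
print(f"{pages_per_day:,} pages of text per day")
```

Under these estimates the daily volume is equivalent to about 5 trillion pages of text.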

1.5 Ethical framework

Ethics is a complex concept to portray and understand. It is important to clarify what ethics is before considering the vital role it plays in an organization. Merriam–Webster (2018) defined ethics as “the discipline dealing with what is good and bad and with moral duty and obligation.” People have different views of what is considered right and wrong or good and bad, and in this regard ethics becomes difficult to interpret and adhere to (De Cremer and de Bettignies 2013). Oates and Dalmau (2013) described ethics as the body of knowledge related to the study of universal principles that distinguish right from wrong. Racelis (2010) further explained that ethics contrasts with morality: morality mainly deals with the principles of right and wrong, while ethics concentrates on the behavioral standards that are generally accepted by a large group. It is therefore important to note that ethical behavior focuses on what is favorable for others rather than for oneself. Mihelic et al. (2010) added that ethical behavior is both legally and morally acceptable to the wider community. Business ethics, in turn, is a branch of applied ethics pertaining to the diverse business activities of human beings (Keller-Krawczyk 2010). The capability to understand and implement good ethical principles in the business context is a means of developing a substantial organizational culture nurtured by ethical principles.

Christensen (2014) classified ethics into two ways of looking at the morality of people making (business) decisions: consequentialism and non-consequentialism. Consequentialism focuses on the outcome of the decision and encompasses the philosophies of egoism and utilitarianism (Bivins 2009). As stated by Ferrell et al. (2015), egoism defines right or acceptable behavior as that which maximizes an individual’s self-interest, whereas utilitarianism bases decisions on their consequences and follows the greatest happiness principle. For example, harming others is acceptable if it increases the well-being of a greater number of people (Conway and Gawronski 2013). Non-consequentialism, on the other hand, focuses on the conduct of the decision and consists of the theories of ethics of duties and of rights and justice. The difference between the two is that, under ethics of duties, humans are responsible for making the decision that is considered right rather than the one offering the best outcome, while under rights and justice a decision is ethical if it accords with the legislation given to society (Crane and Matten 2010).

According to Morrison and Mujtaba (2010), organizations that engage in unethical practices and are managed by unethical leaders could reduce and destroy shareholder value as a result of the high expenditure associated with unethical actions, such as fines and penalties, audit charges, and costs associated with loss of customers and reputation, whereas leaders in profoundly ethical corporations are able to raise shareholder value. Furthermore, Daft (2004) discussed the concept of managerial ethics, a crucial aspect of business ethics that concerns the decisions, actions and behavior of managers and whether they are considered right or wrong. De Cremer and de Bettignies (2013) explained that in the business environment there are many inherent expectations and norms that encourage managers, and eventually push them, to cross boundaries and act unethically. However, this is not always the case, and it is therefore essential for managers to comprehend the distinction between laws and ethical standards. Managers can focus on reaching good ethical resolutions for the organization provided that they have a fair understanding of what is recognized as proper ethical conduct.

Figure 3 shows that theories of ethics are divided into two groups, consequentialism and non-consequentialism. Under these theories, there are different approaches that can be used to assess the ethical dimensions of a given situation. In this study, however, we focus on only two concepts, namely utilitarianism and ethics of duties (deontology), to evaluate the ethical principles in using AI for decision making. These concepts are outlined in Fig. 3.

The utilitarian approach judges an action by its utility or usefulness. Utilitarian theory is also known as teleology or situation ethics. In general, this approach deals with the consequences of actions, which are judged right or wrong according to the balance of their good and bad consequences. Utilitarianism defines “good” as happiness or pleasure and “the right” as maximizing the good and reducing the amount of harm for the larger group of people. Two underlying principles drive utilitarianism: first, the greatest good for the greatest number, which means that the welfare of society takes precedence over that of individuals; and second, the end justifies the means. Utilitarians apply different criteria to evaluate the morality of an action: either each individual act must be evaluated to decide whether it generates the greatest utility for the greatest number of people (act-utilitarianism), or general rules should be adopted to decide which conduct is best (rule-utilitarianism). Utilitarianism has both advantages and disadvantages. The advantage is that the consequences of actions are taken seriously, whereas the disadvantage is that the concern with aggregate happiness neglects the worth of the individual, who, although in the minority, may deserve help. Furthermore, the utilitarian approach can ignore justice, and its results are uncertain: despite its immediate appeal, utilitarianism misfires because predictions of outcomes are never precise. The consequences of opinions and actions are crucial; however, consequentialism used as the modus operandi (method of operating) of moral decision making oversimplifies by disregarding important features of moral frameworks. To some extent, consequentialism can provide what seems to be a reasonable course of action; nevertheless, it cannot guarantee the rightness of any action.
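
The act-utilitarian criterion described above can be made concrete with a toy calculation. This is a deliberately simplified sketch: it assumes that the effect of an action on each person can be expressed as a single number, which is itself a contested assumption, and the option names and values are hypothetical.

```python
def net_utility(effects):
    """Sum per-person effects (positive = benefit, negative = harm)."""
    return sum(effects)


def act_utilitarian_choice(options):
    """Return the name of the option with the highest aggregate utility."""
    return max(options, key=lambda name: net_utility(options[name]))


# Hypothetical effects on four people: three benefit slightly from a
# deployment, while one person in the minority is seriously harmed.
options = {
    "deploy_targeting": [2, 2, 2, -5],
    "do_not_deploy": [0, 0, 0, 0],
}
choice = act_utilitarian_choice(options)
```

In this example the aggregate favours deployment even though one person bears a serious harm, which illustrates the criticism that a concern with aggregate happiness can neglect the individual in the minority.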

The ethics of duties approach, also called deontology, is associated with the German philosopher Immanuel Kant. It is a system of ethical decision making based on moral rules, and it places a higher value on duty or obligation without consideration of consequences. Deontological philosophy rejects the view that the end justifies the means. It holds that human beings have the capacity to ascertain which actions are morally right and wrong through the use of reason and as a result of virtuous intention. Unlike utilitarians, deontologists argue that there are some things people should not do, even to increase utility. Kant’s famous formula for discovering ethical duty is known as the categorical imperative, which states: “Act only according to that maxim by which you can at the same time will that it should become a universal law.” Accordingly, individuals should act only in ways in which they would want everyone else to act at all times. There are two types of deontology: act-deontology and rule-deontology. Act-deontology takes the individual act as the appropriate basis on which to judge morality, while rule-deontology holds that rules of conduct determine the rightness or wrongness of an act. Even though deontology emphasizes the role of duty and respect for people, this theory also has limitations and problems in practice. The concept of ethics of duties underestimates the significance of satisfaction for the greatest number of people and of social utility. Moreover, its application is occasionally impractical and can lead to unfavorable consequences because of the rigid application of principles. What follows are the ethical issues of AI pertaining to the implementation of marketing strategy through social media; each issue is analyzed individually in order to arrive at ways of tackling it. Hence, the ethical frameworks described above can be used to craft policy recommendations that are relevant to the issues linked with AI, as the frameworks provide ethical rules and principles that enable people to deal with moral problems. Figure 4 shows ethical principles and social marketing in AI strategies.

1.6 Kant and Mill on ethics

Kant’s view on ethics, which is underpinned by the notion of the categorical imperative, holds that one should respect the humanity in others and that one should act only in accordance with rules that could hold for everyone (Kant and Paton 1948). It implies that all rational creatures are bound by the same moral law. With regard to how AI will replace human functions and the human mind with technology that engages in moral reasoning in order to act ethically, Kant’s theory provides an inclusive approach for assessing the ethical conduct of individuals and states, and it incorporates justifications for moral and legitimate responses to immoral conduct. Although AI does not possess the human capacity for rational thought, the human agents who design, develop, test and deploy such technology can determine the rules to be programmed into it to ensure ethical use and moral conduct, and can make those rules public and shareable. Nevertheless, the broad purposes and uses of AI, such as in the military sphere, may lead to competing rules that may or may not be capable of universalization. Mill’s (1993) view on ethics, on the other hand, is based not on rights or ethical sentiments but on the utilitarian principle that actions are right in proportion as they tend to promote overall human happiness. John Stuart Mill’s text, first published in 1861, is regarded as one of the most prominent works advocating utilitarianism as the foundation of morals. The principle of utilitarianism is to promote the maximum level of satisfaction or general happiness among people. Mill provides a clear and unambiguous meaning of happiness, stating, “By happiness is intended pleasure, and the absence of pain; by unhappiness, pain, and the privation of pleasure” (Mill 1863; p.10). Mill was critical of Bentham’s “theory of human nature” and departed from Bentham’s work in holding that some kinds of happiness or pleasure are of higher quality, more desirable and more valuable than others, and thus should be valued more.
Therefore, he claimed that it would be rational to sacrifice a lower level of happiness or pleasure if doing so means gaining a higher level of pleasure. Mill claims that moral wrong is connected to punishment and that an act is morally wrong if it is blameworthy, whether by law, by the opinion of one’s fellows or by the individual’s own conscience (Macleod 2016). This view focuses on the consequences of actions and the justification of punishment, and is more suited to the context of human-AI partnerships. To have morally acting AI is to program ethical principles into the machines, but whether or not happiness can be examined as a matter of goodness remains questionable (Serafimova 2020). To distinguish ethical from unethical usage of AI, Mill’s utilitarian theory is relevant for creating an ethical tool that places uses with good consequences for society on the ethical side and uses with bad consequences for society on the unethical side.

1.7 Methodology

The study's primary purpose is to highlight and analyze published work in the fields of AI and ethical decision making in order to provide new insights into the current level of readiness of companies and policymakers in particular. To accomplish the study objectives, the review follows the methodology for conducting effective literature reviews established by Rowe (2014), whose five components were used in this study, as illustrated in Fig. 3. As indicated previously, the subject of artificial intelligence is rapidly expanding, and research is being performed to address the needs of policy construction. As a result, the study samples only full research publications from the research domain (excluding research notes, brief communication papers, editorial notes, industrial whitepapers, and technical and non-academic documents). Sampling is the most critical part of this investigation. To obtain the sample, a thorough top-down search using a five-step approach was undertaken across reputable databases such as Springer, ScienceDirect, Wiley, Scopus, NCBI, IEEE, and ACM. The first phase collected articles through keyword searches such as "AI," "Decision Making," and "Ethics." This search pattern generated a large number of articles, which had to be filtered to obtain a more precise set. After the abstract, keywords, title, and body of each article were examined, a new list was created. Following that, samples containing information pertaining to the case scenario of prototype conceptualisation were included, bringing the total number of articles on the list to 70. Finally, a content analysis was conducted on the articles that were gathered and determined to be highly relevant to the study.

1.8 Lack of privacy protection

Protecting users’ private data or information on social media is an essential component of maximizing their safety and minimizing harm from unauthorized disclosure, manipulation, etc. A violation of privacy constitutes a risk and thus a threat to security. For example, a data breach in an organization could damage the organization’s performance and reputation and reduce customers’ trust. Privacy issues in AI can therefore be understood by applying the ethical theories mentioned above, namely utilitarianism and deontology.

Deontology, or the ethics of duties, is based on moral rules that focus on doing what is right without considering the consequences. When information privacy or data privacy is analyzed with this ethical method, a claim to a right to information privacy must have corresponding duties. Thus, to protect the privacy of information, the duties to be determined and imposed depend on the source of the right to privacy. According to Gilbert (2012), there are four primary sources of rights: human rights, position rights, legal rights and contract rights. Declaring data privacy a human right is difficult because it would require regulation and legislation, which would make it a legal right as well. If data privacy were a contract right, it would apply only in the particular cases where a contract exists. Lastly, a position right is also not a suitable basis for data privacy protection. Hence, this reasoning indicates that any right to data privacy would need to be formed as a legal right; however, this requires more legislation and regulation, and imposing more laws does not in itself fulfill their purpose effectively. Therefore, protecting data privacy must be a duty of the people, who should create a suitable framework to protect these rights. In the Cambridge Analytica case, for example, Facebook responded by outlining a new privacy policy that explained data collection more clearly to its users (Anderson 2018). This shows that the protection of data privacy becomes the moral duty of Facebook, regardless of the consequences of these decisions for the company’s performance.

In contrast to deontology, utilitarian theory judges whether an action is right or wrong by considering its consequences and whether it produces the greatest good for the greatest number. The data privacy violations at issue are privacy-concern data mining (PCDM) and the sale of users’ information to third parties. Those affected by these violations are mainly the users of social media. Although there are short-term benefits, in that the company can gain more profit by selling the data and the third parties can target their customers more precisely, the downside lies in the long-term effects: customers or users of social media lose their trust, and the company may face financial penalties, which damages its reputation and credibility. Customers are important stakeholders, and the business cannot be sustained without them; even if the company gains benefits from third parties, those third parties cannot survive without customers to target either. For example, after the story of Cambridge Analytica broke, Facebook’s stock fell about 14% and some advertisers and third parties even left Facebook (Anderson 2018). This illustrates that instead of promoting the most overall happiness, privacy violation harms people as a whole. The decision making in this case did not follow a utilitarian approach. Had the company weighed the cost of these consequences and not violated its customers’ data privacy, it might not have incurred greater losses such as compensation payments in lawsuits and the loss of customers’ confidence.
On the other hand, privacy violations could be managed if the company’s privacy policy is transparent: stating clearly how users’ data will be used, giving users options to manage their data profile, and keeping their information anonymous when it is given to third parties.
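The idea of giving users control over their data profile and keeping their information anonymous before it reaches third parties can be sketched in a few lines of Python. This is only an illustrative sketch and not a method described in the paper: the field names (`user_id`, `interests`, `consented_to_sharing`), the secret key and the helper functions are all hypothetical. It also uses keyed hashing, which strictly speaking yields pseudonymised rather than fully anonymous records, so re-identification from the remaining attributes may still be possible.

```python
import hashlib
import hmac

# Hypothetical secret held only by the company; never shared with third parties.
SECRET_KEY = b"replace-with-a-secret-kept-by-the-company"


def pseudonymise(user_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed SHA-256 digest."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_third_party(profiles):
    """Keep only users who opted in, and strip their direct identifiers."""
    shared = []
    for profile in profiles:
        # Users who did not consent are excluded from sharing entirely.
        if not profile.get("consented_to_sharing", False):
            continue
        shared.append(
            {
                "user": pseudonymise(profile["user_id"]),
                "interests": list(profile["interests"]),
            }
        )
    return shared


# Hypothetical example data.
profiles = [
    {"user_id": "alice@example.com", "interests": ["travel"], "consented_to_sharing": True},
    {"user_id": "bob@example.com", "interests": ["cars"], "consented_to_sharing": False},
]
shared = prepare_for_third_party(profiles)
```

In this sketch only the consenting user's record is passed on, and it carries a digest in place of the e-mail address. Defaulting to exclusion when no consent flag is present reflects the opt-in reading of informed consent discussed in this paper.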

1.9 Discrimination and unequal treatment

Unethical organizations that think only about maximizing their profits tend to be unjust to certain groups of people by charging them different prices for the same goods or services. In the era of AI, an organization is able to categorize individuals based on characteristics such as race, ethnicity, religion, interests, hobbies, spending habits and countless other details of their private lives (Gumbus and Grodzinski 2015). Such information is derived from their social media using AI, which allows the company to determine what type of person it is dealing with. Knowledge of an individual’s income, credit rating and history also helps the company to see how financially stable the customer is. Because the company’s only concern is making as much profit as possible, without considering how its decisions may negatively affect other people, this practice is consistent with neither of the frameworks described in the previous section.

According to utilitarian moral theory, the morality of an action is calculated by totaling up the happiness or well-being created by that action. An action is considered right if it results in more happiness than suffering and pain. Discrimination occurs when a certain group of people receives different treatment from others, resulting in disparate impact (Yu et al. 2014). Banks and insurance companies that engage in data analysis are able to detect the characteristics of their customers, which allows them to impose higher interest rates and other less favorable terms on low-income communities and communities of color.

From a deontological viewpoint, the act of discriminating against a certain group of people is intrinsically wrong, and we have a duty not to do so because everyone deserves to be treated equally. According to the Human Rights Act, it is illegal to discriminate against people on the basis of their sex, race, color, language, religion, political or other opinion, national or social origin, association with a national minority, property, birth or other status. The absence of discrimination helps to bring society together and create harmony within it. A person’s personal characteristics are irrelevant in determining whether they have a right to some social benefit or gain, so discriminating on that basis is unjust.

1.10 Uninformed consent

Data that have been gathered and assembled from various sources may produce information that is not appropriate to be shared with or known by other people, as it may invade the person’s privacy and autonomy. Therefore, it is important to obtain consent from the data generator beforehand. Obtaining consent is relatively easy and is usually done through click-sign terms and conditions forms. However, this practice contradicts the concept of informed consent, as users tend to click the agree button immediately upon seeing the agreement form without a clear understanding of what they are agreeing to. This is because of the length of the form and the complicated and technical language used in it. It is essential for users first to read all the terms and conditions to gain a full understanding of how their data may be collected, stored, used and shared by other people.

Businesses are able to target their customers through AI-enabled data analytics based on the data that customers share on social media. According to Newman (2015), users of social media tend to be honest in revealing their preferences, likes and dislikes through the pages they like and the posts they share. Such information is beneficial to the company, as it brings insights into what customers demand and need. Companies are able to target the individual consumers who are most likely to buy their products and services through advertisements linked to their social media accounts. Customers, in turn, gain access to information that they may not have known about, receive promotional offers and enjoy other additional benefits. Companies can also communicate with their customers by responding to their comments, addressing and fixing customer issues when they occur, and getting to know their customers better. This helps to fulfill customers’ expectations and create great experiences for them. On this view, the amount of happiness created by using data aggregated from social media to provide meaningful information outweighs the harm. It also reflects the second principle of utilitarianism, under which morally wrong actions are sometimes necessary to achieve morally right outcomes. Analyzing an individual’s data without obtaining their proper consent, or without their knowing what the data are used for, is considered morally wrong. However, as long as the information produced does not harm the individual or expose their privacy, and it creates greater happiness, the action is considered acceptable.

On the other hand, under some circumstances, users are required to link their social media accounts, such as Facebook, Google+ or Twitter, to verify themselves before accessing a certain website or application. Without linking these accounts, users are unable to access the websites, so any approval they give is effectively forced. In other words, users who give a website access to their social media accounts are placing their trust not only in the website but also in the third parties embedded in it. Whether the use of AI is right or wrong should be judged by whether the total positives that an action creates outweigh the negatives.

1.11 AI, COVID-19 and policy recommendations

To fully exploit the potential of AI, there is a need to tackle the ethical issues mentioned thus far. In an attempt to mitigate the ethical drawbacks and moral dilemmas that AI imposes, we propose several recommendations for consideration. First, to overcome data privacy violations and to retain social media users’ trust, an organization might wish to consider a utilitarian approach in its decision making process. In this situation, transparency plays an essential role in reducing data privacy concerns. Users or customers are obliged to trust the company with their data when they purchase products or use the services provided, yet very little information is often provided regarding what data is being collected, how it is being used, and who has access to it. Thus the issue of informed consent must always be prioritised to address the moral hazards associated with unethical business practices, including the use of loopholes as a means to escape rebuke. Organisations may want to rely on tools like the End User Licence Agreement to secure users’ consent, though this remains problematic. Certainly, placing the onus on users to read complex, jargon-laden legal documents is already receiving pushback, particularly in instances where users are prompted to make quick decisions to agree to terms and conditions during sign-up or registration for a service. During these COVID-19 times, health applications are often used by companies that act as government proxies to collect vast amounts of health and private data about individuals and even their household or family members. Individuals with such apps downloaded onto their smartphones have arguably been treated like automaton nodes: data points consisting of faceless end-users that are trackable, traceable, and monitored for long periods of time (e.g. in the use of contact tracing for COVID-19 patient exposure).
Though such data collection is done for the ‘greater good’, public rebuke regarding the loss of privacy, rights, freedom of movement, and liberty has been part of the discourse on COVID-19 and AI usage in the past 2 years.

Different datasets that would not initially be considered to raise privacy concerns could be merged in ways that then threaten the user’s privacy. Issues of consent may also arise when users are inadequately informed of future uses of the collected data and of the participation of third parties without proper authorization. Thus, to avoid these problems, transparency should be applied at all stages, from collecting to processing the data. Such timely and accurate disclosure will increase users’ awareness. Moreover, a privacy and disclosure policy may need to be put in place to notify users about the collection and intended use of the data and to protect their private identity. It is high time that governments play their part by introducing regulations that ensure companies abide by ethical practices in businesses related to AI.

Furthermore, in matters of consent, users should also be given proper and clear guarantees that data will not be sold to third parties without informed consent. To be perceived as ethical, the company must ensure that users are granted control over their personal data, for example by providing them with options to opt out of data collection. Facebook, for instance, gives users a choice to protect their information through privacy settings, with which they can limit access to their Facebook profile and customize their displayed content (Moreno et al. 2013). In addition, to repair the damage caused by privacy violations, the deontological approach could also be considered. It is the moral duty of the company to respect users’ privacy and make sure they feel comfortable sharing their personal information. There is a need to maintain an equilibrium of trust between utilizing users’ data and limiting data privacy concerns. The critical way forward is to clarify what users are ‘signing up for’, perhaps even at the cost of adding extra layers of classical red tape, bar the complicated legal jargon. This nudges users towards consciously and knowingly taking steps to become informed before giving consent to the use of their data. Ultimately, it is not simply a matter of securing user data protection, but of securing protection that is effective and consensual and that protects both parties. During these pandemic times, a great deal of personal data is transmitted, especially on social media, IMS and even educational platforms, yet once a user has registered for a service, users rarely want to spend time poring over the privacy policies to which they have agreed.
As marketers increasingly use more advanced AI technologies, including those of the newly renamed Facebook company, Meta, and its metaverse, users will be immersed in a virtual universe with 3D experiences where the digital and physical worlds converge. Governments, therefore, need to catch up with these advancements, particularly by creating guidelines and even regulations that could govern the ways in which AI-enabled devices, virtual reality headsets, and the like are used. The liberalisation of AI, software, hardware, and content should not come at the cost of misuse and manipulation by profiteering companies.

On the subject of discrimination, no distinction should be made between people, so that everyone can claim their rights regardless of sex, race, language, religion, social status, etc. The challenges of AI are still attributable to human decisions to discriminate on the basis of data correlation. The data themselves do not lead to this sort of discrimination; rather, it is the algorithms, which might be programmed by someone to discriminate based on correlations that are possibly inaccurate and ethically wrong. Detecting discrimination in algorithms is very complex and not an easy task. Nonetheless, despite the complexity, algorithms need to be audited to show that they are lawful and to eliminate biases. Also, to be ethical, the social media industry should adhere to any Acts related to the issue, for example, the Human Rights Act, which helps to create a society in which people’s rights and responsibilities are properly balanced and protects them from violations. Once this is enforced, both of the aforementioned frameworks are satisfied. When there is no more discrimination, the outcome is ethical from the utilitarian standpoint because the course of action brings the greatest pleasure to society and overrides the company’s own desire to earn more profit. From the deontological standpoint, it is the duty of the company to prevent any discrimination and treat people equally; hence, this conduct is considered morally right.
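One simple way to begin auditing an algorithm for the disparate impact discussed above is to compare the rate of favourable outcomes across groups. The Python sketch below is an illustration only and is not part of the reviewed literature: the group labels and decision data are hypothetical, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment selection guidelines. A ratio below the threshold does not prove unlawful discrimination; it only flags a decision process for closer human review.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes for each group.

    `records` is an iterable of (group, favourable) pairs, where
    `favourable` is True when the algorithm gave the better outcome
    (for example, the lower interest rate).
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favourable[group] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records, protected, reference):
    """Ratio of the protected group's selection rate to the reference group's."""
    rates = selection_rates(records)
    if rates[reference] == 0:
        raise ValueError("reference group has no favourable outcomes")
    return rates[protected] / rates[reference]


# Hypothetical audit log: group "A" receives the favourable outcome 8 times
# out of 10, group "B" only 5 times out of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

THRESHOLD = 0.8  # assumed "four-fifths" rule of thumb, not a value from the paper
ratio = disparate_impact_ratio(decisions, protected="B", reference="A")
flagged = ratio < THRESHOLD
```

With these hypothetical figures the selection rates are 0.8 and 0.5, so the ratio falls below the assumed threshold and the process would be flagged. A fuller audit would also need to examine the correlations the algorithm relies on, since the ratio alone says nothing about why the outcomes differ.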

In general, all organizations should apply stakeholder theory, which concerns the relationships between a business and the individuals and groups of people who can affect or are affected by its decisions. According to stakeholder theory, all stakeholders must be treated fairly. However, some social media companies, for instance Facebook, have failed to cater to all stakeholders in their decision making, with customers’ status as stakeholders being ignored. Applying this theory would help organizations to understand the potential harms and benefits for groups or individuals, to manage stakeholder relationships effectively so that the business survives or thrives, and to create value and prevent moral failures. Furthermore, organizations or companies should implement and practice good governance, as it would benefit them by adding transparency, justice, accountability and responsibility to their operations and decision making. This may help a company avoid a downfall in which the loss of customers’ trust ultimately reduces shareholder value and leaves the company unable to sustain its profitability.

1.12 Ethical challenges

Given the eagerness of companies to monetize AI and the tension between research, industry and business interests and ethical principles alongside wider social interests (Hagendorff 2020), there are a few ethical challenges that need to be addressed. The ethical challenges include: a) environmental sustainability, including availability of resources; b) privacy and confidentiality, including security issues, trust and transparency; c) data accuracy; and d) equality, including discrimination and bias (Antoniou 2021).

Indeed, infringements on privacy, autonomy and freedom (Wolf 2015) have taken place so that algorithms can curate “personalised” advertisements shown on YouTube and sponsored posts on Facebook and Instagram. Hence, while the EU’s General Data Protection Regulation (GDPR), imposed in 2018 on all organisations that target or collect data in EU Member States, has tried to give control back to the individual through informed, unambiguous consent (What is GDPR 2018), this is not the case in other parts of the world, especially in developing countries.

That said, AI technology is developing faster than regulators and lawmakers can react to it (Cannarsa 2021), making the task challenging for regulators in the EU, let alone regulators in developing countries. The problem is compounded by the fact that harm caused by AI “may not necessarily be the result of any wrongdoing on the part of a human agent or the result of any product defect” (Cannarsa 2021, p.293), as shown by the analysis of big data and algorithms used in various fields in the book “Weapons of Math Destruction” (O’Neill 2016). Cannarsa (2021) even went on to posit that “the trade-offs between fundamental rights and economic stakes will always be resolved in a negative way for individuals’ rights” (p.296), and given the ethical challenges discussed, as well as the need for organisations such as YouTube, Google and Facebook to make money off people through curated content, we fear that he may be right.

2 Limitations and future direction

This study also has limitations. The study’s design is based on a literature review analysis intended to develop a better understanding of the issues and to assess whether the application of AI to social media methods is consistent with or contradicts ethical conduct. Nevertheless, the proposed models can be used to produce policy recommendations that encourage public and private enterprises to adopt AI-based decision-making processes while avoiding data privacy violations and maintaining public trust. As the research is ongoing, the proposed model will be employed to capture empirical data to provide a roadmap for organizations to design more effective AI deployment strategies, including post-COVID-19 strategies, and to develop Industry 4.0 capabilities.

3 Conclusion

Deploying AI requires ethical compliance in order to realize the maximum benefits of using AI to collect, manage, interpret and analyze large datasets to gain insights and drive smarter decisions. Despite its many benefits in enhancing strategies and reaching customers and the public in innovative ways, its adoption and execution must take ethical considerations into account. The ethical concerns that arise relate to users’ data privacy, consent and the practice of discrimination in marketing strategies. As the amount of data keeps growing at an accelerating rate and public concerns regarding data security remain, it is important to ensure that AI is adopted ethically. Responsible and ethical data usage is part of the requirements of using data effectively and efficiently; thus the collected data has to be used in a way that is in the best interests of the customers. Users’ awareness of and control over the data collection and its intended use could also minimize the ethical issues and public concerns. Besides that, all stakeholders play a vital role in any organization, as they are key to managing the organization successfully; fairness and trust should exist among them, and social responsibilities should be taken on.

References

Ahad AD, Anshari M (2017) Smartphone habits among youth: Uses and gratification theory. Int J Cyber Behav Psychol Learn (IJCBPL), 7(1): 65–75

Ahad AD, Anshari M, Razzaq A (2017) Domestication of smartphones among adolescents in Brunei Darussalam. Int J Cyber Behav Psychol Learn (IJCBPL), 7(4): 26–39

Almunawar MN, Anshari M, Susanto H (2018) Adopting open source software in smartphone manufacturers' open innovation strategy. In Encyclopedia of Information Science and Technology, Fourth Edition (pp. 7369–7381). IGI Global

Anderson M (2018) Facebook privacy scandal explained. The Associated Press. Retrieved from https://www.ctvnews.ca/sci-tech/facebook-privacy-scandal-explained-1.3874533

Anshari M, Almunawar MN, Lim SA (2018) Big data and open government data in public services. In Proceedings of the 2018 10th International Conference on Machine Learning and Computing (pp. 140–144)

Anshari M, Almunawar MN, Masri M, Hrdy M (2021) Financial technology with AI-enabled and ethical challenges. Society, 1–7

Anshari M (2020) Workforce mapping of fourth industrial revolution: Optimization to identity. In J Phys Conference Series 1477(7):072023. IOP Publishing

Antoniou J (2021) Dealing with emerging AI technologies: Teaching and learning ethics for AI. In: Antoniou J, Quality of experience and learning in information systems (pp. 79–93). Springer International Publishing. https://doi.org/10.1007/978-3-030-52559-0_6

Almunawar MN, Anshari M (2014) Applying transaction cost economy to construct a strategy for travel agents in facing disintermediation threats. J Internet Commerce 13(3–4):211–232

Anderson M, Anderson SL (2007) Machine ethics: Creating an ethical intelligent agent. AI Mag 28(4):15–15

Anshari M, Sumardi WH (2020) Employing big data in business organisation and business ethics. Int J Bus Govern Ethics 14(2):181–205

Anshari M, Almunawar MN, Lim SA, Al-Mudimigh A (2019) Customer relationship management and big data enabled: Personalization & customization of services. Appl Comput Inform 15(2):94–101

Beninger K, Fry A, Jago N (2014) Research using Social Media: Users’ Views. NatCen Social Research

Bivins T (2009) Mixed Media: Moral Distinctions in Advertising, Public Relations and Journalism, 2nd edn. Routledge, New York

Cannarsa M (2021) Ethics guidelines for trustworthy AI. In: DiMatteo LA, Janssen A, Ortolani P, de Elizalde F, Cannarsa M, Durovic M (Eds.), The Cambridge Handbook of Lawyering in the Digital Age (1st ed., pp. 283–297). Cambridge University Press. https://doi.org/10.1017/9781108936040.022

Christensen L (2014) Ethics and Manipulative Marketing. Denmark

Conway P, Gawronski B (2013) Deontological and utilitarian inclinations in moral decision making: a process dissociation approach. J Pers Soc Psychol 104(2):216–235

Crane A, Matten D (2010) Business Ethics: Managing Corporate Citizenship and Sustainability in the Age of Globalization, 3rd edn. Oxford University Press, New York

De Cremer D, de Bettignies HC (2013) Pragmatic business ethics. Bus Strateg Rev 24(2):64–67

Daft RL (2004) Organization Theory and Design. Mason, Ohio: Thomson/South-Western

Ferrell OC, Fraedrich J, Ferrell L (2015) Business ethics: ethical decision making and cases, 10th edn. Cengage Learning, USA

Gilbert J (2012) Ethics for managers: Philosophical foundations and business realities. Routledge, New York, NY

Gilbert B (2018) How Facebook makes money from your data, in Mark Zuckerberg’s words. https://www.businessinsider.com/how-facebook-makes-money-according-to-mark-zuckerberg-2018-4

Gokalp MO, Kayabay K, Akyol MA, Eren PE, Koçyiğit A (2016) Big Data for industry 4.0: A conceptual framework. In Computational Science and Computational Intelligence (CSCI), 2016 International Conference on (pp. 431–434)

Graham M (2018) Facebook, Big Data, and the Trust of the Public. Retrieved from http://blog.practicalethics.ox.ac.uk/2018/04/facebook-big-data-and-the-trust-of-the-public/

Gumbus A, Grodzinski F (2015) Era of big data: danger of discrimination. ACM SIGCAS Computers and Society 45(3):118–125. https://doi.org/10.1145/2874239.2874256

Hagendorff T (2020) The ethics of AI ethics: An evaluation of guidelines. Mind Mach 30(1):99–120. https://doi.org/10.1007/s11023-020-09517-8

Hill K (2013) Facebook joins forces with data brokers to gather more Intel About Users For Ads. Forbes. Retrieved from http://www.forbes.com/sites/kashmirhill/2013/02/27/

Ilahi HN (2019) Women and HOAX news processing on Whatsapp. Jurnal Ilmu Sosial Dan Ilmu Politik 22(2):98

Jadczyk T, Wojakowski W, Tendera M, Henry TD, Egnaczyk G, Shreenivas S (2021) Artificial intelligence can improve patient management at the time of a pandemic: the role of voice technology. J Med Internet Res 23(5):e22959

Jonathan H, Neil M (2014) What's up with big data ethics? Forbes. Retrieved from https://www.forbes.com/sites/oreillymedia/2014/03/28/whats-up-with-big-data-ethics/#2d2fa3753591

Kant I, Paton H (1948) The moral law. Routledge, London

Karnik A (2018) The rise of the data-driven marketer: Why it’s beneficial and how to hire one. Forbes. Retrieved on 15th September 2018 from https://www.forbes.com/sites/forbescommunicationscouncil/2018/03/05/the-rise-of-the-data-driven-marketer-why-its-beneficial-and-how-to-hire-one/#35c553c0490d

Keller-Krawczyk L (2010) Is business ethics possible and necessary? Econ Sociol 3(1):133–142

Kitchin R (2014) Big Data, new epistemologies and paradigm shifts. Big Data Soc 1(1):2053951714528481. https://doi.org/10.1177/2053951714528481

Kumari S (2016) Impact of Big Data and social media on society. Global J Res Analysis 5:437–438

Kumar S, West R, Leskovec J (2016) Disinformation on the web: Impact, characteristics, and detection of wikipedia hoaxes. In: Proceedings of the 25th international conference on World Wide Web, pp 591–602

Macleod C (2016) John Stuart Mill. In: Zalta EN (ed) The Stanford Encyclopedia of Philosophy (Summer 2020 Edition). https://plato.stanford.edu/archives/sum2020/entries/mill/

Marr B (2015) Big data case study collection, 7 amazing companies that really get big data. Retrieved from https://www.bernardmarr.com/img/bigdata-case-studybook_final.pdf

Merriam-Webster Dictionary (2018) Retrieved on 27th August 2018 from https://www.merriam-webster.com/dictionary/ethics

Mihelic KK, Lipicnik B, Tekavcic M (2010) Ethical Leadership. Int J Manag Inf Syst 14(5):31–41

Mill J (1863) Utilitarianism. Parker, Son and Bourn, London

Mill JS (1993) Utilitarianism. In: Williams G (ed) John Stuart Mill: Utilitarianism, On Liberty, Considerations on Representative Government, Remarks on Bentham’s Philosophy. Everyman, London

Monnappa A (2018) How facebook is using big data - The good, the bad, and the ugly. Retrieved from https://www.simplilearn.com/how-facebook-is-using-big-data-article

Moreno MA, Goniu N, Moreno PS, Diekema D (2013) Ethics of social media research: common concerns and practical considerations. Cyberpsychol Behav Soc Netw 16(9):708–713

Morrison H, Mujtaba B (2010) Strategic philanthropy and maximization of shareholder investment through ethical and values-based leadership in a post Enron/Anderson debacle. J Bus Stud Quarterly 1(4):94–109

Mulyani MA, Razzaq A, Sumardi WH, Anshari M (2019) Smartphone adoption in mobile learning scenario. In 2019 International Conference on Information Management and Technology (ICIMTech) (Vol. 1, pp. 208–211). IEEE

Newman D (2015) Social media metrics: Using big data and social media to improve retail customer experience [Blog]. Retrieved from https://www.ibmbigdatahub.com/blog/social-media-metrics-using-big-data-and-social-media-improve-retail-customer-experience

Newman N (2014) How big data enables economic harm to consumers, especially low income and other vulnerable sectors of the population. J Internet Law 18(6):11–23

Oates V, Dalmau T (2013) Ethical Leadership: A Legacy For A Stronger Future. Performance 5(2):18–33

O’Neil C (2016) Weapons of math destruction: How big data increases inequality and threatens democracy (First edition). Crown

Racelis AD (2010) The Influence of Organisational Culture on Performance of Philippine Banks. Social Science 6(2):29–49

Razzaq A, Samiha YT, Anshari M (2018) Smartphone habits and behaviors in supporting students self-efficacy. Int J Emerg Technol Learn 13(2)

Richards NM, King JH (2014) Big Data ethics. Wake Forest L Rev 49:393

Rowe F (2014) What literature review is not: diversity, boundaries and recommendations. Eur J Inf Syst 23:241–255

Samantha W (2016) 6 ethical questions about Big Data. Financial Management. Retrieved from https://www.fm-magazine.com/news/2016/jun/ethical-questions-about-big-data.html

Scott D, Bracetti A (2013) 50 things you didn’t know about Google. Complex. Retrieved from http://www.complex.com/tech/2013/02/50-things-you-didnt-know-about-google/20-petabytes

Serafimova S (2020) Whose morality? Which rationality? Challenging artificial intelligence as a remedy for the lack of moral enhancement. Humanit Soc Sci Commun 7:119. https://doi.org/10.1057/s41599-020-00614-8

Sharma R (2018) How Does Facebook Make Money? Retrieved from https://www.investopedia.com/ask/answers/120114/how-does-facebook-fb-make-money.asp

Sweeney L (2015) Can computers be racist? Big Data, inequality, and discrimination. Presentation. Ford Foundation

Trottier D (2014) Vigilantism and power users: police and user-led investigations on social media. In: Social media, politics and the state. Routledge, pp 221–238

Trotta A, Di Felice M, Montori F, Chowdhury KR, Bononi L (2018) Joint coverage, connectivity, and charging strategies for distributed UAV networks. IEEE Trans Rob 34(4):883–900

Trotta A, Montecchiari L, Di Felice M, Bononi L (2020) A GPS-free flocking model for aerial mesh deployments in disaster-recovery scenarios. IEEE Access 8:91558–91573

Vance A (2012) Facebook’s is bigger than yours. Bloomberg Businessweek. Retrieved from https://www.businessweek.com/articles/2012-08-23/facebooks-is-bigger-than-yours

What is GDPR, the EU’s new data protection law? (2018) GDPR.Eu. https://gdpr.eu/what-is-gdpr/

Wolf B (2015) Big data, small freedom? Radic Philos. https://www.radicalphilosophy.com/commentary/big-data-small-freedom. Accessed 22/12/2021

Yu P, McLaughlin J, Levy M (2014) Big data: A big disappointment for scoring consumer credit risk. National Consumer Law Center, March 2014

Zulkarnain N, Anshari M (2016) Big data: Concept, applications, & challenges. In 2016 International Conference on Information Management and Technology (ICIMTech) (pp. 307–310). IEEE

Zulkarnain N, Anshari M, Hamdan M, Fithriyah M (2021) Big data in business and ethical challenges. In 2021 International Conference on Information Management and Technology (ICIMTech) (Vol. 1, pp. 298–303). IEEE


Cite this article

Anshari, M., Hamdan, M., Ahmad, N. et al. COVID-19, artificial intelligence, ethical challenges and policy implications. AI & Soc 38, 707–720 (2023). https://doi.org/10.1007/s00146-022-01471-6
