Abstract

One determining characteristic of contemporary sociopolitical systems is their power over increasingly large and diverse populations. This raises questions about power relations between heterogeneous individuals and increasingly dominant and homogenizing system objectives. This article crosses epistemic boundaries by integrating computer engineering and a historical-philosophical approach, making the general organization of individuals within large-scale systems and corresponding individual homogenization intelligible. From a versatile archeological-genealogical perspective, an analysis of computer and social architectures is conducted that reinterprets Foucault’s disciplines and political anatomy to establish the notion of politics for a purely technical system. This permits an understanding of system organization as modern technology with application to technical and social systems alike. Connecting to Heidegger’s notions of the enframing (Gestell) and a more primal truth (anfänglicheren Wahrheit), the recognition of politics in differently developing systems then challenges the immutability of contemporary organization. Following this critique of modernity and within the conceptualization of system organization, Derrida’s democracy to come (à venir) is then reformulated more abstractly as organizations to come. Through the integration of the discussed concepts, the framework of Large-Scale Systems Composed of Homogeneous Individuals (LSSCHI) is proposed, problematizing the relationships between individuals, structure, activity, and power within large-scale systems. The LSSCHI framework highlights the conflict of homogenizing system-level objectives and individual heterogeneity, and outlines power relations and mechanisms of control shared across different social and technical systems.

Introduction

As a set of norms and perspectives, the way of interpreting, perceiving, and relating to the world, adhering to the principles of Enlightenment and Encyclopedia, modernity shaped western societies, including the conceptualizations of technologies that influence our lives. This historical, sociopolitical project sought to order, classify and measure the universe, to control and to standardize it, with disciplined bodies and uniform individuals being an essential part of this vision. As Foucault noted: “modern thought is advancing towards that region where man’s Other must become the Same as himself” (Foucault, 2005, p. 358). Against this background, the reactions of western societies to recently intensifying migration across the world might therefore not be surprising. The USA betrays its proclaimed principle of liberty and its image as the land of opportunities by incarcerating migrants, most despicably, children (Long, 2019). Similarly, the EU outsources border control and tries to curb migration by financially supporting the Libyan coast guard, returning migrants to violent detention camps in a country torn by an intensifying civil war (Sunderland and Salah, 2019). Many authors have problematized the project of modernity. While they are not necessarily entirely against it, they denounced its obscure, hidden, and unacceptable aspects. Nietzsche, among others, criticized the notion of a unique and unified “truth”, the primacy of rationality, and order that are so fundamental to modernity. In his critique of metaphysics of presence, Heidegger protested “an objectivistic understanding of a world composed of law-governed things subject to theoretical representation and technical manipulation” (Feenberg, 2018, p. 131). Following this epistemological tradition, Michel Foucault and Jacques Derrida were two of the most avid critics of modernity (Clifford, 1987, p. 223) and also exhibit significant similarities in their political thought regarding the need for pluralism (Bennington, 2016, pp. 3–7). Foucault advanced our understanding of contemporary social organization as modern technology, essential to the exercise of political power such as in Bentham’s Panopticon. Modern technologies of power also mark the increasing interpretation of politics as policy, where politics is reduced to the administration and organization of people, a common point of criticism in Derrida’s work.

Modern technologies have played a particularly central role in the advancement and implementation of the principles of modernity, including its instrumentalization for exercising political power. Among various others, Langdon Winner (1980) showed that political ideology is embedded in technological artifacts. He convincingly argued that technology enforces politics among humans as a result of their interaction with socio-technical systems. Politics emerge as a necessary element as system-level objectives might not be collectively agreed upon and have to be achieved through some kind of coercion (i.e., economic dependence and enforcement of state power). A collection of essays by Andrew Feenberg, providing a range of additional perspectives on the intricate relations of “technology, modernity, and democracy”, can be found in Beira and Feenberg (2018). In this work, we explore the interdependence of sociopolitical ideas and technological design for one of the most defining modern technologies, the digital computer. The first digital, general-purpose computer, the ENIAC, was designed to calculate ballistic missile trajectories for the US Army during World War II (McCartney, 1999). As McCartney argues, while primitive in comparison to today’s computers, it laid a foundation that influenced computer architectures [Footnote 1] for decades. This suggests that military interests and applications, with significant social and political ramifications, have had an impact on computer design. As argued by Tedre et al. (2006), computer science is locked up in northwestern cultures reinforcing the prevailing binary logic system employed in the design of computer applications. While this binary logic is but one representation through which we can engage with the world, since it is the dominant representation, it eliminates a vast amount of knowledge systems and creativity from other cultures in the shaping of our societies (Eglash, 1999; Eglash, 2007).
In other words, we can consider the digital computer as a manifestation, not just of a powerful and important technology, but of modern thought itself.

This relation of technology and modernity becomes especially relevant in reflecting on Heidegger’s concept of the enframing (Gestell) of modern technology (Heidegger, 1981, p. 20). We understand the enframing as a set of constraints (conscious or unconscious) that determine the technological design decisions we perceive as viable. By reflecting on the value-laden political dimension of technology (Winner, 1980), as well as the dominance of a specific culture over others (Eglash, 1999), we can then take seriously Heidegger’s concern that the danger of modern technology lies in the exclusion of alternative forms of being and human-technology relations. To establish an epistemological link between social systems and computer architectures, we based this research on the theoretical and methodological framework proposed by Foucault mainly in Discipline & Punish (1977). The concepts of the disciplines and political anatomy (Foucault, 1977, pp. 137–8) outline how modern political technologies of surveillance, normalization, and synchronization are used to arrange individuals so as to function within a large system. Under notions of efficiency and utility, individuals become subject to homogenization, which results in a change in power relations between individuals and the system. As Foucault noted, the Panopticon, as one such example, is a metaphor for disciplinary power, a materialization of capitalist discipline, an architectural figure for comprehending reality (Foucault, 1977, p. 200). “The Panopticon […] must be understood as a generalizable model of functioning; a way of defining power relations in terms of the everyday life of men” (Foucault, 1977, p. 205). With a genealogical approach, this article reinterprets Foucault’s concepts by applying them to computer architecture, demonstrating that politics can reside in social and technical systems and that the defining features of political organization might not necessarily be found only in the social realm.
Through an archeological exploration of computer architecture, which is strongly determined by modern thought, it is argued that by understanding different social and technical systems as manifestations of a more abstract system class, we can potentially identify power relations and mechanisms of control inherent to the more abstract system class itself (as a specific modern enframing). In Heidegger’s interpretation, it might allow us to identify an essence that shaped the abstract system class. This excavation of the organizing principles of computer architecture and its similarities to social organization is then advanced through Derrida. The democracy to come (à venir) is a problematization of modern sociopolitical organization and institutions and demands ideas about potential alternatives. These alternatives are not clearly defined, but it is argued that they cannot be achieved through the modern approach of extensive quantification, homogenization, and engineering of the social through the reduction of politics to policies.

Within this work we pursue two objectives. First, we want to broaden our understanding of politics from social and socio-technical systems to purely technical systems themselves. This will open up reflections on the design of technical artifacts in general and computer architecture in particular and can help reveal an enframing influential in engineering practices and conceptualizations. And second, we aim to generalize from the observation of two distinct systems, one social, one technical, to a more abstract notion of system organization, the roles and activities of individuals, and individual and system-level functionality. In other words, we intend to arrive at an essential relation of the enframing that governs social organization and computer architecture. In summary, this paper follows an epistemological tradition to rethink the mechanisms of power (in technical or social systems) that subject individuals to contemporary levels of functional reduction and homogenization. It aims to open up reflections on alternative forms of organizations, organizations that embrace plurality, and posits that by rethinking technology (as artifact or as method), we are also rethinking the essence of modern organization more generally.

Foucault and computer architecture

In this section, we reinterpret parts of Discipline & Punish (Foucault, 1977) to establish a philosophical narrative about computer architecture and a notion of politics for this technical system. With only a few changes (in italics) to the original vocabulary (see Table 1), the following analogies are intended to support a different imagination of computer architecture and to establish significant similarities in the organization of two distinct systems that both rely on principles of modernity. However, first we need to justify the reinterpretations and explain why they are more than superficial analogies, simply retelling an existing story in a different context.

First, the most fundamental reinterpretation was made from individual/body to transistor. Generally speaking, any given system is composed of either homogeneous or heterogeneous components. That is, a system consists either of components of only a single class, or of components from across many classes. In heterogeneous systems, components can be said to have their functionality inscribed in them (e.g., due to a specific form or other property), and hence their position within the system is predetermined and they are not simply replaceable by any other arbitrary component. In contrast, and as we will discuss in detail throughout this work, the assumption in homogeneous systems is that all components are (to a degree) identical. The implication is that individuals are generic and replaceable, and their precise function is determined by the location and sequence they are embedded in. In that sense, the analogies between social systems and computer architecture are much more adequate than analogies to the vast majority of other technical systems, which are composed of heterogeneous components.

A second argument motivating the reinterpretations concerns the inception of the normalization of individuals (the elimination or marginalization of differences across (almost) homogeneous individuals). Humans are born into social systems with their “imperfections” and are successively normalized over time. In contrast, transistors are specified and homogenized prior to their integration into computer architecture. This difference informs the reinterpretations of surveillance and tactics. Surveillance in social systems carries the expectation that non-sanctioned behavior will need to be corrected. With transistors being fully homogenized prior to system integration, any non-sanctioned behavior is eliminated by default and the notion of control suffices to operate the system. Similarly, the expectation of unexpected behavior requires tactics to deal with it, while in the absence of the unexpected, design suffices to define all behavior from the start. The remaining reinterpretations (mechanical-digital, movements-activity, labor-computation, profit-information) simply reflect the context of the computer as a digital technology.

The concepts and techniques that led to the architecture of the first microcontrollers did not redefine or reinvent basic principles of computation, such as Boolean logic. “However, there were several new things in these techniques. To begin with, there was the scale of the control: it was a question not of treating transistors [Footnote 2], en masse, “wholesale”, as if it were an indissociable unity, but of working it “retail”, individually; of exercising upon it a subtle coercion, of obtaining holds upon it at the level of the mechanism itself – activity, stable states, energy, frequency [Footnote 3]: an infinitesimal power over the active transistor. Then there was the object of the control: it was not or was no longer the signifying elements of function or the properties of the transistor, but the economy, the efficiency of switching, their internal organization; constraint bears upon the forces rather than upon the states; the only truly important act is that of switching. Lastly, there is the modality: it implies an uninterrupted, constant coercion, controlling [Footnote 4] the processes of the activity rather than its result and it is exercised according to a codification that partitions as closely as possible time, space, activity. These methods, which made possible the meticulous control of the operations of the transistor, which assured the constant subjection of its forces and imposed upon them a relation of docility, might be called “disciplines”” (Foucault, 1977, pp. 136–137).

Based on the definition of the disciplines that individual transistors are subjected to, Foucault continues to describe the political and economic reasoning behind individualized subjection. “What was then being formed was a policy of coercions that act upon the transistor, a calculated manipulation of its electrons, its states, its switching. The transistor was entering a machinery of power that explores it, breaks it down and rearranges it. A political anatomy, which was also a “mechanics of power”, was being born; it defined how one may have a hold over many transistors, not only so that they may do what one wishes, but so that they may operate as one wishes, with the techniques, the speed and the efficiency that one determines. Thus, discipline produces subjected and practised transistors, “docile” [Footnote 5] transistors. Discipline increases the forces of the transistor (in economic terms of utility) and diminishes these same forces (in technical terms of reduced variation [Footnote 6]). In short, it dissociates power from the transistor; on the one hand, it turns it into an “aptitude”, a “capacity”, which it seeks to increase; on the other hand, it reverses the course of the energy, the power that might result from it, and turns it into a relation of strict subjection. If economic exploitation separates the force and the product of labor, let us say that disciplinary coercion establishes in the transistor the constricting link between an increased aptitude and an increased domination” (Foucault, 1977, p. 138).

Computer architecture, or the arrangement of initially a few thousand, then millions, nowadays billions of transistors, had to develop methods to efficiently integrate these transistors into a single system. “It does this first of all on the principle of elementary location or partitioning. Each transistor has his own place; and each place its transistor. Avoid distributions in groups; break up collective dispositions; analyze confused, massive or transient pluralities. Disciplinary space tends to be divided into as many sections as there are transistors or elements to be distributed. One must eliminate the effects of imprecise distributions, the uncontrolled disengagement of individuals, their diffuse connections, their unusable and uncontrollable coagulation; it was a design [Footnote 7] of anti-desertion, anti-vagabondage, anti-concentration. Its aim was to establish conductances and resistances, to know where and how to locate transistors, to set up useful communications, to avoid others, to be able at each moment to control the conduct of each transistor, to assess it, to judge it, to calculate its qualities or merits. It was a procedure, therefore, aimed at knowing, mastering and using. Discipline organizes an analytical space” (Foucault, 1977, p. 143).

The precise spatial definition of computer architectures was the foundation for the implementation of temporal methods of control and processing. “By creating a central clock [Footnote 8] it was possible to carry out a control that was both general and individual: to observe the transistor’s presence and application, and the states of their work; to compare transistors with one another, to classify them according to characteristics and speed; to follow the successive stages of processing [Footnote 9]. All these serializations formed a permanent grid: confusion was eliminated: that is to say, processing was divided up and the computation process was articulated, on the one hand, according to its stages or elementary operations, and, on the other hand, according to the individuals, the particular transistors, that carried it out: each variable of this force – energy, speed, accuracy – would be observed, and therefore characterized, assessed, computed, and related to the individual who was its particular agent. Thus, spread out in a perfectly legible way over the whole series of individual transistors, the processing force may be analyzed in individual units. At the emergence of large-scale computation, one finds, beneath the division of the processing, the individualizing fragmentation of computational power; the distributions of the disciplinary space often assured both” (Foucault, 1977, p. 145). The reinterpretation of this paragraph has to be taken with a grain of salt, as transistors are not observed, compared and classified in the literal sense (see the argument on the inception of homogenization at the beginning of the section). Interestingly, various science fiction narratives (e.g., Gattaca, Blade Runner, The Stepford Wives) portray the idea of observing, comparing, and classifying humans prior to their conception, thus blurring the lines between social systems and deterministic machines.
This idea was already pursued by the Lebensborn program of the German National Socialist regime, which aimed to ensure the reproduction of racially pure, thus homogenized, individuals.

With the shift from designing computers for specific applications to a “general purpose architecture” computation redefined the relation to time, which was now made manageable through the disciplines. “Discipline […] arranges a positive economy; it poses the principle of a theoretically ever-growing use of time: exhaustion rather than use; it is a question of extracting, from time, ever more available moments and, from each moment, ever more useful forces. This means that one must seek to intensify the use of the slightest moment, as if time, in its very fragmentation, were inexhaustible or as if, at least by an ever more detailed internal arrangement, one could tend towards an ideal point at which one maintained maximum speed and maximum efficiency. […] The more time is broken down, the more its subdivisions multiply, the better one disarticulates it by deploying its internal elements under a gaze that controls them, the more one can accelerate an operation, or at least regulate it according to an optimum speed; hence this regulation of the time of an action that was so important in the army and which was to be so throughout the entire technology of computational activity” (Foucault, 1977, p. 154).

In its fundamental operation, computer architecture relies on fully fragmented individual control and exhaustion of time. The relation between the individual transistor and the computer as a whole is defined through these methods. “The “seriation” of successive tasks makes possible a whole investment of duration by power: the possibility of a detailed control and a regular intervention (of differentiation, correction, elimination) in each moment of time; the possibility of characterizing, and therefore of using individual transistors according to the level in the series that they are embedded in; the possibility of accumulating time and activity, of rediscovering them, totalized and usable in a final result, which is the ultimate capacity of a transistor. Temporal dispersal is brought together to produce information [Footnote 10], thus mastering a duration that would otherwise elude one’s grasp. Power is articulated directly onto time; it assures its control and guarantees its use” (Foucault, 1977, p. 160). Computation in this sense is therefore “that technique by which one imposes on the transistor tasks that are both repetitive and different, but always graduated. By bending behavior towards terminal states, computation makes possible a perpetual characterization of the transistor either in relation to this term, in relation to other transistors, or in relation to a type of process. It thus assures, in the form of continuity and constraint, a growth, an observation, a qualification” (Foucault, 1977, p. 160).

We can find further motivations for specific design choices applicable to large-scale computer architecture. “Thus a new demand appears to which discipline must respond: to construct a machine whose effect will be maximized by the concerted articulation of the elementary parts of which it is composed. Discipline is no longer simply an art of distributing transistors, of extracting time from them and accumulating it, but of composing forces in order to obtain an efficient machine. This demand is expressed in several ways” (Foucault, 1977, p. 164). One such way is the operation of a transistor as a binary device that will only know two valid states. “This is a functional reduction of the transistor. However, it is also an insertion of this transistor-segment in a whole ensemble over which it is articulated. The transistor whose physics have been optimized to function part by part for particular operations must in turn form an element in a mechanism at another level” (Foucault, 1977, p. 164). This statement better demonstrates the use of docility in connection with transistors, where docility is inextricably tied to functional reduction and system integration. In addition to the functional reduction of the individual transistor, how that transistor interacts must be specified. “The various chronological series that discipline must combine to form a composite time are also pieces of machinery. The cycle [Footnote 11] of each must be adjusted to the cycle of the others in such a way that the maximum quantity of forces may be extracted from each and combined with the optimum result” (Foucault, 1977, pp. 164–165).

This adaptation of Foucault demonstrates how computer architecture follows organizing principles very similar to those of modern social systems. We initially defined the disciplines and political anatomy of computer architecture, followed by descriptions of spatial and temporal control. Integrated into a “mechanics of power”, these methods of control define the relations between individual transistors, their activity, and the collective functionality. This technology is so powerful that it has reshaped our lives like few technologies before, and what enabled the vast system-level functional diversity of its applications is the extreme functional reduction of and full control over the individual transistors. We could therefore count computer architecture as additional empirical evidence for Winner’s question about the organization of large technical systems (Winner, 1980).

Additional engineering perspectives

We will now take the main arguments from the previous section and provide a more mainstream engineering perspective that incorporates some of the implications and limitations of certain computer engineering choices. In our adaptation of Foucault, we pointed to three main elements of what he described as the disciplines. First, control was not exercised on groups, leaving some level of internal autonomy, but on each individual. Second, one can observe a shift from specific qualitative properties or functions attributed to the individual toward the economy of the system that focuses on a more abstract evaluation of efficiency. And third, the modality of disciplines is oriented towards processes, rather than results. We can find the exact same elements of discipline in computer architecture. Before going further, there is an important point to make about the concealment (Verborgenheit) articulated as part of the enframing (Heidegger, 1981, p. 20). When studying mainstream computer architecture literature (e.g., Hennessy and Patterson, 2011), transistor-level considerations seem to be lacking. That is, the modus operandi of the actual individual elements and how they are organized seems to require no further explanation or justification. Rather, discussions occur at higher levels of abstraction that obscure the individual transistor. However, when we take the term computer architecture literally, we have to discuss the design at a brick-by-brick or transistor-by-transistor level to understand design choices (or the lack of them) at higher design levels.

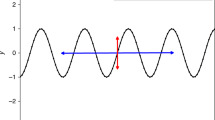

Foucault used the image of the docile body to describe the elementary units used to build a modern social system that subjects these units to complete and omnipresent surveillance. The equivalent to the docile body in computer architecture is the transistor (Fig. 1). The transistor is a three-terminal device (it has three connectors), and in its most common digital use it either connects or disconnects two terminals (“drain” and “source”) based on a control signal applied to its “gate”. It therefore acts like a switch that is, in an idealized interpretation, either OFF or ON (0 or 1). In this operation, the transistor is the physical device carrying out the binary operations that form the foundation of Boolean logic and digital computation.
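The idealized switching behavior described above can be illustrated with a minimal sketch. It assumes a perfectly binary switch and ignores all electrical detail (including the complementary pull-up network of real logic gates); the function names `switch`, `nand`, and `xor` are hypothetical and chosen only for illustration. The sketch shows how identical, functionally reduced units acquire a function purely through their arrangement.

```python
from itertools import product


def switch(gate: int) -> bool:
    """Idealized transistor (assumption): drain and source are connected only when gate is 1."""
    return gate == 1


def nand(a: int, b: int) -> int:
    """Two switches in series pull the output to 0 only when both conduct."""
    pulled_low = switch(a) and switch(b)
    return 0 if pulled_low else 1


def xor(a: int, b: int) -> int:
    """XOR composed solely of NAND units, i.e. of identical switches in a fixed arrangement."""
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


if __name__ == "__main__":
    # Print the truth table: inputs a, b, then NAND and XOR outputs.
    for a, b in product((0, 1), repeat=2):
        print(a, b, nand(a, b), xor(a, b))
```

No individual switch in this sketch "knows" whether it takes part in a NAND or an XOR operation; its role follows entirely from the position it occupies in the composition.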

However, this operational mode is the result of specific design choices and does not fully reflect the physical nature of the transistor. The transistor is an intrinsically analog device that “knows” more than just two terminal states. In a transition from one terminal state to another, the transistor has to pass through the whole spectrum of possible states that lie between 0 and 1. Although it is an electronic device, one can picture the transition between terminal states like a mechanical switch bound by physical laws. Any time electrons move, they have to move through space in a finite amount of time. The functionally non-reduced analog nature of transistors is related to two behavioral properties that lead to a functional reduction in favor of easier control and system-level integration: nonlinear state transitions and device variation. Nonlinear state transitions make transistors very sensitive to small variations in the applied control signal. If two identical transistors receive only marginally different control signals, they can exhibit significantly different output states (behavior). Device variation poses a problem of interpretation. Even if two transistors are controlled by the exact same signal, small variations in their physical properties can cause them to exhibit different states. In both cases we are facing a problem of assigning meaning. It becomes almost impossible to determine whether different transistor outputs are intended or the result of unknown variations in either their control or their internal properties. We could say that this creates an epistemological problem for computation.

We have to see contemporary computer architecture as a response to these challenges. Binary operation of transistors eliminates both problems at the same time. By allowing only two possible states (ON, OFF), tiny variations in transistor states do not present interpretation problems anymore. As long as a transistor state is somewhat close to one of two terminal states, the transistor is interpreted as exhibiting that state. In Foucault’s terms, it was an elimination of confusion. The success of contemporary computer architectures relies on this homogenization of its elementary units. The impact of heterogeneous device characteristics is eliminated by reducing representation to only two terminal states at opposite ends of a spectrum. When we referred to docile transistors, obviously also for metaphorical reasons, we intended to express this functional reduction of the transistor that ignores all “individuality” of expression and only demands adherence to two predefined states of being. The extreme functional reduction and homogenization of the transistors permitted the construction of large-scale computer architecture that achieves complicated functionality at higher levels. Yet, in transistor operation, significant electrical energy is consumed throughout the process of device switching [Footnote 12], the process of changing between terminal states while passing through all states in between. In light of recent civilizational crises such as global warming and the excessive need for fossil fuels, we have to ask: how do we justify operations that require functionally reduced individuals that consume the majority of their energy in a process that we exclude from the creation of meaning?
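To make the argument about nonlinear transitions, device variation, and binary interpretation more tangible, consider the following minimal sketch. It is not a physical transistor model: the logistic curve, its `gain`, the per-device `threshold`, and the interpretation bounds `low` and `high` are all illustrative assumptions introduced here, as are the function names.

```python
import math
from typing import Optional


def transfer(control: float, threshold: float = 0.5, gain: float = 20.0) -> float:
    """Assumed steep logistic curve mapping a control signal to an analog state in (0, 1).

    `threshold` stands in for device-specific physical properties (hypothetical).
    """
    return 1.0 / (1.0 + math.exp(-gain * (control - threshold)))


def interpret(state: float, low: float = 0.3, high: float = 0.7) -> Optional[int]:
    """Binary reading: states near a terminal state count as that state, others are undefined."""
    if state <= low:
        return 0
    if state >= high:
        return 1
    return None


if __name__ == "__main__":
    # Nonlinear transition: marginally different control signals, identical devices.
    print(transfer(0.48), transfer(0.52))
    # Device variation: identical control signal, slightly different devices.
    a = transfer(0.9, threshold=0.48)
    b = transfer(0.9, threshold=0.52)
    print(a, b, interpret(a), interpret(b))
```

Under these assumptions, a small difference in the control signal near the transition region produces a comparatively large difference in the analog state, while two slightly different devices driven to a terminal state differ in their analog states yet receive the same binary reading. Everything that happens between the two bounds is excluded from interpretation.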

Closely related to the homogenization of the transistor is the application and distribution of the clock signal, which controls all activity. As Foucault noted, social organization saw a shift from a qualitative evaluation of specific tasks or applications to a quantitative evaluation of activity. Similarly, computer architecture is mostly evaluated based on quantitative measures such as clock frequency (speed at which transistors change states) and number of instructions (basic operations) per second. The ever more exhaustive use of time was a main driver for performance increases of computer architecture over the last decades. This absolute, ever-growing exploitation and control of moments of time required a centrally controlled and fully synchronized computer architecture. The central clock is what generates the rhythm to which all transistors must respond. Nowadays, computer architectures contain more than a billion transistors. The clock signal is distributed, directly or indirectly, to each and every one to control its activity and ensure synchronization. Just like the functional reduction of individual transistors, central control of time also comes at a high cost that can be reflected on philosophically. First, the distribution of the central clock signal requires physical infrastructure that reaches every remote corner of the computer architecture and to which the spatial arrangement of all other functional units is subordinated. Activity and functionality do not simply emerge; they are preceded by a conceptualization of control. Second, the operation of this infrastructure incurs significant energy consumption. For some computer architectures, the clock distribution system consumes >25% of total energy (Friedman, 2001). In this sense, investments in the clock system represent the desire to control and the assumption that without centralized control there will be no meaningful, graduated, collective activity.

Another notion we adopted from Foucault in the previous section was the composition of forces and the disciplinary space that creates as many segments as there are individuals. In computer architecture, the spatial arrangement of billions of transistors is inextricably tied to the notions of homogenization (functional reduction) and control (clock signal). The extreme functional reduction of transistors to only two possible states presupposes a composition of transistors into functional units in order to perform any task of significant complexity. Activity and functional heterogeneity, therefore, are the result of specific spatial and temporal arrangements of a set of interconnected homogenized transistors. The structure given to a set of transistors determines their individual activity and collective functionality. Clearly defined spatial structures and functional roles of transistors permit the precise enforcement of the clock rhythm. The combination of fully known spatial arrangements and the distribution of the clock signal they facilitate, then creates a system for which we can determine the state of each of the more than a billion transistors at every nanosecond of operation (see Jonas and Kording (2017) for an interesting transistor-level analysis of computer architecture). While such a computer architecture allows anything that can be expressed algorithmically to be computed, the spatial (and therefore functional) fragmentation of computation is one of its main limitations in terms of efficiency and its evolution. Spatial fragmentation leads to an architecture where we have clearly separated functional units such as the control unit, the memory, and the arithmetic logic unit. However, any computation requires all of these units to interact, which is resolved by internal communication infrastructure. The price to pay for the functional fragmentation is a high need for communication (mobility) of data and instructions. 
The “von Neumann bottleneck” describes how the functioning of computer architecture is limited by its data transfer capabilities. To better relate to the problem, we can draw a parallel to global capitalism, which exhibits a similar spatial fragmentation of production integrated in a single system through constant mobility of people and goods.
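
The cost of this functional separation can be made visible with a toy Python model that counts how many words cross between memory and the arithmetic unit during a simple summation. The class `ToyMachine` and its counters are assumptions for illustration; instruction fetches, caches, and all timing are deliberately ignored.

```python
class ToyMachine:
    """Toy model with separate memory and arithmetic unit (illustrative only)."""

    def __init__(self, memory):
        self.memory = list(memory)
        self.transfers = 0  # words moved between memory and arithmetic unit
        self.alu_ops = 0    # arithmetic operations performed

    def load(self, addr):
        self.transfers += 1
        return self.memory[addr]

    def store(self, addr, value):
        self.transfers += 1
        self.memory[addr] = value

    def add(self, a, b):
        self.alu_ops += 1
        return a + b


def sum_memory(machine, n, out_addr):
    """Sum the first n memory words and write the result to out_addr."""
    acc = 0
    for addr in range(n):
        acc = machine.add(acc, machine.load(addr))
    machine.store(out_addr, acc)
    return acc


machine = ToyMachine([3, 1, 4, 1, 5, 0])
total = sum_memory(machine, 5, 5)
```

Even in this minimal case, the five additions require six data transfers, so the amount of work the machine can complete is bounded by how quickly data moves between its separated units, not by how quickly it can add.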

It should now be clear what we could call the political anatomy of computer architecture. Exercising the disciplines over large quantities of homogenized and functionally reduced transistors to form a single integrated system would not have been possible without a political anatomy. Political anatomy is the set of structural arrangements and methods that on the one hand divide individuals and processes, but at the same time ensure the direction of all activities toward a common objective. The modular design of computer architectures, with distinct functional units (at design levels higher than the transistor level), then implements a political anatomy that controls the flow of data and operations throughout the system. The word political therefore refers to the command structures embedded in the functioning of computer architectures that subject individual and docile transistors to system objectives. In contrast, recent developments in computer architecture design provide examples for reflection upon alternative (political) principles guiding the integration and interaction of millions and billions of transistors. Neural computer architectures such as Neurogrid (Benjamin et al., 2014) and Loihi (Davies et al., 2018) are highly distributed information processing units that tolerate and even benefit from heterogeneity across homogeneous individuals (neurons). Yet, they are not general purpose architectures and further scaling of neural computers still faces technical challenges in their physical realization. Nonetheless, with the structure given to often millions of neurons they represent another form of organization. Asynchronous computer architectures do away with the global clock signal that governs and constrains all activity on a computer chip and move toward local organization of computing (Sutherland and Ebergen, 2002). 
In his article “The tyranny of the clock”, Sutherland (2012) provides technical motivations for a paradigm shift in computer design, but also points to the required social paradigm shift in design and education allowing students to think differently about (computer) system organization and for asynchronous computer architectures (as a paradigm free of a single global control signal) to self-perpetuate. A third trend to mention is the shift from homogeneous to heterogeneous computer architectures (HCA) (Augonnet et al., 2011; Kumar et al., 2006). Rather than a single form of organization and control for the whole system and its operations, HCA exploit different subsystems that are tailored to specific tasks, mostly through imposition of different structures onto the individual transistors within the subsystems. While heterogeneity is leading to more efficient computers, challenges of translations and interpretations between subsystems arise.

Large-scale systems composed of homogeneous individuals

By giving a computer architecture interpretation of Foucault’s writing and a short philosophical reflection on the design principles of contemporary computer architecture, we expect to have earned skepticism of computer engineers and philosophers alike. It is therefore time to generalize the analogies we have created. In section “Foucault and computer architecture”, we reinterpreted Foucault’s original text through minimal changes in vocabulary and argued that it is, while certainly unconventional, a meaningful description of computer architecture. This was contextualized in section three by discussing some of the direct technical ramifications and some preliminary philosophical implications of computer architecture design choices. It should come as no surprise that what we are developing here is a comparison of social organization (or social architecture) and computer architecture. Although we are talking about two very different systems and types of individuals, humans and transistors, we find that both are exposed to the disciplines and political anatomy.

That technological artifacts in general, or the computer in particular, can embody or exercise forms of politics is by no means a new proposition. As was mentioned before, Winner (1980) demonstrated how politics are imposed through the design of a bridge, as well as the factory. Pinch and Bijker (1984) used the bicycle to show how technological design, in combination with social norms, can reinforce the dominance of already dominant groups within society. Actor-Network Theory holds that, among others, social relations as well as technologies are social constructs and results of interacting and mutually dependent actants (human and non-human) (Latour, 1999, 2005). Feenberg (2008), with the concept of the technical code, expressed how a technical solution is the result of filtering through alternative technical possibilities so as to meet certain social or ideological requirements. Moreover, Akrich (1997, p. 208) established the notion of the script embedded in technological artifacts that represents “specific tastes, competences, motives, aspirations, political prejudices, and the rest” of the designer. These seminal contributions to our sociological and philosophical understanding of technology have pointed out how technology establishes, reinforces, and intensifies power dynamics within social groups through the interaction of humans with a technological artifact or the mediation of human relations through technology. This paper explores something different: not a socio-technical system or artifact, but the conception of a purely technical one; one that does not involve human interaction in its internal operations. This is to say that we are adopting, maybe even extending, the concept of politics (understood as power relations) to define the internal organization and operation between strictly technical elements. 
Arguing that we have two systems, one social, one technical, that exhibit significant organizational similarities, identified through the political principles they embody, then raises the question of their relation. On the one hand, we have a modern technological artifact that behaves entirely deterministically and is composed of devices that exhibit, arguably, a less complex set of behaviors than humans. On the other hand, we have technology as method embedded in numerous mechanisms of control in a social system with many non-deterministic dynamics and whose organization and evolution cannot be justified by purely technical considerations. What could be the basis for any meaningful comparison of two such systems?

To develop a response to this question, we will incorporate ideas from Heidegger, Foucault, and Derrida. First, it can be argued that the close resemblance of computer architecture and Foucault’s writing is by no means a coincidence. Foucault himself provides ample support for certain organizational principles of Panopticism being replicated in the military, schools, hospitals, etc. While it might present a larger conceptual shift to consider these principles in a purely technological artifact, we find it plausible to assume that the organization of many individuals to form a single functioning system might be the defining factor for these principles. This can be supported by understanding that Foucault’s discussions have focused on social systems undergoing rapid growth. Secondly, to develop a position regarding the design principles mentioned above, we adopt Heidegger’s notion of the enframing (Heidegger, 1981, p. 20). In our reading of Heidegger, the enframing is something that imposes boundaries on the possibilities of a thing to become. We could say it is an ideological framework that makes us accept established notions while at the same time making us blind to alternatives. More concretely, this could imply that the design of computer architecture and principles of social systems share the same essence. This would be an argument supporting our adaptation of Foucault. The implication, in a Heideggerian sense, is the existence of a more primal truth (Heidegger, 1981, p. 28). This primal truth can then refer to either a rethinking of computation or even a rethinking of social organization. We therefore argue that we are observing two systems defined by a common enframing and that the revealing of the enframing can have direct implications for our understanding of technical, as well as social organization. And thirdly, we should then ask what this primal truth is. Can we go beyond just referring to this metaphysical concept? 
For this we turn to Derrida and his work on modern democracy (Derrida, 2005). Democracy (or, more accurately, representative democracy) is, at least in part and similar to Foucault’s arguments, an organizational form that responds to growing population sizes. It therefore can be understood within the notion of integrating many individuals in a single system. Derrida’s critique of contemporary western democracies is that they represent a minimal democracy. In other words, democratic processes are for the most part reduced to casting votes every couple of years. This resembles the functional reduction of the individual for the sake of system-level objectives. Derrida develops the concept of the democracy to come (Derrida, 2005, p. 8). This is a democracy that represents a social system without subjecting individuals to contemporary levels of homogenization and functional reduction. Rather, as Derrida argues, this democracy would be heterogeneous, yet inseparable (Derrida, 2005, p. 88); exhibit dynamic divisions of sovereignty (Derrida, 2005, p. 87); and degenerate violence, authority and the power of law (Derrida, 2002b, p. 281). The democracy to come relies “on the condition of thinking life otherwise, life and the force of life” (Derrida, 2005, p. 33). We argue that this condition also expresses the idea of revealing the enframing to arrive at a more primal truth, or maybe we should say alternative truth.

We compile and depict all these ideas in Fig. 2. We have to understand some social and technical organizing principles using a more abstract notion of Large-Scale Systems Composed of Homogeneous Individuals (LSSCHI). The first aspect of this notion is the fact that it is large-scale. Obviously, all systems, no matter their size, subject their individuals to some forms of homogenization (i.e., shared language, rituals, roles, responsibilities, etc.). However, what is important is the relation between the individual and the system. In large-scale systems with millions of individuals, the relative importance of any individual becomes marginalized and subjected to system objectives. This is qualitatively different from small systems that exhibit a much greater interdependence between the system and any given individual. The second aspect of the notion is that of homogeneous individuals (a system composed of the same type of individuals; while a necessary condition, this is not equivalent to homogenized individuals). We can clearly point to large-scale systems composed of heterogeneous individuals (or components), such as airplanes and factories that are composed of millions of individual parts. However, heterogeneity of individuals does not permit the arbitrary replacement of one individual with another without compromising the functionality of the system. While heterogeneous components have their functionality, and through that their place in the system inscribed in them, the universality of homogeneous individuals requires politics for the articulation of activities and functions within the system. Here, we have discussed two possible manifestations of such systems—one technical and one social. Drawing on Heidegger, we argued that the similarity between these two systems is a result of a shared enframing rather than a coincidence. 
Both systems we discussed therefore represent manifestations of the same mode of system organization (for completeness we also consider natural systems such as ant colonies or bee hives as possible manifestations of LSSCHI, though for the sake of maintaining our focus we will not discuss them here). If we see it as our responsibility to question the status quo, to not accept it as the best we can get, but to seek alternative social organizations, we can then adopt Heidegger’s idea of a more primal truth, or Derrida’s notion of a democracy to come. With respect to our more abstract interpretation of social and technical systems, we rephrase Derrida by proclaiming the possible existence of organizations to come. Organizations to come, understanding social and technical organization as being determined by principles relating to the integration of large numbers of homogeneous individuals into a functioning system, then have implications for social, as well as technical systems. Moreover, we could imagine that any revealing in one specific manifestation can find analogies in another manifestation, leading to a more comprehensive understanding of the systems in which we are so deeply embedded that we often cannot even grasp them.

In the most general sense, our proposal regarding LSSCHI is a framework allowing for new perspectives on the relations between individuals, structure, activity, and power within large-scale systems. As we argued, individuals are functionally reduced and embedded in a structure to recreate complex functionality at the system level. Their particular interrelations then constitute the power relations between individuals themselves and between individuals and the system. We have seen that the modern organizations of computer architecture and societies rely strongly on similar forms of homogenization and control. However, should we accept modern organization with its levels of homogenization and control as necessary, or should we seek to reveal its enframing in an effort to arrive at organizations to come? For Derrida, democracy to come was an elusive idea, not one we can clearly point to. As he said, “it is not the democracy of the future, but a democracy that must have the structure of a promise—and thus the memory of that which carries the future, the to-come, here and now” (Derrida, 2005, p. 85). What is interesting about this statement is the reference to a structure of a promise. What does Derrida mean by this reference? Maybe, and using a subsequent reference of his, it is the idea of “the condition of thinking life otherwise, life and the force of life” (Derrida, 2005, p. 33). In our abstract LSSCHI context, we understand life as analogous to the individual and the force of life as resembling activities that individuals perform. Life then poses the question of individuality, plurality, and to some extent the refusal of system objectives. Activity, as the force of life, poses a contradictory demand (we could say life and the force of life constitute an auto-immune process (Derrida, 2002a, p. 78)). Activity is what sustains life, individual and communal. 
It is an articulation of shared interests across individuals and in this sense a homogenizing force, one that sets limits to individuality. Coming back to the structure of a promise, the structure imposed upon individuals is then what determines the promises of life (individual) and activity (force of life). Organizations to come, for any LSSCHI, will therefore be characterizable by their relations of individuals, structure, and activity. As at the beginning of this paragraph, we have to add the notion of power to this relation. While a core principle in Foucault, power is also at the heart of Derrida’s understanding of the democracy to come. When he talks about societies that are “heterogeneous, but inseparable” (Derrida, 2005, p. 88), dynamic “divisions of sovereignty” (Derrida, 2005, p. 87), or the “degeneration of law, the violence, the authority and the power of law” (Derrida, 2002b, p. 281) he sets clear examples of the centrality of power. We argue that the democracy to come remains rather elusive as our ideas for viable power relations are limited when we think of heterogeneity and reduction of control in large-scale systems. This challenge translates directly to the more abstract concept of organizations to come.

Some final remarks

When individuals become so homogenized that dissent is impossible, we have created a machine, as the example of computer architecture shows. After 1840, when Comte was spreading his Religion of Humanity, his printed works included an epigraph with the positivist motto: “Love as a principle and order as the basis; progress as the goal” (Angenot, 2006, p. 67). While the American Revolution and, especially, the French Revolution shaped modern thought and transformed the way we understand politics, Comte’s ideas became profoundly influential for many nation states founded after the nineteenth century, like the Latin American countries. An unquestionable manifestation of this influence is the inscription of “Order and Progress” in the curved banner on the Brazilian national flag. With order as the basis, i.e., when a specific anatomy is imposed, the proclaimed principle of love can only be legitimate if the subject of that love respects the singular order. It is of course correct to assert that homogeneity is not a necessary condition for order, but the examples given above are strong indicators that modern thought equates order with homogeneity. Order then implies the prohibition of disorder, dissent, or questioning of structures. Moreover, progress is a singular and univocal notion, since the goal excludes all other possibilities for human development. This vague notion of progress, in the context of order, undoubtedly refers to progress toward society as a machinery. It transmits a utilitarian vision of improving the human condition. It is emblematic of the enframing of modern organization and is an expression of how we seek homogeneity, despite all claims to the contrary.

Since Plato, the main problem political theory deals with is resolving conflicts within a community or society. In the modern and liberal tradition, politics is merely a functional process of problem-solving or achieving objectives. This rather utilitarian approach to politics is the basis for the numeric and managerial understanding of democracy (Derrida, 2005, pp. 29–30) that denies any politics not based in the quantification of the individual. That is to say, the political phenomenon is limited to sanctioned activities within the bounds of formal institutions, like governments, nation states, or supranational organizations (e.g., the European Union). These organizations are frequently highly structured, large-scale social systems, defining and holding sovereignty over large numbers of individuals. To be a legitimate individual within such social systems requires embracing homogeneity according to predefined roles and mechanisms. Any perturbation of the order by individuals results in a defense mechanism of the system that tries to delimit, isolate, or even eliminate the abnormality. Our Western democracies, which legitimize themselves through the unconditional defense of human rights, are constantly breaking this very principle for the sake of self-preservation and a utilitarian vision of order and progress. As Derrida pointed out: “Democracy is suicidal” (Derrida, 2005, p. 33). When modern democratic systems perceive something as a threat, they exhibit auto-immune reactions. They increase control and surveillance over individuals, a reinforcement and assertion of homogeneity, to single out the non-conforming, the abnormal, the dangerous. Modern democracy, therefore, respects individuals who are a recognized part of the system. It defends the ones who have the same “origin,” the same genesis, the ones who have a document that can identify them as compliant and homogenized. However, the foreigner, the strange, the undocumented, represents a threat.

The war on terror, the immigration crisis, and hate crimes, among other profound problems we are facing, are symptoms of the inability of our democratic institutions to uphold their own principles when it comes to individuals that challenge the established structures or even our singular idea of progress. However, we should not ignore the fact that the dysfunctional or dangerous individual, the antigen, historically has also been the engine of social change. A high concentration of antigens can radically transform or end a system. When “sick” bodies, the undocile bodies, gain considerable strength, they force the large-scale system to reorganize, to change its own laws, to keep from perishing. Social transformation is always related to heterogeneity. A defense of plurality is a claim, a manifesto of the dynamism of social systems, the validity and the possibility for movement and dissent; it is an affirmation of life. It is therefore in our utmost interest to challenge the modern mode of organization and move towards organizations to come that are characterized by structures that affirm plurality, not just in terms of system-level functionality, but for individuals and activity, as life and the force of life.

Notes

Computer architecture describes the specific spatial and functional arrangements that integrate billions of individual devices into a single microprocessor (computer chip).

A transistor is the basic element used to build a microprocessor. It is equivalent to Foucault’s “body”.

We replaced “movements, gestures, attitudes, rapidity” with similar transistor-related concepts. Activity is the act of moving from one state to another. A stable state is a representation of a transistor’s value and its meaning in reference to the system. Energy is the cost it takes to move between states. Frequency defines the speed at which a transistor can change states.

We adopt Foucault’s notion of supervision as control as a more common concept in computer architecture.

A docile transistor is obviously a figure of speech to construct a certain imagination. Docility, here, does not mean that the transistor was once willful, but expresses the elimination of possible alternative behaviors.

The original text states “in political terms of obedience”. Reduced variation expresses the same idea as it reduces the risk of individual transistors disrupting the system.

We reinterpret “tactics” as design, which is what defines control in computer architecture.

The original text “by walking up and down the central aisle of the workshop” implies a constantly returning control and enforcement of work. In computer architecture such rhythmic control of activity is enforced through a clock signal that reaches each individual transistor across the entire computer architecture.

We reinterpret the “mechanical” terms of labor process and production as the “digital” term of processing.

The objective of the spatial and temporal fragmentation of processing raw data is to create information, which is the “profit” computation generates.

Here, we reinterpret “the time of each” as the cycle of each to stress the repetitive and synchronizing force of the central clock signal that controls all transistors.

Total power consumption is the sum of static and dynamic power consumption. Static power is consumed when the transistor is in a terminal state and it increased proportionally as device sizes shrank. Dynamic power is consumed during the switching between terminal states.

References

Akrich M (1997) The description of technical objects. In: Bijker WE, Law J (eds) Shaping technologies/building society: studies in sociotechnical change. The MIT Press, Cambridge/London, pp. 205–224

Angenot M (2006) Tombeau d’Auguste Comte. Chaire James McGill d'étude du discours social de l’Université McGill

Augonnet C, Thibault S, Namyst R et al. (2011) StarPU: a unified platform for task scheduling on heterogeneous multicore architectures. Concurr Comp-Pr E 23(2):187–198

Beira E, Feenberg A (2018) Technology, modernity, and democracy. Rowman & Littlefield International, London

Benjamin BV, Gao P, McQuinn E et al. (2014) Neurogrid: a mixed-analogue-digital multichip system for large-scale neural simulations. P IEEE 102(5):699–716

Bennington G (2016) Scatter 1: the politics of politics in Foucault, Heidegger, and Derrida. Fordham University Press, New York, NY

Clifford MR (1987) Crossing (out) the boundary: Foucault and Derrida on transgressing. Philos Today 31(3):223–233

Davies M, Srinivasa N, Lin TH et al. (2018) Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 38(1):82–99

Derrida J (2002a) Faith and knowledge: the two sources of “religion” at the limits of reason alone. In: Anidjar G (ed) Acts of religion. Routledge, New York, NY, pp. 40–101

Derrida J (2002b) Force of law: the ‘mystical foundation of authority’. In: Anidjar G (ed) Acts of religion. Routledge, New York, NY, pp. 228–298

Derrida J (2005) Rogues: two essays on reason. Stanford University Press, Stanford

Eglash R (1999) African fractals: modern computing and indigenous design. Rutgers University Press, New Brunswick NJ

Eglash R (2007) Ethnocomputing with native American design. In: Dyson LE, Hendriks M, Grant S (eds) Information technology and indigenous people. Information Science, London, pp. 210–219

Feenberg A (2008) Critical theory of technology: an overview. Tailoring Biotechnologies 1(1):47–64

Feenberg A (2018) The politics of meaning: modernity, technology, and rationality. In: Beira E, Feenberg A (eds) Technology, modernity, and democracy. Rowman & Littlefield International, London, pp. 127–145

Foucault M (1977) Discipline and punish: the birth of the prison. Sheridan A (trans.). Vintage 1, New York, NY

Foucault M (2005) The order of things. Routledge, London

Friedman EG (2001) Clock distribution networks in synchronous digital integrated circuits. P IEEE 89(5):665–692

Heidegger M (1981) The question concerning technology and other essays. Harper Torchbooks, New York, NY

Hennessy JL, Patterson DA (2011) Computer architecture: a quantitative approach. Morgan Kaufmann, Burlington

Latour B (1999) Pandora’s hope: essays on the reality of science studies. Harvard University Press, Cambridge

Latour B (2005) Reassembling the social: an introduction to actor-network-theory. Oxford University Press, Oxford

Long C (2019) Children at risk in US border jails. Human Rights Watch. https://www.hrw.org/news/2019/06/20/children-risk-us-border-jails. Accessed 10 Nov 2019

Jonas E, Kording KP (2017) Could a neuroscientist understand a microprocessor? PLoS Comput Biol 13(1):e1005268

Kumar R, Tullsen DM, Jouppi NP (2006) Core architecture optimization for heterogeneous chip multiprocessors. In: 2006 International Conference on Parallel Architectures and Compilation Techniques (PACT). IEEE, pp. 23–32

McCartney S (1999) ENIAC: the triumphs and tragedies of the world’s first computer. Walker, New York, NY

Pinch TJ, Bijker WE (1984) The social construction of facts and artefacts: or how the sociology of science and the sociology of technology might benefit each other. Soc Stud Sci 14(3):399–441

Sunderland J, Salah H (2019) No escape from hell: EU policies contribute to abuse of migrants in Libya. Human Rights Watch. https://www.hrw.org/report/2019/01/21/no-escape-hell/eu-policies-contribute-abuse-migrants-libya. Accessed 10 Nov 2019

Sutherland IE, Ebergen J (2002) Computers without clocks. Sci Am 287(2):46–53

Sutherland IE (2012) The tyranny of the clock. Commun ACM 55(10):35–36

Tedre M, Sutinen E, Kähkönen E et al. (2006) Ethnocomputing: ICT in cultural and social context. Commun ACM 49(1):126–130

Winner L (1980) Do artifacts have politics? Daedalus 109(1):121–136

Acknowledgements

We would like to thank Peter Banda for valuable feedback throughout the development of the paper and Collin Stewart for language editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bürger, J., Laguna-Tapia, A. Individual homogenization in large-scale systems: on the politics of computer and social architectures. Palgrave Commun 6, 47 (2020). https://doi.org/10.1057/s41599-020-0425-4
