Abstract

We provide a version of first-order hybrid tense logic with predicate abstracts and definite descriptions as the only non-rigid terms. It is formalised by means of a tableau calculus working on sat-formulas. The particular theory of definite descriptions (DD) exploited here is essentially based on Russell's approach, but with descriptions treated as genuine terms. However, the reductionist aspect of the Russellian approach is retained in several ways. Moreover, a special form of tense definite descriptions is formally developed. We give a constructive proof of the interpolation theorem for this calculus, which extends the result provided by Blackburn and Marx.


1 Introduction

Hybrid logic (\(\textsf {HL}\)) is an important augmentation of standard modal logic with rich syntactic resources. The basic language of \(\textsf {HL}\) is obtained by adding a second sort of propositional atoms, called nominals, each of which holds true at exactly one state of a model and serves as a name of this state. Additionally, one can introduce several extra operators; the most important is the satisfaction, or @-, operator, which takes as its first argument a nominal \(\varvec{j}\) and as its second an arbitrary \(\textsf {HL}\)-formula \(\varphi \). A formula \(@_{\varvec{j}} \varphi \) states that \(\varphi \) is satisfied at the state denoted by \(\varvec{j}\). This allows us to internalise an essential part of the semantics in the language. Another specific operator is the downarrow binder (\(\downarrow \)), which binds the value of a state variable to the current state. A pleasant feature of \(\textsf {HL}\) is that the additional hybrid machinery does not seriously affect the modal core it is based on. In particular, modifications in the relational semantics are minimal: the concept of frame remains intact, and changes occur only at the level of models. Moreover, adding a binder-free hybrid toolkit typically does not increase the computational complexity of the underlying modal logic. These relatively small modifications of standard modal languages give us many advantages: (1) a more expressive language, (2) better behaviour in completeness theory, and (3) a more natural and simpler proof theory. In particular, frame conditions such as irreflexivity, asymmetry, and trichotomy, which cannot be defined in standard modal languages, become definable in \(\textsf {HL}\). This machinery and these results are easily extendable to multimodal logics, in particular to tense and temporal logic (Blackburn & Tzakova, 1999; Blackburn & Jørgensen, 2012). The proof theory of \(\textsf {HL}\) offers an even more general approach than the labels popular in the proof theory of standard modal logic: it allows those labels to be internalised as parts of ordinary hybrid formulas (Braüner, 2011; Indrzejczak, 2010).

\(\textsf {HL}\) offers considerable benefits concerning the interpolation property. It is well known that this property fails for many modal logics in standard languages. The situation is particularly bad in the first-order case: Fine (1979) showed that the first-order variant of \(\textsf {S5}\) does not enjoy interpolation, and all modal logics from the modal cube with constant domains also fail to satisfy it. \(\textsf {HL}\), on the other hand, offers resources which significantly improve the situation; here the \(\downarrow \)-binder turns out to be particularly useful. The uniform interpolation theorem for all propositional modal logics complete with respect to any class of frames definable in the bounded fragment of first-order logic was proved by Areces, Blackburn, and Marx (2001). In a follow-up paper (2003) the result was extended to first-order hybrid logic (\(\textsf {FOHL}\)). In both cases the results were obtained semantically and non-constructively; however, in later work (Blackburn & Marx, 2003) a constructive proof of interpolation was also provided for a tableau calculus for \(\textsf {FOHL}\).

In this paper we extend the aforementioned tableau calculus and the interpolation theorem to a richer version of \(\textsf {FOHL}\) involving predicate abstracts and definite descriptions. Let us briefly comment on these two kinds of extensions. Adding definite descriptions or other complex terms to \(\textsf {FOHL}\) increases the expressive power of the language, which has recently also been noticed in the area of description logics (Artale et al., 2021). On the other hand, in the previous versions of \(\textsf {FOHL}\) due to Blackburn and Marx (2003) or Braüner (2011), only simple non-rigid terms were used to represent descriptions, whereas the \(\imath \)-operator enables us to unfold the rich informational content of descriptions, which is often indispensable in checking the correctness of an argument. Several formal systems with rules characterising definite descriptions were proposed by Orlandelli (2021), Fitting and Mendelsohn (1998), and Indrzejczak and Zawidzki (2021; 2023). A further novelty of our approach is the introduction of a new, specifically temporal, category of definite descriptions which we call tensal definite descriptions. Formally, they are also built with the \(\imath \)-operator, but applied to tense variables, so as to obtain phrases uniquely characterising some time points; hence, syntactically, they behave like nominals and tense variables and may also be used as first arguments of the satisfaction operator. Intuitively, descriptions of this kind correspond to phrases such as ‘the wedding day of Anne and Alex’, ‘the moment at which this accident took place’, ‘the first year of the French Revolution’, etc. Although in the general setting of modal logics the introduction of such descriptive nominal phrases does not always seem necessary, under the temporal interpretation such an extension of the language is very important, since these phrases are commonly used (see Footnote 1). The way tensal definite descriptions are used in natural language differs from their treatment in the formal setting specified below: in the latter they are syntactically treated as sentences uniquely characterising some points in time, whereas in the former they are usually noun phrases. Moreover, as we will show later, they can be characterised by well-behaved rules, and the interpolation theorem applies to this extended system.

In addition to descriptions of two kinds, we enrich our system with predicate abstracts built by means of the \(\lambda \)-operator. Such devices were introduced into the study of first-order modal logic (\(\textsf {FOML}\)) by Thomason and Stalnaker (1968), and the technique was then developed by Fitting (1975). In modal logic it has mainly been used to control scoping difficulties concerning modal operators, but also complex terms such as definite descriptions. Such an approach was developed by Fitting and Mendelsohn (1998), and independently formalised in the form of cut-free sequent calculi by Orlandelli (2021) and Indrzejczak (2020). Orlandelli uses labels and characterises definite descriptions by means of a ternary designation predicate. Indrzejczak applies hybrid logic and handles definite descriptions by means of intensional equality; his system is the first version of \(\textsf {FOHL}\) with descriptions and \(\lambda \)-terms (\(\textsf {FOHL}_{\lambda ,\imath }\)).

The system of \(\textsf {FOHL}_{\lambda ,\imath }\) presented here is different from the one due to Indrzejczak (2020). The latter was designed to follow closely the approach of Fitting and Mendelsohn (1998), which was based on the Hintikka axiom. Here we provide an approach based on the Russellian theory of definite descriptions enriched with predicate abstracts, developed in the setting of classical logic by Indrzejczak and Zawidzki (2023). The specific features of the Russellian approach to definite descriptions, together with its drawbacks and advantages, were discussed at length by Indrzejczak (2021), so we omit their presentation. It should nevertheless be stressed that, although Russell treated descriptions as incomplete symbols eliminable by means of contextual definitions, we treat them as genuine terms. However, the reductionist aspect of the Russellian approach is retained in several ways. At the level of syntax, occurrences of definite descriptions are restricted to arguments of predicate abstracts, forming so-called \(\lambda \)-atoms. At the level of the calculus, definite descriptions cannot be instantiated for variables in quantifier rules; instead, they are eliminated by special rules for \(\lambda \)-atoms. Finally, at the level of semantics, definite descriptions are not interpreted by the interpretation function but through the satisfaction clauses for \(\lambda \)-atoms. Therefore, their semantic treatment differs from the one known from the Fitting and Mendelsohn approach. This leads to less complex proofs of completeness of the calculus and to rules characterising definite descriptions which are simpler than those of the sequent calculus by Indrzejczak (2020). Hybridised versions of the rules for \(\lambda \)-atoms are added here to the tableau calculus by Blackburn and Marx (2003), which allows us to shorten the proof of the Interpolation Theorem considerably by referring to their rules for calculating interpolants.

In Sect. 2 we briefly characterise the language and semantics of our logic. The tableau calculus and the completeness proof for it are presented in Sects. 3 and 4. In Sect. 5 we extend the proof of the Interpolation Theorem presented by Blackburn and Marx (2003). We conclude the paper with a brief comparison of the present system with Indrzejczak’s former system (2020) and with some open problems.

2 Preliminaries

In what follows we provide a formal characterisation of first-order hybrid tense logic with definite descriptions, abbreviated as \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\). The language of \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) consists of logical and non-logical expressions. The logical expressions are:

- A countably infinite set of individual bound variables \(\textsf {BVAR} = \{x, y, z, \ldots \}\),
- A countably infinite set of individual free variables \(\textsf {FVAR} = \{a, b, c, \ldots \}\),
- A countably infinite set of tense variables \(\textsf {TVAR} = \{\varvec{x},\varvec{y},\varvec{z},\ldots \}\),
- The identity predicate \(=\),
- The (possibilistic) existential quantifier \(\exists \),
- The abstraction operator \(\lambda \),
- The definite description operator \(\imath \),
- Boolean connectives \(\lnot \), \(\wedge \),
- Tense operators \(\textsf {F}\) (somewhere in the future), \(\textsf {P}\) (somewhere in the past),
- The satisfaction operator @,
- The downarrow operator \(\downarrow \).

The set of non-logical expressions of the language of \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) includes:

- A countably infinite set of individual constants \(\textsf {CONS} = \{i, j, k, \ldots \}\),
- A countably infinite set of tense constants called nominals \(\textsf {NOM} = \{\varvec{i}, \varvec{j}, \varvec{k},\ldots \}\),
- A countably infinite set of n-ary predicates \(\textsf {PRED}^n = \{P, Q, R, \ldots \}\), for each \(n \in \mathbb {N}\). By \(\textsf {PRED}\) we will denote the union \(\bigcup _{n=0}^\infty \textsf {PRED}^n\).

Intuitively, nominals are introduced for naming time instances in the temporal domain of a model. Thus, on the one hand, they play the role of terms. On the other hand, at the level of syntax they are ordinary sentences; in particular, they can be combined by means of Boolean and modal connectives. When a nominal \(\varvec{i}\) occurs independently in a sentence, it can be read as “the name of the current time instance is \(\varvec{i}\) (and thus, \(\varvec{i}\) holds only here)”. If it occurs as the first argument of the satisfaction operator, it only serves as a name of the time instance at which it holds. Tense variables are double-faced expressions, too: they can serve both as labels of time instances and as full-fledged formulas, each true at exactly one time instance. They can additionally be bound by the downarrow operator and by the \(\imath \)-operator, but not by the quantifier or the \(\lambda \)-operator. It is important to note that both nominals and the satisfaction operator are genuine elements of the language rather than extra metalinguistic machinery. Observe that, for convenience of notation, we separate the sets of bound and free object variables. We do not do this for tense variables since, with a slight violation of consistency, at the temporal level nominals often play a role analogous to that of free variables at the object level.

We will denote the set of well-formed terms, well-formed temporal formulas, and well-formed formulas of \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) by \(\textsf {TERM}\), \(\textsf {TFOR}\), and \(\textsf {FOR}\), respectively. The second set is only auxiliary and we introduce it to make the notation more uniform in the remainder of the section. All the sets are defined simultaneously by the following context-free grammars:

where \(a \in \textsf {FVAR}\), \(i \in \textsf {CONS}\), \(x \in \textsf {BVAR}\), \(\varphi \in \textsf {FOR}\), \(\varvec{a} \in \textsf {TVAR}\), \(\varvec{i} \in \textsf {NOM}\), \(\varvec{x} \in \textsf {TVAR}\), \(n\in \mathbb {N}\), \(P \in \textsf {PRED}^n\), \(\eta _1, \ldots , \eta _n \in \textsf {FVAR} \cup \textsf {CONS}\), \(\xi \in \textsf {TERM}\), and \(\varvec{\xi } \in \textsf {TFOR}\). We write \(\varphi [\mathcal {x}]\) to indicate that \(\mathcal {x}\) is free in \(\varphi \). Observe that we require that in a definite description \(\imath x\varphi \) the variable x occurs freely in \(\varphi \). Similarly, in an expression \(\lambda x\varphi \) it is assumed that x occurs freely in \(\varphi \). On the other hand, for a temporal definite description \(\imath \varvec{x}\varphi \) we do not require \(\varvec{x}\) to occur (freely) in \(\varphi \). Note that since \(\textsf {BVAR} \cap \textsf {FVAR} = \emptyset \), in a formula of the form \((\lambda x\varphi )(\xi )\), x does not occur freely in \(\xi \). Similarly, we require that in a formula of the form \(\imath \varvec{x}\varphi \) a tense variable \(\varvec{x}\) does not occur freely in \(\varphi \). For any \(\eta _1,\eta _2\) in \(\textsf {FVAR}\cup \textsf {CONS}\) or in \(\textsf {TVAR}\cup \textsf {NOM}\), a formula \(\varphi [\eta _1/\eta _2]\) is the result of uniformly substituting \(\eta _2\) for \(\eta _1\) in \(\varphi \), whereas a formula \(\varphi [\eta _1/\!/\eta _2]\) results from replacing some occurrences of \(\eta _1\) with \(\eta _2\) in \(\varphi \). Note that substitutions and replacements can only use variables or constants, not definite descriptions. In practice, when constructing a tableau proof, variables are substituted only with free variables; however, in the formulation of the semantics and in metalogical proofs, variables may be substituted or replaced with bound variables.
In such cases it is assumed that the variable substituting or replacing another variable in a formula is free after the substitution or replacement.
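The difference between uniform substitution \(\varphi [\eta _1/\eta _2]\) and replacement \(\varphi [\eta _1/\!/\eta _2]\) can be made concrete with a small sketch. It assumes a hypothetical encoding of formulas as nested Python tuples whose leaves are symbol strings, and it ignores variable binding altogether, so it only illustrates the bookkeeping of occurrences. Reading "some occurrences" as "any subset of the occurrences, including none and all of them" is our assumption.

```python
from itertools import product

# Hypothetical encoding: a formula is a nested tuple whose leaves are symbol strings,
# e.g. ("and", ("P", "a"), ("Q", "a", "b")). Binders are ignored in this sketch.

def substitute(phi, old, new):
    """Uniform substitution phi[old/new]: every occurrence of old becomes new."""
    if isinstance(phi, tuple):
        return tuple(substitute(part, old, new) for part in phi)
    return new if phi == old else phi

def count_occurrences(phi, sym):
    if isinstance(phi, tuple):
        return sum(count_occurrences(part, sym) for part in phi)
    return 1 if phi == sym else 0

def replacements(phi, old, new):
    """All formulas phi[old//new]: each replaces a chosen subset of the occurrences
    of old (here including the empty and the full subset)."""
    results = set()
    for choice in product([False, True], repeat=count_occurrences(phi, old)):
        picks = iter(choice)   # one decision per occurrence, in left-to-right order

        def walk(f):
            if isinstance(f, tuple):
                return tuple(walk(part) for part in f)
            if f == old:
                return new if next(picks) else f
            return f

        results.add(walk(phi))
    return results

phi = ("and", ("P", "a"), ("Q", "a", "b"))
print(substitute(phi, "a", "c"))          # ('and', ('P', 'c'), ('Q', 'c', 'b'))
print(len(replacements(phi, "a", "c")))   # 4
```

Uniform substitution yields exactly one formula, whereas replacement yields a family of formulas, one for each choice of occurrences; the uniform substitution is the member of that family in which every occurrence is chosen.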

Let us now briefly discuss an informal reading of the hybrid elements of \(\textsf {FOR}\). An expression \(@_{\varvec{\xi }} \varphi \), where \(\varvec{\xi } \in \textsf {TFOR}\), reads “\(\varphi \) is satisfied at the time instance denoted by \(\varvec{\xi }\)”. If \(\varvec{\xi }\) is of the form \(\imath \varvec{x}\varphi \), then \(@_{\imath \varvec{x}\varphi } \psi \) reads: “\(\psi \) holds at the only time instance at which \(\varphi \) holds”. Expressions of the form \(\imath \varvec{x}\varphi \) play a double role similar to that of nominals: on the one hand, they unambiguously label time instances, and on the other, they are formulas that hold at these time instances. An expression \(\downarrow _{\varvec{x}}\varphi \) fixes the denotation of \(\varvec{x}\) to be the time instance at which \(\downarrow _{\varvec{x}}\varphi \) is currently evaluated. Finally, we also use the following standard abbreviations:

We define a tense first-order frame as a tuple \(\mathcal {F}= (\mathcal {T}, \prec , \mathcal {D})\), where:

- \(\mathcal {T}\) is a non-empty set of time instances (the universe of \(\mathcal {F}\)),
- \(\prec \subseteq \mathcal {T}\times \mathcal {T}\) is a relation of temporal precedence on \(\mathcal {T}\), and
- \(\mathcal {D}\) is a non-empty set called an object domain.

Given a frame \(\mathcal {F}= (\mathcal {T}, \prec , \mathcal {D})\), a tense first-order model based on \(\mathcal {F}\) is a pair \(\mathcal {M}= (\mathcal {F}, \mathcal {I})\), where \(\mathcal {I}\) is an interpretation function defined on \(\textsf {NOM} \cup \textsf {CONS} \cup (\textsf {PRED} \times \mathcal {T})\) in the following way:

- \(\mathcal {I}(\varvec{i}) \in \mathcal {T}\), for each \(\varvec{i} \in \textsf {NOM}\),
- \(\mathcal {I}(i) \in \mathcal {D}\), for each \(i \in \textsf {CONS}\),
- \(\mathcal {I}(P, \varvec{t}) \subseteq \overset{n}{\overbrace{\mathcal {D}\times \ldots \times \mathcal {D}}}\), for each \(n \in \mathbb {N}\), \(P \in \textsf {PRED}^n\), and \(\varvec{t} \in \mathcal {T}\).

Note that in our setting individual constants are rigidified, that is, they have the same interpretation at all time instances, whereas extensions of predicates may vary between different time instances. By making this choice we follow the approach of Blackburn and Marx (2003).
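For readers who want to experiment with finite models, the definition above can be sketched as a small data structure. This is a minimal illustration under our own assumptions: the class and field names are hypothetical, time instances and objects are arbitrary hashable values, and a missing entry for a pair \((P, \varvec{t})\) is read as an empty extension. The point it shows is the asymmetry just noted: constants are looked up without a time argument (they are rigid), whereas predicate extensions are indexed by time instances.

```python
from dataclasses import dataclass

@dataclass
class TenseModel:
    """A finite tense first-order model (T, ≺, D, I); all names here are illustrative."""
    times: frozenset      # T, the time instances
    prec: frozenset       # ≺, as pairs (t, u) meaning t ≺ u
    domain: frozenset     # D, the object domain (the same at every time instance)
    nominals: dict        # I restricted to NOM: nominal -> time instance
    constants: dict       # I restricted to CONS: constant -> object (no time argument: rigid)
    preds: dict           # I on PRED × T: (predicate, time) -> set of n-tuples of objects
    arity: dict           # predicate -> n

    def is_well_formed(self):
        """Check the clauses of the definition of a tense first-order model."""
        return (
            bool(self.times) and bool(self.domain)
            and all(t in self.times and u in self.times for t, u in self.prec)
            and all(t in self.times for t in self.nominals.values())
            and all(o in self.domain for o in self.constants.values())
            and all(
                t in self.times
                and all(len(tup) == self.arity[p] and set(tup) <= self.domain for tup in ext)
                for (p, t), ext in self.preds.items()
            )
        )

    def atomic_holds(self, pred, consts, t):
        """P(c1, ..., cn) at t: constants are looked up without t, extensions with t."""
        objs = tuple(self.constants[c] for c in consts)
        return objs in self.preds.get((pred, t), set())
```

For instance, with a single constant c interpreted as o1 and B true of o1 only at t1, the atom B(c) is false at t0 and true at t1, although c denotes the same object at both time instances.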

Given a model \(\mathcal {M}= ((\mathcal {T}, \prec ,\mathcal {D}), \mathcal {I})\), an assignment \(\mathcal {v}\) is a function defined on \(\textsf {TVAR}\cup \textsf {FVAR}\cup \textsf {BVAR}\) as follows:

- \(\mathcal {v}(\varvec{x}) \in \mathcal {T}\), for each \(\varvec{x} \in \textsf {TVAR}\),
- \(\mathcal {v}(x) \in \mathcal {D}\), for each \(x \in \textsf {FVAR}\cup \textsf {BVAR}\).

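Moreover, for an assignment \(\mathcal {v}\), a variable \(x \in \textsf {FVAR}\cup \textsf {BVAR}\), and an object \(o \in \mathcal {D}\), we define the assignment \(\mathcal {v}[x\mapsto o]\) as the one that coincides with \(\mathcal {v}\) everywhere except that it maps x to o, that is, \(\mathcal {v}[x\mapsto o](x) = o\) and \(\mathcal {v}[x\mapsto o](y) = \mathcal {v}(y)\) for every argument \(y \neq x\).

Analogously, for a tense variable \(\varvec{x}\) and a time instance \(\varvec{t}\), the assignment \(\mathcal {v}[\varvec{x}\mapsto \varvec{t}]\) is defined by \(\mathcal {v}[\varvec{x}\mapsto \varvec{t}](\varvec{x}) = \varvec{t}\) and \(\mathcal {v}[\varvec{x}\mapsto \varvec{t}](y) = \mathcal {v}(y)\) for every argument \(y \neq \varvec{x}\).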
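Assignment variants behave like a functional dictionary update, and the two facts most often used in metalogical arguments about them (variants for distinct variables commute; a second update of the same variable overrides the first) can be checked mechanically. The sketch below is only an illustration: assignments are Python dictionaries, and tagging keys with their sort, as in ("obj", "x") or ("tense", "x"), is our own convention for keeping object and tense variables apart.

```python
def variant(v, var, value):
    """Return v[var ↦ value]: agrees with v except that var is mapped to value."""
    w = dict(v)        # copy, so the original assignment v is left unchanged
    w[var] = value
    return w

# Keys are tagged with their sort, since object and tense variables are kept apart.
v = {("obj", "x"): "o1", ("obj", "y"): "o1", ("tense", "x"): "t0"}
w = variant(v, ("obj", "x"), "o2")
```

Here w differs from v only at ("obj", "x"); the tense variable with the same name is untouched.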

Finally, for a model \(\mathcal {M}= (\mathcal {F}, \mathcal {I})\) and an assignment \(\mathcal {v}\) an interpretation \(\mathcal {I}\) under \(\mathcal {v}\), in short \(\mathcal {I}_{\mathcal {v}}\), is a function which coincides with \(\mathcal {I}\) on \(\textsf {NOM} \cup \textsf {CONS} \cup (\textsf {PRED} \times \mathcal {T})\) and with \(\mathcal {v}\) on \(\textsf {TVAR}\cup \textsf {FVAR}\cup \textsf {BVAR}\). Henceforth, we will write \((\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\) to denote the model \(((\mathcal {T}, \prec ,\mathcal {D}),\mathcal {I})\).

Below, we inductively define the notion of satisfaction of a formula \(\varphi \) at a time instance \(\varvec{t}\) of a model \(\mathcal {M}\) under an assignment \(\mathcal {v}\), in symbols \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \varphi \).

where \(P \in \textsf {PRED}^n\), \(\eta , \eta _1,\ldots ,\eta _n \in \textsf {FVAR}\cup \textsf {CONS}\), \(\varphi , \psi \in \textsf {FOR}\), \(x,y \in \textsf {BVAR}\), \(\varvec{\eta } \in \textsf {TVAR}\cup \textsf {NOM}\), \(\varvec{\xi }\in \textsf {TFOR}\), and \(\varvec{x} \in \textsf {TVAR}\). A \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) formula \(\varphi \) is satisfiable if there exists a tense first-order model \(\mathcal {M}\), a time instance \(\varvec{t}\) in the universe of \(\mathcal {M}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \varphi \); it is true in a tense first-order model \(\mathcal {M}\) under an assignment \(\mathcal {v}\), in symbols \(\mathcal {M}, \mathcal {v}\models \varphi \), if it is satisfied by \(\mathcal {v}\) at all time instances in the universe of \(\mathcal {M}\); it is valid, in symbols \(\models \varphi \), if, for all tense first-order models \(\mathcal {M}\) and assignments \(\mathcal {v}\), it is true in \(\mathcal {M}\) under \(\mathcal {v}\); it globally entails \(\psi \) in \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) if, for every tense first-order model \(\mathcal {M}\) and assignment \(\mathcal {v}\), if \(\varphi \) is true in \(\mathcal {M}\) under \(\mathcal {v}\), then \(\psi \) is true in \(\mathcal {M}\) under \(\mathcal {v}\); it locally entails \(\psi \) if, for every tense first-order model \(\mathcal {M}\), time instance \(\varvec{t}\) in the universe of \(\mathcal {M}\), and assignment \(\mathcal {v}\), if \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \varphi \), then \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \psi \).
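The difference between local and global entailment is easy to miss, so here is a small bounded search illustrating it with the pair p and \(\textsf {G}p\), where p is a 0-ary predicate and \(\textsf {G}\) is read as "at every later time instance" (the usual dual of \(\textsf {F}\)). The sketch is an assumption-laden illustration, not a decision procedure: it implements only the fragment built from p, \(\textsf {F}\) and \(\textsf {G}\), ignores assignments (no variables occur), and inspects only models with at most max_n time instances, so a result of None means merely that no countermodel of that size exists.

```python
from itertools import product

def frames(n):
    """Every precedence relation ≺ on the time instances 0..n-1."""
    pairs = [(t, u) for t in range(n) for u in range(n)]
    for bits in product([False, True], repeat=len(pairs)):
        yield {pair for pair, keep in zip(pairs, bits) if keep}

def extensions(n):
    """Every possible set of time instances at which the atom p holds."""
    for bits in product([False, True], repeat=n):
        yield {t for t, keep in zip(range(n), bits) if keep}

def sat(prec, p_at, t, phi):
    """Tiny fragment: phi is "p", ("F", phi1) or ("G", phi1)."""
    if phi == "p":
        return t in p_at
    op, sub = phi
    later = [u for (s, u) in prec if s == t]
    if op == "F":
        return any(sat(prec, p_at, u, sub) for u in later)
    return all(sat(prec, p_at, u, sub) for u in later)      # op == "G"

def countermodel(phi, psi, local, max_n=2):
    """Search all models with at most max_n time instances for a failure of
    local (pointwise) or global (model-wide) entailment from phi to psi."""
    for n in range(1, max_n + 1):
        times = range(n)
        for prec in frames(n):
            for p_at in extensions(n):
                if local:
                    for t in times:
                        if sat(prec, p_at, t, phi) and not sat(prec, p_at, t, psi):
                            return n, prec, p_at, t
                elif all(sat(prec, p_at, t, phi) for t in times) and not all(
                        sat(prec, p_at, t, psi) for t in times):
                    return n, prec, p_at, None
    return None
```

The local search finds a countermodel (p true now, false at a later time instance), whereas the global search finds none: if p is true in the whole model, then so is \(\textsf {G}p\).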

We can obtain different underlying temporal structures by imposing suitable restrictions on \(\prec \), for instance transitivity, irreflexivity, or connectedness.
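On a finite frame such conditions can be checked directly on \(\prec \). The sketch below does so and, to connect with the hybrid machinery from the introduction, also evaluates \(\downarrow _{\varvec{x}}\lnot \textsf {F}\varvec{x}\), a formula that is true at every time instance of a frame exactly when \(\prec \) is irreflexive (a standard hybrid definability fact). It is a minimal illustration under our own assumptions: time instances are integers, formulas are nested tuples, and only the fragment needed for this formula (tense variables, \(\lnot \), \(\textsf {F}\), \(\downarrow \)) is implemented.

```python
def transitive(R):
    return all((t, w) in R for (t, u) in R for (u2, w) in R if u == u2)

def irreflexive(T, R):
    return all((t, t) not in R for t in T)

def connected(T, R):
    """Any two distinct time instances are comparable by ≺."""
    return all(t == u or (t, u) in R or (u, t) in R for t in T for u in T)

def sat(T, R, t, g, phi):
    """Evaluate a formula of the fragment {tense variable, ¬, F, ↓} at t under assignment g."""
    op = phi[0]
    if op == "tvar":
        return g[phi[1]] == t
    if op == "not":
        return not sat(T, R, t, g, phi[1])
    if op == "F":
        return any(sat(T, R, u, g, phi[1]) for u in T if (t, u) in R)
    if op == "down":                       # ↓_x φ binds x to the current time instance
        return sat(T, R, t, {**g, phi[1]: t}, phi[2])
    raise ValueError(f"operator {op!r} not in this fragment")

IRREFL = ("down", "x", ("not", ("F", ("tvar", "x"))))   # ↓x ¬F x
```

For example, the relation of Example 1 (with \(\varvec{t}_0, \ldots , \varvec{t}_4\) encoded as 0 to 4) is irreflexive but neither transitive nor connected, and adding a single loop at one time instance falsifies \(\downarrow _{\varvec{x}}\lnot \textsf {F}\varvec{x}\) exactly there.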

Example 1

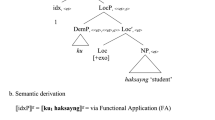

Let us consider a simplified version of Russell's example of the bald king of France, formalised as \((\lambda xB(x))(\imath yK(y))\), to see how \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) deals with several well-known problems. Consider a model \(\mathcal {M}=(\mathcal {T},\prec ,\mathcal {D},\mathcal {I})\), depicted in Fig. 1, with:

- \(\mathcal {T}= \{\varvec{t}_0, \varvec{t}_1, \varvec{t}_2, \varvec{t}_3, \varvec{t}_4\}\),
- \(\varvec{t}_0\prec \varvec{t}_1\), \(\varvec{t}_0\prec \varvec{t}_2\), \(\varvec{t}_2\prec \varvec{t}_3\), \(\varvec{t}_3\prec \varvec{t}_1\), \(\varvec{t}_3\prec \varvec{t}_4\),
- \(\mathcal {D}= \{o_1,o_2\}\),
- \(\mathcal {I}(B,\varvec{t}_0)=\emptyset \), \(\mathcal {I}(K,\varvec{t}_0)=\{o_1\}\), \(\mathcal {I}(B,\varvec{t}_1)=\mathcal {I}(K,\varvec{t}_1)=\{o_1\}\), \(\mathcal {I}(B,\varvec{t}_2)=\mathcal {D}\), \(\mathcal {I}(K,\varvec{t}_2)=\emptyset \), \(\mathcal {I}(B,\varvec{t}_3)=\mathcal {I}(K,\varvec{t}_3)=\mathcal {D}\), \(\mathcal {I}(B,\varvec{t}_4)=\mathcal {I}(K,\varvec{t}_4)=\{o_2\}\).

We disregard the part of \(\mathcal {I}\) that is inessential for our needs (nominals and individual constants), but define an assignment \(\mathcal {v}[\circ \mapsto o_1,\bullet \mapsto \varvec{t}_0]\) which maps all variables to \(o_1\) and all tense variables to \(\varvec{t}_0\). One may easily check that \((\lambda xB(x))(\imath yK(y))\) is satisfied at \(\varvec{t}_1\) and \(\varvec{t}_4\), but for different objects, namely \(o_1\) and \(o_2\), respectively, since descriptions are non-rigid terms. At the remaining time instances it is false; hence \(\lnot (\lambda xB(x))(\imath yK(y))\) is satisfied there. Note, however, that \((\lambda x\lnot B(x))(\imath yK(y))\) is satisfied at \(\varvec{t}_0\), since \(\lnot B(x)\) holds of \(o_1\), the unique king at \(\varvec{t}_0\). So at \(\varvec{t}_0\) there is no difference between saying that the king is not bald and saying that it is not the case that he is bald. On the other hand, at \(\varvec{t}_2\) and \(\varvec{t}_3\), \((\lambda x\lnot B(x))(\imath yK(y))\) is also false, since there is no unique king there. Also, \(\textsf {F}(\lambda x B(x))(\imath yK(y))\) is satisfied at \(\varvec{t}_0\), and at \(\varvec{t}_3\) even \(\textsf {G}(\lambda x B(x))(\imath yK(y))\) holds, whereas at \(\varvec{t}_2\), \(\textsf {F}(\lambda x B(x))(\imath yK(y))\) is false.

Tense model from Example 1
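The claims of Example 1 can be re-checked mechanically. The sketch below encodes the model with \(\varvec{t}_0, \ldots , \varvec{t}_4\) as the integers 0 to 4 and evaluates the \(\lambda \)-atoms under the Russellian reading described above, which is our paraphrase rather than the paper's official clause: \((\lambda x\varphi )(\imath yK(y))\) holds at a time instance iff exactly one object is a king there and that object satisfies \(\varphi \) there. \(\textsf {G}\) is taken as the usual dual of \(\textsf {F}\).

```python
# Example 1 encoded with t0..t4 as 0..4 (successor sets read off the listed ≺ pairs).
D = {"o1", "o2"}
succ = {0: {1, 2}, 1: set(), 2: {3}, 3: {1, 4}, 4: set()}
B = {0: set(), 1: {"o1"}, 2: D, 3: D, 4: {"o2"}}        # extension of B at each time
K = {0: {"o1"}, 1: {"o1"}, 2: set(), 3: D, 4: {"o2"}}   # extension of K at each time

def the(ext):
    """Russellian ιy: the unique object in ext, or None if there is none or several."""
    return next(iter(ext)) if len(ext) == 1 else None

def bald_king(t):
    """(λx B(x))(ιy K(y)) at t: a unique king exists and he is bald."""
    o = the(K[t])
    return o is not None and o in B[t]

def not_bald_king(t):
    """(λx ¬B(x))(ιy K(y)) at t: a unique king exists and he is not bald."""
    o = the(K[t])
    return o is not None and o not in B[t]

def F(holds, t):
    return any(holds(u) for u in succ[t])   # somewhere in the future

def G(holds, t):
    return all(holds(u) for u in succ[t])   # everywhere in the future (G = ¬F¬)

print([t for t in range(5) if bald_king(t)])   # prints [1, 4]
```

The same functions confirm the remaining observations: at time 0 the king is not bald, neither \(\lambda \)-atom holds at times 2 and 3, \(\textsf {F}\) of the bald-king atom holds at 0 but not at 2, and \(\textsf {G}\) of it holds at 3.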

3 Tableau calculus

Several proof systems, including tableaux, sequent calculi, and natural deduction, have been provided for different versions of \(\textsf {HL}\) (see, e.g., Braüner (2011), Indrzejczak (2010), Zawidzki (2014)). Most of them are so-called sat-calculi, in which each formula is preceded by the satisfaction operator. Using sat-calculi instead of calculi working with arbitrary formulas is justified by the fact that \(\varphi \) is valid in (any) hybrid logic iff \(@_{\varvec{j}}\varphi \) is valid, provided that \({\varvec{j}}\) does not occur in \(\varphi \). Hence proving \(@_{\varvec{j}}\varphi \) is in essence equivalent to proving \(\varphi \). In what follows we present a sat-tableau calculus for the logic \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\), which we denote by \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\). It is in principle the calculus of Blackburn and Marx (2003) enriched with rules for definite descriptions and the \(\lambda \)-operator. Strictly speaking, it is not a pure sat-calculus, since equality formulas are also admitted without satisfaction operators. Before we proceed to discuss the rules of \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\), let us briefly recall basic notions of the tableau methodology.

A tableau \(\mathfrak {T}\) generated by a calculus \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) is a derivation tree whose nodes are assigned formulas in the language of deduction. A branch of \(\mathfrak {T}\) is a simple path from the root to a leaf of \(\mathfrak {T}\). For simplicity, we will identify each branch \(\mathfrak {B}\) with the set of formulas assigned to nodes on \(\mathfrak {B}\).

A general form of rules is as follows: \(\frac{\Phi }{\Psi _1 | \ldots | \Psi _n}\), where \(\Phi \) is the set of premises and each \(\Psi _i\), for \(i\in \{1,\ldots ,n\}\), is a set of conclusions. If a rule has more than one set of conclusions, it is called a branching rule; otherwise it is non-branching. Thus, if a rule \(\frac{\Phi }{\Psi _1 | \ldots | \Psi _n}\) is applied to \(\Phi \) occurring on \(\mathfrak {B}\), \(\mathfrak {B}\) splits into n branches: \(\mathfrak {B}\cup \Psi _1, \ldots , \mathfrak {B}\cup \Psi _n\). A rule \((\textsf {R})\) with \(\Phi \) as the set of its premises is applicable to \(\Phi \) occurring on a branch \(\mathfrak {B}\) if it has not yet been applied to \(\Phi \) on \(\mathfrak {B}\). A set \(\Phi \) is called \((\textsf {R})\)-expanded on \(\mathfrak {B}\) if \((\textsf {R})\) has already been applied to \(\Phi \) on \(\mathfrak {B}\). A term \(\xi \) is called fresh on a branch \(\mathfrak {B}\) if it has not yet occurred on \(\mathfrak {B}\). We call a branch \(\mathfrak {B}\) closed if the inconsistency symbol \(\bot \) occurs on \(\mathfrak {B}\); otherwise it is open. A branch \(\mathfrak {B}\) is fully expanded if it is closed or no rules are applicable to (sets of) formulas occurring on \(\mathfrak {B}\). A tableau \(\mathfrak {T}\) is called closed if all of its branches are closed; otherwise \(\mathfrak {T}\) is called open. Finally, \(\mathfrak {T}\) is fully expanded if all its branches are fully expanded. A tableau proof of a formula \(\varphi \) is a closed tableau with \(\lnot @_{\varvec{i}}\varphi \) at its root, where \(\varvec{i}\) is a nominal not occurring in \(\varphi \). A formula \(\varphi \) is tableau-valid (with respect to the calculus \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\)) if there exists a tableau proof of \(\varphi \). 
The calculus \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) is sound (with respect to the semantics of \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\)) if, whenever a \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\)-formula \(\varphi \) is tableau-valid, then \(\varphi \) is valid. \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) is complete (with respect to the semantics of \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\)) if, whenever a \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\)-formula \(\varphi \) is valid, then \(\varphi \) is tableau-valid.
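The bookkeeping behind these notions (branch splitting, applicability, closure) can be sketched independently of the particular rules. The following is only an illustration, not an implementation of the calculus: formulas are opaque strings, the caller supplies which conclusion sets a rule instance yields, and the rule names "split" and "close" used in the usage example are hypothetical.

```python
from dataclasses import dataclass, field

BOT = "⊥"   # the inconsistency symbol

@dataclass
class Branch:
    """A branch, identified with its set of formulas (opaque strings in this sketch)."""
    formulas: frozenset
    expanded: set = field(default_factory=set)   # (rule, premises) pairs already applied here

    def closed(self):
        return BOT in self.formulas

    def applicable(self, rule, premises):
        """(R) is applicable to Φ on B if Φ occurs on B and (R) has not been applied to Φ on B."""
        prem = frozenset(premises)
        return prem <= self.formulas and (rule, prem) not in self.expanded

    def apply(self, rule, premises, conclusion_sets):
        """Apply a rule Φ / Ψ1 | ... | Ψn: B splits into B ∪ Ψ1, ..., B ∪ Ψn."""
        if not self.applicable(rule, premises):
            return [self]
        done = self.expanded | {(rule, frozenset(premises))}
        return [Branch(self.formulas | frozenset(psi), set(done)) for psi in conclusion_sets]

def tableau_closed(branches):
    """A tableau is closed if all of its branches are closed."""
    return all(b.closed() for b in branches)
```

A usage example: starting from the formulas @i ¬(p ∧ q), @i p and @i q, a branching rule splits the branch into one containing @i ¬p and one containing @i ¬q; each child then closes by a closure step, so the resulting tableau is closed. Children inherit the record of expanded premise sets, which is what prevents the same rule from being reapplied to the same premises on a branch.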

3.1 Basic rules

In Fig. 2 we present the rules constituting \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\). We transfer the notation from the previous section with the caveat that a denotes an object free variable that is fresh on the branch, whereas \(b, b_1, b_2\) denote object free variables or individual constants that are already present on the branch. Similarly, \(\varvec{i}\) denotes a nominal that is fresh on the branch, while \(\varvec{j}, \varvec{j}_1, \varvec{j}_2, \varvec{j}_3\) are nominals that have previously occurred on the branch. Recall that we are only considering sentences, that is, formulas without free variables (both object and tense). Consequently, even though there exist satisfaction conditions for formulas of the form \(\varvec{x}\) or \(@_{\varvec{x}}\varphi \), where \(\varvec{x}\) is a tense variable, the presented calculus does not comprise any rules that handle such formulas occurring independently on a branch, as such a scenario cannot materialise under the above assumption. The closure rules and the rules handling conjunction are self-explanatory; the remaining ones deserve a brief commentary. The rules \((\lnot )\) and \((\lnot \lnot )\) capture the self-duality of the @-operator. The quantifier rules are standard rules for possibilistic quantifiers ranging over the domain of a model. Bear in mind that, when a quantifier rule is applied, bound variables can only be substituted with free variables or constants, not with definite descriptions. The rules \((\textsf {F})\), \((\lnot \textsf {F})\), \((\textsf {P})\), and \((\lnot \textsf {P})\) are standard rules for temporal modalities relying on a hybrid representation of two time instances being linked by the temporal precedence relation. 
More precisely, in a model \(\mathcal {M}\) a time instance \(\varvec{t}_2\) represented by a nominal \(\varvec{j}_2\) occurs after a time instance \(\varvec{t}_1\) represented by a nominal \(\varvec{j}_1\) if and only if the formula \(@_{\varvec{j}_1} \textsf {F}\varvec{j}_2\) holds true in \(\mathcal {M}\). As for the nominal rules, \((\textsf {gl})\) and \((\lnot \textsf {gl})\) capture the global range of @: if a formula preceded by the @-operator is satisfied at one time instance in a model \(\mathcal {M}\), it is satisfied at all time instances in \(\mathcal {M}\). The rule \((\textsf {ref}_{\varvec{j}})\) guarantees that every nominal \(\varvec{j}\) is satisfied at the time instance labelled by \(\varvec{j}\). The bridge rules \((\textsf {nom})\) and \((\textsf {bridge})\) ensure that if a nominal \(\varvec{j}_1\) is satisfied at a time instance labelled by \(\varvec{j}_2\), then \(\varvec{j}_1\) and \(\varvec{j}_2\) are interchangeable. The rules \((\downarrow )\) and \((\lnot \!\downarrow )\) embody the semantics of the \(\downarrow _{\varvec{x}}\)-operator, which fixes the denotation of \(\varvec{x}\) to be the time instance at which the formula is currently evaluated. More concretely, if a \(\downarrow _{\varvec{x}}\)- (or \(\lnot \downarrow _{\varvec{x}}\)-)formula is evaluated at a time instance labelled by \(\varvec{j}\), that is, is preceded by \(@_{\varvec{j}}\), then \(\varvec{x}\) is substituted with \(\varvec{j}\) in the formula in the scope of \(\downarrow _{\varvec{x}}\). The rules \((\textsf {eq})\) and \((\lnot \textsf {eq})\) reflect the fact that object constants have the same denotations at all time instances in the model. The rule \((\imath _1^o)\) handles the case where, at a given time instance, an object definite description occurs as the argument of a \(\lambda \)-expression. Then \((\imath _1^o)\) enforces that both formulas (the one constituting the description and the body of the \(\lambda \)-expression) hold of the same fresh object free variable at that time instance. 
If, moreover, a formula constituting an object definite description occurs independently on the branch, preceded by a nominal representing a given time instance, then \((\imath _2^o)\) guarantees that all the free variables or constants it holds of at this time instance denote the same object. If at a given time instance a \(\lambda \)-expression \(\lambda x \psi \) does not hold of an object definite description \(\imath y \varphi \), then for any free variable or constant b present on the branch, either \(\varphi \) does not hold of b at this time instance, or \(\psi \) does not hold of b at this time instance, or we can introduce a fresh free variable a distinct from b such that \(\varphi \) holds of a at this time instance. The rules for temporal definite descriptions work as follows. The rule \((\imath _1^t)\) unpacks a temporal definite description at the time instance of its evaluation. The rule \((\imath _2^t)\) guarantees that the time instance satisfying the formula which constitutes a temporal definite description is unique. According to \((\lnot \imath ^t)\), if a temporal definite description is not satisfied at a time instance, then either the formula constituting this description is not satisfied there or it is also satisfied at a different time instance. The rule \((@\imath ^t)\) reduces a formula \(@_{\imath \varvec{x}\varphi }\psi \) being satisfied at a time instance to the temporal definite description \(\imath \varvec{x}\varphi \) and the formula \(\psi \) being jointly satisfied at some (not necessarily distinct) time instance. The rule \((\lnot @\imath ^t)\) guarantees that if a formula \(@_{\imath \varvec{x}\varphi }\psi \) is not satisfied at some time instance, then \(\imath \varvec{x}\varphi \) and \(\psi \) cannot be jointly satisfied at any time instance. Note that these rules play a role analogous to that of \((\textsf {gl})\) and \((\lnot \textsf {gl})\), but this time with a tense definite description in place of \(\varvec{j}_2\). 
In this case, however, the rule does not make the description the subscript of the leftmost @-operator since, by the construction of proof trees, leftmost @-operators must always be subscripted with nominals. The rules \((\lambda )\) and \((\lnot \lambda )\) are tableau counterparts of the standard \(\beta \)-reduction known from the \(\lambda \)-calculus; their application is restricted to constants. Finally, \((\textsf {ref})\) guarantees that \(=\) is reflexive over all constants occurring on the branch, and \((\textsf {RR})\) is a standard replacement rule. The rule \((\textsf {NED})\) is a counterpart of the non-empty domain assumption. Note, however, that it is applied only if no other rule is applicable and no formula of the form \(b=b\) is already present on the branch. Notice also that \((\textsf {ref}_{\varvec{j}})\) and \((\textsf {ref})\) have no explicit premises; it is, however, assumed that the nominal \(\varvec{j}\) or the object constant b already occurs on the branch.
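The object-level counterparts, that is, the application of a \(\lambda \)-expression to a constant, handled by \((\lambda )\) and \((\lnot \lambda )\), and to an object definite description, handled by \((\imath _1^o)\), \((\imath _2^o)\), and \((\lnot \imath ^o)\), can be sketched in the same style. The sketch assumes a constant domain and rigid constants, as in the paper, and represents open formulas semantically as predicates on pairs (time instance, object); all names and the toy data are hypothetical.

```python
from typing import Callable, Dict, Hashable, Iterable

# Assumption of this sketch: psi(t, o) means "psi holds at instance t when its
# free object variable denotes o"; the domain is the same at every instance.
ObjPred = Callable[[int, Hashable], bool]


def apply_to_const(psi: ObjPred, t: int, b: str, interp: Dict[str, Hashable]) -> bool:
    """(λx psi)(b) at t, i.e. beta-reduction: psi holds at t of the object b denotes."""
    return psi(t, interp[b])  # rigid constant: interp does not depend on t


def apply_to_description(domain: Iterable[Hashable], psi: ObjPred,
                         phi: ObjPred, t: int) -> bool:
    """(λx psi)(ıy phi) at t: exactly one object satisfies phi at t, and psi holds of it."""
    witnesses = [o for o in domain if phi(t, o)]
    return len(witnesses) == 1 and psi(t, witnesses[0])


domain = {"ann", "bob", "cyd"}
chair = lambda t, o: (t, o) in {(0, "ann"), (1, "bob"), (1, "cyd")}
in_london = lambda t, o: o in {"ann", "bob"}

print(apply_to_description(domain, in_london, chair, 0))  # True: unique chair, in London
print(apply_to_description(domain, in_london, chair, 1))  # False: two candidates
print(apply_to_description(domain, in_london, chair, 2))  # False: no candidate
print(apply_to_const(in_london, 2, "b", {"b": "bob"}))    # True
```

Both failing cases make the application false without \(\psi \) failing of any particular object, which is why \((\lnot \imath ^o)\) branches into three alternatives rather than simply negating \(\psi \).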

Fig. 3 Example proofs conducted in \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\): (a) a proof tree for the derivation \(@_{\imath \varvec{x}W(t,j)}M(t,j,l),\ @_{\imath \varvec{x}W(t,j)}\imath \varvec{y}B\ \vdash \ @_{\imath \varvec{y}B}M(t,j,l)\) from Example 2; (b) a proof of the derivability of the rule \((\textsf {DD})\) \(@_{\varvec{j}} @_{\imath \varvec{x}\varphi }\imath \varvec{y}\psi ,\ @_{\varvec{j}}@_{\imath \varvec{x}\varphi }\chi \ / \ @_{\varvec{j}} @_{\imath \varvec{y}\psi }\chi \); (c) a proof of the validity of the Barcan formula in \(\textsf {HFL}_{\textsf {K}}\)

Example 2

We provide a simple example to illustrate the application of our rules for tense definite descriptions. Consider the following valid argument:

In the year of their wedding, Tricia and John moved to London. Tricia and John's wedding and Brexit happened in the same year. Hence, they moved to London in the year of Brexit.

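It may be formalised in a simplified form (omitting details not relevant to the validity of this example) as follows:

\(@_{\imath \varvec{x}W(t,j)}M(t,j,l),\ @_{\imath \varvec{x}W(t,j)}\imath \varvec{y}B\ \vdash \ @_{\imath \varvec{y}B}M(t,j,l)\)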

As shown by the tableau proof displayed in Fig. 3(a), the above reasoning is indeed valid in \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\).

Example 3

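Let us consider a definite description counterpart of the rule \((\textsf {nom})\), which is defined on nominals, namely the rule:

\((\textsf {DD})\ \frac{@_{\varvec{j}} @_{\imath \varvec{x}\varphi }\imath \varvec{y}\psi \quad @_{\varvec{j}}@_{\imath \varvec{x}\varphi }\chi }{@_{\varvec{j}} @_{\imath \varvec{y}\psi }\chi }\)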

In Fig. 3(b) we show that \((\textsf {DD})\) is derivable in \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\).
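As an independent sanity check of \((\textsf {DD})\), and of the argument in Example 2, which has the same shape without the outer \(@_{\varvec{j}}\), here is a brute-force Python search for counterexamples over all models with at most three time instances. Since @ is global, the outer \(@_{\varvec{j}}\) can be dropped, and, assuming that \(\varvec{x}\) and \(\varvec{y}\) occur free only inside \(\varphi \) and \(\psi \) respectively, each of \(\varphi \), \(\psi \), \(\chi \) is determined by its extension, i.e. the set of instances where it holds. A bounded search of this kind is no substitute for the derivation in Fig. 3(b); the function names are hypothetical.

```python
from itertools import product


def subsets(n):
    """All subsets of the instances {0, ..., n-1}."""
    return [frozenset(i for i in range(n) if mask >> i & 1) for mask in range(2 ** n)]


def at_iota(n, desc, body):
    """@_{ı desc} body, where desc and body are extensions (sets of instances).

    The description holds at u iff u is its only instance, i.e. desc == {u}."""
    return any(desc == {u} and u in body for u in range(n))


def find_dd_counterexample(max_instances=3):
    """Return (n, phi, psi, chi) violating (DD), or None if none exists up to the bound."""
    for n in range(1, max_instances + 1):
        for phi, psi, chi in product(subsets(n), repeat=3):
            iota_psi = psi if len(psi) == 1 else frozenset()  # extension of ıy psi
            premises = at_iota(n, phi, iota_psi) and at_iota(n, phi, chi)
            if premises and not at_iota(n, psi, chi):
                return n, phi, psi, chi
    return None


print(find_dd_counterexample())  # None
```

The search space is tiny (512 triples of extensions for three instances), and the absence of a counterexample matches the informal reading: both premises single out the same instance, which is then the unique \(\psi \)-instance and satisfies \(\chi \).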

Example 4

Since in \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) we assume that the object domain is common to all time instances, that is, we make the constant domain assumption, the Barcan formula should be valid in this logic. This is indeed the case, as shown in Fig. 3(c) for the following instance of the Barcan formula:
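The role of the constant domain assumption can be illustrated with a bounded Python search. The instance used in Fig. 3(c) is not reproduced here, so, as an assumption of this illustration, the sketch checks the familiar instance \(\forall x\,\textsf {G}P(x) \rightarrow \textsf {G}\forall x P(x)\), writing \(\textsf {G}\) for \(\lnot \textsf {F}\lnot \), over all constant-domain models with at most two instances and two objects. For contrast only, it then shows the instance failing once the domain may grow along \(\prec \), a varying-domain semantics that \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\) does not use. The function names are hypothetical.

```python
def barcan_holds(times, prec, dom_at, ext, t):
    """Evaluate  ∀x G P(x) → G ∀x P(x)  at instance t, with G read as ¬F¬.

    dom_at[s] is the range of the quantifier at instance s, and ext is the set
    of pairs (s, o) such that P holds of object o at instance s."""
    later = [u for u in times if (t, u) in prec]
    lhs = all(all((u, o) in ext for u in later) for o in dom_at[t])
    rhs = all(all((u, o) in ext for o in dom_at[u]) for u in later)
    return (not lhs) or rhs


def constant_domain_counterexample(max_t=2, max_d=2):
    """Search all constant-domain models up to the bound; None means no counterexample."""
    for nt in range(1, max_t + 1):
        times = list(range(nt))
        pairs = [(s, u) for s in times for u in times]
        for nd in range(1, max_d + 1):
            dom_at = {s: list(range(nd)) for s in times}  # one domain for all instances
            cells = [(s, o) for s in times for o in range(nd)]
            for r in range(2 ** len(pairs)):
                prec = {p for i, p in enumerate(pairs) if r >> i & 1}
                for e in range(2 ** len(cells)):
                    ext = {c for i, c in enumerate(cells) if e >> i & 1}
                    for t in times:
                        if not barcan_holds(times, prec, dom_at, ext, t):
                            return nt, prec, nd, ext, t
    return None


print(constant_domain_counterexample())  # None

# Contrast only: the domain grows from instance 0 to instance 1, and the new
# object "b" does not satisfy P at 1, so the instance fails at 0.
growing = {0: ["a"], 1: ["a", "b"]}
print(barcan_holds([0, 1], {(0, 1)}, growing, {(1, "a")}, 0))  # False
```

With a single domain the two sides only differ in the order of two universal quantifiers over the same ranges, which is why no counterexample can arise.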

4 Soundness and completeness

In what follows, we use two auxiliary results, whose standard proofs by induction on the complexity of \(\varphi \) are omitted.

Lemma 1

(Coincidence Lemma) Let \(\varphi \in \textsf {FOR}\), let \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\) be a tense model, let \(\varvec{t} \in \mathcal {T}\), and let \(\mathcal {v}_1,\mathcal {v}_2\) be assignments. If \(\mathcal {v}_1(\mathcal {x})=\mathcal {v}_2(\mathcal {x})\) for each \(\mathcal {x} \in \textsf {FVAR} \cup \textsf {TVAR}\) occurring in \(\varphi \), then \(\mathcal {M}, \varvec{t}, \mathcal {v}_1 \models \varphi \) iff \(\mathcal {M}, \varvec{t}, \mathcal {v}_2 \models \varphi \).

Lemma 2

(Substitution Lemma) Let \(\varphi \in \textsf {FOR}\), \(a\in \textsf {FVAR}\), \(\varvec{i} \in \textsf {NOM}\), let \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\) be a tense model, and let \(\varvec{t} \in \mathcal {T}\). Then \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \varphi [x/a]\) iff \(\mathcal {M}, \varvec{t}, \mathcal {v}[x\mapsto \mathcal {v}(a)]\models \varphi \), where \(x\in \textsf {BVAR}\). Similarly, \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \varphi [\varvec{x}/\varvec{i}]\) iff \(\mathcal {M}, \varvec{t}, \mathcal {v}[\varvec{x}\mapsto \mathcal {I}(\varvec{i})]\models \varphi \), where \(\varvec{x}\in \textsf {TVAR}\).

4.1 Soundness

Let \((\textsf {R})\) \(\frac{\Phi }{\Psi _1\mid \ldots \mid \Psi _n}\) be a rule from \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\). We say that \((\textsf {R})\) is sound if whenever \(\Phi \) is satisfiable, then \(\Phi \cup \Psi _i\) is satisfiable, for some \(i \in \{1,\ldots ,n\}\). It holds that:

Lemma 3

All rules of \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) are sound.

Proof

Since the closure, propositional, quantifier, basic modal, and most nominal rules are standard and have been proved sound elsewhere (see, e.g., Braüner (2011)), below we only prove the soundness of the \(\imath \)-object rules, the \(\imath \)-temporal rules, and the \(\lambda \)-rules.

\((\imath _1^o)\) Assume that \(@_{\varvec{j}}(\lambda x\psi )(\imath y \varphi )\) is satisfiable. This means that there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}(\lambda x \psi )(\imath y \varphi )\). Hence, by the satisfaction condition for @-formulas, there exists a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M},\varvec{t}',\mathcal {v}\models (\lambda x \psi )(\imath y \varphi )\). Thus, there is an object \(o \in \mathcal {D}\) such that \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o] \models \varphi \) and \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o] \models \psi \), and for any \(o' \in \mathcal {D}\), if \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o'] \models \varphi \), then \(o' = o\). Without loss of generality, let us assume that a is a fresh free variable such that \(\mathcal {v}(a) = o\). By the Substitution Lemma, we get that \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \varphi [y/a],\psi [x/a]\). Finally, this means that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}\varphi [y/a],@_{\varvec{j}}\psi [x/a]\), as expected.

\((\imath _2^o)\) Assume that \(@_{\varvec{j}}(\lambda x\psi )(\imath y \varphi )\), \(@_{\varvec{j}}\varphi [y/b_1]\), and \(@_{\varvec{j}}\varphi [y/b_2]\) are jointly satisfiable. This means that there exist a model \(\mathcal {M}=(\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}(\lambda x \psi )(\imath y \varphi ), @_{\varvec{j}}\varphi [y/b_1], @_{\varvec{j}}\varphi [y/b_2]\). Thus, by the satisfaction condition for @-formulas, we infer that there is a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M}, \varvec{t}', \mathcal {v}\models (\lambda x\psi )(\imath y \varphi ), \varphi [y/b_1], \varphi [y/b_2]\). Hence, there exists an object \(o \in \mathcal {D}\) such that \(\mathcal {M}, \varvec{t}',\mathcal {v}[x,y\mapsto o] \models \varphi , \psi \) and, for any \(o' \in \mathcal {D}\), if \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o'] \models \varphi \), then \(o'=o\). Let \(\mathcal {v}(b_1) = o'\) and \(\mathcal {v}(b_2) = o''\). By the Substitution Lemma, \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o'] \models \varphi \) and \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o''] \models \varphi \). Since x does not occur freely in \(\varphi \), by the Coincidence Lemma we get \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o,y\mapsto o'] \models \varphi \) and \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o,y\mapsto o''] \models \varphi \). By the relevant satisfaction condition we obtain \(o' = o\) and \(o'' = o\), and so \(o = o' = o''\). As \(o = \mathcal {v}(b_1) = \mathcal {v}(b_2)\), the respective satisfaction conditions yield \(\mathcal {M}, \varvec{t}'', \mathcal {v}\models b_1 = b_2\) for any \(\varvec{t}'' \in \mathcal {T}\); in particular, \(\mathcal {M}, \varvec{t}, \mathcal {v}\models b_1=b_2\).

\((\lnot \imath ^o)\) Assume that \(\lnot @_{\varvec{j}}(\lambda x \psi )(\imath y \varphi )\) is satisfiable. Then there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}}(\lambda x \psi )(\imath y \varphi )\), and so \(\mathcal {M}, \varvec{t}, \mathcal {v}\not \models @_{\varvec{j}}(\lambda x \psi )(\imath y \varphi )\). By the satisfaction condition for @-formulas, there exists a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M}, \varvec{t}', \mathcal {v}\not \models (\lambda x \psi )(\imath y \varphi )\). Consequently, for every object \(o \in \mathcal {D}\) (at least) one of the following three conditions holds: 1. \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o] \not \models \psi \); 2. \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o] \not \models \varphi \); 3. there exists \(o'\in \mathcal {D}\) such that \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o'] \models \varphi \) and \(o'\ne o\). Let b be a free variable present on the branch and let \(\mathcal {v}(b) = o'\). If (1) holds for \(o'\), that is, \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o'] \not \models \psi \), then, by the Substitution Lemma, \(\mathcal {M}, \varvec{t}', \mathcal {v}\not \models \psi [x/b]\), whence, by the satisfaction condition for negation, we get \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \lnot \psi [x/b]\) and, subsequently, \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}\lnot \psi [x/b]\) and \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}} \psi [x/b]\). Now let (2) hold for \(o'\), that is, \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o'] \not \models \varphi \).
By the Substitution Lemma we get \(\mathcal {M}, \varvec{t}', \mathcal {v}\not \models \varphi [y/b]\). By the satisfaction conditions for negation and @-formulas we obtain, subsequently, \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \lnot \varphi [y/b]\), \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}\lnot \varphi [y/b]\), and \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}}\varphi [y/b]\). Finally, assume that (3) holds for \(o'\), that is, there exists \(o''\in \mathcal {D}\) such that \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o''] \models \varphi \) and \(o'' \ne o'\). Without loss of generality we may assume that there exists \(a \in \textsf {FVAR}\) such that a does not occur freely in \(\varphi \) and \(\mathcal {v}(a) = o''\). Since x does not occur freely in \(\varphi \), we can apply the Substitution Lemma twice and from \(\mathcal {M}, \varvec{t}', \mathcal {v}[y\mapsto o''] \models \varphi \) obtain \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \varphi [y/a]\) and further \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}\varphi [y/a]\). Moreover, since \(\mathcal {v}(a) = o'' \ne o' = \mathcal {v}(b)\), we also have \(\mathcal {M}, \varvec{t}, \mathcal {v}\models a \ne b\).

\((\imath _1^t)\) Assume that \(@_{\varvec{j}}\imath \varvec{x}\varphi \) is satisfiable. This means that there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}\imath \varvec{x}\varphi \). Hence, by the satisfaction condition for @-formulas, there exists a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M},\varvec{t}',\mathcal {v}\models \imath \varvec{x}\varphi \), and further, \(\mathcal {M}, \varvec{t}', \mathcal {v}[\varvec{x}\mapsto \varvec{t}'] \models \varphi \). Without loss of generality, let us assume that \(\varvec{i}\) is a fresh nominal such that \(\mathcal {I}(\varvec{i}) = \varvec{t}'\). By the Substitution Lemma, we get that \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \varphi [\varvec{x}/\varvec{i}]\). Finally, this means that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}} \varphi [\varvec{x}/\varvec{i}]\), as required.

\((\imath _2^t)\) Assume that \(@_{\varvec{j}_1}\imath \varvec{x}\varphi \) and \(@_{\varvec{j}_2}\varphi [\varvec{x}/\varvec{j}_2]\) are jointly satisfiable. This means that there exist a model \(\mathcal {M}=(\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}_1}\imath \varvec{x}\varphi , @_{\varvec{j}_2}\varphi [\varvec{x}/\varvec{j}_2]\). Thus, by the satisfaction condition for @-formulas, we infer that there are time instances \(\varvec{t}',\varvec{t}'' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}_1) = \varvec{t}'\), \(\mathcal {I}(\varvec{j}_2) = \varvec{t}''\), \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \imath \varvec{x}\varphi \), and \(\mathcal {M}, \varvec{t}'', \mathcal {v}\models \varphi [\varvec{x}/\varvec{j}_2]\). Further, by the satisfaction condition for \(\imath \varvec{x}\varphi \), we get that \(\mathcal {M}, \varvec{t}', \mathcal {v}[\varvec{x}\mapsto \varvec{t}'] \models \varphi \). Since \(\mathcal {I}(\varvec{j}_2) = \varvec{t}''\), the Substitution Lemma gives \(\mathcal {M}, \varvec{t}'', \mathcal {v}[\varvec{x}\mapsto \varvec{t}''] \models \varphi \), and so, again by the satisfaction condition for \(\imath \varvec{x}\varphi \), it follows that \(\varvec{t}'=\varvec{t}''\). Since \(\mathcal {M}, \varvec{t}'',\mathcal {v}\models \varvec{j}_2\) and \(\varvec{t}'=\varvec{t}''\), we get \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \varvec{j}_2\) and subsequently, \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}_1}\varvec{j}_2\).

\((\lnot \imath ^t)\) Assume that \(\lnot @_{\varvec{j}}\imath \varvec{x}\varphi \) is satisfiable. Then there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}}\imath \varvec{x}\varphi \), and so \(\mathcal {M}, \varvec{t}, \mathcal {v}\not \models @_{\varvec{j}}\imath \varvec{x}\varphi \). By the satisfaction condition for @-formulas there exists a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M}, \varvec{t}', \mathcal {v}\not \models \imath \varvec{x}\varphi \). By the satisfaction condition for \(\imath \varvec{x}\varphi \), either \(\mathcal {M}, \varvec{t}', \mathcal {v}[\varvec{x}\mapsto \varvec{t}'] \not \models \varphi \) or there exists a time instance \(\varvec{t}''\in \mathcal {T}\) such that \(\mathcal {M},\varvec{t}'',\mathcal {v}[\varvec{x}\mapsto \varvec{t}'']\models \varphi \) and \(\varvec{t}'\ne \varvec{t}''\). In the former case, since \(\mathcal {I}(\varvec{j}) = \varvec{t}'\), the Substitution Lemma gives \(\mathcal {M}, \varvec{t}', \mathcal {v}\not \models \varphi [\varvec{x}/\varvec{j}]\) and further, by the relevant satisfaction conditions, \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \lnot \varphi [\varvec{x}/\varvec{j}]\), \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}\lnot \varphi [\varvec{x}/\varvec{j}]\), and finally, \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}}\varphi [\varvec{x}/\varvec{j}]\). In the latter case assume, without loss of generality, that \(\varvec{i}\in \textsf {NOM}\) is such that \(\mathcal {I}(\varvec{i}) = \varvec{t}''\).
Since \(\varvec{t}'\ne \varvec{t}''\), by the respective satisfaction conditions we get, subsequently, \(\mathcal {M},\varvec{t}',\mathcal {v}\not \models \varvec{i}\), \(\mathcal {M},\varvec{t}',\mathcal {v}\models \lnot \varvec{i}\), \(\mathcal {M},\varvec{t},\mathcal {v}\models @_{\varvec{j}}\lnot \varvec{i}\), and \(\mathcal {M},\varvec{t},\mathcal {v}\models \lnot @_{\varvec{j}}\varvec{i}\). Moreover, by the Substitution Lemma we obtain \(\mathcal {M},\varvec{t}'',\mathcal {v}\models \varphi [\varvec{x}/\varvec{i}]\), whence, by the satisfaction condition for @-formulas, we derive \(\mathcal {M},\varvec{t},\mathcal {v}\models @_{\varvec{i}}\varphi [\varvec{x}/\varvec{i}]\).

\((@\imath ^t)\) Assume that \(@_{\varvec{j}}@_{\imath \varvec{x}\varphi }\psi \) is satisfiable. This means that there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}@_{\imath \varvec{x}\varphi }\psi \). Hence, by the satisfaction conditions for @-formulas, there exist time instances \(\varvec{t}', \varvec{t}'' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\), \(\mathcal {M},\varvec{t}',\mathcal {v}\models @_{\imath \varvec{x}\varphi }\psi \), and further, \(\mathcal {M}, \varvec{t}'', \mathcal {v}\models \imath \varvec{x}\varphi ,\psi \). Without loss of generality, let us assume that \(\varvec{i}\) is a fresh nominal such that \(\mathcal {I}(\varvec{i}) = \varvec{t}''\). Then we obtain \(\mathcal {M},\varvec{t},\mathcal {v}\models @_{\varvec{i}}\imath \varvec{x}\varphi , @_{\varvec{i}}\psi \).

\((\lnot @\imath ^t)\) Assume that \(\lnot @_{\varvec{j}_1}@_{\imath \varvec{x}\varphi }\psi \) is satisfiable. This means that there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}_1}@_{\imath \varvec{x}\varphi }\psi \). By the satisfaction condition for \(\lnot \) we get \(\mathcal {M}, \varvec{t}, \mathcal {v}\not \models @_{\varvec{j}_1}@_{\imath \varvec{x}\varphi }\psi \). Next, by the satisfaction condition for @-formulas, there exists a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}_1) = \varvec{t}'\) and \(\mathcal {M},\varvec{t}',\mathcal {v}\not \models @_{\imath \varvec{x}\varphi }\psi \). Let \(\varvec{t}'' \in \mathcal {T}\) and \(\varvec{j}_2 \in \textsf {NOM}\) be such that \(\mathcal {I}(\varvec{j}_2) = \varvec{t}''\). From the satisfaction condition for \(@_{\imath \varvec{x}\varphi }\) we derive that either \(\mathcal {M},\varvec{t}'',\mathcal {v}\not \models \imath \varvec{x}\varphi \) or \(\mathcal {M},\varvec{t}'',\mathcal {v}\not \models \psi \). In the former case, by applying the relevant satisfaction conditions we obtain \(\mathcal {M},\varvec{t},\mathcal {v}\not \models @_{\varvec{j}_2}\imath \varvec{x}\varphi \), and finally, \(\mathcal {M},\varvec{t},\mathcal {v}\models \lnot @_{\varvec{j}_2}\imath \varvec{x}\varphi \). In the latter case, by applying the same satisfaction conditions we derive \(\mathcal {M},\varvec{t},\mathcal {v}\not \models @_{\varvec{j}_2}\psi \), and finally, \(\mathcal {M},\varvec{t},\mathcal {v}\models \lnot @_{\varvec{j}_2}\psi \), as expected.

\((\lambda )\) Let b be a free variable present on the branch. Assume that \(@_{\varvec{j}}(\lambda x \psi )(b)\) is satisfiable. Then there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models @_{\varvec{j}}(\lambda x \psi )(b)\). By the satisfaction condition for @-formulas, there exists a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M}, \varvec{t}', \mathcal {v}\models (\lambda x \psi )(b)\). By the respective satisfaction condition, \(\mathcal {v}(b) = o\) for some \(o \in \mathcal {D}\), and \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o] \models \psi \). By the Substitution Lemma, \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \psi [x/b]\); hence \(\psi [x/b]\), and thus \(@_{\varvec{j}} \psi [x/b]\), are satisfiable.

\((\lnot \lambda )\) Let b be a free variable present on the branch. Assume that \(\lnot @_{\varvec{j}}(\lambda x \psi )(b)\) is satisfiable. Then there exist a model \(\mathcal {M}= (\mathcal {T}, \prec ,\mathcal {D},\mathcal {I})\), a time instance \(\varvec{t} \in \mathcal {T}\), and an assignment \(\mathcal {v}\) such that \(\mathcal {M}, \varvec{t}, \mathcal {v}\models \lnot @_{\varvec{j}}(\lambda x \psi )(b)\). By the satisfaction condition for @-formulas, there is a time instance \(\varvec{t}' \in \mathcal {T}\) such that \(\mathcal {I}(\varvec{j}) = \varvec{t}'\) and \(\mathcal {M},\varvec{t}', \mathcal {v}\not \models (\lambda x \psi )(b)\). Assume that \(\mathcal {v}(b) = o\) for some \(o \in \mathcal {D}\). Then, by the respective satisfaction condition, \(\mathcal {M}, \varvec{t}', \mathcal {v}[x\mapsto o] \not \models \psi \). By the Substitution Lemma we get that \(\mathcal {M}, \varvec{t}', \mathcal {v}\not \models \psi [x/b]\). By the satisfaction condition for negation it follows that \(\mathcal {M}, \varvec{t}', \mathcal {v}\models \lnot \psi [x/b]\), and finally, \(\mathcal {M},\varvec{t}, \mathcal {v}\models @_{\varvec{j}}\lnot \psi [x/b]\). \(\square \)

Now we are ready to prove the following theorem:

Theorem 4

(Soundness) The tableau calculus \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) is sound.

Proof

Let \(\varphi \) be a \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\)-formula and let \(\mathfrak {T}\) be a \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\)-proof of \(\varphi \), so that each branch of \(\mathfrak {T}\) is closed. By Lemma 3, all the rules of \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) preserve satisfiability. Hence, going from the leaves of \(\mathfrak {T}\) up to its root, we start from the unsatisfiable leaves and mark the sets of formulas labelling the preceding nodes as unsatisfiable, eventually reaching the root, which is labelled with \(\lnot @_{\varvec{j}} \varphi \). Since \(\lnot @_{\varvec{j}} \varphi \) is unsatisfiable too, \(\varphi \) is valid. \(\square \)

4.2 Completeness

In this section, we show that \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) is complete with respect to the semantics of \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\), that is, that all valid \(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath }\)-formulas have \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\)-proofs. To this end, we prove the contrapositive: if applying \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\) to a formula \(\lnot @_{\varvec{j}}\varphi \) generates a tableau with an open and fully expanded branch \(\mathfrak {B}\) (so that the tableau is not a proof of \(\varphi \)), then there exists a model satisfying \(\lnot \varphi \), and this model can be constructed from the information stored on \(\mathfrak {B}\).

Now, for the remainder of this section, assume that \(\mathfrak {T}\) is a \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\)-tableau with \(\lnot @_{\varvec{j}}\varphi \) at the root and \(\mathfrak {B}\) is an open and fully expanded branch of \(\mathfrak {T}\). Let \(\textsf {FVAR}_\mathfrak {B}\), \(\textsf {CONS}_\mathfrak {B}\), and \(\textsf {NOM}_\mathfrak {B}\) be, respectively, the sets of all free variables, individual constants, and nominals occurring on \(\mathfrak {B}\). Below we define relations \(\sim _\mathfrak {B}\subseteq (\textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B})^2\) and \(\approx _\mathfrak {B}\subseteq \textsf {NOM}_\mathfrak {B}^2\). Let \(b_1,b_2 \in \textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\) and \(\varvec{j}_1,\varvec{j}_2 \in \textsf {NOM}_\mathfrak {B}\). Then:

- \(b_1 \sim _\mathfrak {B}b_2\) if and only if \(b_1 = b_2 \in \mathfrak {B}\);
- \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_2\) if and only if \(@_{\varvec{j}_1}\varvec{j}_2 \in \mathfrak {B}\).

The proposition below states a property of \(\sim _\mathfrak {B}\) and \(\approx _\mathfrak {B}\) on which the subsequent construction of a model relies.

Proposition 5

1. \(\sim _\mathfrak {B}\) is an equivalence relation on \(\textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\).
2. \(\approx _\mathfrak {B}\) is an equivalence relation on \(\textsf {NOM}_\mathfrak {B}\).

Proof

Reflexivity of \(\sim _\mathfrak {B}\) follows from the expandedness of \(\mathfrak {B}\) and the presence of \((\textsf {ref})\) in \(\mathscr{T}\mathscr{C}(\textsf {FOHL}^\textsf {F,P}_{\lambda ,\imath })\). Reflexivity of \(\approx _\mathfrak {B}\) is a consequence of the expandedness of \(\mathfrak {B}\), which results in \(@_{\varvec{j}} \varvec{j}\) being present on \(\mathfrak {B}\) for each \(\varvec{j} \in \textsf {NOM}_\mathfrak {B}\) (thanks to \((\textsf {ref}_{\varvec{j}})\)). For the symmetry of \(\sim _\mathfrak {B}\), assume that \(b_1 = b_2 \in \mathfrak {B}\). By the expandedness of \(\mathfrak {B}\), we know that \((\textsf {ref})\) has been applied to \(b_1\) and \(b_2\), yielding \(b_1=b_1,b_2=b_2 \in \mathfrak {B}\). A single application of \((\textsf {RR})\) to \(b_1=b_1,b_1=b_2 \in \mathfrak {B}\) results in \(b_2=b_1 \in \mathfrak {B}\). To prove the symmetry of \(\approx _\mathfrak {B}\), assume that \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_2\). Then \(@_{\varvec{j}_1}\varvec{j}_2 \in \mathfrak {B}\). Since \(\mathfrak {B}\) is fully expanded, \(@_{\varvec{j}_1} \varvec{j}_1 \in \mathfrak {B}\) by \((\textsf {ref}_{\varvec{j}})\). Then \((\textsf {nom})\) must have been applied to \(@_{\varvec{j}_1}\varvec{j}_2, @_{\varvec{j}_1}\varvec{j}_1 \in \mathfrak {B}\), thus yielding \(@_{\varvec{j}_2}\varvec{j}_1 \in \mathfrak {B}\). For the transitivity of \(\sim _\mathfrak {B}\), assume that \(b_1,b_2,b_3 \in \textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\), \(b_1 \sim _\mathfrak {B}b_2\), and \(b_2 \sim _\mathfrak {B}b_3\). If \(b_1\) is identical to \(b_2\) or \(b_2\) is identical to \(b_3\), we immediately obtain \(b_1\sim _\mathfrak {B}b_3\); if \(b_1\) is identical to \(b_3\), then \(b_1\sim _\mathfrak {B}b_3\) holds by reflexivity. If \(b_1, b_2, b_3\) are pairwise distinct, the identities \(b_1 = b_2\) and \(b_2 = b_3\) must occur on \(\mathfrak {B}\). A single application of \((\textsf {RR})\) to them yields \(b_1 = b_3\), and since \(\mathfrak {B}\) is fully expanded, \(b_1 = b_3 \in \mathfrak {B}\). Hence, \(b_1 \sim _\mathfrak {B}b_3\). For the transitivity of \(\approx _\mathfrak {B}\), assume that \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_2\) and \(\varvec{j}_2 \approx _\mathfrak {B}\varvec{j}_3\). Then \(@_{\varvec{j}_1}\varvec{j}_2, @_{\varvec{j}_2}\varvec{j}_3 \in \mathfrak {B}\). By the argument used in the proof of the symmetry of \(\approx _\mathfrak {B}\), we know that \(@_{\varvec{j}_2}\varvec{j}_1 \in \mathfrak {B}\). Applying \((\textsf {nom})\) to \(@_{\varvec{j}_2}\varvec{j}_1, @_{\varvec{j}_2}\varvec{j}_3 \in \mathfrak {B}\) gives us \(@_{\varvec{j}_1}\varvec{j}_3 \in \mathfrak {B}\), and so \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_3\). \(\square \)

We now show how to use the data stored on \(\mathfrak {B}\) to construct the branch structure \(\mathcal {M}_\mathfrak {B}= (\mathcal {T}_\mathfrak {B}, \prec _\mathfrak {B}, \mathcal {D}_\mathfrak {B}, \mathcal {I}_\mathfrak {B})\) and the branch assignment \(\mathcal {v}_\mathfrak {B}\). Let \(\textsf {PRED}_\mathfrak {B}\), \(\textsf {BVAR}_\mathfrak {B}\), and \(\textsf {TVAR}_\mathfrak {B}\) denote, respectively, the sets of all predicate symbols, all (bound) variables, and all tense variables occurring on \(\mathfrak {B}\). We define \(\mathcal {M}_\mathfrak {B}\) as follows:

- \(\mathcal {T}_\mathfrak {B}\) is the set of all equivalence classes of \(\approx _\mathfrak {B}\) over \(\textsf {NOM}_\mathfrak {B}\);
- For any \(\varvec{t}_1,\varvec{t}_2 \in \mathcal {T}_\mathfrak {B}\), \(\varvec{t}_1\prec _\mathfrak {B}\varvec{t}_2\) if and only if there exist \(\varvec{j}_1,\varvec{j}_2 \in \textsf {NOM}_\mathfrak {B}\) such that \(\varvec{j}_1 \in \varvec{t}_1, \varvec{j}_2\in \varvec{t}_2\) and \(@_{\varvec{j}_1}\textsf {F}\varvec{j}_2 \in \mathfrak {B}\);
- \(\mathcal {D}_\mathfrak {B}\) is the set of all equivalence classes of \(\sim _\mathfrak {B}\) over \(\textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\);
- For any \(\varvec{j} \in \textsf {NOM}_\mathfrak {B}\), \(\mathcal {I}_\mathfrak {B}(\varvec{j}) = \varvec{t}\), for \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) such that \(\varvec{j} \in \varvec{t}\);
- For any \(i \in \textsf {CONS}_\mathfrak {B}\), \(\mathcal {I}_\mathfrak {B}(i) = o\), for \(o \in \mathcal {D}_\mathfrak {B}\) such that \(i \in o\);
- For any \(n \in \mathbb {N}^+\), n-ary predicate symbol \(P \in \textsf {PRED}_\mathfrak {B}\), and \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\), \(\mathcal {I}_\mathfrak {B}(P,\varvec{t}) = \{\langle o_1,\ldots ,o_n\rangle \in (\mathcal {D}_{\mathfrak {B}})^n\mid @_{\varvec{j}} P(b_1,\ldots ,b_n) \in \mathfrak {B}\text { for some } \varvec{j} \in \varvec{t} \text { and some } b_1\in o_1,\ldots ,b_n\in o_n\}\).
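The quotient construction above can be sketched in Python with a union-find structure. The branch encoding (tuples such as `("eq", b1, b2)` for \(b_1=b_2\) and `("at", j, body)` for an @-formula) and the function names are hypothetical, and the sketch reads only the formulas relevant to \(\mathcal {T}_\mathfrak {B}\), \(\prec _\mathfrak {B}\), \(\mathcal {D}_\mathfrak {B}\), and the interpretation of predicates. On an open, fully expanded branch, Proposition 5 already makes \(\sim _\mathfrak {B}\) and \(\approx _\mathfrak {B}\) equivalence relations, so computing their equivalence closure, as union-find does, yields the same classes.

```python
from typing import Dict, Hashable, Set


class UnionFind:
    """Disjoint sets with path compression."""

    def __init__(self) -> None:
        self.parent: Dict[Hashable, Hashable] = {}

    def find(self, x: Hashable) -> Hashable:
        self.parent.setdefault(x, x)  # registers x on first use
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        while self.parent[x] != root:  # compress the path to the root
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, a: Hashable, b: Hashable) -> None:
        self.parent[self.find(a)] = self.find(b)

    def classes(self) -> Dict[Hashable, frozenset]:
        """Map every element to its equivalence class."""
        groups: Dict[Hashable, Set[Hashable]] = {}
        for x in list(self.parent):
            groups.setdefault(self.find(x), set()).add(x)
        return {x: frozenset(groups[self.find(x)]) for x in self.parent}


def build_branch_model(branch):
    """Return (T_B, prec_B, D_B, I_B) read off a list of branch formulas."""
    terms, noms = UnionFind(), UnionFind()
    for f in branch:
        if f[0] == "eq":                                   # b1 = b2
            terms.union(f[1], f[2])
        elif f[0] == "at":
            noms.find(f[1])                                # register the nominal
            body = f[2]
            if body[0] == "nom":                           # @_{j1} j2
                noms.union(f[1], body[1])
            elif body[0] == "F" and body[1][0] == "nom":   # @_{j1} F j2
                noms.find(body[1][1])
            elif body[0] == "pred":                        # @_j P(b1, ..., bn)
                for b in body[2]:
                    terms.find(b)
    t_cls, d_cls = noms.classes(), terms.classes()
    prec = {(t_cls[f[1]], t_cls[f[2][1][1]]) for f in branch
            if f[0] == "at" and f[2][0] == "F" and f[2][1][0] == "nom"}
    interp: Dict = {}
    for f in branch:
        if f[0] == "at" and f[2][0] == "pred":
            _, j, (_, pred, args) = f
            interp.setdefault((pred, t_cls[j]), set()).add(tuple(d_cls[b] for b in args))
    return set(t_cls.values()), prec, set(d_cls.values()), interp


branch = [
    ("at", "j1", ("nom", "j2")),           # @_{j1} j2
    ("at", "j1", ("F", ("nom", "j3"))),    # @_{j1} F j3
    ("eq", "a", "b"),                      # a = b
    ("at", "j2", ("pred", "P", ("a",))),   # @_{j2} P(a)
]
T, prec, D, I = build_branch_model(branch)
print(len(T), len(D))  # 2 1
```

Each class is represented by the frozenset of its members, so the resulting relation and interpretation do not depend on which representative of a class occurs in a formula.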

Let \(\varvec{j}_0\) be an arbitrarily chosen element of \(\mathcal {T}_\mathfrak {B}\) and let \(o_0\) be an arbitrarily chosen element of \(\mathcal {D}_\mathfrak {B}\). Since \(\mathfrak {B}\) is an open branch of a tableau with \(\lnot @_{\varvec{j}}\varphi \) at the root, \(\varvec{j} \in \textsf {NOM}_\mathfrak {B}\); hence \(\mathcal {T}_\mathfrak {B}\) is non-empty and such a \(\varvec{j}_0\) can be picked. Moreover, thanks to the rule \((\textsf {NED})\), \(\mathcal {D}_\mathfrak {B}\) is also non-empty, which secures the existence of \(o_0\). By the branch assignment \(\mathcal {v}_\mathfrak {B}\) we understand the function \(\mathcal {v}_\mathfrak {B}: \textsf {FVAR}_\mathfrak {B}\cup \textsf {BVAR}_\mathfrak {B}\cup \textsf {TVAR}_\mathfrak {B}\longrightarrow \mathcal {D}_\mathfrak {B}\cup \mathcal {T}_\mathfrak {B}\) defined as follows:

- For any \(x \in \textsf {BVAR}_\mathfrak {B}\), \(\mathcal {v}_\mathfrak {B}(x) = o_0\);
- For any \(b \in \textsf {FVAR}_\mathfrak {B}\), \(\mathcal {v}_\mathfrak {B}(b) = o \in \mathcal {D}_\mathfrak {B}\) if and only if \(b \in o\);
- For any \(\varvec{x} \in \textsf {TVAR}_\mathfrak {B}\), \(\mathcal {v}_\mathfrak {B}(\varvec{x}) = \varvec{j}_0\).

Note that it is not possible that \(b_1=b_2, b_2\ne b_1 \in \mathfrak {B}\) for some \(b_1,b_2\in \textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\). Suppose otherwise. Then a single application of \((\textsf {RR})\) to this pair of formulas would yield \(b_2\ne b_2 \in \mathfrak {B}\), which, together with \(b_2=b_2 \in \mathfrak {B}\) (thanks to \((\textsf {ref})\) and the expandedness of \(\mathfrak {B}\)), would close \(\mathfrak {B}\). Consequently, for any \(o \in \mathcal {D}_\mathfrak {B}\), any \(b_1,b_2 \in o\), and any \(\varphi \), \(\varphi [b_1] \in \mathfrak {B}\) if and only if \(\varphi [b_1/\!/b_2] \in \mathfrak {B}\). Moreover, there cannot exist \(\varvec{j}_1, \varvec{j}_2 \in \textsf {NOM}_\mathfrak {B}\) such that \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_2\) and \(\lnot @_{\varvec{j}_1} \varvec{j}_2 \in \mathfrak {B}\). Indeed, if \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_2\), then \(@_{\varvec{j}_1}\varvec{j}_2 \in \mathfrak {B}\) (by the argument from the symmetry proof of \(\approx _\mathfrak {B}\)), and so the branch would immediately close. It is also impossible that there exist \(\varvec{j}_1,\varvec{j}_2,\varvec{j}_3 \in \textsf {NOM}_\mathfrak {B}\) such that \(\varvec{j}_2 \approx _\mathfrak {B}\varvec{j}_3\) and \(@_{\varvec{j}_1} \textsf {F}\varvec{j}_2, \lnot @_{\varvec{j}_1} \textsf {F}\varvec{j}_3 \in \mathfrak {B}\). If this were the case, then \(@_{\varvec{j}_2} \varvec{j}_3\) would have to be present on \(\mathfrak {B}\). Since \(\mathfrak {B}\) is fully expanded, \((\textsf {bridge})\) would have been applied to \(@_{\varvec{j}_2}\varvec{j}_3,@_{\varvec{j}_1} \textsf {F}\varvec{j}_2 \in \mathfrak {B}\), resulting in \(@_{\varvec{j}_1}\textsf {F}\varvec{j}_3 \in \mathfrak {B}\) and closing \(\mathfrak {B}\).
Finally, by the definition of \(\mathcal {T}_\mathfrak {B}\) and \(\mathcal {D}_\mathfrak {B}\), \(\mathcal {v}_\mathfrak {B}\) is defined on the whole domain. Thus, \(\mathcal {M}_\mathfrak {B}\) is a well-defined model and \(\mathcal {v}_\mathfrak {B}\) is a well-defined assignment.
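The construction of \(\mathcal {T}_\mathfrak {B}\) and \(\mathcal {D}_\mathfrak {B}\) as equivalence classes can be sketched in Python. This is a minimal illustration under our own assumptions, not the authors' procedure: a branch is represented by hypothetical lists of identity facts (pairs \(b_1,b_2\) with \(b_1=b_2 \in \mathfrak {B}\), and pairs \(\varvec{j}_1,\varvec{j}_2\) with \(@_{\varvec{j}_1}\varvec{j}_2 \in \mathfrak {B}\)) and of negated identities. A union-find structure computes the classes, and `consistent` mirrors the observations above that no negated identity can relate two members of one class. On a fully expanded open branch the relations are already equivalences, so the closure computed here adds nothing new; the mapping of state variables to the class of \(\varvec{j}_0\) is our reading of the last clause of the assignment.

```python
from typing import Dict, FrozenSet, Hashable, Iterable, Tuple


class UnionFind:
    """Disjoint-set forest used to compute equivalence classes."""

    def __init__(self) -> None:
        self.parent: Dict[Hashable, Hashable] = {}

    def find(self, x: Hashable) -> Hashable:
        self.parent.setdefault(x, x)
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        while x != root:  # path compression
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, x: Hashable, y: Hashable) -> None:
        self.parent[self.find(x)] = self.find(y)


def equivalence_classes(
    symbols: Iterable[Hashable], identities: Iterable[Tuple[Hashable, Hashable]]
) -> Dict[Hashable, FrozenSet[Hashable]]:
    """Map every symbol to its class under the closure of the identity facts."""
    uf = UnionFind()
    for s in symbols:
        uf.find(s)
    for a, b in identities:
        uf.union(a, b)
    groups: Dict[Hashable, set] = {}
    for s in list(uf.parent):
        groups.setdefault(uf.find(s), set()).add(s)
    return {s: frozenset(groups[uf.find(s)]) for s in uf.parent}


def consistent(classes: Dict[Hashable, FrozenSet[Hashable]],
               negated: Iterable[Tuple[Hashable, Hashable]]) -> bool:
    """True iff no negated identity relates two members of the same class."""
    return all(classes[a] != classes[b] for a, b in negated)


# Hypothetical branch data: b1 = b2 facts for terms, @_{j1} j2 facts for nominals.
D = equivalence_classes(["a", "b", "c"], [("a", "b")])
T = equivalence_classes(["j0", "j1", "j2"], [("j1", "j2")])
v_term = dict(D)          # v_B(b) is the class containing b
v_state_var = T["j0"]     # our reading: state variables go to the class of j0
print(consistent(D, [("a", "c")]), consistent(T, [("j2", "j1")]))  # True False
```

A negated identity inside one class, as in the second call, corresponds to a branch that would already have been closed.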

Let \(\textsf {FOR}_\mathfrak {B}\) be the set of all formulas \(\psi \) such that \(@_{\varvec{j}}\psi \in \mathfrak {B}\) for some \(\varvec{j} \in \textsf {NOM}_\mathfrak {B}\). Below we make an observation that will be of use in the remainder of the section.

Fact 1

Let \(b_1,b_2\in \textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\) be such that \(b_1\sim _\mathfrak {B}b_2\), let \(\varvec{j},\varvec{j}_1,\varvec{j}_2\in \textsf {NOM}_\mathfrak {B}\) be such that \(\varvec{j}_1 \approx _\mathfrak {B}\varvec{j}_2\), and let \(\psi \in \textsf {FOR}_\mathfrak {B}\). Then:

1. \(@_{\varvec{j}}\psi \in \mathfrak {B}\) if and only if \(@_{\varvec{j}}\psi [b_1/\!/b_2] \in \mathfrak {B}\);

2. \(@_{\varvec{j}_1}\psi \in \mathfrak {B}\) if and only if \(@_{\varvec{j}_2}\psi \in \mathfrak {B}\).

The next lemma is the pillar of the completeness theorem that concludes this section.

Lemma 6

Let \(\mathcal {M}_\mathfrak {B}\) and \(\mathcal {v}_\mathfrak {B}\) be defined as above. Then for any \(\psi \in \textsf {FOR}_\mathfrak {B}\), \(\varvec{j}\in \textsf {NOM}_\mathfrak {B}\), and \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) such that \(\varvec{j} \in \varvec{t}\):

1. if \(@_{\varvec{j}}\psi \in \mathfrak {B}\), then \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \psi \);

2. if \(\lnot @_{\varvec{j}}\psi \in \mathfrak {B}\), then \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\not \models \psi \).

Proof

We prove the lemma by induction on the complexity of \(\psi \), skipping the Boolean and quantifier cases, which are well known. We begin with the first implication.

\(\psi :=P(b_1,\ldots ,b_n)\) Assume that \(@_{\varvec{j}}P(b_1,\ldots ,b_n) \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\) and \(o_1, \ldots , o_n \in \mathcal {D}_\mathfrak {B}\) are such that \(b_1 \in o_1, \ldots , b_n \in o_n\). By the definition of \(\mathcal {M}_\mathfrak {B}\), \(\langle o_1,\ldots ,o_n\rangle \in {\mathcal {I}_\mathfrak {B}}(P,\varvec{t})\), and so, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models P(b_1,\ldots ,b_n)\).
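How \({\mathcal {I}_\mathfrak {B}}\) is read off the branch can be illustrated with a small Python sketch. We assume, as the atomic case suggests, that \(\langle o_1,\ldots ,o_n\rangle \in {\mathcal {I}_\mathfrak {B}}(P,\varvec{t})\) exactly when some \(@_{\varvec{j}}P(b_1,\ldots ,b_n) \in \mathfrak {B}\) has \(\varvec{j}\in \varvec{t}\) and \(b_i \in o_i\). The maps `state_of` and `obj_of` and the class labels are hypothetical stand-ins for the canonical classes. Because atoms are lifted to classes, other representatives of the same classes give the same tuple, in line with Fact 1.

```python
from typing import Dict, Iterable, Set, Tuple

# An atomic sat-formula @_j P(b_1, ..., b_n), encoded as (j, P, (b_1, ..., b_n)).
Atom = Tuple[str, str, Tuple[str, ...]]
Interp = Dict[Tuple[str, str], Set[Tuple[str, ...]]]


def interpretation(atoms: Iterable[Atom], state_of: Dict[str, str],
                   obj_of: Dict[str, str]) -> Interp:
    """Lift every atom on the branch to the classes of its nominal and terms."""
    interp: Interp = {}
    for j, pred, args in atoms:
        key = (pred, state_of[j])
        interp.setdefault(key, set()).add(tuple(obj_of[b] for b in args))
    return interp


def holds_atom(interp: Interp, pred: str, t: str, objs: Tuple[str, ...]) -> bool:
    """M_B, t |= P(b_1, ..., b_n) where each b_i denotes objs[i]."""
    return tuple(objs) in interp.get((pred, t), set())


# Hypothetical classes: j0 and j1 name the state T0; a and b denote the object O0.
state_of = {"j0": "T0", "j1": "T0", "j2": "T1"}
obj_of = {"a": "O0", "b": "O0", "c": "O1"}
I = interpretation([("j0", "P", ("a", "c"))], state_of, obj_of)
print(holds_atom(I, "P", state_of["j1"], (obj_of["b"], obj_of["c"])))  # True
print(holds_atom(I, "P", "T1", ("O0", "O1")))                          # False
```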

\(\psi := b_1\!=\!b_2\) Assume that \(@_{\varvec{j}}b_1=b_2 \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\) and \(o_1, o_2\in \mathcal {D}_\mathfrak {B}\) are such that \(b_1 \in o_1, b_2 \in o_2\). Since \(\mathfrak {B}\) is fully expanded, \((\textsf {eq})\) must have been applied to \(@_{\varvec{j}} b_1=b_2\), thus yielding \(b_1 = b_2 \in \mathfrak {B}\). By the definition of \(\sim _\mathfrak {B}\), \(o_1 = o_2\), and so, by the definition of \(\mathcal {M}_\mathfrak {B}\) and \(\mathcal {v}_\mathfrak {B}\), \({\mathcal {I}_\mathfrak {B}}_{\mathcal {v}_\mathfrak {B}}(b_1) = {\mathcal {I}_\mathfrak {B}}_{\mathcal {v}_\mathfrak {B}}(b_2)\). Thus, by the satisfaction condition for \(=\)-formulas, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models b_1 = b_2\).

\(\psi := \varvec{j}'\) Assume that \(@_{\varvec{j}}\varvec{j}' \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t}, \varvec{t}' \in \mathcal {T}_\mathfrak {B}\) are such that \(\varvec{j} \in \varvec{t}\) and \(\varvec{j}' \in \varvec{t}'\). Since \(@_{\varvec{j}}\varvec{j}' \in \mathfrak {B}\), the definition of \(\approx _\mathfrak {B}\) gives \(\varvec{j} \approx _\mathfrak {B}\varvec{j}'\), and so \(\varvec{t} = \varvec{t}'\). Hence, by the definition of \(\mathcal {M}_\mathfrak {B}\) and the satisfaction condition for nominals, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \varvec{j}'\).

\(\psi := (\lambda x\chi )(b)\) Assume that \(@_{\varvec{j}}(\lambda x\chi )(b)\in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\) and \(o\in \mathcal {D}_\mathfrak {B}\) is such that \(b \in o\). Since \(\mathfrak {B}\) is fully expanded, the rule \((\lambda )\) must have been applied to \(@_{\varvec{j}}\psi \), yielding \(@_{\varvec{j}}\chi [x/b] \in \mathfrak {B}\). By the inductive hypothesis, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \chi [x/b]\). By the Substitution Lemma, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}[x\mapsto o] \models \chi \), which, together with the fact that \({\mathcal {I}_\mathfrak {B}}_{\mathcal {v}_\mathfrak {B}}(b) = o\), gives \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models (\lambda x \chi )(b)\).

\(\psi := (\lambda x\chi )(\imath y \theta )\) Assume that \(@_{\varvec{j}}(\lambda x\chi )(\imath y \theta )\in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\). Due to the expandedness of \(\mathfrak {B}\), the rule \((\imath _1^o)\) must have been applied to \(@_{\varvec{j}}\psi \), yielding \(@_{\varvec{j}}\chi [x/a], @_{\varvec{j}}\theta [y/a] \in \mathfrak {B}\) for some \(a\). By the inductive hypothesis, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \chi [x/a]\) and \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \theta [y/a]\). Let \(o \in \mathcal {D}_\mathfrak {B}\) be such that \(a \in o\). By the Substitution Lemma, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}[x\mapsto o] \models \chi \) and \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}[y\mapsto o] \models \theta \). Now, let \(b\in \textsf {FVAR}_\mathfrak {B}\cup \textsf {CONS}_\mathfrak {B}\) be such that \(@_{\varvec{j}}\theta [y/b] \in \mathfrak {B}\). Then \((\imath _2)\) was applied to \(@_{\varvec{j}}\theta [y/a]\) and \(@_{\varvec{j}}\theta [y/b]\), yielding \(@_{\varvec{j}}a=b \in \mathfrak {B}\), and so, by the inductive hypothesis, \({\mathcal {I}_\mathfrak {B}}_{\mathcal {v}_\mathfrak {B}}(b)={\mathcal {I}_\mathfrak {B}}_{\mathcal {v}_\mathfrak {B}}(a)=o\). Since \(b\) was arbitrary, we get \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models (\lambda x \chi )(\imath y \theta )\).
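The Russellian reading of \((\lambda x\chi )(\imath y\theta )\) that this case relies on can be checked on a finite domain. The following Python sketch is our own illustration, not part of the calculus: at a fixed state, the formula is taken to hold iff exactly one object satisfies \(\theta \) and that object satisfies \(\chi \). The predicates are passed as hypothetical Python callables rather than parsed formulas.

```python
from typing import Callable, Iterable, TypeVar

Obj = TypeVar("Obj")


def holds_lambda_dd(domain: Iterable[Obj], chi: Callable[[Obj], bool],
                    theta: Callable[[Obj], bool]) -> bool:
    """(lambda x chi)(iota y theta) at a fixed state, read in Russell's style."""
    witnesses = [o for o in domain if theta(o)]  # objects o with v[y -> o] |= theta
    return len(witnesses) == 1 and chi(witnesses[0])  # uniqueness, then chi(o)


domain = ["o1", "o2", "o3"]
print(holds_lambda_dd(domain, lambda o: o != "o3", lambda o: o == "o2"))  # True
print(holds_lambda_dd(domain, lambda o: True, lambda o: o != "o1"))      # False: two witnesses
print(holds_lambda_dd(domain, lambda o: True, lambda o: False))          # False: no witness
```

On this reading an improper description does not leave the formula undefined; the whole formula is simply false.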

\(\psi := \textsf {F}\chi \) Assume that \(@_{\varvec{j}}\textsf {F}\chi \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\). By the expandedness of \(\mathfrak {B}\), \((\textsf {F})\) was applied to \(@_{\varvec{j}}\textsf {F}\chi \), yielding \(@_{\varvec{j}}\textsf {F}\varvec{j}', @_{\varvec{j}'}\chi \in \mathfrak {B}\) for some nominal \(\varvec{j}'\). Let \(\varvec{t}' \in \mathcal {T}_\mathfrak {B}\) be such that \(\varvec{j}' \in \varvec{t}'\). By the inductive hypothesis we obtain \(\mathcal {M}_\mathfrak {B}, \varvec{t}', \mathcal {v}_\mathfrak {B}\models \chi \). By the construction of \(\prec _\mathfrak {B}\) and the fact that \(@_{\varvec{j}}\textsf {F}\varvec{j}' \in \mathfrak {B}\), we get \(\varvec{t}\prec _\mathfrak {B}\varvec{t}'\). Thus, by the satisfaction condition for \(\textsf {F}\), we arrive at \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \textsf {F}\chi \).
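The lifting of \(\textsf {F}\)-facts to \(\prec _\mathfrak {B}\) can be sketched in Python. We assume here that \(\varvec{t}\prec _\mathfrak {B}\varvec{t}'\) holds iff \(@_{\varvec{j}}\textsf {F}\varvec{j}' \in \mathfrak {B}\) for some \(\varvec{j}\in \varvec{t}\) and \(\varvec{j}'\in \varvec{t}'\); `state_of` is a hypothetical map from nominals to their classes. Working at the level of classes is what makes the relation independent of the chosen nominals, which the \((\textsf {bridge})\) argument above secures on the branch itself.

```python
from typing import Callable, Dict, Iterable, Set, Tuple


def precedence(f_facts: Iterable[Tuple[str, str]],
               state_of: Dict[str, str]) -> Set[Tuple[str, str]]:
    """Each fact (j, k) encodes @_j F k; lift it to the classes of j and k."""
    return {(state_of[j], state_of[k]) for j, k in f_facts}


def holds_F(prec: Set[Tuple[str, str]], t: str, chi: Callable[[str], bool]) -> bool:
    """M, t |= F chi iff chi holds at some t' with t < t'."""
    return any(chi(u) for (s, u) in prec if s == t)


state_of = {"j0": "T0", "j1": "T1", "j2": "T1"}  # j1 and j2 name the same state
prec = precedence([("j0", "j1")], state_of)
print(prec)                                                # {('T0', 'T1')}
print(holds_F(prec, "T0", lambda u: u == state_of["j2"]))  # True: F j2 holds at T0
print(holds_F(prec, "T1", lambda u: True))                 # False: T1 has no successor
```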

\(\psi := \textsf {P}\chi \) We proceed similarly to the previous case.

\(\psi := \imath \varvec{x}\chi \) Assume that \(@_{\varvec{j}} \imath \varvec{x}\chi \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\). Since \(\mathfrak {B}\) is fully expanded, \((\imath _1^t)\) must have been applied to \(@_{\varvec{j}}\imath \varvec{x}\chi \), which resulted in \(@_{\varvec{j}} \chi [\varvec{x}/\varvec{j}] \in \mathfrak {B}\). By the inductive hypothesis, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \chi [\varvec{x}/\varvec{j}]\). Now, let \(\varvec{j}' \in \textsf {NOM}_\mathfrak {B}\) be such that \(@_{\varvec{j}'}\chi [\varvec{x}/\varvec{j}'] \in \mathfrak {B}\), and let \(\varvec{t}'\in \mathcal {T}_\mathfrak {B}\) be such that \(\varvec{j}' \in \varvec{t}'\). Then, by the expandedness of \(\mathfrak {B}\), \((\imath _2^t)\) was applied to \(@_{\varvec{j}} \imath \varvec{x}\chi \) and \(@_{\varvec{j}'} \chi [\varvec{x}/\varvec{j}']\), resulting in \(@_{\varvec{j}}\varvec{j}' \in \mathfrak {B}\). By the definitions of \(\approx _\mathfrak {B}\) and \(\mathcal {T}_\mathfrak {B}\), \(\varvec{t} = \varvec{t}'\). Since \(\varvec{j}'\) (and therefore \(\varvec{t}'\)) was arbitrary, the satisfaction condition for \(\imath \varvec{x}\chi \) is met, and so \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \imath \varvec{x} \chi \).
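As this case uses it, \(\imath \varvec{x}\chi \) holds at \(\varvec{t}\) iff \(\varvec{t}\) is the one and only state at which \(\chi \) holds when \(\varvec{x}\) is assigned that very state. A minimal Python sketch over a finite set of states, under that reading; `chi(s, x)` is a hypothetical callable meaning "\(\chi \) holds at state `s` when \(\varvec{x}\) is assigned state `x`", and the valuation `p_states` is invented for the example.

```python
from typing import Callable, Iterable, List


def tense_dd_witnesses(states: Iterable[int],
                       chi: Callable[[int, int], bool]) -> List[int]:
    """States s at which chi holds when x is assigned s itself."""
    return [s for s in states if chi(s, s)]


def holds_tense_dd(states: Iterable[int], t: int,
                   chi: Callable[[int, int], bool]) -> bool:
    """M, t |= iota x chi iff t is the one and only witness."""
    return tense_dd_witnesses(states, chi) == [t]


p_states = {2}  # hypothetical valuation: p holds only at state 2


def chi(s: int, x: int) -> bool:
    # chi := p; the bound variable x does not occur in it.
    return s in p_states


print([t for t in range(4) if holds_tense_dd(range(4), t, chi)])  # [2]
p_states.add(3)  # p now holds at two states, so no state is "the" p-state
print([t for t in range(4) if holds_tense_dd(range(4), t, chi)])  # []
```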

\(\psi := @_{\varvec{j}'} \chi \) Assume that \(@_{\varvec{j}} @_{\varvec{j}'} \chi \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t},\varvec{t}' \in \mathcal {T}_\mathfrak {B}\) are such that \(\varvec{j} \in \varvec{t}\) and \(\varvec{j}' \in \varvec{t}'\). Since \(\mathfrak {B}\) is fully expanded, \((\textsf {gl})\) must have been applied to \(@_{\varvec{j}}@_{\varvec{j}'} \chi \), which resulted in \(@_{\varvec{j}'} \chi \in \mathfrak {B}\). By the inductive hypothesis, \(\mathcal {M}_\mathfrak {B}, \varvec{t}', \mathcal {v}_\mathfrak {B}\models \chi \). Since \(\varvec{j}'\) denotes \(\varvec{t}'\) in \(\mathcal {M}_\mathfrak {B}\), the satisfaction condition for @-formulas gives \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models @_{\varvec{j}'} \chi \).

\(\psi := @_{\imath \varvec{x}\chi } \theta \) Assume that \(@_{\varvec{j}} @_{\imath \varvec{x}\chi } \theta \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\). Since \(\mathfrak {B}\) is fully expanded, \((@\imath ^t)\) must have been applied to \(@_{\varvec{j}} @_{\imath \varvec{x}\chi } \theta \), which resulted in \(@_{\varvec{i}} \imath \varvec{x}\chi , @_{\varvec{i}}\theta \in \mathfrak {B}\) for some \(\varvec{i} \in \textsf {NOM}_\mathfrak {B}\). Let \(\varvec{t}' \in \mathcal {T}_\mathfrak {B}\) be such that \(\varvec{i} \in \varvec{t}'\). By the inductive hypothesis, \(\mathcal {M}_\mathfrak {B}, \varvec{t}', \mathcal {v}_\mathfrak {B}\models \imath \varvec{x}\chi \) and \(\mathcal {M}_\mathfrak {B}, \varvec{t}', \mathcal {v}_\mathfrak {B}\models \theta \). By the satisfaction condition for \(@_{\imath \varvec{x}\chi }\)-formulas we obtain \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models @_{\imath \varvec{x}\chi } \theta \).
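In this case \(@_{\imath \varvec{x}\chi }\theta \) is treated as true iff the state described by \(\imath \varvec{x}\chi \) exists, is unique, and satisfies \(\theta \); on this reading the value does not depend on the current state \(\varvec{t}\). A hedged Python sketch under that reading, with hypothetical callables (`p_here` and the `theta` lambdas) standing in for \(\chi \) and \(\theta \):

```python
from typing import Callable, Iterable


def holds_at_tense_dd(states: Iterable[int], chi: Callable[[int, int], bool],
                      theta: Callable[[int], bool]) -> bool:
    """@_{iota x chi} theta: evaluate theta at the unique chi-state, if any."""
    witnesses = [s for s in states if chi(s, s)]  # states s with chi under x -> s
    return len(witnesses) == 1 and theta(witnesses[0])


def p_here(s: int, x: int) -> bool:
    # Hypothetical chi: "p holds here", with p true only at state 1.
    return s == 1


print(holds_at_tense_dd(range(3), p_here, lambda s: s > 0))   # True
print(holds_at_tense_dd(range(3), p_here, lambda s: s == 2))  # False
```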

\(\psi := \downarrow _{\varvec{x}}\! \chi \) Assume that \(@_{\varvec{j}}\!\downarrow _{\varvec{x}}\! \chi \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t}\in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\). Since \(\mathfrak {B}\) is fully expanded, \((\downarrow )\) was applied to \(@_{\varvec{j}}\!\downarrow _{\varvec{x}}\! \chi \), which resulted in \(@_{\varvec{j}} \chi [\varvec{x}/\varvec{j}] \in \mathfrak {B}\). By the inductive hypothesis, \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \chi [\varvec{x}/\varvec{j}]\). By the Substitution Lemma we obtain \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}[\varvec{x}\mapsto \varvec{t}] \models \chi \). By the satisfaction condition for \(\downarrow \)-formulas we obtain \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\models \downarrow _{\varvec{x}}\! \chi \).
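The binder case amounts to evaluating \(\chi \) at \(\varvec{t}\) with \(\varvec{x}\) assigned to \(\varvec{t}\) itself. As an illustration of our own (not drawn from the calculus), \(\downarrow _{\varvec{x}}\lnot \textsf {F}\varvec{x}\) holds at a state exactly when that state does not precede itself, so it expresses irreflexivity locally. A minimal Python sketch over a hypothetical finite precedence relation:

```python
from typing import Callable, Set, Tuple

Prec = Set[Tuple[int, int]]


def holds_F(prec: Prec, t: int, phi: Callable[[int], bool]) -> bool:
    """M, t |= F phi iff phi holds at some successor of t."""
    return any(phi(u) for (s, u) in prec if s == t)


def holds_down(t: int, chi: Callable[[int, int], bool]) -> bool:
    """M, t |= down_x chi: evaluate chi at t with x assigned to t."""
    return chi(t, t)


def not_self_preceding(prec: Prec, t: int) -> bool:
    # down_x not F x, where the state variable x is true only at its value.
    return holds_down(t, lambda s, x: not holds_F(prec, s, lambda u: u == x))


prec = {(0, 1), (1, 1)}  # hypothetical: 0 precedes 1, and 1 precedes itself
print(not_self_preceding(prec, 0), not_self_preceding(prec, 1))  # True False
```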

We now proceed to a proof of the second implication.

\(\psi :=P(b_1,\ldots ,b_n)\) Assume that \(\lnot @_{\varvec{j}}P(b_1,\ldots ,b_n) \in \mathfrak {B}\). Assume, moreover, that \(\varvec{t} \in \mathcal {T}_\mathfrak {B}\) is such that \(\varvec{j} \in \varvec{t}\) and \(o_1, \ldots , o_n\in \mathcal {D}_\mathfrak {B}\) are such that \(b_1 \in o_1, \ldots , b_n \in o_n\). Since \(\mathfrak {B}\) is open, \(@_{\varvec{j}}P(b_1,\ldots ,b_n) \notin \mathfrak {B}\), and by Fact 1 the same holds when \(\varvec{j}\) and \(b_1,\ldots ,b_n\) are replaced by any other members of \(\varvec{t}\) and \(o_1,\ldots ,o_n\), respectively. Hence, by the definition of \(\mathcal {M}_\mathfrak {B}\), \(\langle o_1,\ldots ,o_n\rangle \notin {\mathcal {I}_\mathfrak {B}}(P,\varvec{t})\), and so \(\mathcal {M}_\mathfrak {B}, \varvec{t}, \mathcal {v}_\mathfrak {B}\not \models P(b_1,\ldots ,b_n)\).