Abstract

I examine the concept of granting for the sake of the argument in the context of explanatory reasoning. I discuss a situation where S wishes to argue for H1 as a true explanation of evidence E and also decides to grant, for the sake of the argument, that H2 is an explanation of E. S must then argue that H1 and H2 jointly explain E. When H1 and H2 compete for the force of E, it is usually a bad idea for S to grant H2 for the sake of the argument. If H1 and H2 are not positively dependent otherwise, there is a key argumentative move that he will have to make anyway in order to retain a place at the table for H1 at all—namely, arguing that the probability of E given H2 alone is low. Some philosophers of religion have suggested that S can grant that science has successfully provided natural explanations for entities previously ascribed to God, while not admitting that theism has lost any probability. This move involves saying that the scientific explanations themselves are dependent on God. I argue that this “granting” move is not an obvious success and that the theist who grants these scientific successes may have to grant that theism has lost probability.

Notes

I owe this point to Timothy McGrew.

In the field of biblical studies, there is sometimes a failure to recognize that the theory that an author was introducing a narrative detail for symbolic reasons tends to compete with the theory that the author was narrating the detail because he believed it to be historically true. This case is much like that of John and the 5k in the last section. This leads to a concomitant failure to recognize the historical value of seemingly artless narration of details that have no apparent symbolic meaning (McGrew, 2021).

I do not mean to attribute to these philosophers any particular personal views (which they do not state) about the natural processes in question or their sufficiency to explain the evidence. The burden of their argument is that there are good strategies for the theist to adopt to rebut SEAGA even if these processes are the true explanations of E. This means that these recommended strategies should work for a theist who is merely granting these things for the sake of the argument.

I owe this objection to a reviewer.

In an earlier paper, Glass (2012, pp. 92–93) made the quite different suggestion that the probability that complex life forms would arise on earth given natural processes and no design is low, due to the improbability of the origin of life and the origin of the eukaryotic cell given natural processes alone. Apparently at that time he considered this to be a good theistic strategy.

Interestingly, Richard Swinburne makes two outright errors concerning this point. First, he claims that a fine-tuning argument can have significant force for theism only if the features included in it lead with “considerable probability” to the existence of human and animal bodies. This is incorrect, since in principle a pre-condition argument could have significant force for theism even if this were not the case. Second, he implies that we have independent scientific evidence that the conditions at the beginning of the universe really did predict the arising of animal and human bodies with a significantly high probability (Swinburne, 2004, p. 189). This is also not true. Swinburne’s own listed specifics of constant values and the like that must be “just so” for the universe to be life-permitting do not come close to being sufficient conditions. I note these surprising slips by Swinburne because it seems plausible that they arise from the idea that we should abandon any separate BDA but that we can pack all the previous force that was thought to come from BDAs into the FTA instead, thus avoiding an admission of explaining away.

A reviewer points out that merely necessary conditions could be a part of a causal explanation according to the “difference making” theory advocated by Woodward (2003). In that sense the friend’s conception is part of the causal explanation for his being late, because he could not be late if he didn’t exist. But when the contrastive implication is taken into account (his being late as opposed to his existing and being on time), then the merely necessary condition of his existence does not make a difference to that contrastive outcome. Similarly, even if God’s existence is a difference maker for the existence of complex life as opposed to its non-existence, just in the sense that it is needed to bring about certain pre-conditions, it is arguably not a difference maker for the actual existence of complex life as opposed to a state of affairs in which, even though a life-permitting universe exists, no complex life exists—a very plausible outcome given that the pre-conditions in question are so far from being sufficient.

My thanks to Timothy McGrew for generating the unique distribution in which all of these prior conditions are jointly satisfied, as a proof that the conditions assumed are probabilistically consistent.

References

Behe, M. (1996). Darwin’s black box. The Free Press.

Collins, R. (2009). The teleological argument: An exploration of the fine-tuning of the universe. In J. P. Moreland & W. L. Craig (Eds.), The Blackwell companion to natural theology (pp. 202–281). Wiley-Blackwell.

Crupi, V., Tentori, K., & Gonzalez, M. (2007). On Bayesian measures of evidential support: Theoretical and empirical issues. Philosophy of Science, 74, 229–252.

Dawkins, R. (1996). The blind watchmaker: Why the evidence of evolution reveals a universe without design. Norton.

Earman, J. (1992). Bayes or bust: A critical examination of Bayesian confirmation theory. MIT Press.

Fitelson, B. (1998). The plurality of Bayesian measures of confirmation and the problem of measure sensitivity. Philosophy of Science, 66 (Supplement: Proceedings of the 1998 Biennial Meetings of the Philosophy of Science Association, Part I), S362–S378.

Glass, D. (2012). Can evidence for design be explained away? In J. Chandler & V. S. Harrison (Eds.), Probability in the philosophy of religion (pp. 79–102). Oxford University Press.

Glass, D. (2017). Science, God and Ockham’s razor. Philosophical Studies, 174, 1145–1161.

Glass, D. (2021). Competing hypotheses and abductive inference. Annals of Mathematics and Artificial Intelligence, 89, 161–178.

Glass, D., & Schupbach, J. (2017). Hypothesis competition beyond mutual exclusivity. Philosophy of Science, 84, 810–824.

Good, I. J. (1950). Probability and the weighing of evidence. Griffin.

Keynes, J. M. (1921). A treatise on probability. Macmillan.

Lewis, G. F., & Barnes, L. A. (2016). A fortunate universe: Life in a finely tuned cosmos. Cambridge University Press.

McGrew, L. (2014). On not counting the cost: Ad hocness and disconfirmation. Acta Analytica, 29, 491–505.

McGrew, L. (2016). Bayes factors all the way: Toward a new view of coherence and truth. Theoria, 82, 329–350.

McGrew, L. (2021). The eye of the beholder: The gospel of John as historical reportage. DeWard.

McGrew, T. (2003). Confirmation, heuristics, and explanatory reasoning. British Journal for the Philosophy of Science, 54, 553–567.

Schupbach, J. N. (2016). Competing explanations and explaining away arguments. Theology and Science, 16, 256–267.

Swinburne, R. (2004). The existence of God (2nd ed.). Oxford University Press.

Woodward, J. (2003). Making things happen: A theory of causal explanation. Oxford University Press.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix

1.1 Competition Assuming Marginal Independence

Let H1 be “John won a 5k race.”

Let H2 be “John’s statements are motivated by vanity.”

Let E be “John says that he won a 5k race.”

Suppose that the prior probabilities of both H1 and H2 are 0.3, and suppose that H1 and H2 are marginally independent—i.e., independent aside from E. Hence P(H1|H2) = P(H1|~H2) = 0.3 and vice versa.

Suppose further that P(~H1 & ~H2), P(H1 & H2), P(H1 & ~H2), and P(H2 & ~H1) are all greater than zero. These conditions are jointly satisfiable, and the priors of each part of this partition (which will not be used directly in the calculations) are given below (footnote 9):

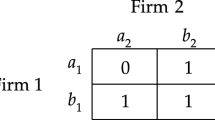
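As a rough check on the priors just described, the four cells of the partition can be computed directly from the stated assumptions: P(H1) = P(H2) = 0.3 and marginal independence, so each joint prior is simply a product. The sketch below uses only those two stated numbers; the dictionary layout and variable names are illustrative choices, not part of the original appendix.

```python
# Priors stated in the text.
p_h1 = 0.3
p_h2 = 0.3

# Marginal independence means each joint prior is the product of the marginals.
joint_priors = {
    "H1 & H2": p_h1 * p_h2,
    "H1 & ~H2": p_h1 * (1 - p_h2),
    "~H1 & H2": (1 - p_h1) * p_h2,
    "~H1 & ~H2": (1 - p_h1) * (1 - p_h2),
}

for cell, p in joint_priors.items():
    print(f"P({cell}) = {p:.2f}")

# The four cells form a partition, so they should sum to 1.
total = sum(joint_priors.values())
print(f"sum = {total:.2f}")
```

All four cells are greater than zero, as required, and the independence condition P(H1|H2) = P(H1|~H2) = 0.3 holds by construction.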

First, suppose a situation in which each of H1 and H2 alone has equal likelihood vis-à-vis E, as follows:

It follows from these assumptions that H1 and H2 compete by the indirect pathway, so either one disconfirms the other modulo E. We can see this as follows:

Since both priors and likelihoods are the same in this distribution, the same numbers are also correct for P(E|H2) and P(E|~H2).

By Bayes’s Theorem,

But if H2 is granted, the posterior is much lower. Since H1 and H2 are marginally independent, the posterior of H1 given E once H2 is granted (by a conditional form of Bayes’s Theorem) is

So H2 disconfirms H1 modulo E (and vice versa). H1 is confirmed in both instances (whether or not H2 is granted) but does not even rise above 0.5 when H2 is granted, when the probability of E is the same given either of the two hypotheses alone.
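The pattern described here (H1 confirmed either way, but to a much lower posterior once H2 is granted) can be illustrated with a minimal numerical sketch. The priors are those stated in the text. The four likelihoods, however, are hypothetical placeholders chosen only so that H1 alone and H2 alone give E the same probability; they are assumptions made for illustration and should not be read as the values used in the original calculation.

```python
# Priors from the text: P(H1) = P(H2) = 0.3, marginally independent.
P_H1 = 0.3
P_H2 = 0.3

# HYPOTHETICAL likelihoods P(E | cell), keyed by (H1 true?, H2 true?).
# These are illustrative assumptions, not values taken from the article.
LIK = {
    (True, True): 0.6,     # P(E | H1 & H2)
    (True, False): 0.5,    # P(E | H1 & ~H2)
    (False, True): 0.5,    # P(E | ~H1 & H2), equal to the line above
    (False, False): 0.01,  # P(E | ~H1 & ~H2)
}


def prior(h1, h2):
    """Joint prior of one cell of the partition under marginal independence."""
    return (P_H1 if h1 else 1 - P_H1) * (P_H2 if h2 else 1 - P_H2)


def posterior_h1(lik, grant_h2=False):
    """Return P(H1 | E), or P(H1 | E & H2) when H2 is granted."""
    h2_values = [True] if grant_h2 else [True, False]
    num = sum(prior(True, h2) * lik[(True, h2)] for h2 in h2_values)
    den = num + sum(prior(False, h2) * lik[(False, h2)] for h2 in h2_values)
    return num / den


not_granted = posterior_h1(LIK)
granted = posterior_h1(LIK, grant_h2=True)
print(f"P(H1 | E)      = {not_granted:.3f}")
print(f"P(H1 | E & H2) = {granted:.3f}")
```

With these placeholder values, both posteriors exceed the prior of 0.3, but the posterior with H2 granted stays below 0.5 and below the posterior without granting H2, which is the qualitative behavior the text describes.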

Suppose then that we present a cogent argument that P(E|H2 & ~H1) is lower than P(E|H1 & ~H2). Suppose that we retain the above assumptions with one exception. Let P(E|H2 & ~H1) = 0.1.

This would be the case, for example, if John is a habitual truth teller so that he is unlikely to say that he won a 5k if he is motivated by vanity alone, when the statement is untrue.

Then we have the following:

Thus, if H2 is granted, we have P(H1|E & H2) ≈ 0.72.

So by arguing that H2 (vanity) all by itself is a much poorer explanation of John’s utterance than the truth of what he says, we allow H1 to be confirmed to a respectable posterior probability well above 0.5 (namely, 0.72), even when H2 is granted.
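The 0.72 figure can be checked with the conditional form of Bayes's Theorem. In the sketch below, P(H1|H2) = 0.3 and P(E|H2 & ~H1) = 0.1 are taken from the text. The value P(E|H1 & H2) = 0.6 is an assumption: it is the round value that reproduces the stated posterior of 0.72 and is not quoted from the article.

```python
# From the text: marginal independence gives P(H1 | H2) = P(H1) = 0.3.
p_h1_given_h2 = 0.3

# From the text: probability of the utterance given vanity alone.
lik_e_given_h2_only = 0.1  # P(E | H2 & ~H1)

# ASSUMPTION: chosen because it reproduces the stated posterior of 0.72.
lik_e_given_both = 0.6  # P(E | H1 & H2)

# Conditional Bayes's Theorem, with H2 held fixed as background.
numerator = lik_e_given_both * p_h1_given_h2
denominator = numerator + lik_e_given_h2_only * (1 - p_h1_given_h2)
posterior_granted = numerator / denominator

print(f"P(H1 | E & H2) = {posterior_granted:.2f}")
```

Under that assumed likelihood the calculation is 0.18 / (0.18 + 0.07) = 0.72, matching the posterior reported above.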

However, this change (arguing that the probability of the utterance given vanity alone is 0.1) is also helpful to the confirmation of H1 if H2 is not granted.

Given the probabilities stipulated,

So

If we do not grant H2 and argue convincingly that John would not say that he won a 5k if it were not true, we have

So arguing for John’s truthfulness is a helpful part of the argument either way.

Since granting H2 disconfirms H1 modulo E in this scenario, and since one needs to argue for a low P(E|H2 & ~H1) anyway, one might as well not grant H2.
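The conclusion can be illustrated with a small sensitivity sweep over P(E|H2 & ~H1). The priors are those of the text. The other three likelihoods are hypothetical placeholders (assumptions, not the article's figures), and the function name `posteriors` is likewise an illustrative choice.

```python
# Priors from the text.
P_H1 = 0.3
P_H2 = 0.3

# HYPOTHETICAL likelihoods, held fixed during the sweep (assumptions).
LIK_BOTH = 0.6       # P(E | H1 & H2)
LIK_H1_ALONE = 0.5   # P(E | H1 & ~H2)
LIK_NEITHER = 0.01   # P(E | ~H1 & ~H2)


def posteriors(lik_h2_alone):
    """Return (P(H1 | E), P(H1 | E & H2)) for a given P(E | H2 & ~H1)."""
    p_both = P_H1 * P_H2
    p_h1_only = P_H1 * (1 - P_H2)
    p_h2_only = (1 - P_H1) * P_H2
    p_neither = (1 - P_H1) * (1 - P_H2)

    # H2 granted: only the two cells in which H2 is true matter.
    granted = (p_both * LIK_BOTH) / (
        p_both * LIK_BOTH + p_h2_only * lik_h2_alone
    )

    # H2 not granted: marginalize over H2.
    num = p_both * LIK_BOTH + p_h1_only * LIK_H1_ALONE
    not_granted = num / (
        num + p_h2_only * lik_h2_alone + p_neither * LIK_NEITHER
    )
    return not_granted, granted


# Sweep from "vanity alone explains E as well as H1 alone" downward.
rows = [(v, *posteriors(v)) for v in (0.5, 0.3, 0.1, 0.05)]
for v, not_granted, granted in rows:
    print(f"P(E|H2&~H1)={v:.2f}  P(H1|E)={not_granted:.3f}  "
          f"P(H1|E&H2)={granted:.3f}")
```

For these illustrative values, lowering P(E|H2 & ~H1) raises the posterior of H1 in both columns, and the column with H2 granted is lower at every setting in the sweep.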

About this article

Cite this article

McGrew, L. Be Careful What You Grant. Philosophia 51, 2657–2679 (2023). https://doi.org/10.1007/s11406-023-00702-4

DOI: https://doi.org/10.1007/s11406-023-00702-4