Abstract

There are many ways in which biases can enter processes of scientific reasoning. One of these is what Ludwik Fleck has called a “harmony of illusions”. In this paper, Fleck’s ideas on the relevance of social mechanisms in epistemic processes and his detailed description of publication processes in science will be used as a starting point to investigate the connection between cognitive processes, social dynamics, and biases in this context.

Despite its usefulness as a first step towards a more detailed analysis, Fleck’s account needs to be updated in order to take the developments of digital communication technologies of the 21st century into account. Taking a closer look at today’s practices of science communication shows that information and communication technologies (ICTs) play a major role here. By presenting a detailed case study concerning the database SCOPUS, the question will be investigated how such ICTs can influence the division of epistemic labour. The result will be that they potentially undermine the epistemic benefits of social dynamics in science communication due to their inherent tendency to reduce the diversity of scientific hypotheses and ideas.

There are many ways in which biases can enter processes of scientific reasoning. Some of them are more relevant than others; some of them are more sensitive than others. Relevant and sensitive with respect to what? This depends on, for example, what is considered to be the aim of science or the degree of preciseness which is required for scientific hypotheses or the adequacy of scientists’ answers to specific questions. A crucial line is usually drawn between the epistemic and the non-epistemic: what affects the former and is of the latter kind is usually regarded with suspicion by philosophers of science who worry that non-epistemic values might distort scientific reasoning processes, i.e. the epistemic endeavour. Unfortunately, things aren’t that simple.

Several philosophers have pointed out that it would be wrong to exclude any kind of non-epistemic value judgement from scientific practices (see e.g. Douglas 2009; Longino 2008). Philip Kitcher, for instance, stresses the point that scientific investigations are purposefully pursued and that these purposes are connected to people asking questions and looking for answers (see Kitcher 2004; 2011). Science should be regarded as a social endeavour all the way down. It is via this route that interests and values can and will quite easily find their way into epistemic processes. Admittedly, we might come across instances of scientific reasoning that are pursued as mere intellectual puzzles, but more often than not this is the exception, not the rule. This insight, however, combined with the general idea mentioned earlier, namely that interests and values have the capacity to distort epistemic results, leaves us with the question of which values to discard and which ones to keep – and why.

In this paper, the admissibility of particular interests, kinds of interests, and values won’t be discussed. However, it will be analysed how interests and values enter the process of scientific reasoning due to the usage of ICTs in science communication. In this context, the latter have become an essential part of the division of epistemic labour, which is often described as contributing to the objectivity of scientific results. Our focus will be this social institution. By referring to a detailed case study concerning the database SCOPUS, the question will be investigated how ICTs can influence this basic epistemic practice.

The argument will be developed as follows: (1) we will take a closer look at the division of epistemic labour as a principle of scientific progress. In this context, Ludwik Fleck’s ideas concerning publishing processes in science will be used as a starting point to explain the mechanisms and their effects on epistemic contents in more detail. (2) Fleck’s account will be transferred to the 21st century. Publishing processes are deeply affected by ICTs nowadays. Some of these technological means exhibit the potential to undermine the diversity of scientific opinions. This general assumption will be substantiated by a concrete case study. The example discussed will concern the database SCOPUS, developed and provided by “Elsevier”, commonly known as one of the largest publishing houses for scientific journals and books.Footnote 1 A brief summary will be given of the intended purposes of this database and how it works. (3) The results of the case study will be critically examined with respect to the division of epistemic labour as a prerequisite for scientific progress. The criteria and processes for ensuring the quality of the data stored will be analysed, because they are closely related to mechanisms used in the scientific community (and beyond) in order to evaluate their output. Here the question of values and value criteria becomes crucial. It will become clear (4) that technological developments such as the database SCOPUS can undermine the positive outcome of the division of epistemic labour in science. This is due to the fact that such ICTs exhibit a tendency to reduce the plurality and diversity of ideas that are relevant to scientific progress.

1 Publishing Processes and the Division of Epistemic Labour

The phrase standing on the shoulders of giants is usually attributed to Isaac Newton. It expresses the inherently social character of science and refers to what philosophers call the division of epistemic labour (or cognitive labour), which plays a significant role in enabling scientific progress (see e.g. Kitcher 1995).

The fact that epistemic achievements in science are not the result of the genius of individual scientists because science is a social endeavour was pointed out by Ludwik Fleck in the 1930s. He defends the claim that “[c]ognition is the most socially-conditioned activity of man, and knowledge the paramount social creation [Gebilde]” by carefully analysing a number of often-overlooked mechanisms of how social dynamics influence scientific reasoning processes (Fleck 1979, 42). One such mechanism is inherent to communicative processes both among scientific experts and between experts and laypeople. Fleck, a scientist himself,Footnote 2 describes these in detail (see Fleck 1986; 1979, Chap. 4.4). In particular, he explains the mechanisms of publishing processes of scientific results and hypotheses in different kinds of media, i.e. in popular science for laypeople and in journals and booksFootnote 3 for experts.

Fleck points out how the contents of thoughts change during these communicative processes.Footnote 4 Summarising his ideas, we can highlight two main reasons for such alterations: on the one hand, changes in meaning are often a result of the intention to transmit information as clearly as possible. To make sure that the recipient will understand the information, the speaker tries to anticipate what the common ground of communication might be, i.e., what she could expect her audience to know beforehand and what she has to explain in more detail. She will then translate her scientific findings accordingly. These efforts can be successful, but don’t have to be. Sometimes they might fail and, as a result, misunderstandings can occur. Since the idea that circulates within such a group of people is continuously transformed in this way, Fleck ascribes its authorship to the community as a whole instead of a particular researcher.Footnote 5 It is through these steps of content alterations and adaptions that the social enters epistemic processes. This can be seen particularly clearly at the level of communication from expert to novice.

Social effects can also occur at the level of expert-to-expert communication. Here, changes in meaning are the result of the speaker’s attempt to seek the approval of her community with respect to her research hypotheses. Theses are thus presented in a way that is intended to follow the community’s “thought style”Footnote 6. Fleck thinks that authors will formulate their ideas in accordance with principles embedded within their community. Moreover, it is not only the anticipation of the collective’s expectations that lets the social enter the discourse, but also the actual and continuous discussion of ideas, especially when preparing results and hypotheses while compiling and updating handbooks. Fleck claims: “Very often it is impossible to find any originator for an idea generated during discussion and critique. Its meaning changes repeatedly; it is adapted and becomes common property. Accordingly it achieves a superindividual value, and becomes an axiom, a guideline for thinking” (Fleck 1979, 121). Here, similar social dynamics occur that result in a depersonalisation of thoughts and ideas, just as in the realm of expert-to-novice communication mentioned above.

The social mechanisms of the division of epistemic labour that Fleck highlights are processes of communication – especially publication processes focussing on different kinds of audiences. Fleck describes these communicative acts as a circulation of thoughts. He thereby refers to the reciprocity of the process, which means that, in the end, both speaker and hearer are affected by the change of meaning of genuinely transmitted ideas: “After making several rounds within the community, a finding often returns considerably changed to its originator, who reconsiders it himself in quite a different light. He either does not recognize it as his own or believes, and this happens quite often, to have originally seen it in its present form” (Fleck 1979, 42 f.). Here, the development of ideas is described as the participant’s unconscious self-deception. This means that the inventor of the idea thinks that he himself made the suggestion to take things as they were after the modification took place.Footnote 7 He is seemingly not aware of the fact that others shared the discussion and made relevant contributions. Or, alternatively, the inventor of the idea is no longer able to recognise it as his own invention because it has been altered substantially.

One might feel tempted to think of these transformative processes as mere changes in the way ideas are expressed. However, Fleck argues that this isn’t merely a change in wording, but that these changes can often be regarded as the actual driving force of scientific progress. Following the ideas of Gestalt psychology, he claims that it is due to the thought style of a particular community that certain entities can be perceived as facts. Changes within the conceptual scheme of the thought style at hand allow for making new observations. Progress in science is regarded as a result of shifts in Gestalt perception that are related to changes of the conceptual basis offered by the community’s thought style. “This change in thought style, that is, change in readiness for directed perception, offers new possibilities for discovery and creates new facts. This is the most important epistemological significance of the intercollective communication of thoughts” (Fleck 1979, 110).

Here some critical remarks seem to be called for, as some of Fleck’s theses are quite strong and imply rather controversial social constructivist ideas about the natural world. Expectations caused by theoretical assumptions do play a role in scientific observations – as the problem of the theory-ladenness of observation shows – but the latter are not simply the result of wishful thinking due to theoretical assumptions.Footnote 8 Regardless of this point, we will not pursue the question of social constructivism any further here, because the current analysis is not concerned with ontological questions, but with the division of epistemic labour. It should have become clear that the division of epistemic labour takes place within the scientific community not only by reading, but also by writing.

Writing and publishing processes constitute the first step of an audience’s reception of a fixed content. It is also at this stage that significant changes in meaning and the development of ideas can occur due to the mechanisms explained above. This is the insight gained by Fleck’s careful and informed discussion of actual scientific practices. But his considerations were based on the publishing techniques of his time. His focus is on journals and books. The media landscape, however, has changed considerably since Fleck’s analysis. In the late 20th and early 21st century, digital processes have had a huge impact on the production and publishing processes of scientific journals and books. They have thoroughly changed many of the traditional ways of publicly presenting an idea. In the next section, a closer look will be taken at these modifications by means of a case study, namely by analysing the database SCOPUS. This technological device is of particular interest as its provider tries to implement it at the heart of the division of epistemic labour. The question will be what the epistemic effects of these efforts are.

2 ICTs in Publication Processes: The Case of SCOPUS

Today, the use of ICTs in work processes significantly affects a variety of different practices within the scientific community. These IT solutions are employed for data work, and the corresponding technologies seem to have become indispensable – which is also due to their usage. As Luciano Floridi has pointed out, it is “a self-reinforcing cycle” (Floridi 2014, 13), that is, the more of these technologies are used, the more data is produced by them that has to be managed.

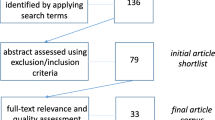

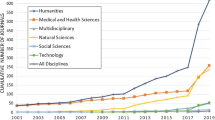

This acceleration of data production can also be noticed with respect to publishing processes. Let us focus on scientific journals to illustrate the point: an increasing number of journals have become globally available and accessible via the Internet. Moreover, new publishing formats have been established such as online first publications or repositories offering open access pre-print versions of scientific articles.Footnote 9 As a result, more and more articles of all kinds are published, and the need to navigate through this flood of information becomes even more pressing. IT-tools offering help therefore seem to be more than welcome within the scientific community. The database SCOPUS is one such solution, offering services to the community that are considered helpful – at least according to its provider. So what is SCOPUS about?

On the company’s website, its services are advertised as follows: “Scopus uniquely combines a comprehensive, curated abstract and citation database with enriched data and linked scholarly content. Quickly find relevant and trusted research, identify experts, and access reliable data, metrics and analytical tools to support confident decisions around research strategy – all from one database and one subscription.”Footnote 10 Two main functionsFootnote 11 of the database can be highlighted: first, it serves research purposes by distributing information – one can search the database with respect to scientific articles, data, and experts in a field of study. The company claims that the data made available belongs to a broad variety of different academic disciplines: “[…] Scopus delivers the most comprehensive overview of the world’s research output in the fields of science, technology, medicine, social science, and arts and humanities” (Elsevier 2020, 4, 6 emphasis added).

Second, SCOPUS is also a tool meant to evaluate science. For example, the database can be used to find “experts” in a specific field of study. To this end, criteria are required that algorithms can work with, namely numbers or digital units. This concerns the field of “scientometrics”, that is, “[…] the study of science through the measurement and analysis of researchers’ productive outputs. These outputs include journal articles, citations, books, patents, data, and conference proceedings” (Schmid 2020).Footnote 12 SCOPUS is not merely another search engine on the web. It is also a tool used to qualitatively assess scientific achievements.

For individual scientists, this is achieved by calculating and displaying their h-index.Footnote 13 This metrical number is based on how often an author’s scientific papers have been cited – a number that is generated by processing the data stored in SCOPUS. More precisely, “[t]he h-index considers the number of publications produced by an individual or organization and the number of citations these publications receive. An individual can be said to have an h-index of h when she produces h publications each of which receives at least h citations and no other publication receives more than h citations” (Schmid 2020). Thus, the h-index is meant to eliminate discordant values in the metrics so that a more harmonised overall picture of a scientist’s achievements over time can be generated. There are, however, many problems related to such quantitative approaches of science evaluation (see e.g. Herb 2018; Krull 2017; Gillies 2008), and we will come back to a critical discussion of some of these in the next section.
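The definition quoted above can be made concrete with a minimal sketch. It assumes the standard reading of the h-index, namely the largest h such that h publications have at least h citations each. The function name `h_index` and the sample citation counts are purely illustrative, and nothing here is meant to reflect how SCOPUS actually computes or stores the metric.

```python
def h_index(citations):
    """Return the largest h such that h publications have at least h citations each.

    `citations` is a list of per-publication citation counts (illustrative input,
    not data taken from SCOPUS).
    """
    ranked = sorted(citations, reverse=True)  # most-cited publication first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` publications all have at least `rank` citations
        else:
            break
    return h


# Five publications with a fairly even citation record.
print(h_index([10, 8, 5, 4, 3]))

# One extremely highly cited paper barely moves the index.
print(h_index([1000, 1, 1]))
```

The second call illustrates the levelling effect mentioned above: a single outlier with a thousand citations does not raise the index, because only the number of publications that clear the threshold counts.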

For now, let us stick to the descriptive task: the utility and success of SCOPUS depends on the sufficiency and quality of its database. So what kind of data is collected by SCOPUS and where does it come from? We will start with the latter question: in 2021, more than 7,000 publishers were used as a data source.Footnote 14 In total, more than 82 million data sets are stored and, thus, searchable via SCOPUS. They encompass more than 17 million author profiles and more than 80,000 institutional profiles. The provider also claims that 1.7 billion cited references dating back to the 1970s turn this database into a treasure chest for anybody interested in data mining.

By indexing an increasing amount of information and collecting more and more data, “Elsevier” wants to play an important role in what is called “big data science”.Footnote 15 However, beyond the mere quantity of data, there is also the question about its quality. As the quote at the beginning of this section shows, the company claims that their database perfectly meets this requirement. They claim that, by using SCOPUS, researchers are able to “[q]uickly find relevant and trusted research, identify experts, and access reliable data, metrics and analytical tools […].”Footnote 16 This leaves us with the questions of (1) which criteria are used to assess the quality of the data provided by SCOPUS, that is, what makes the data reliable and trustworthy, and (2) how does “Elsevier” make sure that only reliable and trustworthy data is stored and made available?

Again, let us address the latter question first: the provider describes the content selection process as comprising different steps to ensure the quality of the data.Footnote 17 Two main components can be highlighted: on the one hand, formal criteria have been defined to allow for a preselection of sources (publishers, book series, journals) and, on the other hand, a content selection and advisory board (CSAB) has been established in order to control decisions, based on these criteria by adding relevant expertise from different academic fields.Footnote 18 So in order to be added to SCOPUS, a suggested journal title first has to meet all of the following formal criteria:

- “Consist of peer-reviewed content and have a publicly available description of the peer review process.
- Be published on a regular basis and have an International Standard Serial Number (ISSN) as registered with the ISSN International Centre.
- Have content that is relevant for and readable by an international audience, i.e., have references in Roman script and have English language abstracts and titles.
- Have a publicly available publication ethics and publication malpractice statement” (Elsevier 2020, 17).
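The logical structure of this preselection is a strict conjunction: failing any single criterion excludes a title before any expert review takes place. The following sketch merely illustrates that structure. The class `JournalRecord`, its field names, and the function `passes_formal_criteria` are hypothetical labels for the four quoted criteria and are not taken from any Elsevier system.

```python
from dataclasses import dataclass


@dataclass
class JournalRecord:
    # Hypothetical fields, one per formal criterion quoted above.
    peer_reviewed_with_public_description: bool
    regular_publication_with_issn: bool
    roman_script_refs_and_english_abstracts: bool
    public_ethics_statement: bool


def passes_formal_criteria(journal: JournalRecord) -> bool:
    """Return True only if every formal criterion is met (a strict conjunction)."""
    return all([
        journal.peer_reviewed_with_public_description,
        journal.regular_publication_with_issn,
        journal.roman_script_refs_and_english_abstracts,
        journal.public_ethics_statement,
    ])


# A journal that meets every criterion except the language requirement
# is excluded before any content-based review.
candidate = JournalRecord(
    peer_reviewed_with_public_description=True,
    regular_publication_with_issn=True,
    roman_script_refs_and_english_abstracts=False,
    public_ethics_statement=True,
)
print(passes_formal_criteria(candidate))
```

On this reading, a title's scholarly merit plays no role at the first stage: a journal without English abstracts, for instance, never reaches the reviewers at all.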

If the journal passes this first suitability test, a review process by the members of the CSAB will take place. Its members are introduced as experts, that is, as specialists of various academic disciplines. They are supposed to select the content relevant to their research community and to guarantee the neutrality of the decisions being made. Again, a list of criteria has been defined to guide their choices and to make them transparent. These criteria are related to the following five aspects: “journal policy, content, journal standing, publishing regularity, online availability”.Footnote 19 Regarding the journal policy, the CSAB members are, for instance, expected to take into account the diversity of a journal’s editors and authors with respect to their geographical residence. The content criterion is thought to guarantee, for example, the “academic contribution to the field”. Journal standing refers, among other things, to how often the journal under consideration has already been cited by indexed sources stored in SCOPUS. The publishing regularity is evaluated by taking a look at probable delays in publishing processes and their frequency. And, finally, the criterion of online availability is focused on the question of whether the whole journal content is available online and whether the journal’s website is in English and readable by an international audience. In contrast to the formal selection criteria, all of which a journal has to meet in order to be taken into consideration for being indexed by SCOPUS, there is no information available about the weighting of the criteria used by CSAB members.

Moreover, to ensure the quality of their data, “Elsevier” claims that a title re-evaluation process takes place on an annual basis to make sure that journals once selected continue to meet the standards set by the provider.

All of these procedures are meant to improve and ensure the advertised high quality of the data offered by SCOPUS. ‘High quality’ is defined in terms of relevance and acceptance within the respective scientific community, as the following quotation illustrates: “Scopus quickly finds relevant and authoritative research, identifies experts and provides access to reliable data, metrics and analytical tools. Be confident in progressing research, teaching or research direction and priorities — all from one database and with one subscription.”Footnote 20 What becomes clear is that “Elsevier” strives for a monopoly position regarding its database. Scientists are supposed to rely more or less exclusively on SCOPUS.Footnote 21

Assuming that “Elsevier”’s product policy will be successful, that is, scholars give in to the temptation of relying completely on the services of this one database, SCOPUS will not only add content to their research processes, but will also structure and guide the processes based on the pre-selection of data sources. Whether this is a reason to worry about the epistemic success of the entire process hinges on the question of whether the criteria meant to guarantee the quality of the data are suitable in the context of the particular scientific community.

Implementing ICTs in publishing processes in science has an increasing impact on the division of epistemic labour. Contrary to Fleck’s initial idea of circulating ideas – which involved only two types of agents, i.e. authors and their different audiences (fellow scientists, students, lay people) – we now have to add a third player: the provider of the database. Moreover, this new player is not a neutral party in the process of developing scientific hypotheses, as some might still assume by claiming that it is only a supportive technology which is provided by this player. The presumed neutrality is undermined by the fact that the provider has an enormous impact on research processes. This is due to the criteria that “Elsevier” defines to evaluate the suitability of data for a given task. The data selection process is no longer a task that researchers themselves carry out. Instead, it has already been preconfigured by the provider of SCOPUS which is, in the end, a player driven by commercial interests. The acceleration of content production and the multiplication of data availability due to the use of ICTs make reliance on technology a necessity in order to navigate this flood of information. So there is no option of simply opting out.

Thus, the above case study shows that, in the 21st century, another loop has to be added to Fleck’s initial description of the inter- and intracollective circulation of ideas. Ideas and hypotheses are no longer developed only via a communicative exchange between scientists and their intended audience (and respective feedback loops), but also as a result of data selection processes and related mechanisms preconfigured by technology providers such as “Elsevier”. Given the fact that this new player is not neutral, we have to investigate the effects of this newly structured division of epistemic labour. This will be discussed in the next two sections. Here, a new version of Fleck’s thesis that the circulation of ideas affects their meaning will turn up when we investigate whether ideas presented in publications are altered due to this new player.

3 The New Bottleneck?

It seems that what scientists will have to face as a result of using ICTs such as SCOPUS in communication processes is that a technology provider has an impact on their research process. This technology provider is, first and foremost, a commercial agent whose interests will play a crucial role. In the case study above, two aspects are relevant in this respect. First, concerning big data issues, the emphasis is on quantifiable criteria guiding content selection processes which can be processed by the algorithms of SCOPUS. Due to this technological requirement, the current trend to focus on quantitative criteria to evaluate scientific achievements – such as the h-index discussed above – is accelerated. This tendency is increased even further by the second point of concern, namely the provider’s obvious striving for a monopoly position. “Elsevier” clearly states that they want to be a one-stop shop for their users so that no alternative product is needed.

It is via this route that the interests of the commercial provider have an impact on epistemic processes in science. The provider becomes a third teammate in the division of epistemic labour besides authors and recipients. In this context, “Elsevier” defines criteria that are meant to guide the content selection process and thereby establishes quality standards which will preconfigure research activities in science. But why should we be concerned about this? After all, one result of the above case study was that “Elsevier” seemingly tries to do its best to guarantee a high quality of the data. The company has established a procedure consisting of various evaluative steps, even comprising a so-called “independent” advisory board, the CSAB. Why should we not trust these mechanisms?

Two reasons call for a more critical stance towards this technological development: first, “Elsevier” strives for a monopoly, as statements such as the following show: “When searching for insights, efficiency is a priority. Being able to search a single, trustworthy and authoritative database saves valuable time that would otherwise be spent cross-checking multiple databases and having to confirm results.”Footnote 22 Users, that is, scientists, are not supposed to gain data from different sources. The company wants them to only use SCOPUS. Assuming that “Elsevier” will be successful in this attempt, researchers will have to adapt to this technology, regardless of whether the content provided is actually what they need. Establishing a monopoly means that no alternative will be available any longer. Obviously, chances are good that this will create a new bottleneck: ideas, data, and hypotheses presented in journal papers that are indexed by SCOPUS will be taken into consideration in future research activities, whereas ideas etc. that are excluded due to SCOPUS’s selection criteria will be barred from future discourse.

The second reason is that quantifiable criteria will dominate the process, such as the h-index with respect to the presumed expertise of a given scholar. For sure, this kind of evaluative criteria is nothing new and has not been invented by the provider of SCOPUS. Nonetheless, by making their technology the heart and soul of the division of epistemic labour, these quantitative criteria will have a continuously growing influence on the scientific community. At the same time, the latter is not as homogeneous as this quantitative approach presupposes, regardless of the question of whether these criteria are adequate at all. Again, this means establishing a new criterion to guide future research processes. Scientists are supposed to pick papers as sources that are written by their peers, who are marked as experts due to their high h-index displayed on that platform. This is the criterion of excellence that the providers of SCOPUS guarantee their users. However, it is far from clear that expertise can be determined by counting citations – which is the basis of the h-index, as explained above. But even if we were to agree that scientific quality can be measured by numbers in this way, it would be a mere stipulation that the epistemic authorities can only be found on SCOPUS. Consequently – again under the prerequisite that SCOPUS is successful in its monopoly-like strategy – the database influences the development of hypotheses and ideas, in this case by preconfiguring the core of the thought collective, that is, what Fleck calls its “esoteric circle” (Fleck 1979, 105). Determining who the experts are, based on their h-index, also implies that these are the people who other scientists have to exchange ideas with in order to develop their hypotheses. Peers thus indicated as experts are also the people other scientists will ask for approval regarding their own hypotheses. This is another important point where we see criteria developed by technology providers influencing research activity, that is, the circulation of ideas in Fleck’s sense.Footnote 23

In the following section, we will take a look at some problems regarding the epistemic processes in science that can arise because of these two points.

4 Big Data, the Problems of Diversity, and Systematic Bias

SCOPUS is meant to be a big data solution. “Elsevier” claims, for example, that its IT-tool can be used to identify experts and upcoming research trends.Footnote 24 Such evaluative tasks hinge on both the quantity and the quality of the data available – but how suitable is the data stored in SCOPUS with respect to such tasks?

Adherents of big data might raise the objection that this is a question of quantity alone: “Moving into a world of big data will require us to change our thinking about the merits of exactitude. […] the obsession with exactness is an artifact of the information-deprived analog era. When data was sparse, every data point was critical, and thus great care was taken to avoid letting any point bias the analysis. Today we don’t live in such an information-starved situation. […] Rather than aiming to stamp out every bit of inexactitude at increasingly high cost, we are calculating with messiness in mind” (Mayer-Schönberger and Cukier 2013, 40). It can be assumed that SCOPUS’s provider thinks that they can offer a large quantity of data – much more than has ever been available to a scientist by consulting only one database. Nonetheless, the provider’s insistence on quality shows that they do not share Mayer-Schönberger and Cukier’s thesis that their users could well live with a certain amount of messiness. The reason is that the data stored in SCOPUS belongs to many different academic disciplines. Consequently, the mere quantity of SCOPUS’s data alone might not be helpful for answering a particular question in a particular field of study. A selection has to be made which might significantly reduce the amount of data. The threshold of sufficiency cannot – at least at the moment – be guaranteed. Therefore, the quality of the data provided matters. It is thus unsurprising that “Elsevier” advertises its product as a source of very reliable data. This assessment is based on the multi-level content selection process carried out in order to guide decisions about which journals (books etc.) to index and which ones to dismiss. Does this provide the quality standard needed to use SCOPUS as a tool for searching trustworthy experts and research trends in science?

Apparently not, because the mechanisms described and the criteria on which they are based do not rule out the serious problem of systematic bias inherent in the data. The data selection process used to fill SCOPUS’s stacks is based on the assumption that it should mirror the current state of research within the community to allow scientists to develop state-of-the-art theories etc. However, if the current state of research already contains some sort of systematic bias, that bias will also be present in SCOPUS. That such difficulties exist in science has recently been demonstrated by feminist philosophers of science.[note 25]

Moreover, as Fleck has pointed out, we shouldn’t be surprised by this fact because all thought collectives, including those in science, are prone to what he calls the “harmony of illusions”. By this he refers to the tendency of the members of a (scientific) community to stick to their theories, or more generally speaking, beliefs. “Once a structurally complete and closed system of opinions consisting of many details and relations has been formed, it offers constant resistance to anything that contradicts it” (Fleck 1979, 27).

Mechanisms such as peer review processes play their part in the creation of such a harmony of illusions, as Donald Gillies argues. He points out that, more often than not, it is mainstream topics and approaches that are selected by referees as suitable for publication (see Gillies 2008, 33). Because these referees themselves work as scientists, their own research agendas inform their decisions. Consequently, we will just get more of the same, so to speak. Gillies wryly describes a suitable strategy for publishing a paper in a peer reviewed journal: “If you want a paper published in a leading academic journal, you have to choose a well-established discussion, make flattering comments about all the participants so far, and then add an epicycle to their work” (Gillies 2008, 38). He also points out that the decision to follow such a strategy is not a simple opt-in or opt-out decision, but vital to the scientist’s career opportunities: “Now in order to get a job, and then promotion, researchers need to publish in leading academic journals. Thus most young researchers will follow the strategy just indicated” (Gillies 2008, 38). These remarks illustrate that the quality standards implemented by the provider of SCOPUS – which are based on, among other factors, such peer review processes – tend to diminish the diversity and plurality of scientific hypotheses within the community by eliminating those that do not follow the current mainstream. An idea that cannot be shared due to these mechanisms will have a very hard time surviving.

But this is not the only reason for concern. Things can get even worse. Suppose that the current state of research contains errors. This should not be a surprising assumption. Karl R. Popper pointed this out long ago by endorsing the view of fallibilism in the philosophy of science (see e.g. Popper 2002). Even Fleck, who is notoriously reluctant to talk about truth and falsity with respect to comparing the ideas, statements, theories etc. of members of different thought collectives,[note 26] calls the general result of a collective’s tendency to stick to once-acquired ideas a “harmony of illusions” (Fleck 1979, 38, emphasis added). ‘Illusions’, however, are (usually) not the same as the truth. Coming back to SCOPUS, the criteria chosen to ensure the proclaimed quality of its data, with their emphasis on the relevance of peer review processes, might contribute to the addition and circulation of mistakes and biases already contained in the thought style at hand. Even worse, faulty data might be consolidated by relying on databases such as SCOPUS.

Admittedly, social mechanisms such as peer review processes do not have to contribute to such a negative development. On the contrary, they can also be used as correctives in epistemic processes. Naomi Oreskes, for instance, highlights this fact (see Oreskes 2019, 58 f.). According to her, it is exactly due to these mechanisms[note 27] that people are still entitled to trust the objectivity of scientific findings, although scientists are fallible and some are also prone to be led by economic or political interests instead of seeking the truth.[note 28] Oreskes argues that even though some scientific theories turned out to be false and some researchers have been found guilty of tampering with their data as a result of wrong motivations, the ability to reach a consensus despite the diversity of theories and explanatory accounts speaks in favour of the overall trustworthiness of science (see Oreskes 2019, 142 f.).

Oreskes admits that this positive evaluation of science’s trustworthiness strongly hinges on its epistemic processes working properly. As an important part of this ideal, she mentions the diversity of the community. “This, it seems to me, is the most important argument for diversity in science, and for diversity in intellectual life in general. A homogenous community will be hard-pressed to realize which of its assumptions are warranted by evidence and which are not. After all, just as it is hard to hear your own accent, it is hard to identify prejudices that you share. A community with diverse values is more likely to identify and challenge prejudicial beliefs embedded in, or masquerading as, scientific theory” (Oreskes 2019, 136 f.).

A similar idea about the relevance of plurality in science has been put forward by Thomas S. Kuhn. He claims that scientific progress is a result of working with a variety of different approaches – even if only one of them will turn out to be the next paradigmatic account (see Kuhn 1977, 435 f.). Again, the background assumptions are that scientists make use of value judgements in order to decide which theories to further develop and that the values used in this context aren’t necessarily epistemic or shared by the community. But instead of being worried about this fact, Kuhn actually thinks it is an advantage that scientists are guided by different values and interests and therefore develop different theories. The benefit of this more tolerant strategy is that the risk of adhering to the wrong account can thus be minimised (see Kuhn 1977, 436).

Unfortunately, it is exactly at this stage of the process – where it becomes clear that diversity is of the utmost importance – that the case study above shows how things seem to go awry in science. Diversity and pluralism are virtues that tend to be forfeited because of the capacity of ICTs to make scientific reasoning processes more efficient – in other words, to streamline them. Admittedly, this is not a negative effect of digital technology per se, nor a problem inherent to the SCOPUS database. Still, the technology functions as a magnifying glass, so to speak, by putting into focus trends in science that might turn out to be vices.[note 29] Oreskes underestimates how far actual practice is from the ideal case that she has in mind when stressing the relevance and potential of social mechanisms as a corrective in science.

This worry is made plain by Jon A. Krosnick in his comment on Oreskes’s ideas (see Krosnick 2019). Besides the problem of a reduction of diversity, he points out several difficulties resulting from the ever increasing pressure due to the competition in science. This competitive setting amplifies the call for quantifiable criteria of comparison,[note 30] criteria which will be highly welcomed by technology providers such as “Elsevier”, as they can be used to feed and query their database. However, such quantitative evaluation strategies tend to accelerate market competition and encourage violations of good scientific practice, as Krosnick points out by discussing some recent examples, such as the problem of replicating experimental findings published in the empirical sciences. And here we come full circle when he explains how science as a social institution fits into the picture: “Researchers publish a lot […] They want to disseminate findings as quickly as possible, and they attract the attention of the news media. Institutions encourage all this, because universities are increasingly using metrics counting publications and citation accounts in tenure and promotion decisions” (Krosnick 2019, 209, emphasis added).

To sum up: social mechanisms in science can contribute to epistemic progress in science, but they might also have the opposite effect. ICTs such as SCOPUS, implemented at the heart of the division of epistemic labour, can function as a sort of magnifying glass putting into focus the pros or cons of the mechanisms at work. In particular, if these technologies are guided by the economic interests of a company, it will be highly unlikely that such IT-tools contribute to an enhancement of epistemic practices already going awry.

5 Conclusion

ICTs have a deep impact on scientific activities. The aim of the above analysis was to point out how this impact affects practices of science communication and hence the development of ideas in the course of the division of epistemic labour. Fleck’s theses offer a good starting point showing how social mechanisms are involved in the development of an idea. His focus on communication and publication processes in particular helps to explain how the division of epistemic labour works in the scientific community. Fleck emphasises the relevance of the social dynamics in science and, as a scholar informed by his own practices, explains how these mechanisms can contribute to the advantage (e.g. to epistemic progress) as well as to the disadvantage (e.g. to a harmony of illusions) of epistemic practices in science. In this sense, his approach seems to be more nuanced than Oreskes’s – who mostly highlights the positive effects of social mechanisms, which provide an important reason why people should trust science. “The social character of science forms the basis of its approach to objectivity and therefore the grounds on which we may trust it” (Oreskes 2019, 58). Of course, she is aware of the fact that science can also go awry, as her many examples show (see Oreskes and Conway 2012; Oreskes 2019). But she seems to underestimate that such problems can also arise at the heart of the division of epistemic labour, that is, with regard to processes that she highlights as crucial epistemic correctives in science. “What leads to reliable scientific knowledge is the process by which claims are vetted. Crucially, that vetting must involve diverse perspectives and the presentation of evidence collected in diverse ways” (Oreskes 2019, 232, emphasis added).

However, the case study of SCOPUS shows that negative effects of ICTs affect the vetting processes pointed out by Oreskes. In particular, the crucial call for a diversity of perspectives seems to be under threat. Additionally, due to the database provider’s intention of offering a one-stop shop solution, possible routes of developing a more pluralistic setting of science also appear to be blocked. Detecting and eliminating systematic biases that might once have infected groups of scientists, as highlighted by feminist philosophers of science, therefore becomes even harder in the digital world of science communication.

Now, what can be done to improve this problematic situation? Unfortunately, there is no easy answer. We have seen that crucial mechanisms of digital publishing processes and processes aimed at developing metrical means for the evaluation of science interact at this point and are currently dominated by commercial agents. Consequently, a suggestion would be to reduce the grip of these players by putting power back into the hands of the scientific community. Possibilities under discussion include developing alternative infrastructural means at universities, for instance at academic libraries, that can support scientists in their daily practices of searching for information and publishing results in the digital age. Such solutions could take into account the call for diversity right from the start. However, strengthening the autonomy of the scientific community in this sense also implies a change in metrical practices. What is called for in the end is the development of a new attitude towards what counts as scientific achievements and how they can be properly assessed.[note 31]

Notes

See https://www.elsevier.com/en-gb/about, accessed August 18, 2021. They have recently re-named their portfolio in the following way: “Elsevier is a leader in information and analytics for customers across the global research and health ecosystems” (ibid., emphasis added).

Fleck was primarily working as a microbiologist (see Schnelle 1986).

He talks about “vademecum science” and distinguishes this from textbooks used to introduce students to a scientific field (see Fleck 1979, 112).

He claims: “Communication never occurs without transformation, and indeed always involves a stylized remodeling, which intracollectively achieves corroboration and which intercollectively yields fundamental alteration” (Fleck 1979, 111). ‘Intracollective’ refers to the exchange of ideas amongst members of the same community (or “thought collective”, as Fleck would call such a group of people – for Fleck’s terminology and related problems of translation, see Jarnicki 2016). ‘Intercollective’ refers to communication processes between members of different communities. His claim regarding the alteration of ideas due to communication processes has been critically analysed in relation to the use of visual representations as means to transmit scientific hypotheses (see Mößner 2016).

“Thoughts pass from one individual to another, each time a little transformed, for each individual can attach to them somewhat different associations. Strictly speaking, the receiver never understands the thought exactly in the way that the transmitter intended it to be understood. After a series of such encounters, practically nothing is left of the original content. Whose thought is it that continues to circulate? It is one that obviously belongs not to any single individual but to the collective” (Fleck 1979, 42, emphasis added).

Fleck discusses this point in relation to his example of the development of the Wassermann reaction (see Fleck 1979, Chap. 3). He points out that Wassermann himself wrongly recapitulated the invention of this diagnostic device by telling a story of success right from the beginning although it can be shown that many modifications had to take place for the Wassermann reaction to work properly (see ibid., 75 f.).

Admittedly, this is a very brief remark on the vast discussion between scientific realists and anti-realists. For more details see, for example, Peter Kosso (1993), who offers insightful remarks on how to reconcile the relevance of theories and the claim of objectivity in science.

Scholars can use repositories hosted by the libraries of their universities. Moreover, the platform “arXiv.org” became famous in scientific circles: “arXiv® is a free distribution service and an open archive for scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics. arXiv is a collaboratively funded, community-supported resource founded by Paul Ginsparg in 1991 and maintained and operated by Cornell University”, https://arxiv.org/about, accessed August 16, 2021. A similar project in philosophy is “philpapers.org”: “PhilPapers is a comprehensive index and bibliography of philosophy maintained by the community of philosophers. […] We also host the largest open access archive in philosophy. Our index currently contains 2,595,824 entries categorized in 5,684 categories. […]”, https://philpapers.org/, accessed August 19, 2021.

https://www.elsevier.com/solutions/scopus/why-choose-scopus, accessed August 16, 2021.

This might vary a bit due to different user groups. Of course, university administrations and corporate managements might highlight different performances of this IT-tool than, for instance, individual researchers. On the intended user groups, see https://www.elsevier.com/solutions/scopus/who-uses, accessed August 16, 2021. In the present paper, the focus will be on scientists and their use of SCOPUS in their daily practices.

An overview of the developing field of scientometrics and its relation to bibliometrics is offered by Leydesdorff and Milojević (2020).

The h-index was invented by Jorge Hirsch in 2005 as an alternative to the formerly used and highly criticised impact factor (see Hirsch 2005). The impact factor offers information about a particular journal, i.e. how often it has been cited during a certain period of time. The impact factor is therefore not suitable for measuring the achievements of a particular author who has published an article within a journal, because it is unclear whether this particular article has been cited at all. By contrast, the h-index was designed to allow for the assessment of a particular scientist or author – not the journal in which they published their research.
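The contrast between a journal-level and an author-level measure can be made concrete with a minimal sketch of how an h-index is computed. It assumes the standard definition from Hirsch (2005): an author has index h if h of their papers have been cited at least h times each. The function name `h_index` and the citation counts are hypothetical illustrations; they are not taken from SCOPUS and do not reflect how the database itself computes the value.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank the papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            # The paper at this rank still has enough citations.
            h = rank
        else:
            # All remaining papers have fewer citations than their rank.
            break
    return h


# Hypothetical citation counts for one author's five papers.
example = [10, 8, 5, 4, 3]
print(h_index(example))  # prints 4
```

In this example four papers have at least four citations each, but there are not five papers with at least five citations, so the index is 4. Note that the value depends only on the author's own citation counts, not on the journals in which the papers appeared.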

See https://www.elsevier.com/solutions/scopus/how-scopus-works, accessed August 16, 2021.

Big data can be characterised by referring to the three “Vs”, namely, volume, velocity, and variety. ‘Volume’ is related to the vast amount of data. ‘Velocity’ refers to the ability to process, but also to generate, data very quickly. ‘Variety’ refers to the diversity of data gained by many different sources (see Mayer-Schönberger 2015, 14). On the topic of big data and its impact on science and society see Mayer-Schönberger and Cukier 2013; Floridi 2014, 13 ff.

https://www.elsevier.com/solutions/scopus/why-choose-scopus, accessed August 16, 2021, emphasis added.

See https://www.elsevier.com/solutions/scopus/how-scopus-works/content/content-policy-and-selection, accessed August 16, 2021.

See https://www.elsevier.com/solutions/scopus/how-scopus-works/content/scopus-content-selection-and-advisory-board, accessed August 16, 2021.

https://www.elsevier.com/solutions/scopus/how-scopus-works/content/content-policy-and-selection, accessed August 16, 2021.

https://www.elsevier.com/solutions/scopus, accessed August 16, 2021, emphasis added.

SCOPUS was launched in 2004. There are only two competitors on the market, namely “Web of Science (WOS)”, https://clarivate.com/webofsciencegroup/solutions/web-of-science/, and “Google Scholar”, https://scholar.google.com/, accessed March 15, 2021.

https://www.elsevier.com/solutions/scopus/how-scopus-works/content, accessed August 16, 2021, emphasis added.

It is also possible that automatic translation processes etc. entail that technology itself might become the direct cause of changes in meaning and of ideas transmitted via those means – although SCOPUS does not (yet) work this way. Thanks to an anonymous reviewer who made me aware of this point.

See https://www.elsevier.com/solutions/scopus/why-choose-scopus, accessed August 16, 2021.

See, for instance, the examples discussed in Longino (2008).

Concerning the concept of truth, Fleck claims the following: “Such a stylized solution, and there is always only one, is called truth. Truth is not ‘relative’ and certainly not ‘subjective’ in the popular sense of the word. It is always, or almost always, completely determined within a thought style. One can never say that the same thought is true for A and false for B. If A and B belong to the same thought collective, the thought will be either true or false for both. But if they belong to different thought collectives, it will just not be the same thought! It must either be unclear to, or be understood differently by, one of them” (Fleck 1979, 100, emphasis added).

Another example of hers is getting tenure (see ibid.).

Admittedly, there is a debate in the philosophy of science whether ‘seeking the truth’ can be regarded as the (main) goal of science. It might be helpful to turn to virtue epistemology to seek support for defending the thesis that truth is indeed an epistemic virtue worth striving for (see e.g. Pritchard 2021 and references therein). Thanks to an anonymous referee who drew my attention to this point.

To clarify the relation between bias and vices: not reflecting upon biases, ignoring them, or not even considering the possibility that biases might have infected the scientific process can be regarded as a vice of the scientific community.

Ulrich Herb and Uwe Geith (2020) have stressed that these numbers are just one means of properly evaluating the publications of scientists and should be supplemented by a diverse set of qualitative criteria.

A statement paper by the German Research Foundation has recently claimed that it is time to re-think these practices (see DFG 2022).

References

Deutsche Forschungsgemeinschaft (DFG) (2022) Wissenschaftliches Publizieren als Grundlage und Gestaltungsfeld der Wissenschaftsbewertung. Herausforderungen und Handlungsfelder. https://www.dfg.de/download/pdf/foerderung/grundlagen_dfg_foerderung/publikationswesen/positionspapier_publikationswesen.pdf

Douglas HE (2009) Science, Policy, and the Value-Free Ideal. University of Pittsburgh Press, Pittsburgh, Pa

Elsevier ed (2020) Scopus. Content Coverage Guide. https://www.elsevier.com/__data/assets/pdf_file/0007/69451/Scopus_ContentCoverage_Guide_WEB.pdf

Fleck L (1979) Genesis and Development of a Scientific Fact. Edited by Trenn TJ, Merton RK, translated by Bradley F, Trenn TJ, Chicago and London: University of Chicago Press

Fleck L (1986) “The Problem of Epistemology [1936]”. In: Fleck L, Cognition and Fact. Materials on Ludwik Fleck, edited by Cohen RS, Schnelle T, Dordrecht: Reidel, pp. 79–112

Floridi L (2014) The 4th Revolution. How the Infosphere Is Reshaping Human Reality. Oxford: Oxford University Press

Gillies D (2008) How Should Research Be Organised? College Publications, London

Herb U (2018) Zwangsehen und Bastarde. Wohin steuert Big Data die Wissenschaft? Inform - Wissenschaft Praxis 69(2–3):81–88. https://doi.org/10.1515/iwp-2018-0021

Herb U, Geith U (2020) Kriterien der qualitativen Bewertung wissenschaftlicher Publikationen. Inform Wissenschaft Praxis 71(2–3):77–85. https://doi.org/10.1515/iwp-2020-2074

Hirsch JE (2005) An Index to Quantify an Individual’s Scientific Research Output. Proc Natl Acad Sci United States Am PNAS 102(46):16569–16572. https://doi.org/10.1073/pnas.0507655102

Jarnicki P (2016) On the Shoulders of Ludwik Fleck? On the Bilingual Philosophical Legacy of Ludwik Fleck and its Polish, German and English Translations. The Translator 22:271–286. https://doi.org/10.1080/13556509.2015.1126881

Kitcher P (1995) The Advancement of Science. Science without Legend, Objectivity without Illusions. Paperback edition. New York and Oxford: Oxford University Press

Kitcher P (2004) On the Autonomy of the Sciences. Philosophy Today 48:51–57. https://doi.org/10.5840/philtoday200448Supplement6

Kitcher P (2011) Science in a Democratic Society. Amherst, NY: Prometheus Books

Kosso P (1993) Reading the Book of Nature. An Introduction to the Philosophy of Science. Cambridge University Press, Reprinted, Cambridge et al.

Krosnick JA (2019) “Comments on the Present and Future of Science, Inspired by Naomi Oreskes”. In: Oreskes N, Why Trust Science? Princeton University Press, Princeton and Oxford, pp 202–211

Krull W (2017) Die vermessene Universität: Ziel, Wunsch und Wirklichkeit. Passagen Verlag, Wien

Kuhn TS (1977) Die Entstehung des Neuen. Studien zur Struktur der Wissenschaftsgeschichte. Edited by Krüger L, Frankfurt/Main: Suhrkamp

Leydesdorff L, Milojević S (2020) “Bibliometrics/Scientometrics”. In: Schintler LA, McNeely CL (eds) Encyclopedia of Big Data, Cham: Springer. https://doi.org/10.1007/978-3-319-32001-4_520-1

Longino HE (2008) “Values, Heuristics, and the Politics of Knowledge”. In: Carrier M, Howard D, Kourany JA (eds) The Challenge of the Social and the Pressure of Practice. Science and Values Revisited. University of Pittsburgh Press, Pittsburgh, Pa., pp 68–86

Mayer-Schönberger V (2015) Was ist Big Data? Zur Beschleunigung des menschlichen Erkenntnisprozesses. APUZ Aus Politik und Zeitgeschichte 65(11–12):14–19

Mayer-Schönberger V, Cukier K (2013) Big Data. A Revolution that Will Transform How We Live, Work, and Think. John Murray, London

Mößner, N (2011) Thought Styles and Paradigms—a Comparative Study of Ludwik Fleck and Thomas S. Kuhn. Studies in History and Philosophy of Science 42 (2): 362-371. https://doi.org/10.1016/j.shpsa.2010.12.002

Mößner, N (2016) Scientific Images as Circulating Ideas: An Application of Ludwik Fleck’s Theory of Thought Styles. Journal for General Philosophy of Science 47 (2): 307-329. https://doi.org/10.1007/s10838-016-9327-y

Oreskes N (2019) Why Trust Science? Princeton University Press, Princeton and Oxford

Oreskes N, Conway EM (2012) Merchants of Doubt. How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming. London et al.: Bloomsbury

Popper KR (2002) “On the Sources of Knowledge and Ignorance”. In: Popper KR, Conjectures and Refutations. The Growth of Scientific Knowledge. Routledge, London and New York, pp 3–39

Pritchard D (2021) Intellectual virtues and the epistemic value of truth. Synthese 198:5515–5528. https://doi.org/10.1007/s11229-019-02418-z

Schmid J (2020) “Scientometrics”. In: Schintler LA, McNeely CL (eds), Encyclopedia of Big Data, Springer, Cham. https://doi.org/10.1007/978-3-319-32001-4_180-2

Schnelle T (1986) “Microbiology and Philosophy of Science, Lwów and the German Holocaust: Stations of a Life - Ludwik Fleck 1896–1961”. In: Fleck, L., Cognition and Fact. Materials on Ludwik Fleck, edited by Cohen RS, Schnelle T, Dordrecht et al.: Reidel, pp. 3–36

Zittel C (2012) Ludwik Fleck and the Concept of Style in the Natural Sciences. Stud East Eur Thought 64(1–2):53–79. https://doi.org/10.1007/s11212-012-9160-8

Internet sources

ArXiv.org (2021): https://arxiv.org/about, accessed August 16

Elsevier (2021): https://www.elsevier.com/en-gb/about, accessed August 18

Google Scholar (2021): https://scholar.google.com/, accessed March 15

Philpapers.org (2021): https://philpapers.org/, accessed August 19

SCOPUS (2021): https://www.elsevier.com/solutions/scopus, accessed August 16

SCOPUS (2021): https://www.elsevier.com/solutions/scopus/how-scopus-works, accessed August 16

SCOPUS (2021): https://www.elsevier.com/solutions/scopus/how-scopus-works/content, accessed March 10

SCOPUS (2021): https://www.elsevier.com/solutions/scopus/how-scopus-works/content/content-policy-and-selection, accessed August 16

SCOPUS (2021): https://www.elsevier.com/solutions/scopus/how-scopus-works/content/scopus-content-selection-and-advisory-board, accessed August 16

SCOPUS (2021): https://www.elsevier.com/solutions/scopus/why-choose-scopus, accessed August 16

SCOPUS (2021): https://www.elsevier.com/solutions/scopus/who-uses-scopus, accessed August 16

Web of Science (WOS) (2021): https://clarivate.com/webofsciencegroup/solutions/web-of-science/, accessed March 15

Acknowledgements

I would like to thank the participants of the workshop “Wissenschaft im digitalen Raum – Erkenntnis in Filterblasen?” and an anonymous reviewer for helpful comments on a previous version of this paper.

Funding

No funds, grants, or other support was received.

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflicts of interest/Competing Interests

The author has no relevant financial or non-financial interests to disclose.

Research involving Human Participants and/or Animals

Not applicable.

Informed Consent

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mößner, N. Databases, Science Communication, and the Division of Epistemic Labour. Axiomathes 32 (Suppl 3), 853–870 (2022). https://doi.org/10.1007/s10516-022-09638-y

DOI: https://doi.org/10.1007/s10516-022-09638-y