Abstract

Natural language syntax yields an unbounded array of hierarchically structured expressions. We claim that these are used in the service of active inference in accord with the free-energy principle (FEP). While conceptual advances alongside modelling and simulation work have attempted to connect speech segmentation and linguistic communication with the FEP, we extend this program to the underlying computations responsible for generating syntactic objects. We argue that recently proposed principles of economy in language design—such as “minimal search” criteria from theoretical syntax—adhere to the FEP. This affords a greater degree of explanatory power to the FEP—with respect to higher language functions—and offers linguistics a grounding in first principles with respect to computability. While we mostly focus on building new principled conceptual relations between syntax and the FEP, we also show through a sample of preliminary examples how both tree-geometric depth and a Kolmogorov complexity estimate (recruiting a Lempel–Ziv compression algorithm) can be used to accurately predict legal operations on syntactic workspaces, directly in line with formulations of variational free energy minimization. This is used to motivate a general principle of language design that we term Turing–Chomsky Compression (TCC). We use TCC to align concerns of linguists with the normative account of self-organization furnished by the FEP, by marshalling evidence from theoretical linguistics and psycholinguistics to ground core principles of efficient syntactic computation within active inference.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Implementational models of language must be plausible from the perspective of neuroanatomy (Embick & Poeppel, 2015), but they must also be plausible from the perspective of how biophysical systems behave. We will argue that the structuring influence of the free-energy principle (FEP) can be detected in language, not only via narrative (Bouizegarene et al., 2020), interpersonal dialogue (Friston et al., 2020), cooperative/intentional communication (Vasil et al., 2020) and speech segmentation (Friston et al., 2021), but also at the more fundamental level of what linguists consider to be basic structure-building computations (Adger Forthcoming, Berwick & Stabler, 2019; Chomsky, 1949, 1951, 1956, 1959, 2021a, b, c, 2023).

Natural language syntax yields an unbounded array of hierarchically structured expressions. We argue that many historical insights into syntax are consistent with the FEP—providing a novel perspective under which the principles governing syntax are not limited to language, but rather reflect domain-general processes. This is consistent with a strain within theoretical linguistics that explores how syntactic computation may adhere to “general principles that may well fall within extra-biological natural law, particularly considerations of minimal computation” (Chomsky, 2011, p. 263), such that certain linguistic theories might be engaging with general properties of organic systems (Chomsky, 2004, 2014). Here, we consider the idea that many aspects of natural language syntax may be special cases of a variational principle of least free-energy. To this end, we examine whether a complexity measure relevant to formulations of free-energy—namely, Kolmogorov complexity (Hutter, 2006; MacKay, 1995; Wallace & Dowe, 1999)—relates to legal syntactic processes.

While the FEP has a substantial explanatory scope, across a large range of cognitive systems, it can also be seen as a method or principle of least action for multi-disciplinary research (Ramstead et al., 2021), in much the same way that the notion of economy is typically entertained in linguistics as a programmatic notion (Chomsky, 1995). The FEP describes the optimal behavior of an organism interacting with the environment. The FEP itself has been argued to be more of a conceptual-mathematical model for self-organizing systems (for some, it is a “generic” model; Barandiaran & Chemero, 2009), or a guiding framework (Colombo & Wright, 2021). Thus, when we argue that natural language syntax “complies” with the FEP, this is not to imply that the FEP necessarily bears any specific, direct predictions for linguistic behaviour. Rather, it motivates the construction of conceptual arguments for how some property of organic systems might be seen as realizing the FEP. Hence, we will mostly focus here on presenting a series of principled conceptual relations between the FEP and natural language syntax, with our goal being to promote more systematic empirical research in the near future, given the space required to fully elaborate conceptual sympathies between two mature scientific fields with extensive histories. Nevertheless, after reviewing some of these general sympathies, we will aim to defend a specific analytic approach to the empirical assessment of syntactic models. We will suggest that syntactic derivations minimising algorithmic complexity are licensed over those that result in structures and derivational paths that are less algorithmically compressible.

We begin by summarising the FEP, and describe how syntactic principles are consistent with it. We consider how the FEP is a variational principle of “least action”, such as those that describe systems with conserved quantities (Coopersmith, 2017). We then review key observations from linguistics that speak to the structuring influence of computational efficiency, involving “least effort” and “minimal search” restrictions (Bošković & Lasnik, 2007; Gallego & Martin, 2018; Larson, 2015), viewing language as a product of an individual’s mind/brain, following the standard ‘I-language’ (Chomsky, 1986, 2000) perspective in generative linguistics (i.e., ‘internal’, ‘individual’, ‘intensional’). After modeling the complexity of postulated minimal search procedures—versus their ungrammatical alternatives across a small but representative number of exemplar cases—we propose a unifying principle for how the goals of the FEP might be realised during the derivation of syntactic structures, which we term Turing–Chomsky Compression (TCC). TCC provides a formal description of how the basic mechanisms of syntax (i.e., the merging of lexical items into hierarchically organized sets) directly comply with the FEP. We conclude by highlighting directions for future work.

1 Active inference and the free-energy principle

Before we evaluate any work pertaining to linguistic behaviour, this section introduces key elements of the FEP that motivate its application to language.

1.1 The free-energy principle

The FEP has a long history in theoretical neuroscience (see Friston, 2010 for a review). It states that any adaptive change in the brain will minimise free-energy, either over evolutionary time or immediate, perceptual time (Ramstead et al., 2018). Free-energy is an information-theoretic quantity and is a function of sensory data and brain states: in brief, it is the upper bound on the ‘surprise’—or surprisal (Tribus, 1961)—of sensory data, given predictions that are based on an internal model of how those data were generated. The difference between free-energy and surprise is the difference (specified by the Kullback–Leibler divergence) between probabilistic representations encoded by the brain and the true conditional distribution of the causes of sensory input. This is evident in the following equation, which specifies variational free energy (F) as the negative log probability of observations (õ) under a generative model (i.e., ‘surprise’) plus the Kullback–Leibler divergence (DKL) between the approximate posterior distribution and the true posterior distribution (where Q indicates posterior beliefs, ŝ indicates the states in the generative model, and P indicates the probability under the internal model):

Unlike surprise itself, variational free energy can be evaluated (for a detailed explanation, see Friston et al., 2017a). Under simplifying assumptions, free-energy can be considered as the amount of prediction error; for a mathematical comparison, see Friston et al. (2017b). Minimising free energy minimises surprise, and is equivalent to maximising the evidence for the internal model of how sensory data were generated. By minimising free-energy, the brain is essentially performing approximate Bayesian inference. By reformulating variational free energy—in a way that is mathematically equivalent; see Penny et al. (2004), Winn and Bishop (2005)—we see that free-energy can be considered as a trade-off between accuracy and complexity, whereby the best internal model is the one that accurately describes the data in the simplest manner (where EQ indicates the expected value, and the other variables are the same as those defined above):

Because the Kullback–Leibler divergence can never be less than zero, the variational free energy provides an upper bound on negative log evidence: equivalently, the negative free energy provides a lower bound on log evidence; known as an evidence lower bound (ELBO) in machine learning (Winn & Bishop, 2005). The final equality shows a complementary decomposition of variational free energy into accuracy and complexity. In effect, it reflects the degree of belief updating afforded by some new sensory data; in other words, how much some new evidence causes one to “change one’s mind”. A good generative model—with the right kind of priors—will minimise the need for extensive belief updating and thereby minimise complexity.

The complexity part of variational free energy will become important later, when we will be evaluating the complexity of syntactic processes using a measure derived both in spirit and mathematical heritage from the foundations of the FEP. To present some initial details about this, consider how complexity also appears in treatments of universal computation (Hutter, 2006) and in the guise of minimum message or description lengths (Wallace & Dowe, 1999). Indeed, in machine learning, variational free energy minimisation has been cast as minimising complexity—or maximising efficiency in this setting (MacKay, 1995). One sees that same theme emerge in predictive coding—and related—formulations of free energy minimisation, where the underlying theme is to compress representations (Schmidhuber, 2010), thereby maximising their efficiency (Barlow, 1961; Linsker, 1990; Rao & Ballard, 1999). We will return to these topics below when we begin to formalise features of natural language syntax.

Lastly, the FEP can also be considered from the perspective of a Markov blanket (see Kirchhoff et al., 2018; Palacios et al., 2020; Parr et al., 2020 for detailed explanation), which instantiates a statistical boundary between internal states and external states. In other words, internal (e.g., in the brain) and external (e.g., in the external world) states are conditionally independent: they can only influence one another through blanket states. The blanket states can be partitioned into sensory states and active states. External states affect internal states only through sensory states, while internal states affect external states only through active states (Murphy, 2023a). The implicit circular causality is formally identical to the perception–action cycle (Fuster, 2004). Under previous accounts (Friston et al., 2017a, 2017b), the brain can minimise free-energy either through perception or action. The former refers to optimising (i.e., using approximate Bayesian inference to invert) its probabilistic generative model that specifies how hidden states cause sensory data; in other words, inferring the cause of sensory consequences by minimising variational free energy. The latter refers to initiating actions to sample data that are predicted by its model—which we turn to next.

1.2 Active inference

The enactive component of active inference rests on the assumption that action is biased to realize preferred outcomes. Beliefs about which actions are best to pursue rely on predictions about future outcomes, and the probability of pursuing any particular outcome is given by the expected free energy of that action. Expected free energy (G) can be expressed as the combination of extrinsic and epistemic value (Friston et al., 2017b), where π is a series of actions (i.e., the policy) being pursued, τ is the current time point, and the other variables are the same as those defined above:

Extrinsic value is the expected evidence for the internal model under a particular course of action, whereas epistemic value is the expected information gain; in other words, the extent a series of actions reduces uncertainty.

Notice that the expected versions of Kullback–Leibler divergence and log evidence in the free energy A2) now become intrinsic and extrinsic value respectively (Eq. 3). As such, selecting an action to minimise expected free energy reduces expected surprise (i.e., uncertainty) in virtue of maximising the information gain while—at the same time—maximising the expected log evidence; namely, actively self-evidencing (Hohwy, 2016, 2020). When applied to a variety of topics in cognitive neuroscience, active inference has been shown to predict human behaviour and neuronal responses; e.g., Brown et al. (2013), Friston et al., (2017a, 2017b), Friston (2019), Smith et al. (2019).

As will soon become clear, we will be using these observations concerning complexity to motivate a specific application of these ideas to natural language syntax, utilizing a measurement of algorithmic complexity that shares a mathematical heritage with the FEP, as outlined here.

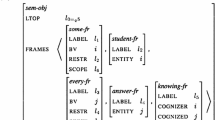

1.3 Belief updating

Belief updating refers to a process by which free energy is minimised. By specifying a process theory that explains neuronal responses during perception and action, neuronal dynamics have previously been cast as a gradient flow on free energy (known as variational free energy in physics, introduced in Feynman, 1972; see Hinton & Zemel, 1994); we refer the reader to Friston et al. (2017b) for a treatment of neuronal message passing and belief propagation. That is to say, any neural process can be formulated as a minimisation of the same quantity used in approximate Bayesian inference (Andrews, 2021; Hohwy, 2016). We provide an example of the computational architecture implied by this formulation of belief updating in the brain (Fig. 1). This illustrative example is based upon a discrete state space generative model, where the equations describe the solutions to Bayesian belief updating of expectations pertaining to hidden states, policies, policy precision and parameters.

Schematic overview of belief updates for active inference under discrete Markovian models. The left panel lists the belief updating equations, associating various updates with action, perception, policy selection, precision and learning. The left panel assigns the variables (sufficient statistics or expectations) that are updated to various brain areas. This attribution serves to illustrate a rough functional anatomy—implied by the form of the belief updates. In this simplified scheme, we have assigned observed outcomes to visual representations in the occipital cortex and updates to hidden states to the hippocampal formation. The evaluation of policies, in terms of their (expected) free energy, has been placed in the ventral prefrontal cortex. Expectations about policies per se and the precision of these beliefs have been attributed to striatal and ventral tegmental areas to indicate a putative role for dopamine in encoding precision. Finally, beliefs about policies are used to create Bayesian model averages over future states, which are fulfilled by action. The red arrows denote message passing. In brief, the parameters of the generative model correspond to matrices or arrays encoding the likelihood A, prior state transitions B, and initial hidden states D. F corresponds to the free energy of each policy and G corresponds to the expected free energy, which is weighted by a precision or softmax parameter γ that is usually attributed to dopaminergic neurotransmission. See Friston et al. (2017a) for further explanation of the equations and variables

In short, the brain seeks to minimise free energy, which is mathematically equivalent to maximising model evidence. This view of neuronal responses can be conceived with respect to Hamilton’s principle of least action, whereby action is the path integral of free energy.

1.4 Previous applications

Applying active inference to language relies on finding the right sort of generative model, and many different structures and forms of generative models are possible. Most relevant to the current application, deep temporal models accommodate a nesting of states that unfold at different temporal scales. Since language output is inherently temporal, this leads to the question of how to map hierarchical structures onto serial outputs (Epstein et al., 1998), and models that are deep in time allow us to deconstruct associated nested structures.

Recently, a deep temporal model for communication was developed based on a simulated conversation between two synthetic subjects, showing that certain behavioural and neurophysiological correlates of communication arise under variational message passing (Friston et al., 2020). The model incorporates various levels that operate at different temporal scales. At the lowest level, it specifies mappings among syntactic units that, when combined with particular semantic beliefs, predict individual words. At a higher level (i.e., longer temporal scales), the model contains beliefs about the context it is in, which specifies the syntactic structure at the level below. This model is congruent with core assumptions from linguistics concerning the generative nature of language. Specifically, elementary syntactic units provide belief structures that are used to reduce uncertainty about the world, through rapid and reflexive categorization of events, objects and their relations. Then, sentential representations can be thought of as structures designed (partially) to consolidate and appropriately frame experiences, and to contextualise and anticipate future experiences. The range of parseable syntactic structures available to comprehenders provides alternate hypotheses that afford parsimonious explanations for sensory data and, as such, preclude overfitting. If the complexities of linguistic stimuli can be efficiently mapped to a small series of regular syntactic formats, this contributes to the brain’s goal of restricting itself to a limited number of states. Essentially, the active inference models for linguistic communication previously developed can generally capture interactional dynamics between agents. We take as our point of departure here the question of what needs to happen within a single agent’s language system. Meanwhile, the psycholinguistic validity and polynomial parseability of minimalist ‘bare phrase structure’ grammars have recently been demonstrated (Torr et al., 2019), but little else has been said of how to motivate the fundamentals of syntactic theory from extra-linguistic computational constraints.

Before moving forward, we stress here that we will be working within the framework of theoretical linguistics (which deals with derivational stages of word-by-word, element-wise operations that underlie sentences), and not a framework such as corpus linguistics (which deals with the output of the generative/derivational process). Questions we raise here therefore cannot be addressed by consulting large corpora, but instead require investigation of incremental computational steps that ultimately appear to be responsible for the complex forms of human language behavior studied by sociolinguists, corpus linguists and historical linguists. Relatedly, embracing the traditional distinction between competence and performance, our focus will be on the former (the mental formatting and generation of linguistic structure), and not on the range of complex cognitive processes that enter into the use of language in a specific context.

2 Computational principles and syntactic hierarchies

2.1 A system of discrete infinity

How can the FEP contribute to our understanding of syntactic computation? Most immediately, it provides fundamental constraints on the physical realisation of a computational system. Consider first the three principles in classical recursive function theory which allow functions to compose (Kleene, 1952): substitution; primitive recursion; and minimisation. These are all designed in a way that one might think of as computationally efficient: they reuse the output of earlier computations. Substitution replaces the argument of a function with another function (possibly the original one); primitive recursion defines a new function on the basis of a recursive call to itself, bottoming out in a previously defined function (Lobina, 2017); minimisation (also termed ‘bounded search’) produces the output of a function with the smallest number of steps (see also Piantadosi, 2021, for whom human thought is essentially like Church encoding). More broadly, we note that free energy minimization—by construction—entails Bayesian inference, which in turn is a computational process, and so the FEP entails computationalism (Korbak, 2021) and at least a type of (basic) computational architecture for language we assume here (but see Kirchhoff & Robertson, 2018). Examining some core principles of recursion, natural language clearly exhibits minimisation, while binary branching of structures (Radford, 2016) limits redundant computation, reducing the range of possible computations. Even limitations on short-term memory have been hypothesized to contribute to the efficiency of memory search (MacGregor, 1987).

Syntax involves the construction of binary-branching hierarchically organized sets via the operation MERGE, taking objects from the lexicon or objects already part of the workspace (Marcolli et al., 2023). For example, given the set {X, Y}, we can either select a new lexical object and MERGE it, to form {Z, {X, Y}}, or we can select an existing object and MERGE it to the same workspace, to form {X, {X, Y}}.Footnote 1 MERGE serves a similar role to an elementary function, as in the theory of computability (e.g., the zero function, the identity function), in that it is meant to be non-decomposable. Putting many subsidiary technical details aside, these sets are then ‘labeled’ and given a syntactic identity, or a ‘head’ (Frampton & Gutmann, 1999; Leivada et al., 2023; Murphy et al., 2022, 2023; Murphy, 2015a, b, c, 2023a; Woolnough et al., 2023), based on which element is most structurally prominent and easiest to search for (i.e., Z in the structure {Z, {X, Y}}).Footnote 2 Labeling takes place when conceptual systems access the structures generated by syntax. This occurs at distinct derivational punctuations based on the configuration and subcategorization demands of the lexical items involved (e.g., in many instances subjects seem to be featurally richer than objects, and provide the relevant feature for the label; Longobardi, 2008). For example, in the case of head-complement structures this is done immediately after MERGE (Bošković, 2016). MERGE can also derive some set-theoretic properties of linguistic relations, such as membership, dominate and term-of, as well as the derived relation of c-command (= sister of) which is relevant for interpreting hierarchical relations between linguistic elements (Haegeman, 1994). These also appear to be the simplest possible formal relations entertained, potentially indexing a feature of organic computation that adheres closely to criteria of simplicity (Chomsky, 2022).

One might also think of MERGE as physical information coarse-graining (i.e., the removal of superfluous degrees of freedom in order to describe a given physical system at a different scale), with the core process of syntax being information renormalization according to different timescales. For instance, MERGE can be framed as a probability tensor implementing coarse-graining, akin to a probabilistic context-free grammar (Gallego & Orús, 2022). The model proposed in Gallego and Orús (2022, p. 20) assumes that language is “the cheapest non-trivial computational system”, exhibiting a high degree of efficiency with respect to its MERGE-based coarse-graining. More recently, MERGE has been described mathematically in terms of Hopf algebras, with a formalism similar to the one arising in the physics of renormalization (Marcolli et al., 2023), and the persistent homology method of topological data analysis and dimensional analysis techniques has been used to study syntactic parameters (Port et al., 2022). Hence, both the computational and mathematical foundations of syntax can be cast in ways that directly accord with the demands of the FEP and active inference.

Natural language syntax exhibits discrete units which lead to a discreteness-continuity duality (the boundary between syntactic categories can be non-distinct).Footnote 3 Syntax is driven by closeness of computation (syntactic objects X and Y form a distinct syntactic object, {X, Y}). Its objects are bounded (a fixed list, e.g., N, V, Adj, Adv, P, C, T, n, v, Asp, Cl, Neg, Q, Det) and their hierarchical ordering is based on a specific functional sequence such as C-T-v-V (e.g., C is always higher than V; Starke, 2004) which imposes direct restrictions on combinatorics (Adger & Svenonius, 2011). These objects can be combined in workspaces, phases or cycles (Frampton & Gutmann, 1999), which can be extended to form non-local dependencies. As we will discuss, these properties are guided by principles of minimal search (an optimal tree-search procedure, informed by notions from computer science; Aycock, 2020; Ke, 2019; Roberts, 2019) and least effort (Larson, 2015), akin to FEP formulations, fulfilling the imperatives of active inference to construct meaningful representations as efficiently as possible (Bouizegarene et al., 2020), directly contributing to surprise minimisation.

2.2 Compositionality

Recently, certain efficiency principles at the conceptual interface (where syntax interfaces with general conceptualization) have been proposed (Pietroski, 2018), such that the ‘instructions’ that language provides for the brain to build specific meanings are interpreted with notable simplicity. Leaving more technical details aside, this is ultimately achieved (in Pietroski’s recent model) through M-join (e.g., F(_) + G(_) → F^G(_), which combines monadic concepts, like red + boat) and D-join (e.g., D(_,_) + M(_) → Ǝ[D(_,_)^M(_)], which merges dyadic concepts with monadic concepts, deriving the meaning of X verb(ed) Y). Hence, natural language permits limited dyadicity as a very minimal departure from the most elementary monadic combinatorial system. Human language is marginally more expressive (in its conceptual interpretations) than a first-order language (i.e., one set, and one embedding), but the interpretation system is the least complex needed to express dyadicity and permit relations between sets. As with models of syntax invoking a simple process of binary set-formation to derive recursion, by restricting the number of computational procedures able to generate semantic structures, this model restricts in highly predictable ways the possible range of outputs.

Consider also how, in neo-Davidsonian event semantics, conjunction is limited to predicates of certain semantic types (Pietroski, 2005; Schein, 1993). Certain semantic rules of composition, in (1b), have been claimed to arise directly from more elementary syntactic computations (Pietroski, 2018) which adhere to principles of efficient computation.

-

(1)

-

a.

Dutch shot Micah quickly.

-

b.

Ǝe[Agent(e, Dutch) & Theme(e, Micah) & shot(e) & quickly(e)]

-

a.

In this connection, it has further been observed that language acts as an artificial context which helps “constrain what representations are recruited and what impact they have on reasoning and inference” (Lupyan & Clark, 2015, p. 283). Words themselves are “highly flexible (and metabolically cheap) sources of priors throughout the neural hierarchy” (Ibid) (for discussion of simplicity in semantic computation, see Al-Mutairi, 2014; Bošković & Messick, 2017; Collins, 2020; Gallego & Martin, 2018; González Escribano, 2005; Hauser et al., 2002; Hornstein & Pietroski, 2009). Overall, the entirety of the core language system (compositional syntax-semantics) appears to be shot through with criteria of efficiency that would inform any nascent generative model of linguistic syntax.

3 A Kolmogorov complexity estimate for narrow syntax

3.1 Economy

The notion of simplicity has been a methodological value which has guided linguistic inquiry for decades (Terzian & Corbalan 2021). Chomsky (2021b, p. 13) notes that “measuring simplicity is an essential task and is no simple matter”. We aim in this section to elaborate a measure of syntactic complexity that connects directly to the principles that underwrite the FEP. We will not be concerned with typological, phonological or acquisitional notions of complexity, which form the bulk of current literature. Instead, we are interested in underlying representational issues that pertain to syntax-semantics. Even the most recent explorations of simplicity in language, such as the volume on simplicity in grammar learning in Katzir et al. (2021), focus on modelling minimum description length in phonology and morphology, or morphosyntactic complexity across distinct languages (Ehret et al., 2023), but not processes pertaining to the internal derivation of syntactic objects. Much of this work fruitfully explores complexity and simplicity across languages (Ehret et al., 2023), using measures such as second language acquisition difficulty and situational diversity (counting the range of communicative contexts a language can be used in), as opposed to the computational architecture of the language faculty itself. Even if we consider possible complexity metrics for ‘syntax’ as a global system, such as degree of subordination (how hierarchically deep a structure can be; e.g., McCarty et al., 2023), this only gets us part of the way; something much more generic and encompassing is needed.

A number of economy principles have been proposed in theoretical linguistics: the No Tampering Condition (Chomsky, 2008), Minimal Link Condition (Chomsky, 1995), Minimal Yield (Chomsky, 2021c), Extension Condition (Chomsky, 1995), Last Resort (Chomsky, 1995), Relativised Minimality (Rizzi, 1990, 2001), Inclusiveness Condition (Chomsky, 1995), Precedence Resolution Principle (Epstein et al., 1998), Scope Economy (Fox, 2000), Phase Impenetrability Condition (Chomsky, 2004), Full Interpretation (Freidin & Lasnik, 2011; Lohndal & Uriagereka, 2016), Global Economy Condition (Sternefeld, 1997), Feature Economy (van Gelderen, 2011), Accord Maximization Principle (Schütze, 1997), Input Generalisation (Holmberg & Roberts, 2014), Maximise Minimal Means (Biberauer, 2019a), Resource Restriction (Chomsky et al., 2019), and Equal Embedding (Murphy & Shim, 2020) (for further discussion, see Frampton & Gutmann, 1999; Fukui, 1996; Titov, 2020).

Although economy principles have long figured in models of phonology, morphology and the lexicon (e.g., the Elsewhere condition, underspecification), it is only relatively recently that theories of syntax have embraced economy not just as a heuristic guiding research, but more concretely as a constitutive principle of language design (Leivada & Murphy, 2021; Murphy, 2012, 2020a; Reuland, 2011; Sundaresan, 2020). These have been framed within a linguistic context, often invoking domain-specific notions (Wilder et al., 1997), despite a core part of the intended project of modern theoretical linguistics being to embed linguistic theory within principles general to cognition. Motivating language-specific computational generalizations by direct reference to the FEP may broaden the explanatory scope for the existence and prevalence of particular syntactic phenomena. Since linguists lack a general theory of computational efficiency for language (e.g., Gallego & Chomsky, 2020: “To be sure, we do not have a general theory of computational efficiency”), additional support with respect to grounding these concerns within a well-motivated framework for general organic behaviour will likely prove productive. Linguists have long speculated about how to model simplicity, but surprisingly few have done so rigorously. For example, Pearl (2022) speculates that “perhaps the knowledge of the tight relationship between syntax and meaning is some kind of simplicity bias that assumes maximal similarity between representational systems, unless shown otherwise”. There are many promising paths to take here: minimising energy expenditure during language processing (Rahman & Kaykobad, 2005), shortening description length (Schmidhuber, 2015), reducing Kolmogorov complexity (Ming & Vitányi, 2008; Wallace & Dowe, 1999), and the degree of requisite belief updating. Relatedly, we might consult the principles of minimum redundancy and maximum efficiency in perception (Barlow, 1961, 1974, 2001; Wipf & Rao, 2007). We will provide a concrete exploration of one of these notions below (Kolmogorov complexity) in order to defend what we will term Turing–Chomsky Compression, with the immediate disclaimer that we acknowledge that other measures may in fact ultimately be more useful and well-motivated with respect to building FEP-syntax sympathies, and that we consider our survey to be purely preliminary.

A core fact about many natural language expressions is that they involve arrangements of nested constituents that enter into relations and dependencies of various kinds. How are syntactic operations compressed into determinate, unambiguous instructions to conceptual systems, and are there any general laws of organic design that can be inferred from the FEP that appear to constrain this process (and which successfully predict which objects cannot be constructed)?

Consider how under the No Tampering Condition the merging of two syntactic objects, X and Y, leaves X and Y unchanged. The set {X, Y} created by MERGE (Chomsky et al., 2019) cannot be broken and no new features can be added.Footnote 4 The original structure in (2a) can be modified by the merging of a new element, λ, to form (2b), adhering to the No Tampering Condition, while (2c) violates this condition since the original structure (2a) is modified (Lohndal & Uriagereka, 2016); hence why adjuncts that merge ‘downstairs’ do not alter the structure of the object they adjoin to (adjuncts are not labeled; Bošković, 2015) (subscripts denote syntactic heads/labels, standard script denotes lexical items, where (2a) could represent a structure like ‘the red boat’).Footnote 5

-

(2)

-

a.

[α [β [γ [δ ε]]]]

-

b.

[α λ [α [β [γ [δ ε]]]]]

-

c.

[α [β [γ [δ [ε ε λ]]]]]

-

a.

Further, it is more economical to expand a structure, as in (2b), than to backtrack and modify a structure that has already been built, as in (2c) (Lasnik & Lohndal, 2013). How can we more formally demonstrate these claims? We turn here to Kolmogorov complexity.

3.2 Compression

Kolmogorov complexity is a measure of the length of the shortest program that can reproduce a given pattern (Kolmogorov, 1965; Li & Vitányi, 2019). While measures of minimum description length and Kolmogorov complexity have been typically applied to linear, ‘externalized’ sequences, they can also be fruitfully applied to grammatical relations, permitting measurement of the inherent information content of an individual object or operation (Biberauer, 2019b, Grünwald 1996, 2007, Newmeyer & Preston, 2014). Sequence complexity is identified with richness of content (Mitchell, 2009), such that any given signal or sequence is regarded as complex if it is not possible to provide a compressed representation of it. While complexity differences across languages can be measured, for example, as a function of featural specifications on functional elements (Longobardi, 2017), here we are interested in the complexity of I-language derivations. Previous efforts have already connected the theory of program size to psychology by implementing a concrete language of thought with Turing-computable Kolmogorov complexity (Romano et al., 2013), which satisfied the requirements of (i) being simple enough so that the complexity of any binary sequence can be measured, and (ii) utilizing cognitively plausible operations like printing and repeating. In contrast, we aim to relate similar measures to syntactic economy criteria.

The concept of syntactic complexity remains underexplored in the literature relative to other measures of linguistic complexity (Shieber, 1985; Trudgill, 2011). While syntacticians have proposed economy principles, these all effectively boil down to efficient tree-search—without formalizing these intuitions or attempting to broach this topic with neighboring fields that might be able to provide highly valuable analytic tools. It is our contention that mathematical tools emerging from concerns of the FEP can help couch these verbal generalizations into a concrete model.

Syntactic complexity can be operationalized across a number of dimensions, such as online processing/parsing complexity (Hawkins, 2004), tree-search and node counts (Szmrecsányi, 2004), number of MERGE applications (Samo, 2021), cyclic/derivational complexity (Trotzke & Zwart, 2014), internal representational complexity as opposed to derivational size (van Gelderen, 2011, 2021), entropy reduction (Hale, 2016), or stages of second-language development (Walkden & Breitbarth, 2019). Syntactic complexity can be framed as grammar-based (derivational), or user-based (parsing) (Newmeyer & Preston, 2014); here, will be elaborating on the former type. Crucially, sentence length does not always scale with syntactic complexity (Szmrecsányi, 2004), and instead an examination of the underlying operations is required. Although syntactic complexity is often thought of in derivational terms, removed and independent from surface realization, Kolmogorov complexity is relatively theory-neutral and can be applied indiscriminately to mental objects with any number of internal sequences, patterns, irregularities, and surface redundancies (Miestamo et al., 2008).

Why do we choose to focus here on such a generic, theory-neutral measure as opposed to a more domain-specific one? We stress that Kolmogorov complexity (and the related notion of minimal message length) relates directly to frameworks emerging from the FEP (Friston, 2019; Hinton & Zemel, 1994; Korbak, 2021), with the prediction for natural language syntax of reducing the complexity of hierarchical syntactic structures that are interpreted at conceptual interfaces being sympathetic to a corollary of the FEP that every computation comes with a concrete energetic cost (Jarzynski, 1997; Sengupta & Stemmler, 2014). As shown above (Eq. 2), variational free energy can be formulated as a trade-off between accuracy and complexity, whereby minimising complexity minimises variational free energy. Considering the topic of universal computation, as in Solomonoff induction (Solomonoff, 1964) (which is directly grounded in the minimization of Kolmogorov complexity), many formulations of variational free energy minimization explicitly invoke algorithmic complexity and the type of mathematical formulations underlying universal computation. Relating this more directly to our present concerns, the theme of message length reduction has been fruitfully applied to analyses of grammar acquisition in children. Rasin et al. (2021) show that minimum description length (closely related to Bayesian grammar induction) can provide the child with a criterion for comparing hypotheses about grammatical structures that may match basic intuitions across a number of cases. The restrictiveness generated by these complexity measures supplements the more general simplicity criterion of theoretical syntax; much as how the ‘subset principle’ (restrictiveness) supplemented the original evaluation metric (simplicity) (Berwick, 1985). Lambert et al. (2021) demonstrated that the computational simplicity of learning mechanisms appears to have a major impact on the types of patterns found in natural language, including for syntactic trees, and so it seems to us well motivated to turn to the issue of the underlying processes that guide the generation of these structures.

Other recent work has successfully used minimum description length in a domain much closer to our own concerns. Focusing on semantic properties of quantifiers (e.g., ‘some’, ‘most’) and noting that natural language quantifiers adhere to the properties of monotonicity, quantity and conservativity (Barwise & Cooper, 1981), van de Pol et al. (2021) generated a large collection of over 24,000 logically possible quantifiers and measured their complexity and whether they adhered to the three universal properties. They found that quantifiers that satisfied universal semantic properties were less complex and also exhibited a shorter minimal description length compared to quantifiers that did not satisfy the universals, pointing in intriguing directions towards efficiency biases in natural language semantics that appear to restrict the development of lexical meaning. Quantifiers that adhere to semantic universals are simpler than logically possible competitors that do not.

To briefly formalize our discussion of compression and complexity, given a Turing machine M, a program p and a string x, we can say that the Kolmogorov complexity of x relative to M is defined by the length of x. Formally, this can be expressed as follows (Eq. 4), where |p| denotes the length of p and M is any given Turing machine:

This represents the length of the shortest program that prints the string x and then halts. Yet, as implied by Gödel’s incompleteness theorem or Turing’s halting theorem, we cannot compute the Kolmogorov complexity of an arbitrary string, given that it is impossible to test all possible algorithms smaller than the size of the string to be compressed, and given that we cannot know that the Turing machine will halt (Chaitin, 1995). We therefore used an estimate of approximate Kolmogorov complexity (given its fundamental non-computability) based on the Lempel–Ziv compression algorithm, which we applied to the labeling/search algorithm needed to derive each syntactic node in (2) and their subordinated terminal elements, investigating how ‘diverse’ the patterns are that are present in any given representation of a syntactic tree-search. In the service of replicability and for completeness, we used a current generative grammar labeling/search algorithm that checks tree-structure heads and terminal elements (Chomsky, 2013; Ke, 2019; Murphy & Shim, 2020) (see also f.n. 11). In this respect, Kolmogorov complexity is a more fine-grained measure of complexity than previous measures in theoretical syntax (e.g., node count across a c-commanded probe-goal path). While we acknowledge here and elsewhere (below) that our choice of algorithmic complexity is motivated by a similarity in spirit and mathematical heritage, we feel that this approach—given the relative novelty of connecting the FEP with theoretical linguistics—is suitably noncommittal with respect to which algorithmic complexity measure is ultimately going to show the most direct sympathy with syntactic derivational architecture, and below we will discuss some other possible measures.

Searching the structure from top to bottom, identifying each branching node and its elements (e.g., inputting [α λ α β γ δ ε]), we used a Lempel–Ziv implementation (Faul, 2021) of the classical Kolmogorov complexity algorithm (Kaspar & Schuster, 1987; Lempel & Ziv, 1976) to measure the number of unique sub-patterns when scanning the string of compiled nodes.Footnote 6 This Lempel–Ziv algorithm computes a Kolmogorov complexity estimate derived from a limited programming language that permits only copy and insertion in strings (Kaspar & Schuster, 1987).Footnote 7 The algorithm scans an n-digit sequence, S = s1 · s2 · …sn, from left to right, and adds a new element to its memory each time it encounters a substring of consecutive digits not previously encountered. Our measure of Kolmogorov complexity takes as input a digital string and outputs a normalised measure of complexity (Urwin et al., 2017).

To connect these ideas with the FEP, we note that minimising free energy corresponds to minimising complexity, while maximising the accuracy afforded by internal representationsFootnote 8\(r\in R\), of hidden states \(s\in S\), given outcomes \(o\in O\) (Eq. 2). In short, belief updating or making sense of any data implies the minimisation of complexity:

When choosing how to sample data, the expected complexity becomes the intrinsic value or expected information gain (in expected free energy):

This is just the mutual information between (unobservable) hidden states generating (observable) outcomes, under a particular choice or policy.

Importantly, variational free energy and formulations of artificial general intelligence pertaining to universal computation both share a mathematical legacy. This is rooted in the relationship between the complexity term in variational free energy and algorithmic complexity (Hinton & Zemel, 1994; Wallace & Dowe, 1999), described in terms of information length and total variation distance. As such, relating syntactic operations to algorithmic compression maximisation feeds directly into assumptions from the FEP (Schmidhuber, 2010).

Lempel–Ziv complexity is a measure of algorithmic complexity which, under the law of large numbers, plays the same role as the complexity part of log model evidence or marginal likelihood. Interestingly, minimising algorithmic complexity underwrites universal computation, speaking to a deep link between compression, efficiency and optimality in message passing and information processing. This is why we suggest here that although we are using Lempel–Ziv complexity, we suspect that many other, potentially more suitable measures of compressibility might be used in future to relate the FEP to syntax.

With this background, we now return to the structures in (2b) (licensed) and (2c) (unlicensed). Inputting the labeled nodes (subscript elements) and terminal elements (regular script) across both structures into the Lempel–Ziv compression algorithm (Faul, 2021) left-to-right, the licensed representation in (2b) exhibits a normalized Kolmogorov complexity of 1.88, while the unlicensed representation in (2c) exhibits a complexity of 1.99. Crucially, while both (2b) and (2c) exhibit the same node-count complexity and depth (i.e., bracket count), they can be operationally distinguished by their Kolmogorov complexity, in compliance with what the FEP would demand. The increased compression rate for (2b) indicates lower information content, hence lower Kolmogorov complexity (Juola, 2008), and so the representation adheres to the priority to minimise computational load.

3.3 Relativised minimality

A further observation pertaining to economy in the literature concerns Relativised Minimality (Rizzi, 1990, 1991): Given a configuration, [X … Z … Y], “a local relation cannot connect X and Y if Z intervenes, and Z fully matches the specification of X and Y in terms of the relevant features” (Starke, 2001). In other words, if X and Y attempt to establish a syntactic relation, but some element, Z, can provide a superset of X’s particular features (i.e., X’s features plus additional features), this blocks such a relation. In (3a), which game provides a superset of the features hosted by how, resulting in unacceptability. The equivalent does not obtain in (3b), and so a relationship between both copies of which game can be established (strikethroughs denote originally merged positions).

-

(3)

-

a.

*[[How[+Q]] [C[+Q] [do you wonder [[which game[+Q, +N]] [C[+Q] [PRO to play

how[+Q]]]]]]]. -

b.

[[Which game[+Q,+N]] [C[+Q] [do you wonder [[how+Q] [C+Q [PRO to play

which game[+Q,+N]]]]]]]

-

a.

Relativised Minimality emerges directly from minimal search (Aycock, 2020): Consider how when searching for matching features in (3b) the search procedure would skip how but find the original copy of which game.

We note that the notion of movement ‘distance’ here is relativised to the specific units across the path. In order to reach a more fundamental analysis we may need some means of understanding what distance actually reduces to. These avenues of current research may lend themselves quite readily to explorations directed by notions of complexity and compression. We speculate here that this may relate to the compressibility of movement paths, and more systematic investigations will be needed to address this.

3.4 Resource restriction

The principle of Resource Restriction (or ‘Restrict Computational Resources’, RCR; Chomsky, 2019; Chomsky et al., 2019) states that when the combinatorial operation MERGE maps workspace n to workspace n + 1, the number of computationally accessible elements (syntactic objects) can only increase by one (Huybregts, 2019; Komachi et al., 2019). This can account for a peculiar property of natural language recursion that separates it from other forms of recursion (e.g., propositional calculus, proof theory): natural language MERGE involves a recursive mapping of workspaces that removes previously manipulated objects (Chomsky, 2021c). Hence, Resource Restriction renders natural language derivations strictly Markovian: The present stage is independent of what was generated earlier, unlike standard recursion. MERGE itself exhibits the formal characteristics of a finite-state rewrite rule (Trotzke & Zwart, 2014, p. 145), exhibiting minimal computational complexity, with MERGE being distinct from the ultimate grammatical constructions later derived from its cyclic application. Even though this is in line with traditional assumptions from generative grammar that there is something unique to human syntax (which we concur with), we wish to stress that the formalization of this property of recursion does not necessitate complete isolation from domain-general approaches in the cognitive sciences; i.e., there are means to ground and explain this property through models emerging from the FEP.

A topic of recent discussion concerns how we can define the ‘size’ of a workspace. Fong et al. (2019) suggest that the size of a syntactic workspace should be considered to be the number of accessible terms plus the number of syntactic objects. This proposal to constrain syntactic combinatorics can account for why the applications of certain types of MERGE are ungrammatical (Fong et al., 2019), providing a genuine explanation for language design. We again return to the theme of every computational procedure delivering a concrete cost, as under the FEP.

Principles such as Resource Restriction and other economy considerations are essential once we consider that a workspace with two elements with a simple MERGE operation can generate excessive levels of combinatoriality. Within 8 MERGE steps from two elements, around 8 million distinct sets can be formed (Fong & Ginsburg, 2018). Older definitions of basic syntactic computations did not “worry about the fact that it’s an organic creature carrying out the activities”, as Chomsky (2020) notes. Many aspects of these theories exhibited, to borrow a phrase from Quine (1995, p. 5), an “excess of notation over subject matter”. Even many current models of syntax have ignored questions of cognitive, implementational plausibility (e.g., Chomsky, 2013; Citko & Gračanin-Yuksek, 2021; Collins, 2017, Epstein et al., 2021). Computational tractability (van Rooj & Baggio, 2021) is a powerful constraint in this respect (e.g., implementable in polynomial time), and given that minimizing the model complexity term (in formulations of free energy) entails reducing computational cost, this efficiency constraint is also implicitly present in the FEP.

3.5 Interim conclusion

We have considered how the FEP can in principle provide a novel explanation for the prevalence of efficiency-encoded syntactic structures. To further stress this point, consider Dasgupta and Gershman’s (2021) assessment that mental arithmetic, mental imagery, planning, and probabilistic inference all share a common resource: memory that enables efficient computation. Other domains exhibiting computational efficiency include concept learning (Feldman, 2003), causal reasoning (Lombrozo, 2016) and sensorimotor learning (Genewein & Braun, 2014). As Piantadosi (2021) reviews, human learners prefer to induce hypotheses that have a shorter description length in logic (Goodman et al., 2008), with simplicity preferences possibly being “a governing principle of cognitive systems” (Piantadosi, 2021, p. 15; see Chater & Vitányi, 2003). Although our arguments have been almost exclusively conceptual, we believe that more extensive computational modelling should seek to compare the dynamics of MERGE-based workspaces via compressibility constraints.

We will now turn to the most commonly explored syntactic processes claimed to arise from economy considerations: syntactic movement and minimal search. Further examples will be used to motivate what we term the principle of Turing–Chomsky Compression, through which stages of syntactic derivations are evaluated based on the algorithmic compressibility of some feature of the computation, such as the movement path of an object, or the procedure of nodal labeling/search—which can be unified based on how they manipulate the syntactic workspace. Turing–Chomsky Compression provides a concrete architectural principle for language design, which is crucially sympathetic to a number of compressibility criteria beyond the types entertained here; we therefore conclude with a number of promising directions to refine this new approach to syntax.

4 Minimising free-energy, maximising interpretability

As has long been recognised, the syntactic categories of words are not tagged acoustically, and yet sentential meaning is inferred from syntactic categorization (Adger, 2019). Sentences are often ambiguous between distinct syntactic structures. For instance, below we can interpret Jim Carrey as starring in the movie (4a), or sitting next to us (4b).

-

(4)

-

a.

[tp [npWe] [vpwatched [npa [nmovie [ppwith [npJim Carrey]]]]]].

-

b.

[tp [npWe] [vp[vpwatched [npa movie]] [ppwith [npJim Carrey]]]].

-

a.

Linear distance (i.e., the number of intervening elements between dependents in a sentence) can be contrasted with structural distance (the number of hierarchical nodes intervening), and only the latter is a significant predictor of reading times in an eye-tracking corpus (Baumann, 2014). Violations of hierarchical sentence rules results in slower reading times (Kush et al., 2015), and expectations of word category based on hierarchical grammars also predicts reading times (Boston et al., 2011).

The apparent use of hierarchical structure to limit interpretation adheres to a core tenet of the FEP, whereby interpretive processes that yield the lowest possible amount of complexity (and thereby computational cost) can mostly (perhaps entirely; Hinzen, 2006) be derived directly from what the syntactic component produces. This notion is closely related to the imperatives for structure learning (Tervo et al., 2016)—or Bayesian model reduction—in optimising the structural (syntax) of generative models based purely on complexity (pertaining to model parameters); see Friston et al. (2017b) for an example simulating active inference and insight in rule learning.

While sensorimotor systems naturally impose linear order, linguistic expressions are complex n-dimensional objects with hierarchical relations (Gärtner & Sauerland, 2007; Grohmann, 2007; Kosta et al., 2014; Murphy & Benítez-Burraco, 2018; Murphy, 2020b, 2024). The following sections provide concrete demonstrations of these design principles in action in order to motivate an architectural framework for language emerging from the FEP. We also provide suggestions for how to explore further sympathies between the FEP and minimalist syntax.

4.1 Structural distance

Consider the sentence in (5).

-

(5)

Routinely, poems that rhyme evaporate.

In (5), ‘routinely’ exclusively modifies ‘evaporate’. The matrix predicate ‘evaporate’ is closer in terms of structural distance to ‘routinely’ than to ‘rhyme’, since the relative clause embeds ‘rhyme’ more deeply (minimal search is partly “defined by least embedding”; Chomsky, 2004, p. 109).Footnote 9 Language computes over structural distance, not linear distance (Berwick et al., 2011, 2013; Friederici et al., 2017; Martin et al., 2020).

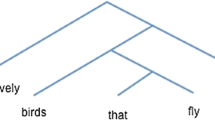

This can also be shown with simple interrogative structures. Consider the sentence in (6a) and its syntactic representation in (6b), where the verb in the relative clause (‘rhyme’) is more deeply embedded than ‘evaporate’.

-

(6)

-

a.

Do poems that rhyme evaporate?

-

b.

[CP[C Do][TP[DP[DP poems][CP[C that][TP rhyme]]][T’[T][V evaporate]]]].

-

a.

We can compute the complexity of both nodal search and Kolmogorov complexity, contrasting the grammatical association between ‘Do’ and ‘evaporate’, and the ungrammatical association between ‘Do’ and ‘rhyme’. When the [+ q] feature on C searches for a goal, it needs to search down three node steps (from CP to V) to get to the grammatical option, but needs to search down four node steps (from CP to embedded TP) to construct the ungrammatical option. Since we are concerned with analyzing a small but representative number of syntactic derivational processes, this analysis differs from the approach to the structures in (2), which do not involve any labeling procedure. This time, we enumerated the search steps across nodes, replacing specific nodal categories with symbols interpretable to the Lempel–Ziv compression algorithm (Faul, 2021), since this is what the syntactic search algorithm is claimed to monitor. The Lempel–Ziv complexity of the sequence of steps enumerated from the C-V labeling/search algorithm is 1.72. For the embedded C-TP search, it increases to 2.01.

While one might invoke purely semantic constraints on polar interrogatives and other forms of question-formation (Bouchard, 2021) to derive the kinds of acceptability contrasts we have discussed, we see no way to ground these observations in concerns of computability and complexity, and no way to quantify or formalize these notions.

4.2 Ignoring other people: question formation via economy

As recent literature has explored, whenever there is a conflict between principles of computational efficiency and principles of communicative clarity, the former seems to be prioritized (Asoulin, 2016; Murphy, 2020a). For instance, consider (7).

-

(7)

You persuaded Saul to sell his car.

The individual (‘Saul’) and the object (‘car’) can be questioned, but questioning the more deeply embedded object forces the speaker to produce a more complex circumlocution (‘[]’ denotes the originally merged position of the wh-expression).

-

(8)

-

a.

*[What] did you persuade who to sell []?

-

b.

[Who] did you persuade [] to sell what?

-

a.

The structures in (8) involve the same words and interpretations, yet the more computationally costly process of searching for—and then moving—the more deeply embedded element cannot be licensed, despite the potential benefits of communicative flexibility. Interestingly, one cannot feasibly posit parsing-related factors to derive some independent complexity measure to explain this contrast (e.g., Newmeyer, 2007), given the same number of words and same semantic interpretations (i.e., give me the Agent and Object of the event). Experimental work has supported the prevalence of these grammaticality intuitions (Clifton et al., 2006).Footnote 10

The syntactic structures for both (8a) and (8b) are represented in (9) (where < DP > represents the movement path). With respect to tree-search depth, (9a) involves searching down 11 nodes, while (9b) involves searching down 9 nodes. To expand our survey of syntactic processes beyond labeling/search paths, we focused here on the postulated path of syntactic object movement across the structure. The movement path was represented with each site being attributed a symbol fed to the compression algorithm, in keeping with a more general approach to annotating movement paths (Adger, 2003). Enumerating the movement path from the initially merged root, to intermediate landing sites, to the terminal landing site in Spec-CP, the Lempel–Ziv complexity of movement for (9a) is 2.15. For (9b), path complexity is 1.5.

-

(9)

-

a.

[CP [DP what] [C’ [C did] [TP [DP you] [T’ [T [pres] [VP [<DP>] [V’ [V persuade] [CP [C’ [C Ø] [TP [<DP>] [T’ [T] [VP [DP who] [PP [P to] [VP [V’ [V sell] [<DP>]]]]]]]]]]]]]]]].

-

b.

[CP [DP who] [C’ [C did] [TP [DP you] [T’ [T [pres] [VP [<DP>] [V’ [V persuade] [CP [C’ [C Ø] [TP [<DP>] [T’ [T ] [VP [<DP>] [PP [P to] [VP [V’ [V sell] [DP what]]]]]]]]]]]]]]]]

-

a.

A further empirical reason to assume that this economy condition is a general property of language comes from the following data of Bulgarian multiple wh-fronting (Bošković & Messick, 2017; see also Dayal, 2017). The wh-phrase highest prior to movement (the subject in (10) and the indirect object in (11)) needs to be first in the linear order of the sentence, such that the structurally highest wh-phrase moves first, and the second wh-phrase either right-adjoins to the first wh-phrase, or moves to a structurally lower Spec-CP position.

-

(10)

-

a.

*Koj e vidjal kogo?

who is seen whom

-

b.

Koj kogo e vidjal?

“Who saw whom?”

-

a.

-

(11)

-

a.

Kogo kakvo e pital Ivan?

whom what is asked Ivan

“Whom did Ivan ask what?”

-

b.

*Kakvo kogo e pital Ivan?

-

a.

Thus far, this suffices to show that the wh-element easiest to search for is selected for movement. However, does syntactic economy simply rule out all but one option? Crucially, Bošković and Messick (2017) show that when multiple options of equal tree-geometric complexity are available, they are both licensed as grammatical. Consider constructions with three wh-phrases. We can assume that whichever wh-element moves to the structurally highest position (Spec-CP) satisfies the featural requirement of interrogative C to have a filled Spec-CP position. After this structurally highest element moves to Spec-CP, we can further assume that the remaining wh-elements then move to Spec-CP to satisfy their own featural ‘Focus’-based requirements. At this point, whichever order the remaining wh-elements move in, the requirements are satisfied through movements of identical length (i.e., both cross the same number of nodes, and hence generate the same sequence of derivational steps, and therefore the same Lempel–Ziv complexity). As such, this predicts that the remaining two wh-elements can move in any order after the initial wh-movement of the subject. This prediction is borne out: the subject (‘koj’) is moved first in both constructions below, but then either of the remaining wh-elements can move in any order.

-

(12)

-

a.

Koj kogo kakvo e pital?

who whom what is asked

“Who asked whom what?”

-

b.

Koj kakvo kogo e pital?

-

a.

4.3 Labeling

As a more stringent test, can Lempel–Ziv complexity shed light on cases in which the ungrammatical derivation has less structural tree-geometric complexity than the grammatical derivation? Consider the following case from Murphy and Shim (2020, p. 204). Putting ancillary technical details aside (see Mizuguchi, 2019), (13a) is claimed to be ungrammatical because one final necessary operation on the syntactic workspace has not been carried out; namely, merging ‘the students’ to the structure marked by γ. For expository purposes, we provide a schematic representation to demonstrate the relevant movement path (the path of ‘the student’ is marked by t).

-

(13)

-

a.

*[γ Seems to be likely [α the student [to [t understand the theory]]]].

-

b.

[δ The student [γ seems to be likely [α t [to [t understand the theory]]]]]

-

a.

The explanations from within syntactic theory as to why (13b) is grammatical concern successful feature valuation and the minimal search of copies via the labeling algorithm. However, this process might also be linked to more efficient compression rates of syntactic labels at the interpretive systems. We can enumerate each labeled node left-to-right marking the phrase boundaries separating each embedded object that pertain to the grammaticality contrast (e.g., V-D-P-V). Computing the Lempel–Ziv complexity of each successive phrase label in these structures, (13a) exhibits a complexity of 1.86, while (13b) exhibits a complexity of 1.66, despite (13b) being a more complex structure from the perspectives of node count and element count. As such, both minimal search of syntactic labels and algorithmic compression rates may be playing separate but interacting roles in determining how the interpretive systems access objects generated by syntax.

4.4 Turing–Chomsky Compression

The brief number of cases we have derived syntactic economy principles from, using a Lempel–Ziv estimate of Kolmogorov complexity, can be used to motivate the following language design principle that directly relates the FEP to syntactic structure building:

Turing–Chomsky Compression An operation (M) on an accessible object (O1) in a syntactic workspace (Wp) minimizes variational free energy if structures from the resulting workspace (Wq) are compressed to a lower Kolmogorov complexity than if M had accessed O2 in Wp.

This is principally named after specifications over what (Chomsky) is compressed and how (Turing) such compression can be achieved (Chomsky, 2021c; Turing, 1950). The interaction between Turing–Chomsky Compression (TCC) and more domain-specific subcategorization requirements emerging from lexico-semantic features, and formal syntactic features, is a promising topic for future research. This will require a more accelerated survey of cognitive models of semantics that emerge from the FEP, including a more extensive and formal assessment of how to generate specific active components (under active inference) for lexico-semantic processing, with this stage coming into greater relevance after the initial generation of an abstract hierarchical structure that feeds, for example, event semantics. For now, we have shown across a small but representative number of syntactic processes that derivations minimising algorithmic complexity are licensed over those that result in structures and derivational paths that are less compressible.

We stress here that TCC is a concrete architectural proposal for language design, and is the type of principled settlement that could be established between mathematical models of compressibility that share a lineage with the FEP on the one hand, and models of minimalist syntax on the other. With this in mind, we now discuss some prospects for future research that could address these issues more systematically.

5 Future work

Language and thought, in anything remotely like the human sense, might indeed turn out to be a brief and rare spark in the universe, one that we may soon extinguish. We are seeking to understand what may be a true marvel of the cosmos.

Chomsky (2021c, p. 4)

We have arrived at a number of suggestive explanations for how language implements the construction of hierarchical syntactic objects: to minimise the computational burden of reading syntactic instructions at conceptual systems; to minimise uncertainty about the causes of sensory data; and to adhere to a least effort natural law (i.e., variational principle of least action) when composing sets of linguistic features for interpretation, planning and prediction. We have shown that measuring a Kolmogorov complexity estimate of syntactic representations and movement paths can align with acceptability judgments. This was used to motivate a possible principle of language design that could emerge from this research direction, Turing–Chomsky Compression (TCC). Our use of Lempel–Ziv complexity presents a more explicit measure than previous accounts. For instance, consider Sternefeld’s (1997) Global Economy Condition, which states that, given two derivations of a syntactic structure (D1, D2), D1 is preferred if D1 fares better than D2 with respect to some metrical measure M (namely, number of derivational steps). This basic ‘step counting’ measure (as with tree-search depth) seems to be in line with grammaticality predictions emerging from the more general complexity measure provided by Lempel–Ziv complexity. Yet, algorithmic complexity also benefits from being applicable across a range of other domains in syntax where nodal count does not differ between competing structures, and is also related to formulations of variational free energy minimization. Ultimately, this has the advantage of generating quantitative predictions for syntactic computation based on general principles that apply more broadly.

Following neighbouring research in the active inference framework (Da Costa et al., 2021), one could feasibly view our research programme as comparing the information length of belief updating between distinct syntactic derivations and theories. We view our proposals as being, in principle, concordant with the view that neural representations in organic agents evolve by approximating steepest descent in information space towards the point of optimal inference (Da Costa et al., 2021). Future work could explore the utility of minimum description length (van de Pol et al., 2021) and Gell-Mann/Lloyd ‘effective complexity’. In contrast to Kolmogorov complexity, which measures the description length of a whole object, effective complexity measures the description length of regularities (structured patterns) within an object (Gell-Mann & Lloyd, 1996), which may speak to properties of cyclic, phasal computation in natural language.

Recent work has provided evidence for a mental compression algorithm in humans (termed the Language of Thought chunking algorithm) responsible for parsing very basic, binary sequences, providing evidence that human sequence coding involves a form of internal compression using language-like nested structures (Planton et al., 2021). Dehaene et al. (2022) extend this project to auditory sequences and geometrical shapes. We have effectively extended these ideas further into the domain of natural language syntax, suggesting some common capacity for symbolic recursion across cognitive systems being constrained by compressibility.

Some linguists might object to our complexity measure in the following way: Why should syntax be organized so as to produce structures that minimise Kolmogorov complexity, and why should the semantic component of language aim to read off structures that are of a corresponding level of complexity? We note here that the core ‘phase’/non-phase pattern of syntactic derivations (e.g., {C {T v {V D/n {N}}}}; Richards, 2011, Uriagereka, 2012) optimizes compression rate (effectively, 010101), and since phase construction constitutes the major determining period when syntactic workspaces are accessed by the conceptual systems, we see our proposal as aligning closely with existing—if only implicit—assumptions.

Plainly, there are many issues with the framework we have outlined here that need to be further unpacked and clarified. Our proposals concerning compression of structures accessed from the syntactic workspace via TCC have been discussed in the context of a cursory overview of the mathematical lineage shared between formulations pertaining to the FEP and theories of universal computation. This suited our current expository purposes, with our proposals being buttressed by conceptual overviews of the FEP and syntactic economy, but future work should more carefully align models emerging from the FEP with TCC. Although we made our assumptions about syntactic complexity based on whether or not our measure can be formally grounded within the FEP, we note that we have effectively equated complexity with compressibility. As such, we acknowledge that there may be a number of other fruitful directions to measure complexity in ways that are sympathetic to the FEP (e.g., the “complexity equals change” framework; Aksentijevic & Gibson, 2012).

Lastly, we acknowledge that our choice of complexity metric (Lempel–Ziv complexity) could well be argued to be sub-optimal, or even inappropriate for our focus on derivational syntax, and we hope to explore different varieties of compression algorithms that share a mathematical lineage with the FEP moving forward. This does not detract from our main conceptual arguments, which have constituted the bulk of our discussion, and nor does it preclude TCC being subject to further modification pending an expansion of compression algorithms tested, but we wish to note here that Lempel–Ziv is an optimally appropriate estimator for Kolmogorov complexity given long sequences produced by an independent and identically distributed (‘iid’) source; when Lempel–Ziv is applied to very short sequences, it becomes more sensitive to the structure of the compression algorithm. Concurrently, our sequences used to compute Lempel–Ziv complexity are produced by a non-iid (Markovian) source, and so would become increasingly redundant as string length increases. Hence, it may be the case that our choice of compression algorithm is non-optimal for both very short and longer sequences, and future work should seek to contrast multiple minimal description and compression algorithms jointly, potentially modifying the architecture of TCC.

6 Conclusions

We have reviewed how the FEP is an expression of the principle of least action, which is additionally a principle implemented in models of theoretical syntax. An intuition from 70 years ago—that the extent to which a model of grammar is simple seems to determine its explanatory power—echoes in the modern framing of syntactic computation as a system of economy: “[T]he motives behind the demand for economy are in many ways the same as those behind the demand that there be a system at all” (Chomsky, 1951; see also Goodman, 1951). Generative linguistics has long appealed to economy considerations (e.g., the evaluation metric in Chomsky & Halle, 1968). Meanwhile, the FEP has produced formal, simulation-supported models of complex cognitive mechanisms such as action, perception, learning, attention and communication, while theories of syntax embracing computational efficiency have led to empirically successful outcomes, explaining grammaticality intuitions (Adger, 2003; Martin et al., 2020; Sprouse, 2011; Sprouse & Almeida, 2017), certain poverty of stimulus issues (Berwick et al., 2011; Crain et al., 2017; Culbertson et al., 2012; Wexler, 2003; Yang et al., 2017) and the pervasive organizational role that hierarchy has in language (Friederici et al., 2017; Grimaldi, 2012; McCarty et al., 2023) and the seemingly unique proclivity humans have to parse sequences into tree-structures.

We find seeds for these ideas in the foundational principles of universal computation, where, as we have noted, free energy is often discussed in terms of minimum description or message lengths (MacKay, 2003; Schmidhuber, 2010). Relatedly, findings from dependency length minimization (DLM) research suggest that, during online parsing, comprehenders seek to minimize the total length of dependencies in a given structure since this reduces working memory load (Gibson et al., 2019).

A core objective of current theories of syntax is to explain why language design is the way it is (Adger, 2019; Narita, 2014), and we have suggested that the FEP can contribute to this goal. The more efficiently a language user can internally construct meaningful, hierarchically organized syntactic structures, the more readily they can use these structures to contribute to the planning and organization of action, reflection and consolidation of experience, exogenous and endogenous monitoring, imagination of possible states, adaptive environmental sampling, and the consideration of personal responsibilities. We used a brief number of examples to demonstrate proof of concept for how compression algorithms, such as a Kolmogorov complexity estimate, can provide principled insight into efficiency concerns alongside more traditional economy criteria such as node count and tree-search depth.

Moving beyond, we note that our measures of Kolmogorov complexity in the operations of natural language syntax are exploiting a highly general, theory-neutral measure of complexity, and that these and other related measures serve to index some underlying, independent process of organic computation, which remains elusive in its formal character and neural basis.

More broadly, what the FEP can offer theoretical linguistics is proof of principle: a foundational grounding and means of additional motivation for investigating language in terms of efficient computation. The FEP is fundamentally a normative model (Allen 2018) which can aid the generation of implementational models and can place constraints on feasibility. Further simulation and modeling work is required to push these ideas further for natural language and its various sub-systems, and we envisage that this type of future work will provide fruitful insights into natural language syntax.

Notes

There are recent debates concerning whether merging new lexical objects is more computationally demanding (since it requires searching the lexicon) than merging lexical objects that are already in the workspace. This may motivate a Move-over-Merge bias (reversing the Merge-over-Move assumption from the early minimalism of the 1990s), however we leave this issue to one side.

There is increasing evidence that only elements in the workspace that have been labeled can be subject to movement (Bošković 2021).