Abstract

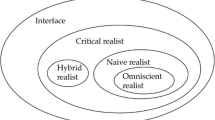

The Interface Theory of Perception, as stated by D. Hoffman, says that perceptual experiences do not approximate properties of an “objective” world; instead, they have evolved to provide a simplified, species-specific user interface to the world. Conscious Realism states that the objective world consists of “conscious agents” and their experiences. Under these two theses, consciousness creates all objects and properties of the physical world: the problem of explaining this process reverses the mind-body problem. In support of the interface theory, I propose that our perceptions have evolved not to report the truth but to guide adaptive behaviors. Using evolutionary game theory, I state a theorem asserting that perceptual strategies that see the truth will, under natural selection, be driven to extinction by perceptual strategies of equal complexity that are tuned instead to fitness. I then give a minimal mathematical definition of the essential elements of a “conscious agent.” Under the conscious realism thesis, this leads to a non-dualistic, dynamical theory of conscious process in which both observer and observed have the same mathematical structure. The dynamics raises the possibility that combinations of conscious agents emerge, in whose experiences those of the component agents are entangled. In support of conscious realism, I discuss two more theorems showing that a conscious agent can consistently see geometric and probabilistic structures of space that are not necessarily in the world per se but are properties of the conscious agent itself. The world simply has to be amenable to such a construction on the part of the agent, and different agents may construct different (even incompatible) structures as seeming to belong to the world. This again supports the idea that any true structure of the world is likely quite different from what we see.
I conclude by observing that these theorems suggest the need for a new theory that resolves the reverse mind-body problem; conscious agent theory is a good candidate.

Notes

If the space X is discrete, this just means that each pair (w, x) is assigned a probability K(w, x) between 0 and 1 and that \(\sum _{x\in X}K(w,x)=1\), for all \(w\in W\). The ideal strategy is then just a “Dirac” kernel: \(K(w,\;{\mathrm {d}}x)=\delta _{p(w)}({\mathrm {d}}x)\), for some function \(p: W\rightarrow X\); the right-hand side is the Dirac point measure assigning the value 1 to any measurable set containing p(w) and zero otherwise. We will generalize this further later in this article, when we allow the current perceptual strategy to depend also on the previous percept.
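For finite spaces, the two conditions in this note (every entry in [0, 1], every row summing to 1) and the Dirac construction can be checked directly. The following is a minimal Python sketch, not code from the article; the toy spaces W and X, the map p, and the helper names `is_markov_kernel` and `dirac_kernel` are hypothetical. Exact fractions are used so that the row-sum test is not disturbed by floating-point rounding.

```python
from fractions import Fraction

def is_markov_kernel(K, W, X):
    """Check that every K(w, x) lies in [0, 1] and each row sums to 1 over X."""
    for w in W:
        row = [K[w].get(x, Fraction(0)) for x in X]
        if any(v < 0 or v > 1 for v in row) or sum(row) != 1:
            return False
    return True

def dirac_kernel(p, W, X):
    """Kernel putting all mass on p(w): K(w, {x}) = 1 if x == p(w), else 0."""
    return {w: {x: Fraction(1 if x == p(w) else 0) for x in X} for w in W}

# Hypothetical toy spaces: four world states and two percepts.
W = [0, 1, 2, 3]
X = ["low", "high"]

def p(w):
    """Hypothetical deterministic perceptual map p: W -> X."""
    return "low" if w < 2 else "high"

K_dirac = dirac_kernel(p, W, X)
# A hypothetical noisy kernel: mostly right, sometimes wrong.
K_noisy = {w: {"low": Fraction(3, 4) if w < 2 else Fraction(1, 4),
               "high": Fraction(1, 4) if w < 2 else Fraction(3, 4)} for w in W}

print(is_markov_kernel(K_dirac, W, X), is_markov_kernel(K_noisy, W, X))  # True True
print(K_dirac[2]["low"], K_dirac[2]["high"])  # 0 1
```

The Dirac kernel is the special case in which each row of the kernel is a point mass; any deterministic map p yields one.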

The above can easily be generalized (Prakash et al. 2018) to the situation where the perceptual map is a Markov kernel \(p(w;\; x)\): replace \(1_{p^{-1}(x)}\) by \(p(w;\; x)\) and the sums by sums (or integrals) over the whole of W.
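To make this substitution concrete, the sketch below assumes that the sums being generalized have the generic form \(\sum_{w\in p^{-1}(x)} g(w)\); the actual summand is defined elsewhere in the article, so the weight g, the toy spaces and the helper names here are all hypothetical. With the Dirac kernel of p the generalized sum reproduces the original one exactly, while a noisy kernel spreads each world state's weight over several percepts.

```python
from fractions import Fraction

# Hypothetical world states, percepts and weight g(w), standing in for
# whatever summand is summed over the preimage p^{-1}(x).
W = [0, 1, 2, 3]
X = ["low", "high"]
g = {0: Fraction(1), 1: Fraction(2), 2: Fraction(5), 3: Fraction(7)}

def p_map(w):
    """A hypothetical deterministic perceptual map p: W -> X."""
    return "low" if w < 2 else "high"

def sum_over_preimage(x):
    """Sum of g(w) over w in p^{-1}(x), i.e. weighted by the indicator 1_{p^{-1}(x)}(w)."""
    return sum((g[w] for w in W if p_map(w) == x), Fraction(0))

def sum_with_kernel(P, x):
    """Generalized sum: the indicator is replaced by P(w; x) and w ranges over all of W."""
    return sum((P[w][x] * g[w] for w in W), Fraction(0))

# The Dirac kernel of p_map reproduces the preimage sums exactly...
P_dirac = {w: {x: Fraction(1 if p_map(w) == x else 0) for x in X} for w in W}
# ...while a noisy kernel spreads each world state over both percepts.
P_noisy = {w: {"low": Fraction(3, 4) if w < 2 else Fraction(1, 4),
               "high": Fraction(1, 4) if w < 2 else Fraction(3, 4)} for w in W}

for x in X:
    print(x, sum_over_preimage(x), sum_with_kernel(P_dirac, x), sum_with_kernel(P_noisy, x))
# low 3 3 21/4
# high 12 12 39/4
```

In both cases the totals over all percepts agree (15), since each row of a Markov kernel sums to 1.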

Prior to this definition, given in Fields et al. (2017), a CA was defined in Hoffman and Prakash (2014) more restrictively as what should now be termed a forgetful CA: we take for the perception kernel a Markov kernel \(P:W\rightarrow X\), where the probability that the new perception is x depends on the current world state w: it is denoted \(P(w;\; {\mathrm {d}}x)\). The decision kernel is a Markov kernel, \(D:X\rightarrow G\). Here, given the current perception x, the probability that the next action will be g is \(D(x;\; {\mathrm {d}}g)\). Finally, the action Markov kernel is \(A:G\rightarrow W\). This means that, given the current action state g, the probability that the next world state will be w is \(A(g;\; {\mathrm {d}}w)\).
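In the finite case, the three kernels of a forgetful CA can be written as row-stochastic matrices, and composing them in sequence gives a Markov kernel from W back to W describing one perceive-decide-act cycle as seen from the world. The sketch below illustrates this under hypothetical sizes (|W| = 3, |X| = |G| = 2) and made-up probabilities; `compose` and `is_stochastic` are illustrative helpers, not part of the article.

```python
from fractions import Fraction as F

def compose(K1, K2):
    """Compose finite Markov kernels stored as row-stochastic matrices:
    (K1 K2)[i][k] = sum_j K1[i][j] * K2[j][k]."""
    return [[sum((K1[i][j] * K2[j][k] for j in range(len(K2))), F(0))
             for k in range(len(K2[0]))]
            for i in range(len(K1))]

def is_stochastic(K):
    """Every entry non-negative and every row summing to 1."""
    return all(all(v >= 0 for v in row) and sum(row) == 1 for row in K)

# Hypothetical kernels. Rows index the current state, columns the next one.
P = [[F(1), F(0)],               # P(w; x): perception, W -> X
     [F(1, 2), F(1, 2)],
     [F(0), F(1)]]
D = [[F(9, 10), F(1, 10)],       # D(x; g): decision, X -> G
     [F(1, 10), F(9, 10)]]
A = [[F(1, 2), F(1, 2), F(0)],   # A(g; w): action, G -> W
     [F(0), F(1, 2), F(1, 2)]]

# One full cycle W -> X -> G -> W.
PDA = compose(compose(P, D), A)
print(is_stochastic(PDA))  # True
print(PDA[1])  # [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]
```

Because each factor is stochastic, the composite is too, so the world-side dynamics of a forgetful CA is itself a Markov chain.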

Here, by “conscious agent” we do not restrict our attention to human agents who are self-conscious and aware of their perceptions, decisions and actions, nor even to human agents in any state of consciousness or lack thereof.

In the non-dispersive instance, it suffices, for the sake of consistency in the definition of conscious agent, to put the discrete \(\sigma\)-algebra on the spaces.

The group H acts on S if there is a mapping \(H\times S\ni (h,s)\mapsto h\cdot s\in S\) such that the identity \(e\in H\) acts as \(e\cdot s=s\) for all \(s\in S\), and whenever \(h,k\in H\) and \(s\in S\), \(h\cdot (k\cdot s)=(hk)\cdot s\). In the last equality, hk is a product in the group H, while \(k\cdot s\) and \((hk)\cdot s\) express the action of the group elements k and hk, respectively. The action is transitive if, for every pair of elements of S, there is a group element taking one to the other; it is faithful if no \(h\neq e\) in H satisfies \(h\cdot s=s\) for all \(s\in S\).
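The action axioms, transitivity and faithfulness can be checked by brute force on small finite examples. In this hypothetical sketch, faithfulness is taken in its standard sense (only the identity fixes every element of S). The cyclic group Z_3 rotating {0, 1, 2} passes all three tests; Z_4 acting on {0, 1} through parity is a transitive action that is not faithful, because the element 2 fixes both points. The helper names are illustrative only.

```python
from itertools import product

def is_action(H, S, op, act, e):
    """Check e.s = s and h.(k.s) = (hk).s for all h, k in H and s in S."""
    identity_ok = all(act(e, s) == s for s in S)
    compat_ok = all(act(h, act(k, s)) == act(op(h, k), s)
                    for h, k, s in product(H, H, S))
    return identity_ok and compat_ok

def is_transitive(H, S, act):
    """Every pair (s, t) in S x S is joined by some h with h.s = t."""
    return all(any(act(h, s) == t for h in H) for s, t in product(S, S))

def is_faithful(H, S, act, e):
    """Only the identity fixes every element of S."""
    return all(h == e or any(act(h, s) != s for s in S) for h in H)

# Example 1: Z_3 under addition mod 3, rotating S = {0, 1, 2}.
Z3, S3 = range(3), range(3)
def add3(h, k): return (h + k) % 3
def rot(h, s): return (h + s) % 3

# Example 2: Z_4 under addition mod 4, acting on S = {0, 1} through parity.
Z4, S2 = range(4), range(2)
def add4(h, k): return (h + k) % 4
def par(h, s): return (h + s) % 2

print(is_action(Z3, S3, add3, rot, 0), is_transitive(Z3, S3, rot), is_faithful(Z3, S3, rot, 0))
# True True True
print(is_action(Z4, S2, add4, par, 0), is_transitive(Z4, S2, par), is_faithful(Z4, S2, par, 0))
# True True False
```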

Let \(w'=A(g,w)\) and \(w''=A(g^{-1},w')\). Then \(w''=A(g^{-1},A(g,w))\). If this were a group action, we would require \(w''=A(g^{-1}g,w)=A(\iota ,w)=w\), where \(\iota\) is the identity of G.
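A toy case shows how this requirement can fail. The map below is purely hypothetical and not taken from the article: world states are positions 0 to 4, actions are integer moves (identity 0, inverse of g is -g), and moves are clamped at the boundary. The identity move leaves every state fixed, yet moving by g and then by \(g^{-1}\) need not return to the starting state, so A is not a group action.

```python
def A(g, w, lo=0, hi=4):
    """Hypothetical action map: shift world state w by g, clamped to [lo, hi]."""
    return min(max(w + g, lo), hi)

W = range(5)        # world states 0..4
G = range(-2, 3)    # integer moves; identity 0, inverse of g is -g

# The identity move leaves every world state fixed...
print(all(A(0, w) == w for w in W))  # True

# ...but acting with g and then g^{-1} does not always return to w.
failures = [(g, w) for g in G for w in W if A(-g, A(g, w)) != w]
print(failures)  # [(-2, 0), (-2, 1), (-1, 0), (1, 4), (2, 3), (2, 4)]
```

Each failure occurs where the first move is clamped at a boundary, so information about the starting state is lost.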

By \(p_x^{-1}(x')\) we mean the set of all \(w\in W\) such that \(p_x(w)=P(w,x)=x'\).
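When the perceptual map depends on the previous percept x, each x gives its own map \(p_x = P(\cdot, x)\), and the preimage is taken separately for each x. The hysteresis rule below is a purely hypothetical choice for P, used only to show that \(p_x^{-1}(x')\) can change with x; the function names are illustrative.

```python
def P(w, x):
    """Hypothetical deterministic perception: the new percept depends on the
    world state w and on the previous percept x (a simple hysteresis rule)."""
    threshold = 2 if x == "low" else 1
    return "high" if w >= threshold else "low"

def preimage(x, x_new, W):
    """p_x^{-1}(x_new): all w in W with p_x(w) = P(w, x) = x_new."""
    return {w for w in W if P(w, x) == x_new}

W = range(4)
print(sorted(preimage("low", "high", W)))   # [2, 3]
print(sorted(preimage("high", "high", W)))  # [1, 2, 3]
```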

It is perhaps an incomplete description to refer to the elements of X as “perceptual” states, as a quale can be a perception, a feeling or even a thought.

When a CA is devoted mostly to spatiotemporality, its functionality would, e.g., be that of a “measuring rod” or a “clock.”

If this turns out not to be true, the CA definition may have to be amended to exhibit what we know of quantum behaviour. But the jury is still out on this.

Among the earliest are those of J. Hampton, referred to in Aerts et al. (2018).

References

Aerts, D., Sassoli de Bianchi, M., Sozzo, S., & Veloz, T. (2018). On the conceptuality interpretation of quantum and relativity theories. Foundations of Science. https://doi.org/10.1007/s10699-018-9557-z.

Ay, N., & Löhr, W. (2015). The Umwelt of an embodied agent—A measure-theoretic definition. Theory in Biosciences, 134, 105–116.

Chalmers, D. (1995). Facing up to the problem of consciousness. Journal of Consciousness Studies, 2(3), 200–219.

Chalmers, D. J. (2016). The combination problem for panpsychism. In L. Jaskolla & G. Brüntrup (Eds.), Panpsychism (pp. 179–214). Oxford: Oxford University Press.

Chemero, A. (2009). Radical embodied cognitive science. Cambridge, MA: MIT Press.

Coecke, B. (2010). Quantum picturalism. Contemporary Physics, 51, 59–83.

D’Ariano, G. M., Chiribella, G., & Perinotti, P. (2017). Quantum theory from first principles: An informational approach. Cambridge: Cambridge University Press.

Dennett, D. C. (1995). Darwin’s dangerous idea: Evolution and the meanings of life. New York: Simon & Schuster.

Faggin, F. (2015). The nature of reality. Atti e Memorie dell’Accademia Galileiana di Scienze, Lettere ed Arti, Volume CXXVII (2014–2015). Padova: Accademia Galileiana di Scienze, Lettere ed Arti. Also, Requirements for a mathematical theory of consciousness. Journal of Consciousness, 18, 421.

Fields, C. (2018). Private communication.

Fields, C., Hoffman, D. D., Prakash, C., & Singh, M. (2017). Conscious agent networks: Formal analysis and application to cognition. Cognitive Systems Research, 47(2018), 186–213.

Geisler, W. S., & Diehl, R. L. (2003). A Bayesian approach to the evolution of perceptual and cognitive systems. Cognitive Science, 27, 379–402.

Hoffman, D. D. (2000). Visual intelligence: How we create what we see. New York: Norton.

Hoffman, D. D. (2009). The interface theory of perception. In S. Dickinson, M. Tarr, A. Leonardis, & B. Schiele (Eds.), Object characterization: Computer and human vision perspectives (pp. 148–165). New York, NY: Cambridge University Press.

Hoffman, D. D. (2019). The case against reality: Why evolution hid the truth from our eyes. W. W. Norton (in press).

Hoffman, D. D., & Prakash, C. (2014). Objects of consciousness. Frontiers in Psychology, 5, 577. https://doi.org/10.3389/fpsyg.2014.00577.

Hoffman, D. D., Singh, M., & Prakash, C. (2015). The interface theory of perception. Psychonomic Bulletin & Review. https://doi.org/10.3758/s13423-015-0890-8.

James, W. (1895). The principles of psychology. New York: Henry Holt.

Koenderink, J. J. (2013). World, environment, umwelt, and inner-world: A biological perspective on visual awareness. In B. E. Rogowitz, T. N. Pappas, & H. de Ridder (Eds.), Human vision and electronic imaging XVIII (Proceedings of SPIE-IS&T Electronic Imaging, Vol. 8651, p. 865103). SPIE.

Koenderink, J. J. (2015). Esse est percipi & verum factum est. Psychonomic Bulletin & Review, 22, 1530–1534.

Mark, J., Marion, B., & Hoffman, D. D. (2010). Natural selection and veridical perception. Journal of Theoretical Biology, 266, 504–515.

Marr, D. (1982). Vision. San Francisco, CA: Freeman.

Mausfeld, R. (2015). Notions such as “truth” or “correspondence to the objective world” play no role in explanatory accounts of perception. Psychonomic Bulletin & Review, 22, 1535–1540.

Mendelovici, A. (2019). Panpsychism’s combination problem is a problem for everyone. In W. Seager (Ed.), The Routledge handbook of panpsychism. London, UK: Routledge.

Nowak, M. A. (2006). Evolutionary dynamics: Exploring the equations of life. Cambridge, MA: Belknap Press.

Palmer, S. (1999). Vision science: Photons to phenomenology. Cambridge, MA: MIT Press.

Pizlo, Z., Li, Y., Sawada, T., & Steinman, R. M. (2014). Making a machine that sees like us. New York, NY: Oxford University Press.

Prakash, C., Stephens, K., Hoffman, D. D., Singh, M., & Fields, C. (2018). Fitness beats truth in the evolution of perception (Under review).

Russell, B. (1959). The analysis of matter. Crows Nest: G. Allen & Unwin.

Von Uexküll, J. (2014). Umwelt und Innenwelt der Tiere. In F. Mildenberger & B. Herrmann (Eds.), Klassische Texte der Wissenschaft. Berlin: Springer.

Acknowledgements

Thanks to the Federico & Elvia Faggin Foundation for supporting this work. I am grateful for fruitful discussions with Federico Faggin; with my research colleagues Donald Hoffman, Chris Fields, Robert Prentner and Manish Singh; and with G. Mauro d’Ariano and Urban Kordes. Thanks also to an anonymous reviewer, whose comments significantly improved the Discussion section. I thank Diederik Aerts, Massimiliano Sassoli de Bianchi and others at VUB, Brussels, for hosting the excellent symposium “Worlds of Entanglement” in 2017, and Tomas Veloz and his colleagues for managing the event with aplomb.

About this article

Cite this article

Prakash, C. On Invention of Structure in the World: Interfaces and Conscious Agents. Found Sci 25, 121–134 (2020). https://doi.org/10.1007/s10699-019-09579-7

DOI: https://doi.org/10.1007/s10699-019-09579-7