Abstract

Empirical research suggests that emotive states modulate perception, affecting perceptual processing either directly or indirectly through the modulation of spatial attention. The affective modulation of perception occurs at various latencies, some of which fall within late vision, that is, after 150 ms. poststimulus. Earlier effects enhance the C1 and P1 ERP components in early vision, the former enhancement being the result of direct emotive effects on perceptual processing, and the latter being the result of indirect effects on perceptual processing of emotional stimuli that automatically capture exogenous attention. Other research suggests that emotional stimuli do not capture attention automatically but that attentional capture is conditioned on the context. Since context-dependent effects are first registered with the elicitation of the N1 ERP component about 170 ms. poststimulus, on this view emotional stimuli affect late vision. However, the early affective modulation of early vision by emotive states threatens the cognitive impenetrability of early vision, since emotive states are associated with learning and past experiences. I argue that the emotive modulation of early vision does not entail the cognitive penetrability of early vision. First, the early indirect affective modulation of P1 is akin to the effects of spatial pre-cueing by non-emotive cues, and these preparatory effects do not signify the cognitive penetrability of early vision. Second, the direct modulation of C1 signifies an initial, involuntary appraisal of threat in the incoming stimulus that precedes any cognitive states.

1 Introduction

Discussions on the cognitive impenetrability (CI) of perception usually concern the putative cognitive modulation of perceptual processing either by cognitively driven attention or by other cognitively driven influences. Some researchers have argued that the various attentional effects on perception do not signify the cognitive penetrability (CP) of early vision (Firestone & Scholl 2016; Pylyshyn 1999; Raftopoulos 2009, 2019), but other influences might cognitively modulate early vision. Siegel (2006) and Stokes (2012), among philosophers, have argued that emotive states affect the phenomenology of perception. Research in psychology has established the affective modulation of perception, and of visual perception in particular, at various latencies. A series of behavioral and cognitive studies show that desired objects are seen as closer (Balcetis & Dunning 2010), that elevated arousal enhances contrast perception (Kim et al. 2017), that terrifying heights look higher (Teachman et al. 2008), etc. These suggest that emotive states affect the way emotively charged objects appear in visual phenomenology. There is also a host of neuroimaging studies, which we shall discuss extensively, that show emotive modulation of visual perceptual processing.

A first question is whether the affective modulation of perception entails that perception is CP. This question has two parts. One is whether affective states involve cognitive states, and the other is, granting that they do, whether the affective modulation of early vision constitutes a case of genuine cognitive penetrability. An interesting further question is whether emotional effects are independent of attention, or whether they should be interpreted as attentional phenomena in which emotional stimuli are more attended (Brown et al. 2010).

Affective states modulate perceptual processing and affect the allocation of processing resources to incoming sensory stimuli. In this sense, they function as attention does and for this reason, the difference between attentional and emotional mechanisms notwithstanding, many researchers talk of ‘emotional attention’ (Vuilleumier 2005). Emotional states can affect perceptual processing either directly or indirectly. The indirect effects occur when signals from brain areas like the OFC (orbitofrontal cortex) or the amygdala, which predominantly process the emotional aspects of stimuli, are transmitted to parietal and frontal areas and affect the semantic processing of the stimulus that takes place there, as when the valence of a stimulus speeds up or inhibits object recognition. In this case, the emotional processes co-determine the allocation of cognitively driven attention and, thus, indirectly affect perceptual processing through attentional effects; emotional effects modulate attention and, thereby, perception (Phelps 2006). Since it is assumed that the earliest effects of cognitively driven attention in on-line perception (that is, excluding pre-cueing) modulate perceptual processing 150–170 ms. after stimulus onset (Carrasco 2011; Raftopoulos 2009, 2019), and that early vision lasts for up to 150 ms., this sort of indirect emotional influence on perceptual processing through attentional allocation does not threaten the CI of early vision.

There are also indirect emotive effects on perceptual processing that are mediated by exogenous or endogenous spatial attention that is captured by the appearance of an emotive stimulus before the presentation of the target stimulus in the pre-cueing experimental paradigm. When a threatening stimulus is presented as a cue, the brain areas that process the emotive significance of the cue send signals to the prefrontal and parietal areas that are responsible for directing spatial attention. As a result, spatial attention is oriented toward the location of the cue and this facilitates processing of the target stimuli presented at that location and inhibits processing of stimuli appearing in other locations. This is shown by the enhancement of the P1 ERP waveform (at 120–160 ms. after stimulus onset) time-locked to the target that appeared at the location of the fearful cue (Domínguez-Borràs and Vuilleumier 2013; Pourtois et al. 2004; Pourtois et al. 2005). The enhancement of P1 suggests attentional involvement, and since P1 occurs within early vision, the affective states affect early vision. To assess whether the role of attention entails the cognitive modulation of the P1 component, one should analyze these emotively driven attentional effects.

Finally, there are direct emotional effects on perceptual processing through top-down transmission of signals from either the OFC or the amygdala to visual areas, signals that are distinct from those generated in parietal and frontal areas, and which are transmitted to these two areas very rapidly via sub-cortical pathways. These effects are independent of attentional biases and selection. Should this be the case, early vision, despite its impenetrability from cognitively-driven attention, would be emotionally penetrated (EP). There is evidence that irrespective of whether or not a face cue directs covert attention, a fear face cue enhances contrast sensitivity (Phelps and LeDoux 2005). There is also evidence that fearful or threat-conditioned faces, as opposed to neutral or happy faces, acting as cues enhance C1 (about 80 ms. after stimulus onset) time-locked to the fearful cue (Domínguez-Borràs and Vuilleumier 2013; Pourtois et al. 2004; Pourtois et al. 2005).

These studies also found that the modulation of C1 at about 80 ms. was positively correlated with the subsequent amplitude modulation of P1 at 130–160 ms. in the fear condition, suggesting a possible functional coupling between these two successive events. The latency of the enhancement of C1 from fearful faces precludes this modulation being the result of top-down attentional signals, but it can be accounted for by signals from the amygdala directly affecting visual processing (Vuilleumier 2005). The amygdala is well known to be involved in encoding emotional stimuli (see Domínguez-Borràs and Vuilleumier (2013) for an extensive list of references). The amygdala processes the emotional significance of the stimulus, that is, whether it is a threat to the perceiving organism, and, thus, the early modulation of C1 by amygdala activation may entail the cognitive penetrability of early vision if the processing of the emotional value of the stimulus in the amygdala involves the cognitive states of the perceiver, such as beliefs as to what constitutes a threat based on past experiences.

In this paper, I argue that the emotive modulation of early vision, as evidenced by the enhancement of C1 and P1 ERP components, does not entail that early vision is cognitively penetrated. This is so because, first, the early indirect modulation by affective states of P1 is akin to the effects of spatial pre-cueing by non-emotive cues and is, thus, considered to be similar to the modulation of perception by exogenous or endogenous spatial attention in the pre-cueing paradigm. That is, the emotive effects are similar to the preparatory base-line shifts associated with endogenous top-down, or exogenous bottom-up, attention. It has been argued (Raftopoulos 2019) that these preparatory effects do not signify that early vision is cognitively penetrated. Moreover, many researchers associate the emotive effects on P1 with the sudden grasp of attention by a sudden change in the visual field, such as a rapid flash, which is a typical case of exogenous attention that is independent of cognitively-driven attention. The second reason that this early modulation of visual processing does not entail the CP of early vision is that the direct modulation of perception as evidenced by the enhancement of C1 signifies an initial, involuntary appraisal of threat in the incoming stimulus that is independent of, and precedes, any cognitive states.

I said that neuroimaging and behavioral/cognitive studies show that emotive states modulate visual processing and affect visual phenomenology. Here, I focus mainly on the neuroimaging studies, although some of these studies also include behavioral findings, and do not discuss specifically the findings of the behavioral/cognitive studies. The reason is that although these studies show that emotive states do affect how things look, they cannot help us decide whether these effects concern early vision or visual stages after early vision, such as late vision, or, even, whether they are at the level of perceptual judgments. Carrasco and her lab have made similar claims concerning the many ways attention affects various aspects of the phenomenology of visual scenes, but closer examination based on neuroimaging studies reveals that these attentional modulations do not affect early vision.

Behavioral studies cannot determine whether emotive states modulate early vision, late vision, or function in the context of perceptual judgments, because it is not clear what kind of phenomenology is affected by the emotive states. Let me explain this. Dretske (1993, 2006) argues that in order for someone to be aware of X, it is not required that they should be able to notice or realize that they see X. This is the weak sense of awareness. Dretske distinguishes “thing awareness” from “fact awareness.” The former is perceptual awareness of an object or event and is the awareness that characterizes pure (that is, conceptually encapsulated) visual processing, that is, the processing during early vision; it is the awareness to which the experience during early vision owes its phenomenology. The latter is awareness that something is the case and is awareness of conceptual content, since for Dretske (1993, 421) the awareness of a fact is a perceptual belief. Dretske (1993, 419) relates thing awareness with phenomenal, nonconceptual seeing and fact awareness with doxastic seeing. “Unless one understands the difference between a consciousness of things... and a consciousness of facts... and the way this difference depends, in turn, on a difference between a concept-free mental state (e.g., an experience) and a concept-charged mental state (e.g., a belief), one will fail to understand how one can have conscious experiences without being aware that one is having them.”

One can have thing awareness of X without believing or realizing that they see X. Perceivers have fact awareness when they reflect on their perceptual content and they are aware of the fact that they have such content; they realize or notice that they have it. ‘Awareness’ in ‘thing awareness’ is awareness in the weak sense, while ‘awareness’ in ‘fact awareness’ is awareness in the strong sense. Dretske’s ‘thing awareness’, being the awareness responsible for visual phenomenology and the sort of awareness one can have without realizing or believing that they see something, is similar to Block’s view about phenomenal and access consciousness. Block (2007) distinguishes between two concepts of consciousness, phenomenal and access consciousness. ‘Phenomenal consciousness is just experience; access consciousness is a kind of direct control... a representation is access conscious if it is actively poised for direct control of reasoning, reporting and action.’ Access consciousness is the consciousness of content that is available to the cognitive centers of the brain; it is information that is broadcast in the global neuronal workspace. The qualification ‘actively poised’ is meant to exclude from being characterized as access conscious those beliefs that are not occurrent, such as the pieces of knowledge acquired in the past that rest inactivated in long term memory. Phenomenal consciousness, on the other hand, concerns the way a visual scene is presented in visual experience without the viewers necessarily noticing that they undergo this experience. As with Dretske, phenomenal awareness is associated with pure visual perceptual content.

With this vocabulary at hand, one can restate the problem of the behavioral studies. Granting that emotive states affect the phenomenology of visual scenes, which phenomenology is affected? Is it phenomenal awareness, in which case these effects would indeed show the CP of early vision, or cognitive access phenomenology, in which case the emotive effects do not concern early vision? In addition, since these studies examine the behavior of the participants in various tasks, it is very likely that the performance in these tasks involves perceptual judgments, and, thus, the effects are post-perceptual. Neuroimaging studies can help to address this problem, because they pinpoint the timing of the emotive modulations. If these modulations occurred during early vision, early vision would be CP, on the condition that the emotive states include cognitive components.

Let us turn this argument on its head. Suppose that emotion changes the neural signatures that correlate with early vision and that these states are causally related to cognitive states, but the modulatory emotive states leave no behavioral traces, or do not affect the visual phenomenology. Should a defender of the CI of early vision be worried? This is a very interesting question and the answer to it depends on the way one construes CP. From a strict scientific perspective, one might say that since neuroimaging studies do show that emotive states with cognitive components modulate early vision, early vision is CP. The modulatory effects may be too weak to show in behavioral studies, the effects on phenomenology may be below human visual discriminatory capabilities and, thus, may not show in the content of the visual experiences, but, still, they are there. Early vision is CP even if this does not make any behavioral or phenomenological difference. From a philosophical perspective, however, things are very different. The whole discussion on CP (Raftopoulos 2009) started from worries that CP undermines the rationality and objectivity of science, not to mention the role of perceptual evidence in the rationality of our decisions in everyday activities, because cognition affects visual perception and, thus, what one thinks or believes affects what they see. If it turns out that cognition affects early vision but this does not affect what one sees (since, as we assumed, the emotive states, despite their effects on early vision processing, do not affect visual phenomenology), then, indeed, one should not worry about this kind of CP of early vision. The problem with this line of thought is that the cognitive effects may be so subtle that they do not influence how things look, but they might, nevertheless, induce other sorts of implicit biases, which, in turn, may affect scientific decisions. This is not the place to address these worries. Besides, behavioral studies do show that emotive states affect behavior and phenomenology and, thus, the question that needs to be answered is whether these effects concern early vision. As I said, only neuroimaging studies can confirm or disconfirm this claim.

2 Visual Stages, CP, and EP

I assume that perception consists of two stages: early vision and late vision. The former is CI, while the latter is CP by being modulated by cognitively driven attention. Thus, during early vision no cognitively-driven attentional effects exist. Neurophysiological evidence for this comes from various findings (discussed in Raftopoulos 2009, 2019) that strongly suggest that the first signs of cognitively driven spatial attentional effects on visual areas up to V4 occur at about 150 ms., followed at later latencies by object/feature-based attentional effects. Thus, early vision is a pre-attentional, CI, visual processing stage, in the sense that its formation is not directly affected by signals from cognitive centers. It is in defining what ‘directly’ means that considerations about attention enter the picture and make necessary some explication of what ‘pre-attentional’ means.

First, this claim does not entail that there is no selection during early vision. There are non-attentional selection mechanisms that filter information before it reaches awareness. These mechanisms are not considered to be attentional because they occur very early and do not involve higher brain areas associated with attentional mechanisms (in the prefrontal cortex, parietal cortex, etc.). Second, “pre-attentional” should be construed in relation to cognitively driven attention that affects perceptual processing directly. In this sense, spatial or object-based attentional effects that result from the presence of a cue and influence the perceptual processing when a target appears are not considered to be cognitive effects, because they do not affect visual processing in a top-down manner but just rig up the feedforward sweep (this is called the attentional modulation of spontaneous activity).

Late vision is affected by cognitive effects and, thus, involves higher cognitive areas of the brain (semantic memory etc.), and involves the global neuronal workspace (Dehaene et al. 2006). Such effects start at about 150 ms when information concerning the gist of a visual scene, retrieved on the basis of low spatial frequency (LSF) information in the parietal cortex in about 130 ms, reenters the extrastriate cortex and facilitates the processing of the high spatial frequency information (HSF), leading to faster scene and object identification (Kihara and Takeda 2010).

Returning to the problem of the CP of early vision, let me explain what I mean by CP.

CP=The CP of early vision is the nomological possibility that cognitive states can causally affect early vision, either in a top-down, direct, on-line way (that is, while viewers have in their visual field and attend to the same location or stimulus, or are prepared to attend to the same stimulus when it appears) or from within, in a way that changes the visual contents that are or would be experienced by the viewer or other viewers with similar perceptual systems, under the same external viewing conditions.

The reference to direct on-line effects prevents a process that is indirectly affected by cognitive inferences from being construed as CP. The indirect effects include both cognitively driven spatial and feature/object-based attention, and the preparedness to attend, which covers cases in which the viewer expects a certain object or feature to appear either at a certain cued location or with a cued feature. The former cases are post-early vision effects. The latter are cases of the attentional modulation of spontaneous activity, which do not entail CP (Raftopoulos 2019). The condition of causality ensures that any relation between contents occurs as a result of the causal influences of cognitive states on perceptual states and contents and is not a matter of coincidence. Finally, the specification “from within” covers the operational constraints in perception that solve the various problems of underdetermination of the distal objects and of the percept from the retinal image, and which do not entail that early vision is CP (Burge 2010; Raftopoulos 2009).

A somewhat similar definition applies to EP.

EP=The EP of early vision is the nomological possibility that affective states can either causally affect in a top-down, on-line way (that is, while viewers have in their visual field the same stimulus or are prepared for the appearance of the same stimulus) early vision by modulating attention, or can affect directly perceptual processing without attentional modulation. The effects should be such that they change the visual contents that are or would be experienced by the viewers or viewers with similar perceptual systems, under the same external viewing conditions.

Note that there are some differences from the definition of CP owing to the fact that it is likely that emotional stimuli can be processed independently of attention. As in the case of CP, the preparedness purports to cover cases in which a cue regarding the valence of an upcoming stimulus influences the base-line activation of the neurons encoding the stimulus.

3 Emotion and Attention (take 1)

Let me start with a few comments about the relation between emotive and attentional effects on perceptual processing, although this issue recurs throughout this paper. Emotional stimuli, owing to their intrinsic significance, have a competitive advantage relative to neutral stimuli, and are more likely to win the attentional (both overt and covert) biased competition among stimuli for further processing. However, affecting the biased competition among stimuli is what attentional effects do as well and, thus, the question arises as to the relation between emotional and attentional influences on visual processing. Evidence shows that both attention to non-emotional stimuli and emotional stimuli per se can boost neural responses (Vuilleumier et al. 2004; Schupp et al. 2004). This suggests that the net result of both attentional and motivational modulation of the visual cortex is similar in that they both enhance perceptual processing. For this reason, emotional effects are sometimes referred to as ‘emotional attention’ (Vuilleumier 2005).

Nevertheless, the neuronal pathways responsible for attentional and emotional effects are likely different, since, among other things, differences in size and duration of the time courses of semantic and emotional processing and their influences on the visual cortex have been observed (Attar et al. 2010; Vuilleumier 2005; Vuilleumier and Driver 2007). Another reason for being skeptical of the view that the same mechanism underlies attentional and emotional effects is that there is mixed evidence concerning the extent to which unattended fearful faces are processed. Williams et al. (2005) argue that although differential amygdala responses to fearful versus happy facial expressions are tuned by mechanisms of attention, the amygdala gives preference to potentially threatening stimuli under conditions of inattention as well. Furthermore, the influence of selective attention on amygdala activity depends on the valence of the facial expression. Bishop et al. (2007) argue that affective modulation of the BOLD signals occurs only when the task demands low attention. Research with patients with pathologies in the amygdala or in the parietal cortex shows a clear, at least partial, dissociation between attentional and emotive effects on perceptual processing (Domínguez-Borràs and Vuilleumier 2013; Pourtois and Vuilleumier 2006).

There are also differences concerning the brain regions involved in emotional and attentional influences. The amygdala is involved in emotional modulation of perceptual processing, whereas the frontal eye fields (FEF) and parietal regions are involved in the modulation of perceptual processing by spatial attention. The amygdala is well poised to modulate perception because it receives sensory inputs from all modalities and sends signals to many cortical and subcortical regions that can potentially influence perception. The amygdala is sensitive both to coarse LSF information that travels fast in the brain and to slow HSF information. This way, an initial appraisal of emotional significance based on a limited amount of information may proceed more quickly than the elaborate and time-consuming processing associated with conscious awareness of a stimulus. This may explain why ERPs to fearful expressions in face-selective neurons in monkeys are registered very early (50–100 ms after the initial selective activity), while the fine encoding of faces that relies on the slower traveling HSF information starts at 170 ms, as indexed by the N170 component that is specifically related to face processing.

Concerning the relation between affective and attentional effects, one can make the following general remarks. The amygdala responds to fearful expressions independent of attentional modulation. The amygdala can reinforce the representation of fearful faces in fusiform cortex, an influence that is disrupted when the amygdala is damaged (Vuilleumier et al. 2004). Recordings of face-selective neurons in monkeys (Sugase et al. 1999) suggest that the amygdala modulates perceptual processing 50–100 ms after the initial face-selective activity. Since the monkey amygdala neurons respond to threatening face expressions between 120 and 250 ms (Pessoa & Adolphs 2010), the earliest modulation of face-selective neurons by amygdala signals starts at about 170 ms, in accordance with the findings of Holmes et al. (2003). The amygdala activity probably reflects coarse-grained global processing of the input, while the affective modulation of face processing reflects affective information contributing to a more fine-grained representation of faces at later latencies with a delay of 50 ms compared to global processing.

Emotional and attentional effects can also compete. Emotional modulation of distractors enhances the responses of the neurons encoding them. This increases the competition with the targets by reducing the responses of the neurons encoding the targets. Emotional signals, however, may be suppressed by high perceptual competition where spatial attention filters out most of the distractors very early (Lavie 2005). Finally, the amygdala’s influence can persist in conditions where cortical responses are reduced, contributing, thus, to the amplification of cortical processing when sensory inputs are insufficient (Vuilleumier 2005).

Emotional effects act separately from attentional effects and provide an additional bias to the processes of sensory representations that lead to the selection of some among the items in the input, either adding to or competing with attention. The competition that emotional effects pose to attention is advantageous for an organism since unexpected events that have a particular emotional value can be detected, and influence behavioral responses, independently of the organism’s current attentional loads.

4 Timing Emotive Effects

Let us turn to the processing of emotional stimuli in the brain. When the brain receives information, it generates hypotheses based on the input and what it knows from past experiences to guide recognition and action, and these hypotheses are tested against the incoming sensory information (Bar 2009; Barrett 2017; Clark 2013; Friston 2010; Raftopoulos 2019). In addition to what it knows, the brain uses affective representations, that is, prior experiences of how the input had influenced internal bodily sensations. In determining the meaning of the incoming stimulus, the brain employs representations of the affective impact of the stimulus to form affective predictions. These predictions are made within milliseconds and do not occur as a separate step after the object is identified; rather they assist in object identification (Bar 2009).

There is substantial evidence that the OFC, which is one of the centerpieces of the neuronal workspace that realizes affective responses, plays an important role in forming the predictions that support object recognition. The earliest activation of the OFC owing to bottom-up signals is observed between 80 and 130 ms (Bar 2009). This activity is driven by fast LSF information through magnocellular pathways. Since it takes at least 80 ms. for signals from the OFC to reenter the occipital cortex, the OFC affects perceptual processing in a top-down manner only after about 160 ms., that is, after early vision has ended. A second wave of activity in the OFC is registered at 200 to 450 ms, probably reflecting the refinement and elaboration of the initial hypothesis. There is evidence that the brain uses LSF information to make an initial prediction about the gist of a visual scene or object, that is, to form a hypothesis regarding the class to which the scene/object belongs. This hypothesis is tested and details are filled in using HSF information in the visual brain and information from visual working memory (Kihara and Takeda 2010).
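
The timing argument in the preceding paragraph amounts to a simple addition and comparison, which can be spelled out as follows. This is only an illustrative sketch and not part of any cited study: the function and constant names are hypothetical, while the latencies (earliest OFC activation at 80 ms, a reentry delay of at least 80 ms, and early vision lasting up to about 150 ms) are the ones stated in the text.

```python
# Latencies in ms after stimulus onset, as stated in the text.
# All names below are illustrative assumptions, not established terminology.
EARLY_VISION_END_MS = 150        # early vision is assumed to last up to about 150 ms
OFC_EARLIEST_ONSET_MS = 80       # lower bound of the 80-130 ms OFC activation window
OFC_REENTRY_DELAY_MS = 80        # minimum time for OFC signals to reenter occipital cortex


def earliest_top_down_effect(onset_ms: int, reentry_delay_ms: int) -> int:
    """Earliest latency at which feedback from a region could reach visual cortex."""
    return onset_ms + reentry_delay_ms


def falls_within_early_vision(latency_ms: int,
                              early_vision_end_ms: int = EARLY_VISION_END_MS) -> bool:
    """True if an effect at `latency_ms` would occur before early vision ends."""
    return latency_ms <= early_vision_end_ms


earliest = earliest_top_down_effect(OFC_EARLIEST_ONSET_MS, OFC_REENTRY_DELAY_MS)
print(earliest)                             # 160
print(falls_within_early_vision(earliest))  # False
```

Even on the most favorable assumption (activation at the very start of the 80–130 ms window and the minimum reentry delay), the earliest possible top-down effect of the OFC falls after the assumed end of early vision.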

Barrett and Bar (2009) argue that the medial OFC directs the body to prepare a physical response to the input, while the lateral parts of the OFC integrate the sensory feedback from the bodily states with sensory cues. The medial OFC has reciprocal connections to the lateral parietal areas in the dorsal system from where it receives LSF information transmitted through magnocellular pathways. Using LSF information, the medial OFC extracts the affective context in which the object has been experienced in the past and this information is relayed to the dorsal system where it contributes to the determination of the sketchy gist of the scene or object. The lateral OFC, in its turn, has reciprocal connections with inferior temporal areas of the ventral stream, whence it receives HSF information through parvocellular pathways. Its role is to integrate sensory with affective information to create a specific representation of the scene or object, which eventually leads to conscious experience.

The role of the OFC in the modulation of perceptual processing suggests that even the early activation of the OFC does not affect early vision. The OFC plays a significant role in the formation of hypotheses concerning the identity of the stimulus and in their testing, but this takes place during late vision. This entails, in turn, that the role of any cognitive states in relating the stimulus to past experiences and determining its emotive significance or task relevance for the viewer (as indicated by the N1 ERP component elicited at 170 ms. poststimulus) does not affect early vision.

Another significant part of the brain that processes emotive stimuli is the amygdala (Domínguez-Borràs and Vuilleumier 2013). The profile of amygdala reactivity is generally compatible with biases observed in behavioral performance or in sensory regions. The amygdala seems to be activated primarily in response to the arousal or relevance value of sensory events, rather than to negative valence only, although arousal-valence interactions and stronger responses to threat are frequently observed. The amygdala is well poised to modulate cortical pathways involved in perception and attention, because it has bidirectional connections with all sensory systems, and is also connected with fronto-parietal areas subserving attention. Studies in the macaque show that projections to visual cortices are highly organized, so that rostral regions of the amygdala project to rostral (i.e., higher level) visual areas, whereas caudal regions of the amygdala project to caudal (i.e., lower level) visual areas (Freese & Amaral 2006). At the microscopic level, there is evidence that projections from the amygdala reach pyramidal neurons in early visual areas with synaptic patterns suggestive of excitatory feedback (Freese & Amaral 2006). MRI studies in humans using diffusion tensor imaging (DTI) have identified topographically organized fibers in the inferior longitudinal fasciculus that directly connect the amygdala with early visual areas and might contain such back-projections (Gschwind et al. 2012).

Functional studies of the amygdala in humans suggest that amygdala activation reflects the integration of perceptual information with emotional associations of the stimuli (Oya et al. 2002). The amygdala in humans processes the emotional content of facial expressions at 140–170 ms. after stimulus onset (Conty et al. 2012), or at 200 ms. (Pessoa & Adolphs 2010), or at two distinct latencies, a transient early and a later sustained period (Krolak-Salmon et al. 2004). Krolak-Salmon et al. (2004) argue that the time course of amygdala involvement and its dependence on the attended facial features confirm the critical implication of cortical frontal areas for visual emotional stimuli. There seems to be a functional link between the amygdala, the visual occipito-temporal stream, and OFC. These three regions may belong to a temporally linked triangular network implicated in facial expression processing, especially when specific attention is engaged. Intracranial recordings show that the fear effect on amygdala is recorded between 200–300 ms. after stimulus onset. Finally, Kawasaki et al. (2001) report an earlier activation onset (120–160 ms.) in ventral sites of the right prefrontal cortex for aversive visual stimuli.

The previous studies focused on the interactions of the amygdala through cortical pathways. However, the amygdala and OFC receive inputs and are activated through subcortical circuits from subcortical regions in the basal ganglia, thalamus, and brainstem (Kawasaki et al. 2001; Schmid et al. 2010; Tamietto & de Gelder 2010; Vuilleumier et al. 2003) that bypass the occipital cortex. This means that the amygdala might exert direct influences on early visual areas very early, independent of any subsequent attentional effects modulated by the affective stimulus. Tamietto and de Gelder (2010) argue that their study, which combined MEG and MRI methods, revealed early, event-related synchronization in the posterior thalamus (probably in the pulvinar), as fast as 10–20 ms. after onset of the presentation of fearful facial expressions, followed by event-related synchronization in the amygdala at 20–30 ms. after onset. By comparison, synchronization in the striate cortex occurred only 40–50 ms. after stimulus onset. Thus, the amygdala processes fearful expressions earlier than the striate visual cortex processes the stimulus, and it could affect visual processing through reentrant connections. What may explain this early onset is the fact that responses in the superior colliculus and pulvinar are tuned to coarse information in low spatial frequencies. Consistent with these findings, the subcortical pathway to the amygdala is sensitive to the presentation of fearful faces in low spatial frequencies.

Let us turn now to the empirical evidence concerning the affective modulation of visual perceptual processing by signals emanating from the amygdala or the OFC. Studies by Domínguez-Borràs and Vuilleumier (2013), Pourtois & Vuilleumier (2006), Pourtois et al. (2004), and Pourtois et al. (2005), in which emotional or neutral stimuli were presented as cues before the presentation of a target stimulus, usually a bar, either at the location of the cue (valid trials), or at some other location (invalid trials), suggest that the C1 ERP component that is generated very early (in less than 80 ms.) in the early striate visual cortex had a significantly higher amplitude for fearful face cues than for happy or neutral faces. Since the EEG recordings were time-locked to the emotive cue (a fearful face, for example) and not to the subsequent bar-target, any brain response observed is likely the result of the effect of the affective cue on visual processing rather than a response to the subsequently shown bar-target. Moreover, since the sites of C1 are mainly in the cuneus and lingual gyrus in the occipital visual system, they clearly belong to early vision. The enhancement of C1 by fearful faces is clearly a direct emotive effect on early visual processing that is independent of any attentional effects, which is consistent with the finding that C1 is not affected by spatial attention (Di Russo et al. 2003).

This early effect could not have been produced by low-level features of faces rather than the emotional trait of the face, since experiments with inverted faces did not produce similar effects. This is supported by EEG experiments that contrasted the involuntary effects triggered by exogenous and emotional cues (Brosch et al. 2011) and revealed that these two factors operated during two distinct time windows: ERPs time-locked to the exogenous cue showed a specific enhancement of the N2pc component, consistent with a rapid shift in attention to the cued side, whereas the emotional cue enhanced the P1 time-locked to the target, consistent with enhanced visual perception. Thus, ERPs clearly differentiated between processes mediating attentional biases induced by emotional meaning and biases caused by the physical properties of the stimuli. Finally, in the study by Saito et al. (2022), participants were engaged in an associative learning task wherein neutral faces were associated with either monetary rewards, monetary punishments, or zero outcome in order for the neutral faces to acquire positive, negative, and no emotional value, respectively. Then, during the visual search task, the participants detected a target neutral face associated with high reward or punishment from among newly presented neutral faces. Their findings showed that there were no prominent differences in terms of visual saliency between neutral faces with and without value associations or between high- and low-probability faces. This indicates that an efficient search for emotional faces can emerge without any influence of visual saliency.

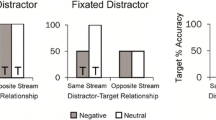

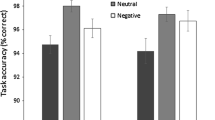

Attar et al. (2010) did not examine the time course of emotional processing of stimuli per se but its effects on attentional resource allocation in a primary task with respect to which the emotional stimuli functioned as distractors. Their findings suggest that highly arousing emotional pictures consume much more processing resources than neutral pictures over a prolonged period of time, which means that emotional distractors receive prioritized processing despite severe resource limitations. This effect, however, is of relatively small size when compared to the effects of general picture processing during task-related activity, where irrelevant whole pictures without any emotional value that act as distractors have a detrimental effect on task-related activity. More importantly for this paper, Attar et al. (2010) found, at the behavioral level, significant decreases in target detection rates when emotional compared to neutral pictures were concurrently presented in the background. At the neuronal level, the effect was accompanied by a stronger decrease of SSVEP amplitudes directed to a primary task for emotional relative to neutral pictures. The earliest onset for the affective deflective amplitude was at 270 ms. According to our knowledge about the neural sites at which SSVEP signals are generated, the deflection observed stems from sources in early visual areas (Andersen et al. 2012).

Pourtois et al. (2005) also found that even though the C1 responses might reflect the enhanced visual processing of fearful faces, this early effect of emotional face expression in V1 was no longer present in the EEG at the time of target onset. No valence effect was observed for the P1 and N170 ERP waveforms succeeding C1 time-locked to the fearful face cue. This means that there is no early direct emotive effect on perceptual processing other than that observed for C1. Thus, the subsequent effects observed in the scalp topography 40–80 ms. after target onset in the fear valid trials probably correspond to a distinct modulation of the mechanisms of spatial attention towards visual targets appearing at the same location, perhaps triggered by the initial emotional response to facial cues. As we shall see, this means that the functional coupling between temporal and posterior parietal regions that direct the focus of spatial attention and occipito-temporal cortex through top-down signals from the former to the latter might be enhanced following threat-related cues (such as fearful faces). The positive correlation between the early temporo-parietal activity and the subsequent extrastriate response was significantly higher in valid fear trials than in all other conditions. This correlation may provide a neural mechanism for the prioritized orienting of spatial attention towards the location of emotional stimuli (Pourtois et al. 2004; Vuilleumier 2002).

The early C1 emotive effect may result from a rapid modulation of visual processing in V1 by reentrant feedback from the amygdala (Amaral et al. 2003; Tamietto & de Gelder 2010; Vuilleumier et al. 2004), since, as we have seen, the amygdala is connected to the early visual cortex and it is likely that it is activated subcortically by emotional stimuli through signals bypassing the occipital cortex, at earlier latencies than the early visual striate cortex. Pourtois and Vuilleumier (2006, 76) speculate that this early differential response to emotional faces, as evidenced by the enhancement of the C1 ERP component in V1, may serve a rapid decoding of socially relevant stimuli in distant regions such as the amygdala, which begins responding to faces at 120 ms. poststimulus as a result of receiving signals from V1. Alternatively, it may reflect a rapid modulation of V1 by reentrant feedback from the amygdala, which processes emotional stimuli at earlier latencies through signals that it receives from subcortical pathways bypassing the occipital cortex. These two functions are not mutually exclusive; the early amygdala responses to emotive stimuli subserved by subcortical pathways rely on coarse low-spatial frequency information, whereas the later response of the amygdala to signals from V1 may reflect the more refined processing of the emotive stimuli based on high-spatial frequency, more detailed information. This is consistent with findings showing that the face-responsive area in the fusiform gyrus responds to emotional faces after the registration of the N170 ERP waveform that signals the recognition of faces.

This is a case in which the CI of early vision seems to be threatened. If fearful faces or other threatening stimuli affect visual processing at these early latencies, and if the assessment of threat relies on cognitive states that are formed as the result of past experiences, this clearly means that early vision is CP. Since the onset of C1 is too early to be attributable to any top-down cognitive influences, CP could occur only if the cognitive information guiding the attribution of a threatening character to the stimulus were embedded within the early visual system, the way the formation principles (Burge 2010), or operational constraints (Raftopoulos 2009), were thought to be embedded in the visual system, allegedly rendering visual perception CP and the state transformations in it similar in nature to discursive inferences. The problem with this line of thought is that it is likely wrong, for the same reasons that the view that the operational constraints at work in vision render early vision CP is wrong (Raftopoulos 2019).

Pourtois & Vuilleumier (2006), based on work by Lang et al. (1998) and Thiel et al. (2004), suggest that the early activity of the amygdala in response only to threatening and not to other affective stimuli possibly reflects some general alerting or arousal effect triggered by fearful faces. Along these lines, Tamietto & de Gelder (2010) offer an intriguing explanation of the early registration of threatening stimuli in the amygdala. They argue that data from human and animal studies suggest the continuity of subcortical emotion processing across species and its evolutionary role in shaping adaptive behavior. They discuss a proposal by Isbell (2006), who suggests that fear detection has played a major part in shaping the visual system of primates, and in its integration with an emotion circuit centered on the amygdala. Snakes, for example, presumably represented a major threat for our ancestors and the need to detect them on the ground accelerated the development of greater orbital convergence, allowing better short-range stereopsis, particularly in the lower visual field. The koniocellular and magnocellular pathways from the retina were developed further to connect the superior colliculus with the pulvinar, promoting fast and automatic detection of snakes. It is likely that this mechanism generalized to the detection of other fear-relevant stimuli. The fast reactivity of this subcortical pathway to visual stimuli with low resolution has also promoted the parallel development of a cortical visual pathway to the amygdala with complementary features to those of the subcortical pathway. Consistent with this claim is evidence that the subcortical pathway for processing emotional stimuli emerged early in phylogenesis, as evidence in human and non-human primates indicates that the formation of these structures is more developed at birth compared to the relatively immature development of the cortical areas involved in visual and emotional processing.

Hodgson (2008, 345) reaches the same conclusion. ‘Animals... were integral to the evolution of the human brain to the extent that the encoding of animal forms seems to have become a dedicated domain of the visual cortex.’ Considering that in the Palaeolithic our hunter-gatherer ancestors lived in constant close proximity to animals, some of which were dangerous (cave-bears, cave-lions, wolves), and that animals were an integral part of human life, it is only natural to assume that the conditions in which they lived shaped the way they perceived, and thought of, animals and, thus, that their brains (which are the same as our brains) were shaped accordingly. From these considerations, it is very plausible that our brains have developed mechanisms embedded in our perceptual systems that allow very fast tracking of threatening animal-related stimuli, enabling fast responses to avoid danger. This requires that our perceptual systems be linked directly through fast interconnections with the brain systems that encode the emotive significance of the stimulus, and that this linkage not be conditioned on interactions with, and modulation by, the cognitive areas through attention. As Kim et al. (2017, 7) remark ‘Although attention and arousal are often considered linked processes, the origins of their modulatory signals are quite distinct. Arousal signals have primarily been attributed to the locus coeruleus–norepinephrine system, whereas the attentional-control signals stem from a cortical constellation encompassing both dorsal and ventral frontoparietal networks. Thus, while they are potentially complementary modulatory signals, it remains unclear as to whether these two processes influence response properties in the brain interactively or they act as two independent processes.’

This idea is supported by findings that the perceptual and emotive-significance-assessment brain areas have phylogenetically developed prior to the formation of the areas that are closely related with the cognitive control of the neuronal activity elsewhere in the brain. These are the association areas that assess and integrate information across different brain areas subserving different modalities (Preuss 2011; Schoenemann 2006; Neubauer et al. 2018). The cognitive areas are mostly associated with a network involving frontal and parietal areas (Jung & Haier 2007; Barbey et al. 2012). The brain has formed a system of threat appraisal and avoidance behavior, most likely hardwired in the brain, well before the development of the cognitive areas. Evolution never undoes previous constructions and functionalities that successfully addressed evolutionary pressures in order to reshape the brain anew. Hence, even when the newer cognitive areas developed and new white matter tracts (the superior longitudinal fasciculus, the arcuate fasciculus, the uncinate fasciculus, and the cingulum; Lebel and Leoni 2018) were formed connecting these new areas with the older areas of the brain (allowing, thus, cognitive control of the neural computations), the previously developed mechanisms of threat appraisal and reaction remained intact and fully functional, enabling fast reaction to possible threat. It goes without saying that the new connectivity enables further elaboration of the threat assessment and allows integration with other mental abilities, etc., but it does not abolish the established instinctive and hardwired fast threat assessment and reaction, which is advantageous to the agent because it allows fast responses to perceived threat.

If these considerations are on the right track, the detection of the threatening character of the stimulus by the early visual perceptual system does not rely on the workings of some cognitive states that are formed on the basis of past experiences and are embedded within the early visual system. Most likely, the relevant mechanisms are hardwired in the early visual system, exactly the way the operational constraints are hardwired, as a result of the phylogenetic development of our species in its environment. For this reason, it is very probable that no contentful states are involved in the assessment of threat, and, thus, no CP could occur. One might retort that even if it is correct that the attribution of threat in a stimulus does not require any cognitive involvement, it is still the case that organisms with different past experiences in differing environments will make different assessments concerning the threat that a stimulus might pose for the organism. This is correct, of course, but it is either irrelevant to the problem of the CP of early vision, or does not pose any threat to the claim that early vision is CI, for the same reasons that perceptual learning does not (Raftopoulos 2009).

Let us move to the emotive influences on P1. The evidence we have examined shows that there are no valence effects on P1 time-locked to the fearful cue, which means that this cue does not directly affect P1, even though there is a positive correlation between the C1 modulated by the cue and P1 enhancement. Studies in humans (Domínguez-Borràs and Vuilleumier 2013; Olofsson et al. 2008; Pourtois & Vuilleumier 2006; Pourtois et al. 2004; Pourtois et al. 2005; Vuilleumier et al. 2004; Vuilleumier and Driver 2007) show that fearful or threatening vs. neutral or happy faces presented as cues induce a higher amplitude of VEP (visual evoked potentials) and an enhancement of the P1 ERP component time-locked to the stimulus presentation at the cued location at about 120 ms. Thus, the enhanced evoked potential concerns the perception of the target-bar presented at the location of the emotional cue (or at another location in invalid trials) and not the perception of the cue per se. P1 originates in extrastriate areas and is considered to be the hallmark of the effects of exogenous spatial attention on visual processing, that is, the effects of the automatic orienting response to a location where a sudden stimulation occurs. This entails that the emotion-related modulation of the visual cortex arises prior to the processing stages associated with fine-grained face perception indexed by the N170 component for face recognition. Emotional effects are prior to, and help in determining, the categorization of the stimuli and can collaborate with attentional effects by enhancing the processing of spatially relevant and emotionally significant stimuli.

The Pourtois et al. (2004; 2005; 2006) experiments were designed with short cue-target intervals (CTI) so that the allocation of attention would be automatic and free from any cognitive manipulations. The short CTIs are also important to avoid a phenomenon observed in a variation of the cueing task that we have discussed thus far, namely the dot-probe task, in which two cues, one emotional and the other neutral, are presented simultaneously and then a target appears at the location of the emotional cue (valid trials) or the location of the neutral cue (invalid trials). While attention is allocated automatically to one of the two cues during the initial presentation, participants often try to move their attention back strategically to the central position if the presentation of the cues is extended for a longer duration (CTI > 300 ms.). Should this happen, the re-allocation of attention to the initial position is inhibited and this may result in a reversed validity effect; for example, Cooper and Langton (2006) found a threat bias at a CTI of 100 ms., but a reversed effect at 500 ms.

EEG recordings show that targets preceded by emotional cues elicit a larger visual P1 component, relative to those preceded by neutral cues. This is consistent with enhanced visual processing of targets when pre-cued by emotional stimuli. When the target is preceded by an emotional valid cue, activity to the target is first increased in parietal areas (50–100 ms.), probably reflecting top-down attentional signals induced by the emotional cue and responsible for faster spatial orienting to the target location and enhanced processing in extrastriate visual cortex at the P1 latency (100–150 ms.).

In addition to enhancing responses in perceptual regions, emotion signals may also increase activity in brain regions associated with attention control, including the posterior parietal cortex (Vuilleumier 2005). This might in turn influence top-down attentional signals on perceptual pathways. In particular, such effects have been observed in dot-probe tasks with fear-conditioned images (Armony & Dolan 2002), threatening faces (Pourtois et al. 2005), or even positive affective stimuli. Presentation of a peripheral threat-related cue in these tasks typically produces an increased activation in frontoparietal networks, reflecting a shift of attention to the location of the emotional cue (Armony & Dolan 2002). Moreover, when a neutral target (dot) is preceded by a pair of face cues (one neutral and one emotional), those targets preceded by neutral cues, as compared to those preceded by emotional ones, elicited reduced BOLD responses in the intraparietal sulcus (IPS) ipsilateral to the targets, consistent with a capture of attention by the emotional cue on the contralateral side and a reduced ability to reorient to the target on the ipsilateral side (Pourtois et al. 2006, 2013). Targets appearing after an emotional cue produce stronger BOLD responses in the lateral occipital cortex (Pourtois et al. 2006) and a larger P1 component in EEG recordings (Pourtois et al. 2004), consistent with improved visual processing and better target detection. A detailed analysis of the time course of these effects with EEG (Pourtois et al. 2005) suggests that the modulation of parietal areas may be triggered by an initial response to the emotional cue, and subsequently induce top-down spatial attentional signals responsible for enhanced processing of the target, but only in valid trials.

Notice that the effects of fearful faces on ERPs time-locked to the target bars affected the lateral occipital P1 and N170 in the fusiform gyrus but did not affect C1 generated in the primary visual cortex or N1 generated in higher visual areas within occipito-parietal cortex.

The suggestion that emotional cues play a causal role in the enhancement of P1 is reinforced by the finding of a positive correlation between the enhancements of the C1 and P1 ERP components, even though the former is time-locked to the cue and the latter is time-locked to the target (Pourtois et al. 2004, 2005). Correlation analysis revealed a significant positive correlation between the amplitude of C1 and P1 in the fear condition, which, however, was restricted to the left hemisphere. There was, also, no significant correlation between the amplitude of the C1 and the P1 validity effect with happy faces in either hemisphere. In addition, a direct comparison of the C1–P1 correlations for each emotion condition suggests that, even though the time interval between the two stimuli varied randomly, the larger the C1 response to a fearful face in the peripheral visual field, the larger the subsequent validity effect on the occipital P1 evoked by a bar-target appearing at the same location. However, Pourtois et al. (2004) note that this correlation was significant only for electrodes in the left hemisphere, and they consider it a tentative result that suggests a possible functional relationship between the magnitude of responses to faces and the enhanced processing of bar-probes on valid trials.

The early latency precludes the P1 modulation being the result of top-down cognitive signals. Neither can the modulation be accounted for by signals from the amygdala activated through cortical circuits, because the amygdala in humans processes the emotional content of facial expressions at 140–170 ms. after stimulus onset (Conty et al. 2012), or at 200 ms. (Pessoa & Adolphs 2010), or at two distinct latencies, a transient early and a later sustained period (Krolak-Salmon et al. 2004). However, the evidence is compatible with the view that the amygdala affects visual processing very early through its rapid activation via the subcortical pathways that we discussed above.

5 Emotion and Attention (take 2)

Taken together, these results provide evidence for neural mechanisms allowing rapid, exogenous spatial orienting of attention towards fear stimuli (Pourtois et al. 2004); threat-related stimuli may capture attention in an involuntary and exogenous way. This facilitation involves reflexive (exogenous) orienting mechanisms, because it occurs after short time intervals between the cue and target (< 300 ms.), even when the cue is masked (Mogg & Bradley 2002). Likewise, a brief emotional stimulus (e.g., a fearful face presented for 75 ms.) can enhance contrast sensitivity and potentiate the effect of spatial attention on detection accuracy for a subsequent visual target (a Gabor pattern), suggesting a modulation of early perceptual processing in V1 (Phelps et al. 2006). Affective biases may guide attention and enhance perception for emotionally significant stimuli across various conditions. These biases are generally unintentional, independent of explicit relevance, and triggered without overt attention. However, they may also be modulated by expectations, task characteristics, and available resources for covert attention. In the latter case, emotive stimuli function as cues and modulate cognitively driven attention; perceivers attend to the location at which a behaviorally significant stimulus is likely to appear.

In sum, fearful faces may elicit an involuntary orienting of spatial attention towards their location, the same way a sudden flash at a location does, with the time-course of this process being rapid, modulating an early exogenous VEP in the P1 component. Or, emotional cues may modulate cognitively driven attention, prompting perceivers to attend to a certain location, thus enhancing P1 time-locked to the appearance of the target. Both effects of fearful faces on P1 are very similar to those induced by other traditional manipulations of spatial attention in the pre-cueing paradigm. This being the case, the emotive modulation of the P1 component does not entail the CP of early vision, because the indirect modulation of P1 by affective states is akin to the effects of spatial pre-cueing by non-emotive cues. It follows that the emotive effects are similar to the preparatory baseline shifts associated with endogenous or exogenous attention. It has been argued by many researchers (see Raftopoulos 2019) that these preparatory effects do not signify the CP of early vision.

Of course, things are more complicated. First, although the evidence examined thus far suggests that threatening stimuli automatically attract exogenous attention and directly affect perceptual processing, and that these effects are not found with neutral stimuli or even with emotional stimuli that are not threatening, experiments with search tasks that presented facial stimuli with different emotions and compared attentional capture for angry and happy faces yield mixed results. Some studies suggest an advantage for angry faces compared to neutral faces (Horstmann et al. 2006; Huang et al. 2011), but sometimes also a reversed asymmetry (Juth et al. 2005). These findings are not necessarily conflicting, since it is possible that there is a general threat bias, probably hardwired in our brains as suggested above, that leads to early attentional capture for threatening stimuli, which is then followed by an avoidance response (orienting away from the threatening stimulus) during later processing stages.

Second, evidence shows that the capture of attention by emotional stimuli may not be as automatic as the previous studies suggest. Using a flanker task, Tannert and Rothermund (2020) found that emotional faces do not automatically capture attention, except when they are task-relevant. Puls and Rothermund (2018) found no validity effects in a dot-probe task (an index of attentional capture by emotional faces), and suggested that attentional capture by emotional faces is a conditional, context-dependent phenomenon; if the emotions depicted are task-relevant, or relevant to the person, as in the case of a depressed patient experiencing negative emotions, faces displaying such emotions automatically capture attention.

Context effects and task relevance are registered at about 170 ms. poststimulus, as indexed by the N1 ERP component. We discussed the role of both OFC and amygdala in affecting perceptual and attentional processing at this latency. This means that emotional significance, attentional capture, and perceptual processing are certainly intertwined with task demands and, thus, that the latter affect the former. This means that, indeed, emotions capture attention more easily if they are relevant to viewers. This, however, cannot explain the much earlier modulation of both C1 and P1 by threatening stimuli; these ERP components are much too early to be affected by task demands. One might reply that an early amygdala response to fear (time-locked to the cue) does not necessarily translate into an "automatic" attention orienting (time-locked to the target) (Pourtois, personal communication), but this does not explain the positive correlation between the C1 and P1 enhancement by threatening stimuli. Clearly the early modulation of the amygdala, through its connections to fronto-parietal areas that are involved in attentional control, facilitates exogenous attention for the stimuli appearing at the locations cued by the threatening stimulus.

It is possible that the discrepancies concerning attentional capture by affective stimuli are due to the different experimental designs used. The experiments suggesting automatic capture mainly use EEG or fMRI, although there are behavioral studies as well, while the experiments that show conditional automatic capture are mainly behavioral. Furthermore, the former rely mostly on pre-cueing by the affective stimulus, while the latter deploy flanker, dot-probe, or visual search tasks. Both lines of research show that in populations with some form of social pathology (high anxiety, chronic depression, etc.) affective stimuli do capture attention automatically, apparently because they are task relevant, since, given their high anxiety levels, these stimuli are significant to people with such pathologies.

Be that as it may, it has no bearing on the problem of the CP of early vision by affective stimuli. If it is correct that the capture of attention by emotional stimuli is not automatic but context dependent, or if it is conditionally automatic within the appropriate context that is determined by the task demands, the allocation of attention is controlled by task relevance. This, however, occurs at about 170 ms. poststimulus and, thus, the control of attention by the affective stimulus occurs after that latency. Therefore, any attentional modulation of perceptual processing occurs after 170 ms., which means that it takes place during late vision and does not affect early vision. It follows that any cognitive states involved in the determination of task relevance of the affective stimulus do not affect early vision and, thus, do not threaten its CI.

6 Late Effects of Emotive States

The N170 is also modulated by emotional content, and this modulation occurs at about the same time that the amygdala starts processing the emotional content of facial expressions (Conty et al. 2012). EEG studies that manipulate attention and emotional facial expression orthogonally (Holmes et al. 2003) show that emotional effects start modulating face processing at the fusiform gyrus closely following the N170 face-specific component. Thus, the emotional modulation of the extrastriate cortex takes place prior to task-related attentional selection and prior to the full processing of faces in the cortex. This is also an indication that emotional effects enhance or inhibit the processes that lead to object recognition.

ERP results on affective processing also show an early posterior negativity (EPN) at about 200–300 ms for arousing vs. neutral pictures, which involves both fronto-central and temporo-occipital sites and is thought to index ‘motivated attention’. Motivated attention selects affectively arousing stimuli for further processing on the basis of perceptual features. Other findings show that the affective amplitude modulation persists for a prolonged period of time, which entails that emotionally arousing stimuli receive enhanced encoding even when they are task irrelevant (Olofsson et al. 2008). Around the same time (200–300 ms), stimulus valence has been shown to elicit a decreased N2 negativity (unpleasant compared to pleasant stimuli).

The negativity biases of ERP waveforms at these latencies may reflect rapid amygdala processing of aversive information and the transmission of this information to fronto-parietal areas, where it modulates the allocation of attention so that unpleasant stimuli may receive priority processing. Alternatively, they may reflect the functioning of an early selective attention mechanism that does not depend on valence categorization but on motivational relevance, and which facilitates the processing of stimuli with high motivational relevance (Shupp et al. 2004).

Emotional effects are found at long latencies as well (> 300 ms), probably reflecting the impact of emotional signals on the processing of sensory information in fronto-parietal areas. Both the P3 and the positive slow wave that follows it show elevated positivity under emotional modulation, and this positivity depends on the valence and the arousal level of the stimulus (valence influences P3b but not P3a, while arousal influences both).

In general, valence effects are found predominantly for early and middle-range ERP components, probably reflecting the role of the intrinsic emotional value of the stimulus in stimulus selection. Arousal effects, manifested in a positive shift in the ERP waveforms, are found for middle-range and late components and constitute the primary affective influence at these latencies (Olofsson et al. 2008). They probably reflect the allocation of processing resources to the selected stimuli.

7 Conclusion

I examined the evidence concerning the emotional effects on perceptual processing, concentrating on early vision, to address the issue of whether affective influences, if any, on early vision entail that early vision is CP. We examined one line of research that suggests that emotional stimuli affect the early visual cortex both directly, enhancing the early C1 ERP component through signals from the amygdala to the early visual areas, and indirectly, by facilitating exogenous attentional capture, an effect that is evidenced by the enhancement of the P1 ERP component, probably through signals from the amygdala to fronto-parietal areas involved in attentional control. We explained why these two sorts of effects do not necessarily mean that early vision is CP. The other line of research that we examined suggests that emotional stimuli affect attention only if they are task relevant. Since the task relevance of a stimulus is encoded about 170 ms. poststimulus, the emotional stimuli engage endogenous, cognitively driven attention. Therefore, the affective modulation of perceptual processing via endogenous attention occurs at latencies falling within late vision and does not affect early vision.

Notes

I would like to thank an anonymous reviewer for making this point.

References

Amaral, D.G., H. Behniea, and J.L. Kelly. 2003. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience 118: 1099–1120.

Andersen, S., M. Muller, and S. Hillyard. 2012. Tracking the allocation of attention in visual scenes with SSVEP. In Cognitive Neuroscience of Attention, ed. M.I. Posner. N.Y: Guilford Press.

Armony, J.L., and R.J. Dolan. 2002. Modulation of spatial attention by fear-conditioned stimuli: An event-related fMRI study. Neuropsychologia 40 (7): 817–826.

Ashley, V., P. Vuilleumier, and D. Swick. 2003. Effects of orbitofrontal lesions on the recognition of emotional faces expressions. Paper presented at the Cognitive Neuroscience Society Meeting.

Attar, C.H., S. Andersen, and M.M. Muller. 2010. Time course of affective bias in visual attention: Convergent evidence from steady-state visual evoked potentials and behavioral data. NeuroImage 53: 1326–1333.

Balcetis, E., and D. Dunning. 2010. Wishful seeing: Desired objects are seen as closer. Psychological Science 21: 147–152.

Barbey, A.K., R. Colom, J. Solomon, F. Krueger, C. Forbes, and J. Grafman. 2012. An integrative architecture for general intelligence and executive function revealed by lesion mapping. Brain 135 (4): 1154–1164.

Bar, M. 2009. The proactive brain: Memory for predictions. Philosophical Transactions of the Royal Society, Biology 364: 1235–1243.

Barrett, L.F. 2017. How Emotions are Made: The Secret Life of the Brain. NY: Houghton Mifflin Harcourt.

Barrett, L.F., and M. Bar. 2009. See it with feeling: Affective predictions during object perception. Philosophical Transactions of the Royal Society 364: 1325–1334.

Bishop, S.J., R. Jenkins, and A.D. Lawrence. 2007. Neural processing of fearful faces: Effects of anxiety are gated by perceptual capacity limitations. Cerebral Cortex 17: 1595–1603.

Block, N. 2007. Consciousness, accessibility, and the mesh between Psychology and Neuroscience. Behavioral and Brain Sciences 30: 481–548.

Brosch, T., G. Pourtois, D. Sander, and P. Vuilleumier. 2011. Additive effects of emotional, endogenous, and exogenous attention: Behavioral and electrophysiological evidence. Neuropsychologia 49 (7): 1779–1787.

Brown, Ch., W. El-Deredy, and I. Blanchette. 2010. Attentional modulation of visual-evoked potentials by threat: Investigating the effect of evolutionary relevance. Brain and Cognition 74: 281–287.

Burge, T. 2010. Origins of Objectivity. Oxford: Clarendon Press.

Carrasco, M. 2011. Visual attention: The past 25 years. Vision Research 51: 1484–1525.

Clark, A. 2013. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences 36: 181–204.

Conty, L., G. Dezecache, L. Hugueville, and J. Grezes. 2012. Early binding of gaze, gesture, and emotion: Neural time course and correlates. NeuroImage 28: 4531–4539.

Cooper, R.M., and S.H. Langton. 2006. Attentional bias to angry faces using the dot-probe task? It depends when you look for it. Behaviour Research and Therapy 44 (9): 1321–1329.

Dehaene, S., J.-P. Changeux, L. Naccache, J. Sackur, and C. Sergent. 2006. Conscious, preconscious, and subliminal processing: A testable taxonomy. Trends in Cognitive Sciences 10 (5): 204–211.

Di Russo, F., A. Martinez, and S.A. Hillyard. 2003. Source analysis of event-related cortical activity during visuo-spatial attention. Cerebral Cortex 13: 486–499.

Domínguez-Borras, J., and P. Vuilleumier. 2013. Affective biases in attention and perception. In Handbook of Affective Neuroscience, ed. J. Armony and P. Vuilleumier, 331–356. Cambridge: Cambridge University Press.

Dretske, F. 2006. Perception without awareness. In Perceptual Experience, ed. T. Gendler and J. Hawthorne. Oxford: Oxford University Press.

Dretske, F. 1993. Conscious experience. Mind 102 (406): 263–283. Reprinted in Vision and Mind, ed. Noë and Thompson, 2002. Cambridge, MA: The MIT Press.

Firestone, Ch., and B.J. Scholl. 2016. Cognition does not affect perception: Evaluating the evidence for ‘top-down’ effects. Behavioral and Brain Sciences. https://doi.org/10.1017/S0140525X15000965.

Freese, J.L., and D.G. Amaral. 2006. Synaptic organization of projections from the amygdala to visual cortical areas TE and V1 in the macaque monkey. Journal of Comparative Neurology 496 (5): 655–667.

Friston, K. 2010. The free-energy principle: A unified brain theory? Nature Reviews Neuroscience 11: 127–138.

Gschwind, M., G. Pourtois, S. Schwartz, D. Van De Ville, and P. Vuilleumier. 2012. White matter connectivity between face-responsive regions in the human brain. Cerebral Cortex 22 (7): 1564–1576.

Hodgson, D. 2008. The visual dynamics of Upper Palaeolithic art. Cambridge Archaeological Journal 18: 341–353.

Holmes, A., P. Vuilleumier, and M. Eimer. 2003. The processing of emotional facial expression is gated by spatial attention: Evidence from event-related brain potentials. Cognitive Brain Research 16: 174–184.

Horstmann, G., K. Borgstedt, and M. Heumann. 2006. Flanker effects with faces may depend on perceptual as well as emotional differences. Emotion 6 (1): 28–39.

Huang, S.L., Y.C. Chang, and Y.J. Chen. 2011. Task-irrelevant angry faces capture attention in visual search while modulated by resources. Emotion 11 (3): 544–552.

Isbell, L.A. 2006. Snakes as agents of evolutionary change in primate brains. Journal of Human Evolution 51: 1–35.

Johnson, J.S., and B.A. Olshausen. 2005. The earliest EEG signatures of object recognition in a cued-target task are postsensory. Journal of Vision 5: 299–312.

Jung, R.E., and R.J. Haier. 2007. The parieto-frontal integration theory (P-Fit) of Intelligence: Converging neuroimaging evidence. Behavioral and Brain Sciences 30: 135–187.

Juth, P., D. Lundqvist, A. Karlsson, and A. Ohman. 2005. Looking for foes and friends: Perceptual and emotional factors when finding a face in the crowd. Emotion 5 (4): 379–395.

Kawasaki, H., O. Kaufman, H. Damasio, A.R. Damasio, M. Granner, H. Bakken, T. Hori, M.A. Howard, and R. Adolphs. 2001. Single-neuron responses to emotional visual stimuli recorded in human ventral prefrontal cortex. Nature Neuroscience 4: 15–16.

Kihara, K., and Y. Takeda. 2010. Time course of the integration of spatial frequency-based information in natural scenes. Vision Research 50: 2158–2162.

Kim, D., S. Lokey, and S. Ling. 2017. Elevated arousal levels enhance contrast perception. Journal of Vision 17 (14): 1–10.

Krolak-Salmon, P., M.A. Henaff, A. Vighetto, O. Bertrand, and F. Mauguiere. 2004. Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: A depth electrode ERP study in human. Neuron 42: 665–676.

Lamme, V.A.F. 2003. Why visual attention and awareness are different. Trends in Cognitive Sciences 7 (1): 12–18.

Lang, P.J., M.M. Bradley, J.R. Fitzsimmons, B.N. Cuthbert, J.D. Scott, B. Moulder, and V. Nangia. 1998. Emotional arousal and activation of the visual cortex: An fMRI analysis. Psychophysiology 35: 199–210.

Lavie, N. 2005. Distracted and confused? Selective attention under load. Trends in Cognitive Sciences 9: 75–82.

Lebel, C., and S. Leoni. 2018. The development of brain white matter microstructure. NeuroImage 182 (15): 207–218.

Leppanen, J.M., and C.A. Nelson. 2009. Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience 10: 37–47.

Mogg, K., and B.P. Bradley. 2002. Selective orienting of attention to masked threat faces in social anxiety. Behaviour Research and Therapy 40 (12): 1403–1414.

Muller, M.M., S.K. Andersen, and A. Keil. 2008. Time course of competition for visual processing resources between emotional pictures and foreground task. Cerebral Cortex 18: 1892–1899.

Neubauer, S., J.J. Hublin, and P. Gunz. 2018. The evolution of modern brain shape. Science Advances 4 (1). https://doi.org/10.1126/sciadv.aao5691.

Olofsson, J.K., S. Nordin, H. Sequeira, and J. Polich. 2008. Affective picture processing: An integrative review of ERP findings. Biological Psychology 77: 247–265.

Oya, H., H. Kawasaki, A. Matthew, M.A. Howard, and R. Adolphs. 2002. Electrophysiological responses in the human amygdala discriminate emotion categories of complex visual stimuli. Journal of Neuroscience 22 (21): 9502–9512.

Pessoa, L., and R. Adolphs. 2010. Emotion processing and the amygdala: From a “low road” to “many roads” of evaluating biological significance. Nature Reviews Neuroscience 11: 773–783.

Phelps, E.A. 2006. Emotion and cognition. Annual Review of Psychology 57: 27–73.

Phelps, E.A., and J.E. LeDoux. 2005. Contributions of the amygdala to emotion processing: From animal models to human behavior. Neuron 48: 175–187.

Phelps, E.A., S. Ling, and M. Carrasco. 2006. Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychological Science 17 (4): 292–299.

Puls, S., and K. Rothermund. 2018. Attending to emotional expressions: No evidence for automatic capture in the dot-probe task. Cognition and Emotion 32 (3): 450–463.

Pourtois, G., D. Grandjean, D. Sander, and P. Vuilleumier. 2004. Electrophysiological correlates of spatial orienting towards fearful faces. Cerebral Cortex 14 (6): 619–633.

Pourtois, G., G. Thut, R. Grave de Peralta, Ch. Michel, and P. Vuilleumier. 2005. Two electrophysiological stages of spatial orienting towards fearful faces: Early temporo-parietal activation preceding gain control in extrastriate visual cortex. NeuroImage 26: 149–163.