Abstract

In a scenario of growing polarization between the promises and dangers that surround artificial intelligence (AI), how can responsible AI and robotics be introduced into healthcare? In this paper, we develop an ethical–political approach for introducing democratic mechanisms into technological development, which we call “Caring in the In-Between”. Focusing on the multiple possibilities for action that emerge in the realm of uncertainty, we propose an ethical and responsible framework focused on care actions in between fears and hopes. Drawing on the theoretical perspective of Science and Technology Studies and on empirical research, “Caring in the In-Between” is based on three movements. The first is a change of focus from the world of promises and dangers to the world of uncertainties. The second is a conceptual shift from assuming a relationship with robotics based on Human–Robot Interaction to one focused on the network in which the robot is embedded (the “Robot Embedded in a Network”). The last is an ethical shift from a general normative framework to a discussion of the context of use. Based on these suggestions, “Caring in the In-Between” implies institutional challenges, as well as new practices in healthcare systems. It is articulated around three simultaneous processes, each related to practical actions in the “in-between” dimensions considered: monitoring relations and caring processes, through public engagement and institutional changes; including the concerns and priorities of stakeholders, by organizing participatory processes and alternative forms of representation; and making fears and hopes commensurable, through the choice of progressive and reversible actions.


1 Introduction

In the landscape of imaginaries that surround artificial intelligence (AI) and robotics in healthcare, there is a prominent debate between utopian and dystopian scenarios, whereby social and ethical discussions are polarized between the promises and the dangers of AI. This paper is grounded in a realm far removed from the so-called ‘abyss’ between utopias and dystopias. It is instead situated in the terrain that lies between fears and hopes, where multiple possibilities are experienced (de Sousa Santos 2016). Using the theoretical approach of Science and Technology Studies (STS), we propose a set of actions in these in-between spaces to introduce responsible AI and robotics into healthcare. However, we do not propose just any kind of action, but actions based on the ethics of care. We do so by exploring a particular case that could exemplify some of the common dynamics of medical AI, namely that of care robots (i.e., socially assistive robots) in a children’s hospital.

1.1 The narrative of promises and dangers in AI

There is a growing narrative in public and academic domains which assumes that the solution to the main clinical, economic, and general well-being problems facing healthcare systems around the world will come from AI (Morley et al. 2020; Topol 2019). Medical AI offers plenty of promise: early diagnosis using image analysis in radiology, pathology, and dermatology, with excellent diagnostic speed and accuracy when working in parallel with medical experts; personalized treatments that optimize the care trajectory of chronically ill patients; precision therapies for complex illnesses; reduction of medical errors; and greater enrolment in clinical trials (Miller and Brown 2018). Leading healthcare and computer scientists have argued that AI will help healthcare systems achieve greater efficiency in two ways. On the one hand, it will improve the timeframe and accuracy of patient diagnosis and treatment and, when possible, help with early prevention. On the other hand, it will streamline the workload and use staff more efficiently (Kerasidou 2020). Robotics is considered to be a specific sub-category of medical AI (Shoham et al. 2018). In the age of so-called New Robotics, a new family of robots devoted to healthcare applications has become particularly prolific (Schaal 2007). This new era of robotics enables safe robot interactions with humans, easy programming and adjustment to particular needs, and the possibility for robots to function as mobile, interactive information systems in hospitals, nursing homes and other healthcare settings. Because they can interact with humans, these robots open up an interesting scenario for working on the emotional and social dimensions of health.

The impetus of AI and robotics science, and of deep learning in particular, facilitated by so-called big data together with greatly enhanced computing power and cloud storage, enables greater automation in a variety of sectors. Automation has long affected employment, productivity and the economic performance of companies and nations, but the introduction of AI systems into healthcare environments has had an unprecedented impact on the automation of care (Vallès-Peris and Domènech 2020; Sampath and Khargonekar 2018). Thus, alongside the narrative of the promises of AI, these innovative technologies are also the source of many of the uncertainties of our time.

The field is certainly high on promise and relatively low on data and proof. Moreover, many problems have been associated directly with AI and robotics, such as social bias, privacy and security, and lack of transparency (Topol 2019). The possibility of machines performing activities traditionally associated with the exclusively human condition, such as abstract problem-solving, perceptual recognition, social interaction and natural language (Vallor and Bekey 2017), generates many dangers. These dangers relate to a hypothetical scenario of future AI functionalities rather than to real, current ones. They are associated with the “supposed” possibility of humans being replaced by technological systems, or of care for dependent and ill persons becoming “dehumanised” (Vallès-Peris et al. 2021a).

Although the rapid development of AI and robotics is undeniable, there is a gap between the ethical concerns raised by its supposed functionalities and the real possibilities of contemporary AI (Hagendorff 2020). If the debate focuses only on a utopian or dystopian development of robotics, some issues are exaggerated. Meanwhile, the contexts, situations and controversies surrounding artefacts that do not correspond to this promised future remain hard to identify. At the same time, it is difficult to identify problems and generate an ethical debate around robots that are already in use, which do not integrate the complex functionalities and capabilities expected of highly intelligent autonomous robots.

As a proposal to go beyond this scenario of promises and dangers, we develop an approach to introducing responsible AI and robotics into healthcare based on three movements. (a) The first is a change of focus from the world of promises and dangers to the world of uncertainties. (b) The second is a conceptual shift from assuming a relationship with robotics based on Human–Robot Interaction (HRI) to one focused on the network in which the robot is embedded (the “Robot Embedded in a Network”, REN). (c) The last is an ethical shift from a general normative framework to a discussion of the context of use, grounded in the ethics of care. Based on these suggestions, we define a new ethical–political proposal for introducing responsible AI and robotics, which we call “Caring in the In-Between”.

To develop our proposal, this paper is organized into seven sections. Sect. 2 contextualizes our research on care robots. We then develop the three movements on which our proposal is based: Sect. 3 addresses the movement from the world of promises and dangers to the world of uncertainties; Sect. 4 explores the movement from HRI to a REN relation; and Sect. 5 explains the movement from a general ethical framework to the context of use, drawing on the ethics of care. In Sect. 6 we outline our ethical–political proposal for introducing responsible robotics and AI into healthcare. Finally, we summarize the main conclusions.

2 Empirical research on care robots in a children’s hospital

Our proposal for responsible AI and robotics is based on a series of empirical studies that we carried out on the introduction of care robots to a children’s hospital in Barcelona. In children’s health settings, robots for care are typically considered to be those that are used in specific therapeutic interventions, e.g., with autistic children (Heerink et al 2016) or in rehabilitation processes (Meyer-Heim and van Hedel 2013). Care robots are also those involved in the extensive line of research and application of robots to reduce pain and anxiety when children are subjected to some type of intervention (Crossman et al 2018) or simply to make hospital stays more pleasant (Díaz-Boladeras et al 2016). In engineering and computer science, the care robots to which we refer in this paper are usually included in the field of so-called “socially assistive robotics”. In general terms, this field is focused on providing artificially intelligent robotic systems to aid end-users with special physical or cognitive needs in their daily activities (Pareto Boada et al. 2021).

Since 2015, we have been involved in research carried out by a children’s hospital in Barcelona to design and introduce care robots. As social scientists working with an interdisciplinary team of physicians, nurses and engineers, our role was to identify the ethical and social implications of introducing such robots to the hospital. Throughout the process, we conducted three empirical research projects. They shared the common objective of exploring the fears and hopes of the different actors who, in one way or another, had established or would have to establish a relationship with a robot for care.

Some details of these research projects are the following:

- A participatory process with children to design a robot for care for a children’s hospital. For three months, we conducted a participatory process in a school with 60 six-year-old children. The children were organized into two large groups of 30, and 12 sessions were held with each group (24 sessions in total). The participatory process employed design-thinking techniques.

- A Vision-Assessment process to identify the risks and benefits associated with future visions of robots for care among the different categories of people involved in care at a children’s hospital. We organized three focus groups with nurses, with a total of 22 participants, and three focus groups with hospital volunteers, also with 22 participants. We also held one focus group with 10 relatives of hospitalized children, after first holding a workshop with them on robotic technologies in care. Finally, we conducted three interviews with physicians who were heads of units at the hospital.

- A set of interviews with roboticists to identify their concerns regarding robots for care. Eleven face-to-face semi-structured interviews were conducted with roboticists working in the field of care robots.

The frame of reference for the participants and for the fieldwork carried out during the investigations was the set of robots being implemented in that hospital, whether already introduced or in pilot or prospective phases. These included pet robots used to reduce anxiety in pre-operative and diagnostic tests, as well as to make the hospital stay more pleasant for children with long admissions; therapy robots for children with autism; and telecommunication robotic systems, used for diagnostic visits by doctors or to help hospitalised children communicate with their school. This does not mean that our proposal refers only to these types of robot, but that it is informed by a study of the implementation of these artefacts. Because our study was carried out on robots used with hospitalised children, we could more easily identify particularly sensitive issues and highly delicate situations.

We analysed the collected data using qualitative techniques, i.e. thematic analysis for the interviews and focus groups (Clarke and Braun 2014) and thick description for the participatory process (Ponterotto 2006). However, the goal of this paper is not to present the analysis and results of these three research projects, but to build a responsible approach to AI and robotics that embraces the practices and concerns of the different groups of actors identified in those projects.

3 From the world of promises and dangers to the world of uncertainties

As explained in the introduction, the social and ethical debates surrounding AI and robotics are often articulated around utopian or dystopian scenarios (Shatzer 2013), or focused on the identification of risks and benefits (Verbeek 2006), which contributes to a growing polarization of promises and dangers. As sociologist de Sousa Santos (2016) warns, in our technologically modified world, fear and hope are collapsing in the face of the growing polarization of hope without fear (the health innovation sector and markets with exponential benefits) and fear without hope (anyone who believes that technological progress cannot be stopped and that we will need to adapt to whatever changes may come about). In this situation, uncertainties become abysmal, and leave no room for action.

Inspired by Spinoza's notions, de Sousa Santos (2016) examines the unequal epistemological and experiential distribution of fear and hope. Beyond abysmal uncertainty, uncertainty is also the experience of the possibilities that arise from the multiple relationships that can exist between fear and hope. Since different groups and actors relate to fear and hope in different ways, the types of uncertainty they experience also differ. Fear and hope are not equally distributed among all social groups or historical epochs. With regard to technologies, fear and hope are defined by parameters that tend to benefit the social groups with greater access to scientific knowledge and technology. For these groups, the belief in scientific progress and innovation is strong enough to neutralise any fear regarding the limitations of current knowledge. For those with less access to scientific and technological knowledge, this situation is experienced as an inferiority that generates uncertainty. For them, uncertainty stems from their place in a world that is defined and legislated by powerful and alien knowledge that affects them and over which they have little or no control. This knowledge is produced about them and eventually against them, but never with them (de Sousa Santos 2016).

In the dreamscape of promises and dangers that surround medical AI, we defend the need for ethical and social discussion to move away from a speculative scenario that posits an abyss between utopias and dystopias. Instead, we situate ourselves in the terrain that lies between fears and hopes, where multiple possibilities arise (de Sousa Santos 2016). In this terrain, uncertainty is situated and negotiated, embedded in the actors that participate in healthcare relations. We aim to develop more responsible AI in healthcare, oriented towards social needs and guaranteeing individual and collective well-being. To that end, we propose a focus on care (caring as action) in the in-between spaces opened up by a particular artefact (i.e., robots for care in paediatric hospitals).

The literature on ethics and robots primarily addresses the issues that the community of scholars working in this field considers important. The opinions of other stakeholders, such as robotics researchers, health professionals and patients, are not directly discussed. In the public debate, questions about whether humans are replaceable by robots or whether robots should have rights seem to take precedence over the more real, current challenges that could arise from their use in specific applications (van der Plas et al. 2010). This “a priori” philosophical approach has some limitations (Stahl and Coeckelbergh 2016). These include the difficulty of establishing a normative framework to guide responses to everyday problems that may arise in hospitals or other health settings when care robots are introduced.

Our proposal to go beyond a discussion centred on the promises of AI is based on an analysis of how fears and hopes are articulated and negotiated by different actors. While looking into the controversies that the various actors involved in care processes with robots have to deal with, we came to study the practices and values of the different actors that participate in healthcare relations in a children’s hospital. Callon et al. (2009) maintain that controversies over the use of certain technologies create uncertainty and bring about unforeseen concerns. If we wish to enrich the debate around technologies, rather than simply seeking consensus or general principles, we need to call attention to the value of collective discussion. As observed by Epstein (1995), the participation of non-specialists in the development of knowledge that actually concerns them may change how problems are formulated and how research results are disseminated and implemented. Following this view, what we try to do here with regard to care robots is to highlight the importance of collectively discussing the controversies surrounding medical AI. Such discussion can enrich the formulation of problems and help propose alternative frameworks.

The pace of innovation in robotics and AI is accelerating and outstripping our capacity to anticipate its consequences and influences on our lives. The responsible introduction of these technologies into healthcare is therefore situated in the realm of uncertainty that lies between fears and hopes. In such contexts, where the impact of technologies is uncertain, Callon et al. (2009) call for precaution with regard to the potential harm, impact or causal relation of a technological innovation. The action that best represents precaution is so-called “measured action”: a progressive action guided by feedback and constant debate that considers its own consequences. “Measured action implies an active, open, contingent and revisable approach, exactly the opposite of a clear final decision. And then, this approach rests on a deepening of knowledge, but not only of the knowledge provided by the scientific disciplines of isolated research. The proportionality of actions for society, acceptability and economic cost also have their place in deliberation” (Callon et al. 2009: 210).

This position leads us away from the realm of abysmal uncertainties between utopian promises and dystopian dangers. It leads us towards uncertainty about desired futures, about what a care robot is, about the risk of introducing such devices into children’s care, and so on. In our proposal, uncertainty and precaution are the most relevant characteristics of AI and robotics, and are thus a privileged space for the responsible introduction of these technologies into healthcare. This idea is grounded in an STS theoretical background and in a particular way of understanding robots. It also rests on the centrality of the ethics of care when considering responsible technologies. In the following sections, we develop each of these issues.

4 From human–robot interaction (HRI) to a robot embedded in a network (REN)

Care robots are used to perform a number of specific tasks, such as facilitating the relationship between patients and places outside the hospital or reducing distress in pre-operative care. They also modify the ways in which healthcare staff perform certain actions, such as measuring vital signs or performing detailed interventions. When thinking about and designing a robot’s agency in medical settings, as well as its ability to interact with children, it is relevant to consider how artefacts or devices are embedded in a network of caring relationships that involves several actors (López-Gómez 2015). Children’s well-being is defined not only by the robot’s ability to interact with them, but also by its ability to interact with the whole social system of care relationships. However, in engineering and the related ethical debates, the relationship between children and AI robots is often conceptualized through the notion of Children-Robot Interaction (CHRI). This is the children’s equivalent of Human–Robot Interaction (HRI), and it describes two isolated entities interacting with each other: a human and a robot.

Most STS approaches, such as Actor Network Theory and the social construction of technology, postulate a more or less open ontological universe in which it is difficult to establish rigid boundaries between the social, the human, the natural and the technological (Karakayali 2015). Once nature and society are no longer treated as separate entities, a new entity emerges: a heterogeneous network (Callon and Latour 1992). In this view, any technological innovation is explained by its relational and contextual nature, i.e., how it makes sense in its network of relationships (Domènech and Tirado 2009). Therefore, a technological innovation is not only an artefact, but a whole network of devices, processes and actors (Latour 1999). From this perspective, care robots are a conglomerate of material, social and semiotic relations in which technical, scientific, political, economic, social and ethical considerations are intimately entwined within a single actor (Latour 1999). This idea of heterogeneity can be accompanied by relational materialism, according to which elements do not exist by virtue of any essence but are constituted by the networks of which they are part. This approach can be carried to its logical conclusion by assuming that objects, entities or actors are nodes in a network. These nodes are also constituted interactively and do not exist outside their interactions. Artefacts, people, institutions, protocols: everything is an effect or a product (Law and Mol 1995).

Some authors use the concept of interpretive flexibility to classify robots for care. According to this concept, a robot can be classified by its context of use, the function for which it is used, and the user (Howcroft et al. 2004). This notion highlights the impossibility of separating the definition of technical problems from the socio-economic framework with which they are associated (Bijker 2009). Therefore, a robot can be called a care robot when it is used in a hospital to reduce children’s anxiety in preoperative spaces. Yet the same robot can be classified as an entertainment robot when engineering students use it to compete in international robot soccer leagues. Likewise, a robot that nursing staff use to lift patients with little or no mobility can be classified as a robot for care, but when factory workers use it to lift heavy objects, it can be considered an industrial robot (van Wynsberghe 2015).

When technologies are used, they always help to shape the context in which they fulfil their function. They help to shape human actions and perceptions, and create new practices and ways of living. Latour (1999) calls this phenomenon “technological mediation”: technologies mediate the experiences and practices of their users. Such mediations have at least as much moral relevance as technological risks and disaster prevention (Ihde 1999; Verbeek 2006). Technologies help to shape the quality of our lives and, more importantly, they help to shape our moral actions and decisions. Robots for care can help medical staff in healthcare settings, for example, by monitoring vital signs and thus preventing certain situations in critical paediatric patients, or by personalizing and adjusting therapies with autistic children. However, some studies have warned us that the introduction of telemedicine devices (technologies that are often built into AI robots) has changed healthcare practices and knowledge (Mort et al 2003). The heterogeneity of care, as well as robot heterogeneity, implies that the practices and values of care are transformed when new nodes are introduced to healthcare networks. When a robot is introduced to a network of care relations in a hospital, it and the network are transformed. Technologies enable certain relationships between human beings and the world that would not otherwise be possible. However, technologies are not neutral intermediaries, but active mediators that contribute to the formation of human perceptions and interpretations of reality (Verbeek 2006). Robots mediate the way we understand and practice care processes, just as the robot is reconstructed from the assemblage of social relations in which it participates (Law and Mol 1995).

Verbeek (2006) argues that the forms of mediation performed in the use of technologies are pre-inscribed in the artefact, which he calls materialized morality. Following this idea, we analysed how children performed when designing a robot for care (Vallès-Peris et al. 2018). Children’s representations of well-being in healthcare settings typically include the presence of their relatives or other people. They represent themselves alone while hospitalized or sick as sad, whereas being surrounded by relatives, siblings or medical personnel is represented as happy. Prospective interactions with a robot that produce a feeling of well-being are imagined within a network of other people. The same holds for those responsible for care in the hospital. In this case, the focus groups of nurses and volunteers evaluated the risks and benefits of a dinosaur-shaped robot introduced to the hospital in a pilot phase. Their assessment was based on the robot’s capacity to be integrated into the relationship network that accompanies the child during his or her hospitalization.

Going beyond the conceptualization of human–robot relationships in a binary model represented by the HRI, the interest of the Robot Embedded in a Network approach (REN) lies in the uncertainties and controversies that appear in daily care in children’s hospitals, such as: how tasks are distributed between nurses and robots, for example, if the nurse is accompanied by a robot that takes the patient's vital signs; how a hospital integrates the psychology staff’s opinions when deciding whether to take part in a pilot program to introduce this type of device to therapies for children with autism; how nurses take advantage of the presence of a robot to entertain a child when trying to insert an IV (intravenous line); how parents and doctors can assess whether it is necessary to collect facial expression data to monitor a child when in the ICU, etc. Knowing how to manage and solve these uncertainties requires alternative ethical frameworks that go beyond “big” philosophical issues about robotics for care; issues that, in turn, are based on “hypothetical” developments of robots for care, not on “real” functionalities and tasks that they can perform nowadays.

5 From a general ethical framework to the context of use, using the ethics of care

This conceptualization of technological innovation raises questions about the traditional ethical approach to AI. It also delves deeper into relational theory in bioethics, extended by a more-than-human approach (Lupton 2020). Relational theory reshapes the notion of autonomy by emphasizing patients’ social and contextual circumstances. It highlights that people are always part of social networks and that all ethical considerations have to take such networks of relationships into account (Sherwin and Stockdale 2017). Deborah Lupton (2020) proposes considering the role of technological artefacts in such networks to better understand digital health and its bioethical implications. In her view, this more-than-human analysis highlights the complexities involved when robots for care or other digital health technologies are introduced into medical settings (Lupton 2020). Along the same lines, we situate ethical and social controversies around care robotics in the network made up of a diverse range of actors: technology designers, healthcare professionals, relatives, patients and robots. Our focus is therefore not on AI technologies as such, but on identifying the relevant variables that shape healthcare relationships when AI technologies are introduced.

To develop an ethical framework, we combine the STS tradition with the ethics of care. Among the different approaches in this field, our proposal builds on the perspective developed by Joan C. Tronto, which seeks to understand care from the standpoint of political philosophy. The starting point of Tronto’s approach is that the human is defined at its core by its relational involvement with others. In this network of relationships, each individual has to reconcile different forms of care responsibility (Vallès-Peris and Domènech 2020). In these relationships, the morality of care is bound to concrete situations rather than being abstract and based on principles (Cockburn 2005). On this basis, the analysis of care processes provides a useful guide for thinking about how we perform a particular care task and about its ethical dimensions (Tronto 1998). Likewise, not all collectives face uncertainty under the same conditions, and the ethics of care engages with those who have difficulty voicing their concerns (Puig de la Bellacasa 2011). In medical AI and robotics, this also means identifying the care issues that prevail in technological development, the power relations that artefacts generate and the relations to which they contribute.

Care experiences and practices can be identified, researched and understood concretely and empirically. However, “care” is ambivalent in its meaning and ontology (Puig de la Bellacasa 2017). Acknowledging this complexity and diversity of understanding, Fisher and Tronto (1990) propose viewing care from a heterogeneous perspective, inseparable from the economic, political, symbolic and material considerations that shape it. For them, care includes: the practices of care, usually considered domestic work; the affections and emotional meanings involved in care; and the organisational and political dimension of managing and regulating everything that sustains care relationships. In STS focused on health technologies, this perspective has been taken up in the notion of an “empirical ethics of care” (Vallès-Peris 2021a). Under this notion, analysing care relationships implies rejecting the logic according to which there is prior, true knowledge about how care should be provided. Thus, the empirical ethics of care revolves around the idea that good care is located in the practices of people who act to achieve it, with the help of processes, protocols, routines or machines (Willems and Pols 2010).

Following the idea of heterogeneity, the emergence of any artefact is bound up with the diverse biases, values, and political and economic relations present during its creation and design. These conditions are inscribed in that artefact (Bijker 2009; Callon 1998). Hence, the creation and design of care robots cannot be dissociated from the neoliberal logic in which we live. Nor can they be dissociated from the low social and political value attributed to care, or from the sexual division of labor that organizes care unequally between women and men (Tronto 2018). In a similar vein, Maibaum et al. (2021) propose that robotics should be approached in terms of its political reality. It is commonplace to say that we are living through a care crisis. This crisis is linked to the pressure caused by the shortage of nurses, teachers, carers and domestic staff, and is aggravated by demographic change and population ageing (Vallès-Peris et al. 2021b). In the neoliberal model, the solution to the so-called care crisis is articulated through the market. After years of state downsizing promoted precisely by this neoliberal model, the proposed solution is that the market should be set up to meet human needs (Tronto 2018).

In this context, the growth of robotics in healthcare cannot be separated from its powerful economic impact on the technological innovation market. Although the care crisis is one of the arguments most often used to explain the need to develop care robotics (Maibaum et al. 2021), the issue of care itself tends to be virtually absent from the debates surrounding it, with some exceptions such as the proposals by van Wynsberghe (2013). This absence can be seen, for example, in the various guidelines and regulatory mechanisms for AI systems, including robots, which pay scant attention to the tensions that might arise between commercial or business interests and the needs and organization of care (Hagendorff 2020).

From this approach, the ethical debate surrounding AI and robotics cannot focus solely on major philosophical issues, but must also consider aspects linked to healthcare management and everyday practices. Thus, in the case of care robots, the interest should not lie only in questions such as whether it is appropriate to replace humans with robots (Sharkey 2008), whether it is desirable to establish affective bonds with them (Sharkey and Sharkey 2012; Sparrow and Sparrow 2006), or who is liable in the event of harm or damage (Matsuzaki and Lindemann 2016). A debate grounded in the ethics of care also seeks to identify the practices, fears and hopes that shape care relationships when robots are introduced into specific contexts and situations: for example, how a nurse in a pediatric ward gets the children in the different rooms to come out into the corridor to feed the pet robot, or how it might be decided at a meeting that, from now on, therapies with autistic children will use robots donated by a large robotics company.

From a REN approach, we turn away from the standard discussion of utopian or dystopian scenarios based on the risks and benefits of promised care robots, a discussion that seems aimed at building trust and anticipating objections and reticence (Nordmann and Rip 2009). The possibilities for mediation of care robots are configured by the technical elements of a concrete AI device in its interaction with a network of healthcare relations. It is thus within that network of social relations that the robot mediates: specific mediations with a particular device within a particular social context (Feng and Feenberg 2008). Andrew Feenberg (1999) illustrates this idea in his instrumentalization theory. The technical dispositions of a robot for care determine the conditions of its functional possibilities in the network, and these stem from its design and production history. However, these features are reoriented when the robot is integrated into a given environment. Robots have their own (instrumental) rationality, but when they enter a children’s hospital, what they are and the mediations they make possible depend on their use in that hospital, in a particular care unit with specific protocols and actors (Feenberg 2010).

Next to the door of the operating theatre, a mother strokes a robot pet that her child was holding 10 min earlier, and it calms her while her child is being operated on; at that moment, the robot becomes a robot for care for the mother. In that situation, controversies and ethical discussions arise about, for example, the dangers of establishing emotional ties with artificial objects. It is in the specific daily practices of the different actors involved in healthcare relations in a hospital (patients, relatives, medical staff, volunteers, protocols, units, tests, etc.) that problems, and the rules for solving them, emerge. The point of reference of our research is therefore not the robot, but the relationships in which it participates. No single actor is privileged in the analysis of and reflection on ethical and social controversies; rather, there are the various actors involved in healthcare relations in a children’s hospital.

It is in the collective network of care relations in a particular environment that we situate research on social and ethical issues in medical AI, approaching the topic from a notion close to the empirical ethics of care, in which theory about “good care” is located in practices rather than merely underlying them or guiding actions (Willems and Pols 2010). Consequently, the results of this type of approach are not prescriptive solutions. They do not answer questions such as whether or not to install a robot with a webcam to monitor paediatric patients in the ICU at all times; rather, they offer suggestive proposals in relation to specific problems. Continuing with the example: what are the arguments for and against installing a webcam; what are the views of relatives, patients, medical staff, innovation departments, etc.; and how can the robot be adjusted to fit the routines, needs and concerns of this context? (Vallès-Peris and Domènech 2020).

6 Caring in the in-between

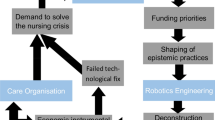

Derived from the three movements defined above, the heuristics of research on ethical and social issues around care robots can be summarized in three assumptions: (1) if a robot is a network of actors and processes making up an assembly, then identifying those actors and processes, and understanding their relations and forms of negotiation, becomes the starting point of any ethical reflection; (2) since the robot is not only the artefact but is inscribed within a whole network of care, the ethical implications are specific to each context of application, that is, to the way in which the robot changes or modifies the articulation of specific care relationships; (3) therefore, a responsible framework guiding the introduction of robots to hospitals or other healthcare settings must be closely linked to these contexts and to their ethical principles and values.

As explained in the previous sections, if our conception of robots from the ethics of care assumes heterogeneity, the focus of ethics shifts to the healthcare relationships that are interactively constituted when a robot for care is introduced, and debates on how to introduce responsible robots come to revolve around REN. From a REN perspective, the analysis focuses on the network of care relationships in which the robot participates, not on the specific functionalities or characteristics of a robot taken as an isolated entity, nor on the dyadic interaction between individual entities. The network of relationships between multiple agents (the robot, the child, family members, hospital protocols, healthcare staff, etc.) constitutes the only framework for discussion. It is within this framework of particular, everyday practices, imaginaries, narratives, etc., that problems, and the rules for solving them, emerge.

From this approach, it is possible, for example, to analyze how the introduction of certain robotic devices can change the care relationship between the hospital and the patient, or how the diagnostic and treatment skills of healthcare staff are transformed (Vallès-Peris et al. 2021a). Focusing on REN, we can identify the elements considered necessary to ensure that introducing a robot does not undermine the ethical values that shape medical practice and care relationships in a particular context. Thus, introducing a robot to make a remote diagnostic visit to a child in hospital while the doctor is elsewhere is not the same as using it to perform the same visit while the patient is at home. Similarly, using the same robot in the hospital for a follow-up visit to a pediatric patient admitted to the trauma unit is not the same as using it for a patient admitted to the mental health unit.

6.1 An ethical–political proposal to ensure responsible introduction of AI and robotics to healthcare

Focusing on the multiple possibilities for action that emerge in the realm of uncertainty, we propose an ethical and responsible framework focused on care actions in between fears and hopes. To this end, we take up the notion of measured action and approach it from an ethics of care. According to Callon et al. (2009), measured action occurs through three interrelated dimensions: (1) a surveillance system: precaution is only possible when formalised socio-technical devices are used to collect information, enabling a move from surveillance to alarm; (2) the deepening of knowledge through the exploration and identification of excesses, since precaution requires a preliminary assessment of the associated risks and dangers in order to evaluate their severity; and (3) the choice of temporary measures, which must be adapted to each situation to which they are applied, with guaranteed follow-up and updating in response to the controversies that constantly arise. In each of these three dimensions, there may be specific actors, with particular modes of action and different levels of responsibility. Measured action replaces clear and forceful decisions with a series of “small” decisions in each of the three dimensions. Small decisions represent gradual advances, but none of them entails irrevocable commitments.

We adapt measured action to the ethics of care in order to discuss care robots and thereby define a responsible framework for introducing these technologies based on “caring in the in-between”. The primary argument of the ethics of care is that care is the common element that makes interdependent living conceivable, since it makes possible the relationships we establish between our lives and our environment. Thus, if we take care seriously, it represents a necessary ethical and political proposition for thinking about the technologies that surround us. This idea problematises a deontological approach according to which ethical standards can only be realised through the declaration of principles. Such principles describe moral aspirations, but do not make it possible to think critically or to develop transformative practices (Tronto and Kohlen 2018). Thus, responsible robotics, conceptualised through REN, requires institutional changes to the framework in which robotic care is organised, managed and practised. Integrating measured action and the ethics of care, we define caring in the in-between as a triadic process or relationship: (a) between the nodes, in the threads of the web, where actions are movements of the care relations and care processes in which healthcare technologies participate; (b) between the different actors in a network, where actions are an exercise of listening to the voices and concerns of all the actors involved in healthcare settings and integrating their formulations of problems and solutions into the ethical debate; and (c) between fears and hopes, in the realm of uncertainty, where actions are a tool for overcoming the polarization between the promises and the dangers of medical AI, thus offering an alternative to abysmal uncertainty.

This proposal focuses on the possibilities for mediation inscribed in the robot, as configured by the design of a specific AI device in its interaction with a network of healthcare relations. By broadening the process of responsible development of care robots and making it more complex, caring in the in-between complements other proposals that also integrate the ethics of care but focus on the design process of the artefacts, such as the Care Centered Value-Sensitive Design proposed by van Wynsberghe (2013). On these bases, caring in the in-between is a proposal for responsible robotics that implies institutional challenges, as well as new practices in healthcare systems. It is articulated around three simultaneous processes, each related to practical actions in one of the in-between dimensions considered: monitoring relations and caring processes; considering stakeholders’ opinions; and making fears and hopes commensurable. These processes can be defined as follows:

- Monitoring relations and caring processes: the creation of local public health systems to monitor the design and introduction of medical AI technologies. This implies creating follow-up and assessment procedures that also apply to pilot or experimental projects. Care is carried out by “all the hospital”, that is, by the networks of material, semiotic and social flows in which technologies participate and which occur every day among all the actors. If care relations and care practices are placed at the centre of the debate, and the whole hospital is in charge of giving care, then we need mechanisms that encompass hospitals and other healthcare settings, ensuring that the introduction of these devices responds to medical and care values and priorities and not, for example, to commercial or prestige interests. In particular, this process requires public entities to ensure that the introduction of AI and robotic systems into healthcare settings responds to collective health and well-being needs, and to guarantee that market or innovation interests in AI and robotics are not detrimental to the ethical and social criteria governing public health systems.

- Considering stakeholders’ opinions: the development of inclusion and public participation strategies to set priorities for the development of AI in health and to identify the main concerns about its introduction. To meet this need, STS and what is known as the “participatory turn” in science and technology have traditionally developed multiple strategies (hybrid forums, citizen conferences, etc.). The idea of heterogeneity is central to these proposals, which use diverse mechanisms to integrate the knowledge and expertise of multiple actors (engineers, medical practitioners, formal and informal caregivers, patients or relatives). But it is not enough to speak of heterogeneous assemblies, because in these assemblies not all the agents involved are the same, they do not participate in the same way, and uncertainties are not distributed equally. Different groups are not equal in their capacity to impose their logic, and some groups represent, more than others, the dominant economic-instrumental interpretation of care inscribed in technology (Hergesell and Maibaum 2018). The limitations of participatory processes in addressing these inequalities are well known, so complementary strategies are needed to allow for alternative forms of inclusion and representation in matters related to the introduction of AI and robotic systems into healthcare. In this sense, we argue for the need to explore systematic methods for integrating informal and spontaneous popular movements and expressions of the population’s fears and hopes (the population also being viewed as potential patients, relatives and caregivers of the health system).

- Making fears and hopes commensurable: the choice of progressive, small actions to “keep under control” the effects or consequences of a development, based on progressive feedback loops with monitoring systems and constant debate with all the stakeholders of each hospital or healthcare setting. Such feedback loops will ensure that the introduction of AI devices is in line with the care procedures of a specific hospital or healthcare setting and integrates the concerns of all the different actors involved. Undoubtedly, introducing feedback loops also implies slowing down the processes of designing and implementing AI systems in healthcare. We consider such a slowdown a necessary prerequisite for radically integrating responsiveness into the design of healthcare technologies.

From this perspective, then, the responsibility to introduce robotic and AI devices in accordance with ethical criteria and societal needs and priorities does not refer only to the technical design process of such devices, nor is it limited to establishing strong legal frameworks to regulate their use. Developing robotics and AI in consideration of ethical and social concerns requires public engagement and institutional changes to health systems on the path towards more responsible technologies. Taken together, monitoring relations and caring processes, considering the opinions of stakeholders, and making fears and hopes commensurable are a way to introduce democratic mechanisms into technological development. Indeed, it is impossible to talk about responsible AI and robotics if technology and democracy do not go together. Nor is it possible to talk about democracy in AI and robotics if care is not integrated as a central ethical and political proposition in all the processes and relations involved in the development of healthcare technologies, which is what we call “caring in the in-between”.

7 Conclusions

Just as a single technology cannot undertake all care or cure alone, studies of AI and robotics in healthcare cannot focus only on a particular device. In this paper, we develop an approach grounded in the uncertainty surrounding AI and robotics, in the heterogeneity and mediations that the robot makes possible (what we call the REN approach), and in the ethical and social debate regarding its context of use. Within this framework, we propose a method for the responsible introduction of AI and robotics based on an ethical–political proposal called “Caring in the In-Between.” This proposal for action looks uncertainty in the eye in order to collectively discuss ways to design and use technological devices in healthcare settings while respecting the values that guide public health practice and its networks of care. Drawing on the ethics of care and the notion of measured action developed within STS, we approach responsible AI and robotics by focusing on the mediations of concrete devices in their interaction with a network of healthcare relations. We articulate responsible AI and robotics around actions that occur in between fears and hopes and that revolve around three dimensions: (a) caring as monitoring the relations that emerge when AI and robotic systems are introduced into healthcare settings, through the creation of local public health systems for monitoring purposes; (b) caring as including the concerns and priorities of the collectives most distant from the creation and design of knowledge and technologies, through the organization of participatory processes and alternative forms of stakeholder representation; and (c) caring as making fears and hopes commensurable, through the choice of progressive and reversible actions.

Availability of data and material

Not applicable.

Code availability

Not applicable.

References

Anderson SL, Anderson M (2015) Towards a principle-based healthcare agent. In: van Rysewyk SP, Pontier M (eds) Machine medical ethics. Springer, Cham, pp 67–78

Bijker WE (2009) How is technology made? That is the question! Camb J Econ 34(1):63–76

Boada Pareto J, Román Maestre B, Torras Genís C (2021) The ethical issues of social assistive robotics: a critical literature review. Technol Soc 67:101726

Cabibihan JJ, Javed H, Ang M, Aljunied SM (2013) Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. Int J Soc Robot 5(4):593–618

Callon M (1998) El proceso de construcción de la sociedad. El estudio de la tecnología como herramienta para el análisis sociológico. In: Domènech M, Tirado FJ (eds) Sociología simétrica. Ensayos sobre ciencia, tecnología y sociedad. Gedisa, pp 143–170

Callon M, Latour B (1992) Don’t throw the baby out with the Bath school! A reply to Collins and Yearley. In: Pickering A (ed) Science as practice and culture. University of Chicago Press, Chicago and London, pp 343–368

Callon M, Lascoumes P, Barthe Y (2009) Acting in an uncertain world: an essay on technical democracy. MIT Press, Cambridge

Calo R (2017) Artificial intelligence policy: a primer and roadmap. Available at SSRN: https://ssrn.com/abstract=3015350 or https://doi.org/10.2139/ssrn.3015350

Clarke V, Braun V (2014) Thematic analysis. In: Michalos AC (ed) Encyclopaedia of quality of life and well-being research. Springer, Dordrecht, pp 6626–6628

Cockburn T (2005) Children and the feminist ethics of care. Childhood 12(1):71–89

Coeckelbergh M, Pop C, Simut R, Peca A, Pintea S, David D, Vanderborght B (2016) A survey of expectations about the role of robots in robot-assisted therapy for children with ASD: ethical acceptability, trust, sociability, appearance, and attachment. Sci Eng Ethics 22(1):47–65

Coninx A, Baxter P, Oleari E, Bellini S, Bierman B, Blanson Henkemans O, Belpaeme T (2016) Towards long-term social child-robot interaction: using multi-activity switching to engage young users. J Hum Robot Interact 5(1):32–67

Crossman MK, Kazdin AE, Kitt ER (2018) The influence of a socially assistive robot on mood, anxiety, and arousal in children. Prof Psychol Res Pract 49(1):48–56

de Sousa Santos B (2016) La incertidumbre: entre el miedo y la esperanza. In: América Latina: la democracia en la encrucijada. CLACSO, Buenos Aires, pp 161–169

DeCanio S (2016) Robots and humans: complements or substitutes? J Macroecon 49:280–291

Díaz-Boladeras M, Angulo C, Domènech M, Albo-Canals J, Serrallonga N, Raya C, Barco A (2016) Assessing pediatrics patients’ psychological states from biomedical signals in a cloud of social robots. In: XIV Mediterranean Conference on Medical and Biological Engineering and Computing, vol 57, pp 1179–1184

Domènech M, Tirado F (2009) El problema de la materialidad en los estudios de la ciencia y la tecnología. In: Gatti G, Martínez de Albéniz I, Tejerina B (eds) Tecnología, cultura experta e identidad en la sociedad del conocimiento. Euskal Herriko Unibertsitatea, Argitalpen Zerbitzua Servicio Editorial, pp 25–51

Epstein S (1995) The construction of lay expertise: AIDS activism and the forging of credibility in the reform of clinical trials. Sci Technol Human Values 20(4):408–437

Feenberg A (1999) Questioning technology. Routledge, London

Feenberg A (2010) Between reason and experience: essays in technology and modernity. MIT Press, Cambridge

Feil-Seifer D, Matarić MJ (2011) Ethical issues related to technology. Robot Autom Mag 18(1):24–31

Feng P, Feenberg A (2008) Thinking about design: critical theory of technology and the design process. In: Philosophy and design: from engineering to architecture. Springer, Berlin, pp 105–118

Fisher B, Tronto J (1990) Toward a feminist theory of caring. In: Abel EK, Nelson MK (eds) Circles of care: work and identity in women’s lives. SUNY Press, Albany, pp 35–62

Frith L (2012) Symbiotic empirical ethics: a practical methodology. Bioethics 26(4):198–206

Hagendorff T (2020) The ethics of AI ethics: an evaluation of guidelines. Minds Mach 30(1):99–120

Heerink M, Vanderborght B, Broekens J, Albó-Canals J (2016) New friends: social robots in therapy and education. Int J Soc Robot 8(4):443–444

Hergesell J, Maibaum A (2018) Interests and side effects in geriatric care. In: Weidner R, Karafilidis A (eds) Developing support technologies—integrating multiple perspectives to create support that people really want. VS-Verlag, Wiesbaden, pp 163–168

Howcroft D, Mitev N, Wilson M (2004) What we may learn from the social shaping of technology approach. In: Mingers J, Willcocks L (eds) Social theory and philosophy for information systems. John Wiley and Sons, West Sussex, pp 329–371

Ihde D (1999) Technology and prognostic predicaments. AI Soc 13(1–2):44–51

Iosa M, Morone G, Cherubini A, Paolucci S (2016) The three laws of neurorobotics: a review on what neurorehabilitation robots should do for patients and clinicians. J Med Biol Eng 36(1):1–11

Jenkins S, Draper H (2015) Care, monitoring, and companionship: views on care robots from older people and their carers. Int J Soc Robot 7(5):673–683

Karakayali N (2015) Two ontological orientations in sociology: building social ontologies and blurring the boundaries of the ‘social’. Sociology 49(4):732–747

Kerasidou A (2020) Artificial intelligence and the ongoing need for empathy, compassion and trust in healthcare. Bull World Health Organ 98(4):245–250

Latour B (1999) Pandora’s hope: essays on the reality of science studies. Harvard University Press, Cambridge

Law J, Mol A (1995) Notes on materiality and sociality. Sociol Rev 43(2):274–294

López Gómez D (2015) Little arrangements that matter. Rethinking autonomy-enabling innovations for later life. Technol Forecast Soc Chang 93:91–101

Lupton D (2020) A more-than-human approach to bioethics: the example of digital health. Bioethics

Maibaum A, Bischof A, Hergesell J, Lipp B (2021) A critique of robotics in health care. AI Soc

Matsuzaki H, Lindemann G (2016) The autonomy-safety-paradox of service robotics in Europe and Japan: a comparative analysis. AI Soc 31(4):501–517

Mejia C, Kajikawa Y (2017) Bibliometric analysis of social robotics research: identifying research trends and knowledgebase. Appl Sci 7(12):1316

Meyer-Heim A, van Hedel HJA (2013) Robot-assisted and computer-enhanced therapies for children with cerebral palsy: current state and clinical implementation. Semin Pediatr Neurol 20(2):139–145

Morley J et al (2020) The ethics of AI in health care: a mapping review. Soc Sci Med 260:113172

Mort M, May CR, Williams T (2003) Remote doctors and absent patients: acting at a distance in telemedicine? Sci Technol Human Values 28(2):274–295

Müller VC (2020) Ethics of artificial intelligence and robotics. Stanford Encyclopedia of Philosophy

Nordmann A, Rip A (2009) Mind the gap revisited. Nat Nanotechnol 4:273–274

Pistono F, Yampolskiy RV (2016) Unethical research: how to create a malevolent artificial intelligence

Ponterotto JG (2006) Brief note on the origins, evolution, and meaning of the qualitative research concept thick description. The Qualitative Report 11(3):538–549

Puig de la Bellacasa M (2011) Matters of care in technoscience: assembling neglected things. Soc Stud Sci 41(1):85–106

Puig de la Bellacasa M (2017) Matters of care. Speculative ethics in more than human worlds. University of Minnesota Press.

Russell SJ, Norvig P (2003) Artificial intelligence: a modern approach. Prentice Hall Series in Artificial Intelligence, New Jersey

Sabanovic S, Reeder S, Kechavarzi B (2014) Designing robots in the wild: in situ prototype evaluation for a break management robot. J Hum Robot Interact 3(1):70–88

Sampath M, Khargonekar P (2018) Socially responsible automation: a framework for shaping the future. Nat Acad Eng Bridge 48(4):45–52

Schaal S (2007) The new robotics—towards human-centered machines. HFSP Journal 1(2):115–126

Sharkey A, Sharkey N (2011) Children, the elderly, and interactive robots: anthropomorphism and deception in robot care and companionship. IEEE Robot Autom Mag 18(1):32–38

Sharkey N, Sharkey A (2012) The eldercare factory. Gerontology 58(3):282–288

Sherwin S, Stockdale K (2017) Whither bioethics now? The promise of relational theory. Int J Fem Approaches Bioeth 10(1):7–29

Shoham Y, Perrault R, Brynjolfsson E, Clark J, Manyika J, Niebles JC, Bauer Z (2018) AI Index 2018. Annual report 1–94

Sparrow R (2016) Robots in aged care: a dystopian future? Introduction. AI Soc 31(4):445–454

Sparrow R, Sparrow L (2006) In the hands of machines? The future of aged care. Minds Mach 16(2):141–161

Stahl BC, Coeckelbergh M (2016) Ethics of healthcare robotics: towards responsible research and innovation. Robot Auton Syst

Steels L, López de Mántaras R (2018) The Barcelona declaration for the proper development and usage of artificial intelligence in Europe. AI Commun 31:485–494

Stone P, Brooks R, Brynjolfsson E, Calo R, Etzioni O, Hager G, Teller A (2016) Artificial intelligence and life in 2030: one hundred year study on artificial intelligence. Stanford University, Stanford

Topol EJ (2019) High-performance medicine: the convergence of human and artificial intelligence. Nat Med 25(1):44–56

Tronto JC (1998) An ethic of care. J Am Soc Aging 22(3):15–20

Tronto J (2018) La democracia del cuidado como antídoto frente al neoliberalismo. In: Domínguez Alcón C, Kohlen H, Tronto J (eds) El futuro del cuidado. Comprensión de la ética del cuidado y práctica enfermera. Ediciones San Juan de Dios, pp 7–19

Tronto J, Kohlen H (2018) ¿Puede ser codificada la ética del cuidado? In: Domínguez Alcón C, Tronto J, Kohlen H (eds) El futuro del cuidado. Comprensión de la ética del cuidado y práctica enfermera. Ediciones San Juan de Dios, pp 20–32

Vallès-Peris N (2021) Repensar la robótica y la inteligencia artificial desde la ética de los cuidados. Teknokultura. Rev Cult Digit Mov Soc 18(2):137–146

Vallès-Peris N, Domènech M (2020) Roboticists’ imaginaries of robots for care: the radical imaginary as a tool for an ethical discussion. Eng Stud 12(3):157–176

Vallès-Peris N, Angulo C, Domènech M (2018) Children’s imaginaries of human–robot interaction in healthcare. Int J Environ Res Public Health 15(5):970–988

Vallès-Peris N, Argudo-Portal V, Domènech M (2021a) Manufacturing life, what life? Ethical debates around biobanks and social robots. NanoEthics 1–14.

Vallès-Peris N, Barat-Auleda O, Domènech M (2021b) Robots in healthcare? What patients say. Int J Environ Res Public Health 18:9933

Vallor S, Bekey GA (2017) Artificial intelligence and the ethics of self-learning robots. In: Lin P, Abney K, Jenkins R (eds) Robot ethics. Oxford University Press, Oxford, pp 338–353

van der Plas A, Smits M, Wehrmann C (2010) Beyond speculative robot ethics: a vision assessment study on the future of the robotic caretaker. Account Res 17(6):299–315

van Wynsberghe A (2013) Designing robots for care: care centered value-sensitive design. Sci Eng Ethics 19(2):407–433

van Wynsberghe A (2015) Healthcare robots. Ethics, design and implementation. Routledge, London

Verbeek P-P (2006) Materializing morality: design ethics and technological mediation. Sci Technol Human Values 31(3):361–380

Volti R (2005) Society and technological change. Macmillan

Willems D, Pols J (2010) Goodness! The empirical turn in health care ethics. Med Antropol 22(1):161–170

Funding

Open Access Funding provided by Universitat Autonoma de Barcelona. This study was supported by “la Caixa” Foundation under agreement LCF/PR/RC17/10110004.

Author information

Contributions

All authors contributed to the study conception and design. The first draft of the manuscript was written by NV-P, and MD commented on and edited previous versions. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vallès-Peris, N., Domènech, M. Caring in the in-between: a proposal to introduce responsible AI and robotics to healthcare. AI & Soc 38, 1685–1695 (2023). https://doi.org/10.1007/s00146-021-01330-w

DOI: https://doi.org/10.1007/s00146-021-01330-w